Oracle Java download from command line

Friday, February 12. 2016

As a Linux system administrator, every once in a while you need to install something that requires Java. Open-source guys tend to gravitate towards OpenJDK, the GPL-licensed version of Java. Still, Java developers tend to write a lot of crappy code requiring a specific version of the run-time engine. So, you're in desperate need of Oracle's Java.

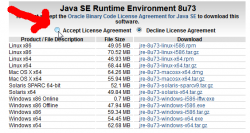

Now the Oracle people are very keen on you accepting their license before you can get your hands on their precious, leaky JRE. At the same time, all you have in front of you is a Bash prompt and you're itching to go for a:

wget http://download.oracle.com/otn-pub/java/jdk/8u74-b02/jre-8u74-linux-x64.rpm

Yes. Everybody has tried that. To no avail.

All you're going to get with that is a crappy HTML page saying that you haven't approved the license agreement and your request is unauthorized.

Darn!

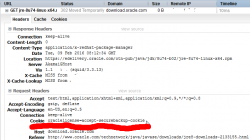

But wait! There is a solution! All Oracle is looking for is a specific cookie, oraclelicense, set to the value accept-securebackup-cookie.

So, to leech the file into your box, you can do a:

wget --header='Cookie: oraclelicense=accept-securebackup-cookie' http://download.oracle.com/otn-pub/java/jdk/8u74-b02/jre-8u74-linux-x64.rpm
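Since a refused request leaves you with an HTML page instead of the package, it can be worth checking what actually landed on disk. Here is a minimal sketch, assuming a POSIX-ish shell with wget, head and grep available. The function names looks_like_html and fetch_oracle are made up for this example, and the HTML check is a rough heuristic, not anything Oracle documents.

```shell
# Hypothetical helper: succeeds (returns 0) if the first bytes of the
# file look like an HTML page rather than a binary package.
looks_like_html() {
    head -c 512 "$1" | LC_ALL=C grep -qiE '<(!doctype|html)'
}

# Hypothetical wrapper around the wget call above: download the URL
# with the license cookie set, then refuse to call it a success if
# the result is just an HTML page.
fetch_oracle() {
    fetch_url="$1"
    fetch_out="${fetch_url##*/}"   # file name = last path component
    wget --header='Cookie: oraclelicense=accept-securebackup-cookie' \
         -O "$fetch_out" "$fetch_url" || return 1
    if looks_like_html "$fetch_out"; then
        echo "Got an HTML page instead of the package: $fetch_out" >&2
        return 1
    fi
}

# Usage:
#   fetch_oracle http://download.oracle.com/otn-pub/java/jdk/8u74-b02/jre-8u74-linux-x64.rpm
```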

Ta daa! Now you're rocking.

Apache mod_rewrite: Blocking unwanted requests

Thursday, February 11. 2016

As anybody who has ever attempted to use mod_rewrite knows, it is kinda black magic. Here are a couple of my previous stumblings with it: file rewrite and Ruby-on-rails with forced HTTPS.

The syntax in mod_rewrite is simple, there aren't too many directives to use, the options and flags make perfect sense, and even the execution order is from top to bottom every time. So what's the big problem here?

About mod_rewrite run-time behaviour ...

It all boils down to the fact that the directives are processed multiple times from top to bottom until Apache is happy with the result. The exact wording in the docs is:

If you are using RewriteRule in either .htaccess files or in <Directory> sections, it is important to have some understanding of how the rules are processed. The simplified form of this is that once the rules have been processed, the rewritten request is handed back to the URL parsing engine to do what it may with it. It is possible that as the rewritten request is handled, the .htaccess file or <Directory> section may be encountered again, and thus the ruleset may be run again from the start. Most commonly this will happen if one of the rules causes a redirect - either internal or external - causing the request process to start over.

What the docs won't mention is that even in a <Location> section, it is very easy to create a situation where your rules are re-evaluated again and again.

The setup

What I have there is a classic Plone CMS setup on an Apache & Python pair.

The <VirtualHost> section has the following:

# Zope rewrite.

RewriteEngine On

# Force www

RewriteCond %{HTTP_HOST} !^www\.thehost\.com$

RewriteRule ^(.*)$ http://www.thehost.com$1 [R=301,L]

# Plone CMS

RewriteRule ^/(.*) http://localhost:2080/VirtualHostBase/http/%{SERVER_NAME}:80/Plone/VirtualHostRoot/$1 [L,P]

Those two rules make sure that anybody accessing http://thehost.com/ will be appropriately redirected to http://www.thehost.com/. When the host is correct, any incoming request is proxied to Zope to handle.

My problem

Somebody misconfigured their botnet and I'm getting a ton of really weird POSTs. Actually, the requests aren't that weird, but the data is. A couple of hundred of them arrive every minute from various IP addresses. As none of the hard-coded requests has the mandatory www. prefix, each one results in an HTTP/301. As the user agent in the botnet really doesn't care about that, it just cuts off the connection.

It really doesn't make my server suffer, nor increase load, it just pollutes my logs. Anyway, because of the volume, I chose to block the requests.
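To get a feel for the volume, something along these lines can tally the offending requests per client address. It is only a sketch: it assumes the access log uses Apache's stock common/combined format (method in the 6th whitespace-separated field, path in the 7th, status in the 9th), and both the function name bot_posts_per_ip and the log path in the usage line are placeholders.

```shell
# Count POSTs to "/" that were answered with a 301, grouped by client IP.
# Reads access log lines on stdin and prints "count ip", busiest first.
# Assumes the common/combined log format; adjust the field numbers if
# your LogFormat differs.
bot_posts_per_ip() {
    awk '$6 == "\"POST" && $7 == "/" && $9 == 301 { n[$1]++ }
         END { for (ip in n) print n[ip], ip }' | sort -rn
}

# Usage (the log path is an assumption, adjust to your setup):
#   bot_posts_per_ip < /var/log/httpd/access_log | head
```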

The solution

I added ErrorDocument and a new rule to block a POST arriving at the root of the site without www. in the host name.

ErrorDocument 403 /error_docs/forbidden.html

# Zope rewrite.

RewriteEngine On

RewriteCond %{REQUEST_METHOD} POST

RewriteCond %{HTTP_HOST} ^thehost\.com$

RewriteRule ^/$ - [F,L]

# Static

RewriteRule ^/error_docs/(.*) - [L]

# Force www

RewriteCond %{HTTP_HOST} !^www\.thehost\.com$

RewriteCond %{REQUEST_URI} !^/error_docs/.*

RewriteRule ^(.*)$ http://www.thehost.com$1 [R=301,L]

# Plone CMS

RewriteRule ^/(.*) http://localhost:2080/VirtualHostBase/http/%{SERVER_NAME}:80/Plone/VirtualHostRoot/$1 [L,P]

My solution explained:

- Before checking for www., I check for POST and return an F (as in HTTP/403) for it.

- Returning an error triggers a 2nd (internal) request to be made to return the error page.

- The request for the error page flows through these rules again, this time as a GET request.

- Since the incoming request for the error page is (almost) indistinguishable from any other incoming request, I needed to make it somehow special.

- An HTTP/403 has its own error page at /error_docs/forbidden.html, which of course I had to create.

- When a request for /error_docs/forbidden.html is checked for a missing www., it lands at a no-op RewriteRule of ^/error_docs/(.*) and stops processing. The Force www rule will be skipped.

- Any regular request will be checked for www. and if it has it, it will be proxied to Zope.

- If the request doesn't have the www. prefix, it will get an HTTP/301. On any RFC-compliant user agent that will trigger a new incoming (external) request, resulting in all the rules being evaluated from top to bottom.

All this sounds pretty complex, but that's how it is with mod_rewrite. It is very easy to have your rules evaluated many times just to fulfill a "single" request. A single request turns into many very easily.
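The behaviour described in the list can be poked at from a shell with curl. This is a rough sketch rather than a proper test suite: the SERVER address is a placeholder for wherever Apache listens, /some/page is an arbitrary path, and the helper names http_status, expect_status and check_rules are invented for this example. The expected codes are read straight off the rules above: a POST to the root of the bare host should be refused, and everything else on the bare host should be redirected.

```shell
# Assumption: SERVER is the address of the Apache box (placeholder default).
SERVER="${SERVER:-127.0.0.1}"

# Print only the HTTP status code for: METHOD HOST PATH.
# The Host header is set by hand so the rules see the name we want.
http_status() {
    curl -s -o /dev/null -w '%{http_code}' -X "$1" -H "Host: $2" "http://$SERVER$3"
}

# Compare the actual status with the expected one: EXPECTED METHOD HOST PATH.
expect_status() {
    actual=$(http_status "$2" "$3" "$4")
    if [ "$actual" = "$1" ]; then
        echo "ok   $2 $3$4 -> $actual"
    else
        echo "FAIL $2 $3$4 -> $actual (expected $1)"
        return 1
    fi
}

# Run the checks that follow directly from the ruleset.
check_rules() {
    rc=0
    expect_status 403 POST thehost.com /          || rc=1  # blocked bot POST
    expect_status 301 GET  thehost.com /          || rc=1  # forced www
    expect_status 301 POST thehost.com /some/page || rc=1  # only "/" is blocked
    return $rc
}

# Usage:
#   SERVER=192.0.2.10 check_rules
```

Note that curl does not follow the 301 unless asked to with -L, so each check sees only the first pass through the rules.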