RAID Controller Upgrade

Saturday, January 31. 2026

It hasn't been an especially good couple of weeks. I've suffered a couple of hardware failures. One of my recent posts is about a 13-year-old SSD getting retired. There are other hardware failures waiting for a write-up.

Linux gets security updates every once in a while. I have two boxes with a bleeding/cutting edge distro. The obvious difference between the two is that "bleeding" edge is stuff so new, it doesn't let wounds heal. It literally breaks because of way too new components. "Cutting" edge is pretty new, but more tested. A concrete example of bleeding edge would be Arch Linux and of cutting edge Fedora Linux.

My box got a new kernel version and I wanted to start running it. To my surprise, booting into the new version failed. Boot was stuck. Going into recovery, I realized a physical storage device was missing. That prevented the automatic filesystem mount, which in turn prevented a successful boot. Rebooting again, this time eyeballing the console display.

Whoa!

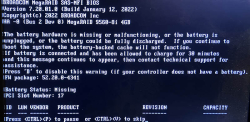

LSI 9260-4i

RAID status was missing from boot-sequence. This is what I was expecting to see, but was missing:

Hitting reset-button. Nothing.

Powering off the entire box. Oh yes! Now the PCIe-card was found, Linux booted and mounted the drive.

It was pretty obvious that any reliability the system may have had was gone. From this point on, I was in recovery mode. Any data on that mirrored pair of HDDs was on the verge of being lost. Or, to be exact: system stability was at risk, not the data. On this quality RAID-controller from 2011, data saved to a mirrored drive has no header of any kind. Unplugging a drive from the RAID-controller and plugging it into a USB3-dock makes the drive completely visible without tricks. Data not being lost at any point is a valuable thing.

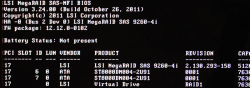

This is what an LSI 9240-4i would look like:

On Linux, the card would look like this (if UEFI finds it during boot):

kernel: scsi host6: Avago SAS based MegaRAID driver

kernel: scsi 6:2:1:0: Direct-Access LSI MR9260-4i 2.13 PQ: 0 ANSI: 5

kernel: sd 6:2:1:0: Attached scsi generic sg2 type 0

kernel: sd 6:2:1:0: [sdb] 11719933952 512-byte logical blocks: (6.00 TB/5.46 TiB)

LSI 9560-8i

Brand new, proper RAID controllers are expensive. Like 1000€ apiece. This is just a hobby, so I didn't need a supported device. My broken one was 14 years old. I could easily settle for an older model.

To eBay. Shopping for a replacement.

Surprisingly, 9240-4i was still available. I didn't want one. That model was End-of-Life'd years ago. I wanted something that might be still supported or went just out of support.

Lovely piece of hardware. Affordable even as second-hand PCIe -card.

On a Linux, the card shows as:

02:00.0 RAID bus controller: Broadcom / LSI MegaRAID 12GSAS/PCIe Secure SAS39xx

On 9560-8i Cabling

Boy, oh boy! This wasn't easy. This was nowhere near easy. Actually, this was the very difficult part, involving multiple nights spent googling and talking to AI.

The internal connector is a single SFF-8654 (SlimSAS). Additionally, the card doesn't come with any cabling when you purchase one. The 9240-4i did have a proper breakout cable for its Mini-SAS SFF-8087. On the other end, there was a SAS/S-ATA -connector. As an SFF-8654 will typically be used to connect into some sort of hot-swap bay, there are multiple cabling options.

Unfortunately for me, the SFF-spec doesn't have anything like that. But waitaminute! In the above picture, there is an SFF-8654 8i breakout cable with 8 SAS/S-ATA connectors in it. One is even connected to a HDD to demonstrate it working perfectly.

Well, this is where AliExpress steps up. Though the spec doesn't say such a thing exists, it doesn't mean that you couldn't buy such a cable with money. I went with one vendor. It seemed semi-reliable with hundreds of 5-star transactions completed. Real, certified SFF-8654 -cables are expensive. 100+€ and much more. This puppy cost me 23€. What a bargain! I was in a hurry, so I paid 50€ for the shipping. And duty and duty invoicing fees and ... ah.

Configuring a replacement RAID-array

This was the easy part of the project. Apparently LSI/Broadcom -controllers write metadata to a drive. When I plugged in all the cables and booted the computer, it found the previously configured array. RAID-configuration data IS stored on the drive somewhere, it's just not in a header of the drive. This is handy in two ways: an unplugged drive looks like a regular drive, but on an appropriate controller the configs are readable.

Obviously the data on the drive was transferred away to an external USB-drive for safe-keeping. First I waited 12 hours for the degraded RAID-array to become intact again, then moved the data back with LVM.

A copy of drive was on an external drive connected via USB3. Recovery procedure with LVM:

- Partition the new RAID1-mirror as LVM with parted

- pvcreate the new partition to make it visible to LVM as a physical volume

- vgextend the volume group residing on the external USB-drive to also include the newly created physical volume. Note: Doing this does NOT move any data.

- pvmove all data from the external drive onto the internal drive. This forces the logical volume to NOT use any extents on the external drive. The result is the data moving.

- Waiting. For 8 hours. This is a live system accessing the drive at all times.

- vgreduce the volume group to stop using the external drive.

- pvremove the external drive from LVM.

- Done!
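The sequence above can be sketched as a small command planner. This is only an illustration: the volume group name and device paths are hypothetical placeholders, the parted partitioning step is left out, and the script only prints the commands instead of running them.

```python
# Hypothetical names below (vg_data, /dev/sdb1, /dev/sdc1): adjust to your system.
def lvm_migration_plan(vg: str, new_pv: str, old_pv: str) -> list:
    """Return the LVM commands, in order, for moving a volume group
    from old_pv (external USB drive) onto new_pv (new RAID1 mirror)."""
    return [
        f"pvcreate {new_pv}",          # make the new partition an LVM physical volume
        f"vgextend {vg} {new_pv}",     # add it to the volume group; moves no data
        f"pvmove {old_pv} {new_pv}",   # migrate all extents off the old drive
        f"vgreduce {vg} {old_pv}",     # drop the now-empty old drive from the group
        f"pvremove {old_pv}",          # remove the LVM label from the old drive
    ]


if __name__ == "__main__":
    # Dry run: print the plan for review, execute nothing.
    for cmd in lvm_migration_plan("vg_data", "/dev/sdb1", "/dev/sdc1"):
        print(cmd)
```

Printing the plan first is a deliberate choice: pvmove on a live system runs for hours, so it is worth reading the device names twice before typing anything as root.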

On LSI/Broadcom Linux Software

This is what I learned:

- MegaRAID:

- Unsupported at the time of writing this blog post

- For RAID-controllers series 92xx

- My previous 9240 worked fine with MegaCli64

- StorCLI:

- Unsupported at the time of writing this blog post

- For RAID-controller series 93xx, 94xx and 95xx

- My 9560 worked fine with storcli64

- StorCLI2:

- Still supported!

- For RAID-controller series 96xx onwards

Running MegaCli64 with a 95xx-series controller installed will make the command get stuck. Like properly stuck. Stuck so well that not even kill -9 does anything.

Running StorCLI2 with 95xx-series controller installed does nothing. There is a complaint, that no supported controller was found on the system. Nothing stuck. Much less dangerous than MegaCli64.
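To avoid launching the wrong tool by accident, the mapping above can be written down as a tiny lookup. This is a sketch of my own notes only, not anything published by Broadcom; the function name is made up and the series boundaries are simply the ones listed in this post.

```python
def cli_tool_for(model: str) -> str:
    """Pick the management CLI for a controller model such as '9560-8i',
    following the series-to-tool list in this post."""
    prefix = model.strip()[:2]
    if not prefix.isdigit():
        raise ValueError(f"unrecognized controller model: {model!r}")
    series = int(prefix)              # '9560-8i' -> 95
    if series == 92:
        return "MegaCli64"
    if 93 <= series <= 95:
        return "storcli64"
    if series >= 96:
        return "StorCLI2"
    raise ValueError(f"no known CLI tool for model: {model!r}")


if __name__ == "__main__":
    for model in ("9240-4i", "9560-8i"):
        print(model, "->", cli_tool_for(model))
```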

Status

Note the complaint about battery backup:

The battery hardware is missing or malfunctioning, or the battery is unplugged, or the battery could be fully discharged. If you continue to boot the system, the battery-backed cache will not function.

If battery is connected and has been allowed to charge for 30 minutes and this message continues to appear, then contact technical support for assistance.

Battery Status: Missing

On Linux prompt by running command storcli64 /c0 /vall show:

CLI Version = 007.3007.0000.0000 May 16, 2024

Operating system = Linux 6.16.3-100.fc41.x86_64

Controller = 0

Status = Success

Description = None

Virtual Drives :

==============

-------------------------------------------------------------

DG/VD TYPE State Access Consist Cache Cac sCC Size Name

-------------------------------------------------------------

0/239 RAID1 Optl RW Yes RWTD - ON 5.457 TB

-------------------------------------------------------------

VD=Virtual Drive| DG=Drive Group|Rec=Recovery

Cac=CacheCade|OfLn=OffLine|Pdgd=Partially Degraded|Dgrd=Degraded

Optl=Optimal|dflt=Default|RO=Read Only|RW=Read Write|HD=Hidden|TRANS=TransportReady

B=Blocked|Consist=Consistent|R=Read Ahead Always|NR=No Read Ahead|WB=WriteBack

AWB=Always WriteBack|WT=WriteThrough|C=Cached IO|D=Direct IO|sCC=Scheduled

Check Consistency

Finally

Hopefully I don't need to touch these drives for a couple of years. In 2023, I upgraded the drives. Something really weird happened in January this year and I had to replace the replacement drives. As I wrote in the article, the drives were in perfect condition.

Now I know, the controller started falling apart. I simply didn't realize it at the time.

Merry Christmas and Happy New Year 2026!

Tuesday, December 23. 2025

With this DALL-E 3 created image of Santa Claus' "cloud service" I'd like to wish you all Merry Christmas!

AI image generation has taken a leap forward since my last year's card. This time even an abstract concept is understood and can be visualized in a picture I could fine-tune into my liking.

Bonus:

When thinking of a random word like "christmas", Spotify has 174188 albums and 783892 songs with that word in their title. Somebody gave their infosec personnel an early x-mas present.

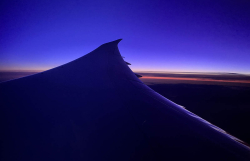

Bengaluru, India trip

Sunday, November 30. 2025

I had an opportunity to take a trip to India. My employer is expanding in Bengaluru and I went there to get a good look&feel how things are there.

From this trip, I'll share some pics:

For a Finn, there are lots of people and traffic. The constant 24/7 honking of horns in traffic is something I won't miss.

If Azure is down, your initial guess might be the electric grid next to the Microsoft office. From any western standpoint that looks shady.

An audiophile didn't expect a hi-fi -store there to carry Genelec loudspeakers.

The more artistic pic is of a Boeing 787 Dreamliner winglet over Turkish airspace on a KLM flight back home.

30 Years of Code Anniversary

Thursday, October 23. 2025

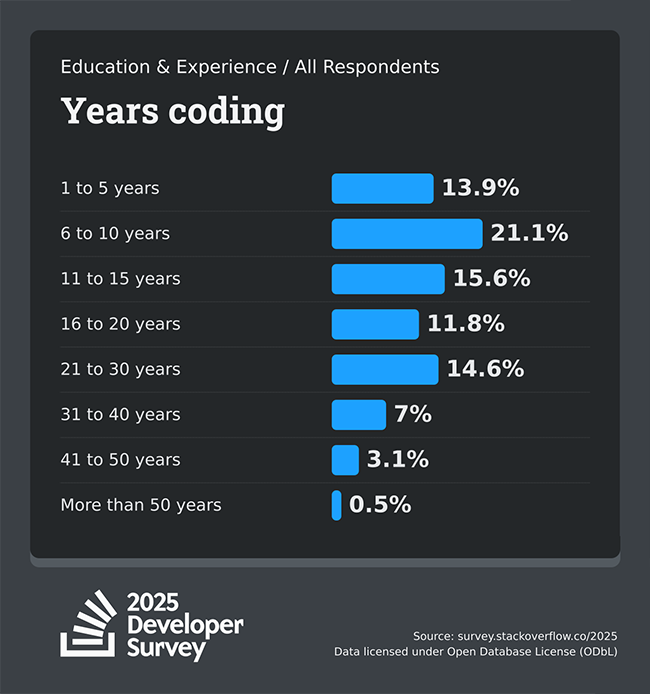

In Stack Overflow Developer Survey, 2024 there was a question (I'm paraphrasing here):

As a part of your work, how many years have you coded professionally?

(NOT including education)

Today, this date, my answer is 30!

For this achievement, I'm awarding myself an AI-generated trophy.

This is an uncommon achievement. Looking at 2025 statistics of developer coding years (including education):

The draw to stop coding is strong.

First: This is hard and difficult work. Most people on this Earth cannot do it. Even fewer can keep doing it.

Second: There are so many tasks near coding that don't actually involve writing nor reading code in product management, business analysis, design, QA, operations and support. It is so easy to sit next to a coder and tell them what to do.

Third: Getting promoted. It is very hard to resist new responsibilities with more pay without need to do much hands-on technical work. To most people it is. To me that's a no-brainer.

I keep resisting. I'm not promising another 30 years. I promise 10 good ones. 20 if I'm lucky.

PS.

Including education and hobbies before turning pro, the real number is over 40.

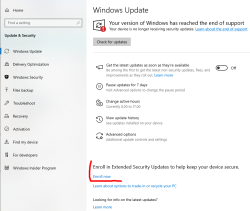

Windows 10 - EoL - Getting ESU

Saturday, October 18. 2025

Like the Dire Straits song says: "I want my ESU!" Or maybe I remember the lyrics wrong.

14th Oct came and went and I'm still not able to get my Windows 10 into the Extended Security Updates -program. What gives!?

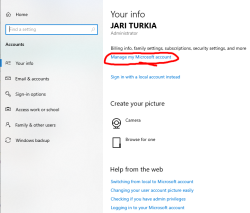

I meet all the criteria, but to no avail. Apparently they changed something in the license agreement and I haven't approved that yet. Going to my Microsoft Account:

There I'm first greeted by a hey-we-changed-something-you-need-to-approve-this-first -thingie. I just clicked approve and came back.

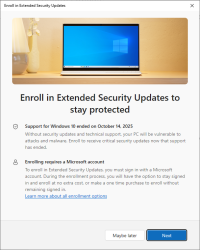

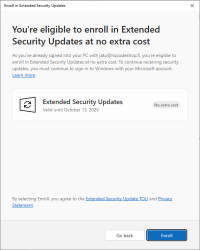

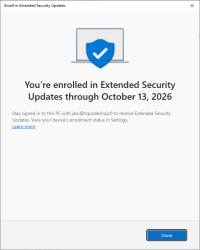

Now Windows Updates offers me an "Enroll Now" -option. Following that path:

I'm eligible, my PC is eligible, and all this at zero cost. Nice!

I so wish somebody had put any effort on this one. There are published videos and screenshots of people getting their ESU instantly. For me, nobody bothered to tell I'm missing some sort of approval.

Windows 10 - EoL, pt. 2

Wednesday, October 15. 2025

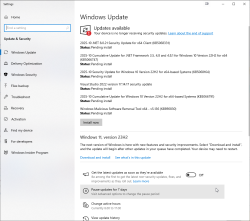

Yeah, Windows 10 is still end-of-lifed and not getting any updates.

Can you guess what happened next:

There were six new security updates. Let me repeat: after not getting any, I got 6! That's what I'd call inconsistent.

Pressure is still high. After installing those security updates and rebooting:

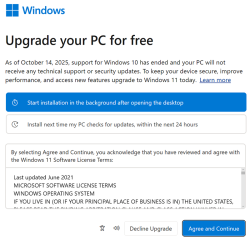

After login, I didn't get my desktop. Instead, there was a force-fed dialog on Windows 11 upgrade.

No thank you!

I love my "last Windows".

Additionally, any mention of ESU is gone from Windows Updates -dialog. Running

ClipESUConsumer.exe -evaluateEligibility

still yields ESUEligibilityResult as "coming soon".

Windows 10 - EoL

Tuesday, October 14. 2025

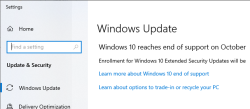

Today is 14th Oct 2025. End of support for the last version of Windows.

Good thing is, I can go for a paid support model, Extended Security Updates (ESU).

Oh wait!

The text says: "Enrollment for Windows 10 Extended Security Updates will be coming soon."

Looks like I'm not alone with this problem. "Enrolment for Windows 10 Extended Security Updates will be coming soon." still showing up not even a week before end of support - Can I fix this?

People are trying all kinds of registry tricks, in style of

reg.exe query "HKCU\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Windows\ConsumerESU"

to get sanity on this one. My thinking is ESUEligibilityResult value 0xd = ComingSoon - EEA_REGION_POLICY_ENABLED

I'd rather get clarity on this one than rely on guesswork.

Native IPv6 from Elisa FTTH

Thursday, September 25. 2025

My beloved ISP whom I love to hate has finally ... after all the two decades of waiting ... in Their infinite wisdom ... decided to grant us mere humans, humble payers of their monthly bills a native IPv6.

This is easily a day I thought would never happen. Like ever.

Eight years ago, when I was living in Stockholm, I had proper and good IPv6. There is a blog post of that with title Com Hem offering IPv6 via DHCPv6 to its customers.

By this post, I don't want to judge ongoing work nor say anything negative. This is just a heads-up. Something is about to happen. Everything isn't like it should be, but I have had patience this far. I can wait a bit longer.

Current Status

Here is a list of my observations as of 25th Sep 2025:

- There is no SLAAC yet. Delivery is via DHCPv6.

- One, as in a single, IPv6-address per DHCP-client host ID. I don't know how many addresses I would be able to extract. IPv6 has a "few" of them.

- No Prefix Delegation. 1 IPv6, no more. Not possible to run a LAN at this point.

- No default gateway is announced. (DHCPv6 itself has no option for one; normally it would arrive in a Router Advertisement.) DNS ok. Connectivity to Internet is not there yet.

- No ISP firewall. Example: outgoing SMTP to TCP/25 egresses ok.

- Incoming IPv6 ok.

I'm sure many of these things will change to better and improve even during upcoming weeks and months.

Overcoming the obstacle: Figuring out a default gateway

Oh, did I mention there is no default gateway? That's a blocker!

Good thing is that there is a gateway and it does route your IPv6-traffic as you'd expect. Getting the address is a bit tricky, as the ISP doesn't announce it. (Credit: mijutu)

Running:

tcpdump -i eth0 -vv 'udp and (port 546 or port 547)'

while flipping the interface down&up will reveal something like this:

21:40:50.541022

IP6 (class 0xc0, hlim 255, next-header UDP (17) payload length: 144)

fe80::12e8:78ff:fe23:5401.dhcpv6-server > my-precious-box.dhcpv6-client:

[udp sum ok] dhcp6 advertise

That long porridge of a line is split up for clarity. The good bit is fe80::12e8:78ff:fe23:5401.dhcpv6-server. Now I have the link-local address of the DHCPv6-server. What would be the odds, it would also route my traffic if asked nicely?

ip -6 route add default via fe80::12e8:78ff:fe23:5401 dev eth0
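Picking the address out of the capture by eye works, but it can also be scripted. Below is a minimal sketch, assuming the tcpdump text looks like the sample above and that the interface is eth0. The function names are made up for this example, and the script only prints the route command instead of running it.

```python
import ipaddress
import re
from typing import Optional

# Source address of a DHCPv6 server packet as tcpdump prints it:
#   fe80::12e8:78ff:fe23:5401.dhcpv6-server > my-precious-box.dhcpv6-client:
SERVER_SRC = re.compile(r"(fe80:[0-9a-fA-F:]+)\.dhcpv6-server\s+>")


def gateway_candidate(tcpdump_text: str) -> Optional[str]:
    """Return the link-local address of the DHCPv6 server, or None."""
    match = SERVER_SRC.search(tcpdump_text)
    if match is None:
        return None
    try:
        addr = ipaddress.IPv6Address(match.group(1))
    except ValueError:
        return None
    return str(addr) if addr.is_link_local else None


def route_command(gateway: str, dev: str = "eth0") -> str:
    """Build (not execute) the default route command used in this post."""
    return f"ip -6 route add default via {gateway} dev {dev}"


if __name__ == "__main__":
    sample = ("fe80::12e8:78ff:fe23:5401.dhcpv6-server > "
              "my-precious-box.dhcpv6-client: [udp sum ok] dhcp6 advertise")
    gw = gateway_candidate(sample)
    if gw:
        print(route_command(gw))
```

Whether the DHCPv6 server also happens to be the router is pure luck of this particular network, so treat the result as a candidate to test with ping, nothing more.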

Testing the Thing

Oh yes! My typical IPv6-test of ping -6 -c 5 ftp.funet.fi will yield:

PING ftp.funet.fi (2001:708:10:8::2) 56 data bytes

64 bytes from ipv6.ftp.funet.fi (2001:708:10:8::2): icmp_seq=1 ttl=60 time=5.42 ms

64 bytes from ipv6.ftp.funet.fi (2001:708:10:8::2): icmp_seq=2 ttl=60 time=5.34 ms

64 bytes from ipv6.ftp.funet.fi (2001:708:10:8::2): icmp_seq=3 ttl=60 time=5.29 ms

64 bytes from ipv6.ftp.funet.fi (2001:708:10:8::2): icmp_seq=4 ttl=60 time=5.31 ms

64 bytes from ipv6.ftp.funet.fi (2001:708:10:8::2): icmp_seq=5 ttl=60 time=5.39 ms

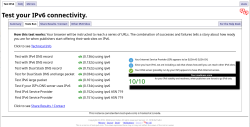

Outgoing SSH/HTTP/whatever works ok. Incoming SSH and HTTPS work ok. Everything works! Test-ipv6.com result:

Cloudflare Speedtest result:

This is very flaky. I ran it from Linux console over X11.

Also, Speedtest.net, which I'd typically run, won't support IPv6 at all.

Finally

I'm so excited! I cannot wait for Elisa's project to complete.

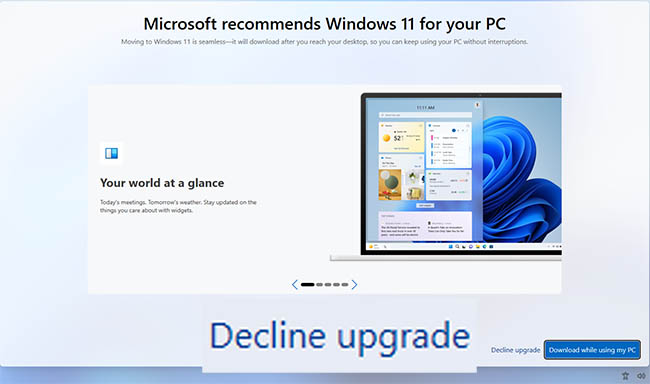

Windows 10 users getting pressure to upgrade - Part 2

Monday, September 8. 2025

The heat is on. Microsoft is already tightening thumb-screws. See my post from last month on that.

It's time to upgrade your PC before end of support

End of support for Windows 10 arrives on October 14th, 2025. This means your PC won't receive technical support or security updates after that date. Get Windows 11 to stay up to date.

Microsoft recommends Windows 11 for your PC

Moving to Windows 11 is seamless - it will download after you reach your desktop, so you can keep using your PC without interruptions.

Lemme think about this for a sec... NO!

I'll take a hard pass on that.

Microsoft 2015: ”Right now we’re releasing Windows 10, and because Windows 10 is the last version of Windows, we’re all still working on Windows 10.”

Microsoft 2025: Update now to Windows 11 - the not last Windows, but do update so that we can offer you nothing new, no improvements, but more license money from corporate version.

SSD Trouble - Replacement of a tired unit

Sunday, August 31. 2025

Trouble

Operating multiple physical computers is a chore. Things do happen, especially at times when you don't expect any trouble. On a random Saturday morning, an email sent by a system daemon during early hours would look something like this:

The following warning/error was logged by the smartd daemon:

Device: /dev/sda [SAT], FAILED SMART self-check. BACK UP DATA NOW!

Device info:

SAMSUNG MZ7PC128HAFU-000L1, S/N:S0U8NSAC900712, FW:CXM06L1Q, 128 GB

For details see host's SYSLOG.

Aow crap! I'm about to lose data unless rapid action is taken.

Details of the trouble

Details from journalctl -u smartd:

Aug 30 00:27:40 smartd[1258]: Device: /dev/sda [SAT], FAILED SMART self-check. BACK UP DATA NOW!

Aug 30 00:27:40 smartd[1258]: Sending warning via /usr/libexec/smartmontools/smartdnotify to root ...

Aug 30 00:27:40 smartd[1258]: Warning via /usr/libexec/smartmontools/smartdnotify to root: successful

Then it hit me: My M.2 SSD is a WD. What is this Samsung I'm getting alerted about? It's this one:

Oh. THAT one! It's just a 2.5" S-ATA SSD used for testing stuff. I think I have a Windows VM running on it. If you look closely, there are the words "FRU P/N" written in block letters. Also under the barcode there is "Lenovo PN" and "Lenovo C PN". Right, this unit manufactured in September 2012 was liberated from a laptop needing more capacity. Then it ran one Linux box for a while and after I upgraded that box, the drive ended up gathering dust on one of my shelves. Then I popped it back into another server and used it for testing.

It all starts coming back to me.

More details with parted /dev/sda print:

Model: ATA SAMSUNG MZ7PC128 (scsi)

Disk /dev/sda: 128GB

Sector size (logical/physical): 512B/512B

Partition Table: gpt

Disk Flags:

Number Start End Size File system Name Flags

1 1049kB 106MB 105MB fat32 EFI system partition boot, esp, no_automount

2 106MB 123MB 16.8MB Microsoft reserved partition msftres, no_automount

3 123MB 127GB 127GB ntfs Basic data partition msftdata, no_automount

4 127GB 128GB 633MB ntfs hidden, diag, no_automount

Oh yes, definitely a Windows-drive. Further troubleshooting with smartctl /dev/sda -x:

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAGS VALUE WORST THRESH FAIL RAW_VALUE

9 Power_On_Hours -O--CK 090 090 000 - 47985

12 Power_Cycle_Count -O--CK 095 095 000 - 4057

177 Wear_Leveling_Count PO--C- 017 017 017 NOW 2998

178 Used_Rsvd_Blk_Cnt_Chip PO--C- 093 093 010 - 126

179 Used_Rsvd_Blk_Cnt_Tot PO--C- 094 094 010 - 244

180 Unused_Rsvd_Blk_Cnt_Tot PO--C- 094 094 010 - 3788

190 Airflow_Temperature_Cel -O--CK 073 039 000 - 27

195 Hardware_ECC_Recovered -O-RC- 200 200 000 - 0

198 Offline_Uncorrectable ----CK 100 100 000 - 0

199 UDMA_CRC_Error_Count -OSRCK 253 253 000 - 0

233 Media_Wearout_Indicator -O-RCK 198 198 000 - 195

Just to keep this blog post brief, above is a shortened list of the good bits. Running the command spits out ~150 lines of information on the drive. Walking through what we see:

- Power on hours: ~48,000 hours is roughly 5.5 years.

- Since the unit was manufactured in Sep 2012, it has been powered on for over 40% of the time.

- Thank you for your service!

- Power cycle count: ~4000, well ... that's a few

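- Wear level: raw value ~3000, or 17 when normalized. I have no idea what the unit of the raw value would be. What the table does show is that the normalized 17 has dropped to the threshold of 17 and the FAIL column reads NOW. This is the attribute tripping the SMART self-check.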

- Reserve blocks: 126 reserve used, still 3788 unused.

- That's good. Drive's internal diagnostics has found unreliable storage and moved my precious data out of it into reserve area.

- There is still plenty of reserve remaining.

- The worrying bit is obvious: bad blocks do exist in the drive.

- ECC & CRC errors: 0. Reading and writing still works, no hiccups there.

- Media wear: 195. Again, no idea of the unit nor meaning. Maybe a downwards counter?
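The power-on arithmetic in the list above is easy to double-check. A quick sketch follows; the exact manufacturing day is not known, so 1st September 2012 is an assumption, as is using the date of the smartd alert as the end point.

```python
from datetime import date

HOURS_PER_YEAR = 24 * 365.25  # average year, leap days included


def power_on_stats(power_on_hours: int, manufactured: date, observed: date):
    """Return (years powered on, fraction of the drive's age spent powered on)."""
    years_on = power_on_hours / HOURS_PER_YEAR
    age_hours = (observed - manufactured).days * 24
    return years_on, power_on_hours / age_hours


if __name__ == "__main__":
    # 47985 is the raw Power_On_Hours value from the smartctl output above.
    years_on, duty = power_on_stats(47985, date(2012, 9, 1), date(2025, 8, 30))
    print(f"{years_on:.1f} years powered on, {duty:.0%} of the drive's lifetime")
```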

Replacement

Yeah. Let's state the obvious. Going for the cheapest available unit is perfectly ok in this scenario. The data I'm about to lose won't be the most precious one. However, every single time I lose data, that's a tiny chunk stripped directly from my soul. I don't want any of that to happen.

Data Recovery

A simple transfer with time dd if=/dev/sda of=/dev/sdd:

250069680+0 records in

250069680+0 records out

128035676160 bytes (128 GB, 119 GiB) copied, 4586.76 s, 27.9 MB/s

real 76m26.771s

user 4m30.605s

sys 14m49.729s

An hour and 16 minutes later my Windows-image was on a new drive. I/O-speed of just under 30 MB/second isn't much. With M.2 I'm used to whole different readings. Do note, the replacement drive has twice the capacity. As it stands, 120 GB is plenty for the use-case.
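For the curious, the figures dd reports can be reproduced from its own record count. A small sketch, assuming dd's default block size of 512 bytes (no bs= was given on the command line):

```python
def dd_throughput(records: int, block_size: int, seconds: float):
    """Return (total bytes copied, throughput in MB/s with MB = 10**6 bytes)."""
    total_bytes = records * block_size
    return total_bytes, total_bytes / seconds / 1e6


if __name__ == "__main__":
    # Record count and elapsed time are taken from the dd output above.
    total, mb_per_s = dd_throughput(250_069_680, 512, 4586.76)
    print(f"{total} bytes in {4586.76 / 60:.0f} minutes, {mb_per_s:.1f} MB/s")
```

With a block size that small, dd itself adds overhead. A larger bs= value might have helped, but on a dying drive I wasn't in the mood for experiments.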

Going Mechanical

Some assembly with Fractal case:

Four Phillips screws to the bottom of the drive. Plugging cables back. That's a solid 10 minute job. Closing the side cover of the case and booting the server to validate everything is still working as expected.

New SMART

Doing a 2nd round of smartctl /dev/sda -x on the new drive:

Vendor Specific SMART Attributes with Thresholds:

ID# ATTRIBUTE_NAME FLAGS VALUE WORST THRESH FAIL RAW_VALUE

1 Raw_Read_Error_Rate -O--CK 100 100 000 - 0

9 Power_On_Hours -O--CK 100 100 000 - 1

12 Power_Cycle_Count -O--CK 100 100 000 - 4

148 Unknown_Attribute ------ 100 100 000 - 0

149 Unknown_Attribute ------ 100 100 000 - 0

167 Write_Protect_Mode ------ 100 100 000 - 0

168 SATA_Phy_Error_Count -O--C- 100 100 000 - 0

169 Bad_Block_Rate ------ 100 100 000 - 54

170 Bad_Blk_Ct_Lat/Erl ------ 100 100 010 - 0/47

172 Erase_Fail_Count -O--CK 100 100 000 - 0

173 MaxAvgErase_Ct ------ 100 100 000 - 2 (Average 1)

181 Program_Fail_Count -O--CK 100 100 000 - 0

182 Erase_Fail_Count ------ 100 100 000 - 0

187 Reported_Uncorrect -O--CK 100 100 000 - 0

192 Unsafe_Shutdown_Count -O--C- 100 100 000 - 3

194 Temperature_Celsius -O---K 026 035 000 - 26 (Min/Max 23/35)

196 Reallocated_Event_Count -O--CK 100 100 000 - 0

199 SATA_CRC_Error_Count -O--CK 100 100 000 - 131093

218 CRC_Error_Count -O--CK 100 100 000 - 0

231 SSD_Life_Left ------ 099 099 000 - 99

233 Flash_Writes_GiB -O--CK 100 100 000 - 173

241 Lifetime_Writes_GiB -O--CK 100 100 000 - 119

242 Lifetime_Reads_GiB -O--CK 100 100 000 - 1

244 Average_Erase_Count ------ 100 100 000 - 1

245 Max_Erase_Count ------ 100 100 000 - 2

246 Total_Erase_Count ------ 100 100 000 - 10512

||||||_ K auto-keep

|||||__ C event count

||||___ R error rate

|||____ S speed/performance

||_____ O updated online

|______ P prefailure warning

Whoa! That's the fourth power on for a drive unboxed from retail packaging. Three of them had to be in the manufacturing plant. Power on hours reads 1, that's not much. SSD life left 99 (I'm guessing %).

Finally

All's well. No data lost. Just my stress level jumping up.

My thinking is: If that new drive survives next 3 years running a Windows on top of a Linux, then it has served its purpose.

On Profitability of Solar Panel Installation

Sunday, August 17. 2025

Solar panel, photovoltaic system, solar generator. These babies have many names.

There are three 505-watt panels on my roof. Besides the ones in the picture, I do have more panels. This is just my 1500W on the sunrise side. Having micro-inverters works well for east-west -installations.

Conversation around solar panels is constantly bubbling. Lots of discussion, not so many facts. Plenty of opinions back and forth. Topics being reviewed include:

- "Installation is expensive. Is this profitable?"

- "Installation is expensive. What's the breakeven in years?"

- "Sun doesn't shine in Finland! Will this make any sense?"

All of those are valid questions. As I was tempted to find out, I went and ordered an installation last year. In this blog post I summarize my experience with solar power since last summer.

Summary

Here's the thing briefly with spoilers:

- No, the thing isn't profitable. It doesn't make any sense as breakeven in cost savings to cover the installation fees is many many many years.

- When it comes to electricity, I wanted some security of supply by having those panels on my roof. That went completely sideways! Those things won't do anything, unless there is a functioning electricity network.

- This is because inverter needs a place to feed excess electricity into.

- If no such sink exists, inverter chooses to go silent.

- This behavior can be altered by going for a more expensive offgrid installation. I do not have such thing.

- Sun does shine in Finland. Savings in electricity is real and tangible.

Measuring stuff

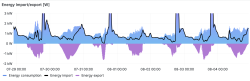

Last summer I wrote a piece about HAN/P1 -port. This is the basis. It is imperative to get exact readings on electricity consumption and readings on exported excess energy. This is available at electricity meter. Second thing to measure is the solar production, for this I have a TCP-based M-bus solution from solar panel controller hub.

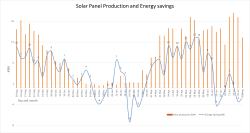

I'm skipping a ton of details, this is what a Grafana-visualization would look like:

Key:

- Blue area: total electricity consumption of my house

- Black line: amount of imported electricity

- Purple area: amount of exported electricity

Findings:

- When there is solar production indicated by purple excess export, black line for imported energy drops nicely below total consumption.

- Panels do reduce my electricity bill by providing some of my consumption from own production.

- There are cases where import actually reaches zero. Momentarily, I'm not paying anything for my electricity. Nice!

- There are many cases where black line for import isn't at zero while there is plenty of export.

- This is the design flaw with solar panel installation.

- The only real way of keeping all the solar harvest is to have a battery where export would go to at all times.

- Also, this is how electricity works. Panel must have a destination where harvested energy goes into each millisecond. If there isn't one, it goes to export.

Results of Measurement

The 10-month period from 3rd August 2023 to 29th May 2024 is the reference: no own electricity production. From 29th May 2024 to 3rd August 2025 is the "new normal", panel-assisted consumption. As a weekly average, a year with and without solar panels looks like this:

Key:

- Orange bars indicate weekly average of produced energy.

- Blue line indicates difference between year without panels and year with panels. There are occasions where saving exceeds produced amount.

- Years are not comparable. Weather tends to do whatever it likes.

Findings:

- It would have been really nice to have "clean" reference data for an entire 12 month period. Unfortunately, this wasn't possible. Still, 10 months of a year is good reference material to measure improvement. The improvement is there.

- There is a minuscule amount of solar production in November, December and January.

- Indeed. Sun does shine occasionally during northern hemisphere dark months.

- Energy savings are real

- On the right hand side of the graph, months June and July indicate no energy saving as both years have solar panels. This is the flaw in reference consumption data.

The Important Stuff

Lots of graphs and details. What's the key takeaway here? Can we summarize all this somehow?

What Others Say on Profitability

There is a Master's Thesis from Feb 2024: Techno-economic analysis on optimizing the value of photovoltaic electricity in a high-latitude location.

The gist of the thesis is twofold: First, to maximize the profitability, consume your own production. Second, (this is self-evident) installation direction is a factor. In plain words, eat your own dog food and, in the northern hemisphere, install your panels facing a southerly direction. The design of a PV system installation must be to capture as much sun as possible and consume your own production as much as possible.

Measuring Self-consumption

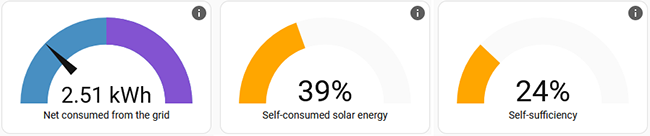

My Home Assistant setup comes with Energy-dashboard as default:

It has a reading for "self-consumed solar energy". Exactly what I should be monitoring!

As this is important, there is a discussion on calculation "Computation of self-sufficiency/autarkie and self-usage of PV". From the discussion thread following useful math can be found:

energy_used = energy_imported + energy_production - energy_exported

net_returned_to_grid = energy_exported - energy_imported

self_sufficiency_perc = (energy_used - energy_imported) / energy_used * 100.0

self_consumed_solar_energy_perc = (energy_production - energy_exported) / energy_production * 100.0

Doing the same with Home Assistant is rather simple. Btw. I'm using VictoriaMetrics add-on. Doing something like this in MetricsQL shows following data for the past year:

delta(sensor.active_energy_import[1y]) = 9000 kWh

delta(sensor.active_energy_export[1y]) = 1700 kWh

delta(sensor.total_production[1y]) = 3400 kWh

This is exactly what I'll need to get: self_consumed_solar_energy_perc = 49%

I can self-consume roughly half of my production and rest is exported as excess.
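The formulas from the discussion thread translate directly into a few lines of Python. This is a sketch using the rounded yearly figures quoted above; the function name is my own. With these rounded inputs the self-consumption lands at 50%, so the 49% presumably comes from the unrounded sensor readings.

```python
def energy_metrics(imported_kwh: float, exported_kwh: float, production_kwh: float) -> dict:
    """Compute the self-sufficiency and self-consumption figures."""
    used = imported_kwh + production_kwh - exported_kwh
    return {
        "energy_used": used,
        "net_returned_to_grid": exported_kwh - imported_kwh,
        # Share of total consumption covered by own production.
        "self_sufficiency_perc": (used - imported_kwh) / used * 100.0,
        # Share of own production that was consumed instead of exported.
        "self_consumed_solar_energy_perc":
            (production_kwh - exported_kwh) / production_kwh * 100.0,
    }


if __name__ == "__main__":
    # Rounded one-year deltas: import 9000, export 1700, production 3400 kWh.
    for name, value in energy_metrics(9000, 1700, 3400).items():
        print(f"{name}: {value:.1f}")
```

Note how different the two percentages are: half of the production is self-consumed, but that covers only a sixth or so of the total consumption of the house.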

This is a vital metric, as indicated by the thesis on profitability. To increase this percentage, I'd need to store the production in a battery. This battery might be in a car. A little bit of Home Assistant -tinkering and I'd be able to charge the car on excess export energy. The alternative is to double my investment and go for an (expensive) solar battery storage. Of those two, I might choose the EV.

Measuring Profitability

All the relevant numbers are there, let's convert all this into time and money. More math:

energy_saved = energy_production - energy_exported

energy_saved = 3400 - 1700 = 1700 kWh

That is the amount I used of my own production, but didn't have to pay for import. My local network provider takes 4 cents / kWh for transfer. Assuming my energy costs 7 cents / kWh for the entire period, the total is 11 cents / kWh and 1700 kWh would have cost me roughly 190€.

Let's assume I'd have a good year and save 200€ on my electricity bill. Further, let's assume my PV system cost after tax deductions would be 5000€. A simple 5000/200 division gives the breakeven. That's 25 years! No way is this profitable or sensible. Well, at least I have security of supply, when there is an outage .... oh, wait! As mentioned earlier, the panels turn off when there is no electricity. A complete bust!
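The same math as a sketch, for anyone wanting to plug in their own numbers. The prices and the system cost are the assumed round figures from the text, not measured values. This is a simple payback calculation that ignores interest, price changes and panel degradation.

```python
def yearly_saving_eur(saved_kwh: float, transfer_eur_per_kwh: float,
                      energy_eur_per_kwh: float) -> float:
    """Money not spent on imported electricity thanks to self-consumption."""
    return saved_kwh * (transfer_eur_per_kwh + energy_eur_per_kwh)


def breakeven_years(system_cost_eur: float, saving_per_year_eur: float) -> float:
    """Simple payback time: cost divided by a constant yearly saving."""
    return system_cost_eur / saving_per_year_eur


if __name__ == "__main__":
    saved_kwh = 3400 - 1700                      # production minus export
    saving = yearly_saving_eur(saved_kwh, 0.04, 0.07)
    print(f"saving: {saving:.0f} EUR/year")
    # Rounded up to a "good year" of 200 EUR, as in the text.
    print(f"breakeven: {breakeven_years(5000, 200):.0f} years")
```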

Finally

From a financial perspective, this is not sane. Without batteries, I simply cannot reach the self-consumption numbers needed.

Tinkering with these is a fun hobby, but that's as far as it goes.

Windows 10 users getting pressure to upgrade

Saturday, August 16. 2025

This is what's happening in my system tray:

Blue is an optional update indicator. Yellow or orange indicate security patches.

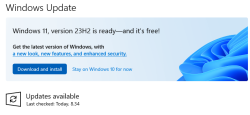

This is what happens when I click the update icon:

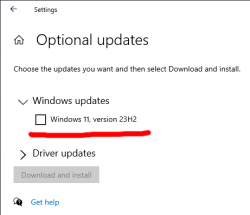

Pretty good advertisement space! Also, additional updates include:

I have zero intention of upgrading. If Windows 11 had anything better or something I'd ever need, I'd already be running the darned thing. As it's just Windows 10 with a pretty bad user interface, I'll stick with my 10 for the time being.

Thanks, Microsoft!

Arch Linux 6.15.5 upgrade fail

Thursday, July 31. 2025

On my Arch, I was doing the basic update with pacman -Syu. There was an announcement on linux-6.15.5.arch1-1. Nice!

Aaaaaand it failed.

(75/75) checking for file conflicts [####################] 100%

error: failed to commit transaction (conflicting files)

linux-firmware-nvidia: /usr/lib/firmware/nvidia/ad103 exists in filesystem

linux-firmware-nvidia: /usr/lib/firmware/nvidia/ad104 exists in filesystem

linux-firmware-nvidia: /usr/lib/firmware/nvidia/ad106 exists in filesystem

linux-firmware-nvidia: /usr/lib/firmware/nvidia/ad107 exists in filesystem

Errors occurred, no packages were upgraded.

Oh. How unfortunate, that. How to get past that obstacle? I tried all kinds of pacman -S --overwrite "*" linux-firmware-nvidia and such, but it kept failing. I was just getting error messages spat at me. That's a weird package, as it contains a number of subpackages, which in reality don't exist at all. Confusing!

The winning sequence was to first let the thing go with pacman --remove linux-firmware and follow that with a fresh install using pacman -S linux-firmware.

Before: Linux version 6.14.4-arch1-2

A reboot later: Linux version 6.15.5-arch1-1

Maybe that's why people think Arch isn't for regular users. It's only for nerds.

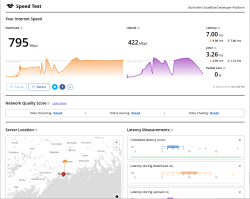

Upgraded Internet connection - Symmetric Fiber to the Home

Sunday, June 29. 2025

Long time no post. I've been busy doing tons of stuff at work, at home and elsewhere, and had very little time to post. Now that summer vacation is here, I finally have time to do some posting.

Four years ago I got a really fast connection. The reason why I moved ages ago was to get a fiber Internet connection (also there was the obvious need for more space).

Now that my beloved ISP has more on offer in this region too, I chose to go for a speedup. There is an article in Finnish about symmetric speeds: Kuituliittymien lähetysnopeudet nousee!

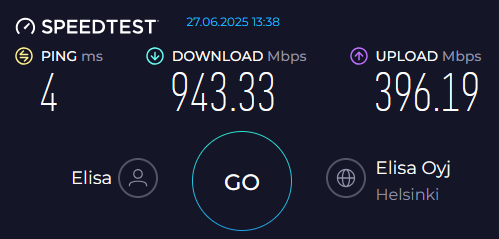

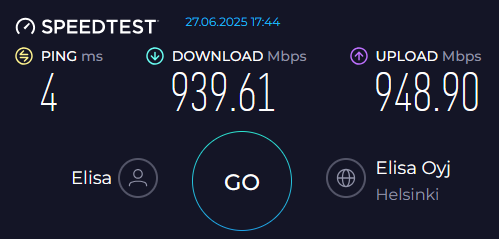

Before:

After:

Observations:

- Download speed measured in 2021 was 878 Mbit/s, now 940 and something. Nice improvement there.

- Then the obvious. 2021 upload was limited to 400 Mbit/s, measuring 393. The speedup is significant as we're nearing 950. Very nice!

Next:

Going above 1 Gbit/s. I have no idea how long that will take. Years? A decade? 10 Gbit/s connections are available in Finland for 100€ / month. I'd love to have one, but unfortunately, given geography and ISPs' turf wars, such a thing isn't an option for me.

Also: Back in the day I got lots of comments saying something like "Who needs 1 Gbit/s connection!" Note how that wasn't a question. As the Elisa article says, a lot of the ISP's customers got their symmetric gigabit before me, so it is fair to say such speeds are commonly seen. No more luddites commenting on speeds.

Signal - Linked Devices

Monday, March 17. 2025

For messaging, there are plenty of choices. I must admit, my thinking is similar to that of criminals: the less any government knows about me and my messaging, the better. Today, full anonymity is gone. Really, really bad actors were staying below law enforcement's radar, and as a result the really good options for anonymous messaging are now gone.

So, I'm doing what Mr. Snowden does and am using Signal daily.

So that governments can keep track of me, Signal works via phone number. My issue with using a phone number as an identifying factor is that, in any country, there are "like five" different phone numbers (in reality, an area code has roughly 10 million different numbers).

As you must feel confused, let me clarify. The reason I say "5" is that when the numbering scheme was designed, 10 million amounted roughly to infinity. Today, anybody can dial 1000 numbers per second and exhaust the entire number space in less than three hours. Obviously, there are multiple area codes and prefixes, so we have multiple sets of 10 million numbers. So, it would take a day to dial all possible numbers. With a single computer. What if somebody could obtain two computers? Or three?
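The back-of-the-envelope math is easy to check. Below is a small Python sketch; the function name `hours_to_exhaust` is invented for illustration, and the 1000 dials per second rate is the same assumption as in the text above, not a measured figure.

```python
NUMBERS_PER_AREA_CODE = 10_000_000  # roughly 10 million numbers per area code
DIALS_PER_SECOND = 1000             # assumed rate for a single computer


def hours_to_exhaust(numbers, dials_per_second, machines=1):
    """Hours needed to try every number once, splitting the work evenly across machines."""
    seconds = numbers / (dials_per_second * machines)
    return seconds / 3600


one_machine = hours_to_exhaust(NUMBERS_PER_AREA_CODE, DIALS_PER_SECOND)
three_machines = hours_to_exhaust(NUMBERS_PER_AREA_CODE, DIALS_PER_SECOND, machines=3)

print(f"One computer: {one_machine:.2f} hours per area code")
print(f"Three computers: {three_machines:.2f} hours per area code")
```

At that rate a single machine gets through about eight or nine such area codes in a day, which is in line with the "a day" estimate above.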

Let's face it: the phone number as a technical invention has been obsolete for years. It should NOT be used to identify me in any messaging app. It's convenient to do so, though. Governments have been tracking phones for many decades, and they can demand that messaging protocol operators enforce phone identification. Still, by any measure Signal is the safest option.

Moving on. Phone numbers: bad. Signal: good.

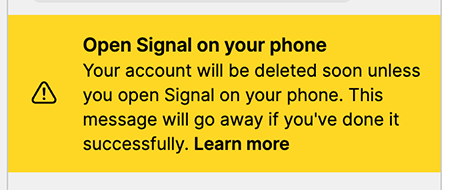

This is what happened the other day:

The text said:

Open Signal on your phone

Your account will be deleted soon unless you open Signal on your phone.

This message will go away if you've done it successfully.

For the past two years, I've never used Signal on a mobile device. To me typing messages with a non-keyboard is madness! So, I'm just using messaging from my computer(s).

It seems there is a limit to it.

The government wants to track you, so you must verify the existence of your phone number every 2 years. Fair.