Wi-Fi 6 USB on Linux: Working!

Sunday, January 26. 2025

Last summer I wrote about an attempt to get 802.11ax / Wi-Fi 6 working on Linux. Spoiler: it didn't.

A week ago, Nick Morrow, the author of many Realtek drivers, contacted me about a new driver version for the RTL8832BU and RTL8852BU chipsets.

Running ./install-driver.sh installs the kernel module 8852bu. dmesg will still report the product as an "802.11ac WLAN Adapter", but that information is incorrect. After a couple of retries, I managed to get WPA3 authentication working.
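
Since the dmesg product string can't be trusted, it may be worth checking which kernel driver actually got bound to the stick. A minimal sketch, assuming the usual sysfs layout where wireless interfaces have a "wireless" subdirectory and a "device/driver" symlink under /sys/class/net; the function name wifi_drivers is made up for illustration, and the printed driver name need not be spelled exactly like the module name.

```shell
#!/usr/bin/env bash
# Sketch: list wireless interfaces and the kernel driver bound to each.
# Assumes the standard sysfs layout under /sys/class/net.
wifi_drivers() {
    local iface drv
    for iface in /sys/class/net/*; do
        # Only wireless interfaces have a "wireless" subdirectory.
        [ -d "$iface/wireless" ] || continue
        # device/driver is a symlink into /sys/bus/.../drivers/<name>.
        drv=$(readlink -f "$iface/device/driver")
        printf '%s: %s\n' "${iface##*/}" "${drv##*/}"
    done
}

wifi_drivers
```

On a machine with both the built-in adapter and the USB stick, this should print two lines, one per interface.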

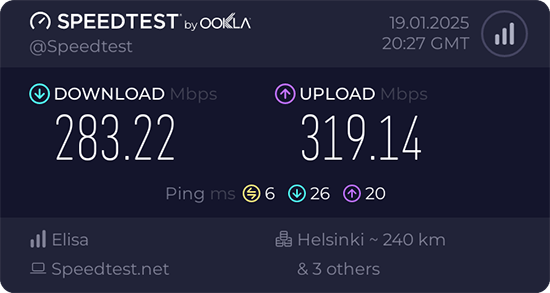

Ah, joy. The USB stick works! It's quite fast, too.

The driver is still very quirky. I can't get the thing working on every plug-in; I need to try multiple times. The typical failure is a "No secrets were provided" error with "state change: need-auth -> failed (reason 'no-secrets', managed-type: 'full')" in the message log. I have absolutely no idea why this happens; the built-in Realtek works every time.
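
Until the driver behaves, the retry dance can at least be automated. This is a workaround sketch, not a fix, assuming the stick is managed by NetworkManager and the Wi-Fi profile is brought up with nmcli. The function name, the profile name MyWifi in the example, and the retry and delay defaults are all hypothetical.

```shell
#!/usr/bin/env bash
# Workaround sketch (not a fix): retry activating a NetworkManager
# connection, since early attempts may fail with "no-secrets".
# Usage: connect_with_retry PROFILE [MAX_TRIES] [DELAY_SECONDS]
connect_with_retry() {
    local profile="$1" max="${2:-5}" delay="${3:-5}" try=1
    while [ "$try" -le "$max" ]; do
        if nmcli connection up id "$profile"; then
            echo "connected on attempt $try"
            return 0
        fi
        echo "attempt $try/$max failed" >&2
        # Wait before the next attempt, but not after the last one.
        [ "$try" -lt "$max" ] && sleep "$delay"
        try=$((try + 1))
    done
    return 1
}
```

Example: connect_with_retry MyWifi 5 10. It only papers over the symptom; the underlying no-secrets failure remains unexplained.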

Confessions of a Server Hugger - Fixing a RAID Array

Sunday, January 12. 2025

I have to confess: I'm a server hugger. Everything is in the cloud or going there. My work is 100% in the cloud; my home pretty much is not.

There are drawbacks.

5:58 am, fast asleep, a faint beeping wakes you. It's relentless and won't go away. Not loud enough to signal a fire, but not quiet enough to let you go back to sleep. Yup. The RAID controller.

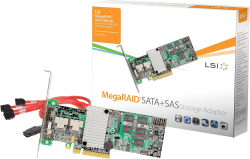

What I have is an LSI MegaRAID SAS 9260-4i. The controller is from 2013. A year later, LSI ceased to exist through acquisition, and its products ended up with Broadcom. The product is rather extinct too, and Broadcom isn't known for its end-user support. As there is still a proper Linux driver and tooling after 11 years, I'm still running the thing.

A trivial MegaCli64 -AdpSetProp -AlarmSilence -aALL silences the annoying beep. Next, checking the status of the volume with MegaCli64 -LDInfo -Lall -aALL reveals the source of the alarm:

    Adapter 0 -- Virtual Drive Information:
    Virtual Drive: 0 (Target Id: 0)
    Name :
    RAID Level : Primary-1, Secondary-0, RAID Level Qualifier-0
    Size : 7.276 TB
    Sector Size : 512
    Mirror Data : 7.276 TB
    State : Degraded
    Strip Size : 64 KB
    Number Of Drives : 2

Darn! Degraded. Uh-oh. One of the two drives in a RAID-1 mirror is gone.
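
Spotting a degraded volume doesn't have to wait for a beep at 5:58 am. As a minimal sketch, LDInfo output like the above can be filtered for any virtual drive whose State is not Optimal, for example from a cron job. It assumes the output keeps the "Virtual Drive: N" and "State : X" line formats seen here; the function name is made up.

```shell
#!/usr/bin/env bash
# Sketch: report MegaRAID virtual drives whose State is not "Optimal".
# Reads "MegaCli64 -LDInfo -Lall -aALL" output on stdin.
# Exits 1 if at least one unhealthy virtual drive was found.
megaraid_unhealthy_vds() {
    awk '
        /^Virtual Drive:/ { vd = $3 }          # "Virtual Drive: 0 (Target Id: 0)"
        /^State *:/ {                          # "State : Degraded"
            st = $0
            sub(/^State *: */, "", st)         # keep only the value
            sub(/[ \t\r]*$/, "", st)           # drop trailing whitespace
            if (st != "Optimal") { print "VD " vd ": " st; bad = 1 }
        }
        END { exit bad }
    '
}
```

Example: MegaCli64 -LDInfo -Lall -aALL | megaraid_unhealthy_vds || echo "RAID needs attention". With the output above, it would print "VD 0: Degraded" and exit 1.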

For details, list the physical drives with MegaCli64 -PDList -a0 (for clarity, I'm omitting a LOT of output here):

    Adapter #0

    Enclosure Device ID: 252
    Slot Number: 0
    Drive's position: DiskGroup: 0, Span: 0, Arm: 1
    Device Id: 7
    PD Type: SATA
    Raw Size: 7.277 TB [0x3a3812ab0 Sectors]
    Firmware state: Online, Spun Up
    Connected Port Number: 1(path0)
    Inquiry Data: ZR14F8DXST8000DM004-2U9188 0001
    Port status: Active
    Port's Linkspeed: 6.0Gb/s
    Drive has flagged a S.M.A.R.T alert : No

    Enclosure Device ID: 252
    Slot Number: 1
    Drive's position: DiskGroup: 0, Span: 0, Arm: 0
    Device Id: 6
    PD Type: SATA
    Raw Size: 7.277 TB [0x3a3812ab0 Sectors]
    Firmware state: Failed
    Connected Port Number: 0(path0)
    Inquiry Data: ZR14F8PSST8000DM004-2U9188 0001
    Port's Linkspeed: 6.0Gb/s
    Drive has flagged a S.M.A.R.T alert : No

Of slots 0-3, the drive on cable #1 (slot 1, which the controller reports as port 0) is offline. I've never understood why port numbering differs from slot numbering. When doing the physical installation, it's easy to verify that cables match slot numbers, not port numbers.
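
Because port and slot numbers don't line up, a small filter that puts them side by side may help when matching drives to cables. A minimal sketch, assuming the -PDList field names shown above; the function name is hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: one line per physical drive with slot, port and firmware state.
# Reads "MegaCli64 -PDList -aN" output on stdin.
megaraid_pd_summary() {
    awk '
        function emit() {
            if (slot != "") printf "slot %s, port %s: %s\n", slot, port, state
            slot = port = state = ""
        }
        /^Enclosure Device ID:/   { emit() }      # a new drive record starts
        /^Slot Number:/           { slot = $3 }
        /^Connected Port Number:/ { port = $4; sub(/\(.*/, "", port) }   # "1(path0)" -> "1"
        /^Firmware state:/        { state = $0; sub(/^Firmware state: */, "", state) }
        END                       { emit() }
    '
}
```

Example: MegaCli64 -PDList -a0 | megaraid_pd_summary. For the output above, this should give "slot 0, port 1: Online, Spun Up" and "slot 1, port 0: Failed".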

From this point on, everything was clear: I needed to replace the 8 TB Seagate BarraCudas with a fresh pair of drives. Time was of the essence, and 6 TB WD Reds were instantly available.

The new Reds went into their allotted trays. The BarraCudas were on my floor, hanging from their cables.

Btw, for those interested, the case is a Fractal Define R6. Rack servers are NOISY, and I really cannot have them inside the house.

Creating a new array: MegaCli64 -CfgLdAdd -r1 [252:2,252:3] WT RA Direct NoCachedBadBBU -a0. Verifying the result with MegaCli64 -LDInfo -L1 -a0:

    Virtual Drive: 1 (Target Id: 1)
    Name :
    RAID Level : Primary-1, Secondary-0, RAID Level Qualifier-0
    Size : 5.457 TB
    Sector Size : 512
    Mirror Data : 5.457 TB
    State : Optimal
    Strip Size : 64 KB
    Number Of Drives : 2
    Span Depth : 1
    Default Cache Policy: WriteThrough, ReadAhead, Direct, No Write Cache if Bad BBU
    Current Cache Policy: WriteThrough, ReadAhead, Direct, No Write Cache if Bad BBU
    Default Access Policy: Read/Write
    Current Access Policy: Read/Write
    Disk Cache Policy : Disk's Default
    Encryption Type : None
    Is VD Cached: No
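
For reference, here is the create command from above wrapped in a small function with its options commented. The option descriptions are my reading of MegaCli's cache-policy flags, and the function name, its arguments and the DRY_RUN switch (which only prints the command) are illustrative additions. Note the quotes around the drive list: unquoted, [252:2,252:3] is a shell glob pattern and could be expanded against file names in the current directory.

```shell
#!/usr/bin/env bash
# Sketch: create a RAID-1 virtual drive from two slots in one enclosure.
# Usage: megaraid_create_raid1 ENCLOSURE SLOT_A SLOT_B ADAPTER
# Set DRY_RUN=1 to print the command instead of running it.
megaraid_create_raid1() {
    local encl="$1" a="$2" b="$3" adapter="$4"
    # -r1             RAID level 1 (mirror)
    # [E:S,E:S]       drives as enclosure:slot pairs (quoted, [ ] is a shell glob)
    # WT              write-through caching
    # RA              read-ahead
    # Direct          direct I/O, reads are not buffered in controller cache
    # NoCachedBadBBU  no write cache if the battery backup unit is bad
    ${DRY_RUN:+echo} MegaCli64 -CfgLdAdd -r1 "[$encl:$a,$encl:$b]" \
        WT RA Direct NoCachedBadBBU "-a$adapter"
}
```

Example: DRY_RUN=1 megaraid_create_raid1 252 2 3 0 prints the exact command used in this post without touching the controller.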

To my surprise, the RAID volume also hot-plugged into Linux! ls -l /dev/sdd gave a happy:

    brw-rw----. 1 root disk 8, 48 Jan 5 09:32 /dev/sdd

Hot-plug was also visible in dmesg:

    kernel: scsi 6:2:1:0: Direct-Access LSI MR9260-4i 2.13 PQ: 0 ANSI: 5
    kernel: sd 6:2:1:0: [sdd] 11719933952 512-byte logical blocks: (6.00 TB/5.46 TiB)
    kernel: sd 6:2:1:0: Attached scsi generic sg4 type 0
    kernel: sd 6:2:1:0: [sdd] Write Protect is off
    kernel: sd 6:2:1:0: [sdd] Write cache: disabled, read cache: enabled, supports DPO and FUA
    kernel: sd 6:2:1:0: [sdd] Attached SCSI disk

Next up: onboarding the new capacity while moving the data off the old array. With Linux's Logical Volume Manager (LVM), this is surprisingly easy. Solaris/BSD people are screaming "It's sooooo much easier with ZFS!", and they would be right; its capabilities are second to none. However, what I have is Linux, Fedora Linux to be exact, so LVM it is.

Creating an LVM partition with parted /dev/sdd:

    GNU Parted 3.6
    Using /dev/sdd
    Welcome to GNU Parted! Type 'help' to view a list of commands.
    (parted) mktable gpt
    (parted) mkpart LVM 0% 100%
    (parted) set 1 lvm on
    (parted) p
    Model: LSI MR9260-4i (scsi)
    Disk /dev/sdd: 6001GB
    Sector size (logical/physical): 512B/512B
    Partition Table: gpt
    Disk Flags:

    Number  Start   End     Size    File system  Name  Flags
     1      1049kB  6001GB  6001GB               LVM   lvm

    (parted) q
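
The same interactive session can be done in one go with parted's script mode (-s). A minimal sketch; it is destructive to the target disk, and the function name and the DRY_RUN switch (print instead of run) are illustrative additions.

```shell
#!/usr/bin/env bash
# Sketch: GPT label plus one full-disk partition named "LVM" with the lvm flag,
# i.e. the interactive parted session above, non-interactively.
# DESTRUCTIVE: rewrites the partition table of the disk given as $1.
# Set DRY_RUN=1 to print the command instead of running it.
lvm_partition_disk() {
    local disk="$1"
    ${DRY_RUN:+echo} parted -s "$disk" mktable gpt mkpart LVM 0% 100% set 1 lvm on
}
```

Example: lvm_partition_disk /dev/sdd. Double-check the device name first; there is no confirmation prompt in script mode.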

Next, tell LVM about the new physical volume: pvcreate /dev/sdd1

    Physical volume "/dev/sdd1" successfully created.
    Not creating system devices file due to existing VGs.

Extend the LVM volume group to the new physical volume: vgextend My_Precious_vg0 /dev/sdd1

Finally, tell LVM to move all data off the degraded RAID mirror. As the VG now has two PVs, this effectively copies all the data from the old one to the new one. On the fly. With no downtime. The system keeps running the whole time. The command is: pvmove /dev/sdb1 /dev/sdd1

Such a move isn't fast. Measured with time, the wall-clock time for the command was 360 minutes. That's 6 hours! Doing more math: lvs -o +seg_pe_ranges,vg_extent_size shows the extent size to be 32 MiB, and on the PV, 108480 extents were allocated. That's 3471360 MiB (about 3.3 TiB) in total. Over a 6-hour transfer, that's 161 MiB/s on average. To put that value into real-world perspective, my NVMe SSD benchmarks 5X faster on writes. To repeat the good side: my system was running the whole time, and services stayed online without noticeable performance issues.
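
The back-of-the-envelope math above, as a tiny helper. The inputs are the figures from this post; on a live system, the allocated extent count and extent size should be available from something like pvs -o +pv_pe_alloc_count,vg_extent_size (check pvs -o help for the field names on your LVM version). The function name is made up.

```shell
#!/usr/bin/env bash
# Sketch: average pvmove throughput = allocated extents x extent size / wall-clock time.
# Usage: pvmove_throughput EXTENTS EXTENT_SIZE_MIB MINUTES
pvmove_throughput() {
    awk -v ext="$1" -v size="$2" -v min="$3" 'BEGIN {
        mib = ext * size                      # total data moved, in MiB
        printf "%d MiB moved, %.0f MiB/s\n", mib, mib / (min * 60)
    }'
}

pvmove_throughput 108480 32 360   # prints: 3471360 MiB moved, 161 MiB/s
```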

Before tearing down the hardware, the final LVM step is to remove the broken array from the VG with vgreduce My_Precious_vg0 /dev/sdb1, followed by pvremove /dev/sdb1.
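
Put together, the LVM side of the migration is five commands. A minimal sketch as one function; the function name and the DRY_RUN switch (print instead of run) are illustrative, and the device and VG names in the example are the ones from this post. Each step runs only if the previous one succeeded, so a failed pvmove won't be followed by vgreduce.

```shell
#!/usr/bin/env bash
# Sketch: move all extents from OLD_PV to NEW_PV inside VG, then drop OLD_PV.
# Usage: migrate_pv OLD_PV NEW_PV VG
# Set DRY_RUN=1 to print the commands instead of running them.
migrate_pv() {
    local old="$1" new="$2" vg="$3"
    ${DRY_RUN:+echo} pvcreate "$new" &&          # label the new partition as a PV
    ${DRY_RUN:+echo} vgextend "$vg" "$new" &&    # add it to the volume group
    ${DRY_RUN:+echo} pvmove "$old" "$new" &&     # copy all extents while the system stays online
    ${DRY_RUN:+echo} vgreduce "$vg" "$old" &&    # remove the now-empty old PV from the VG
    ${DRY_RUN:+echo} pvremove "$old"             # wipe the LVM label from the old PV
}
```

Example: migrate_pv /dev/sdb1 /dev/sdd1 My_Precious_vg0. Run it inside tmux or screen; the pvmove step alone took 6 hours here.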

With LVM in The Desired State®, the final command was to remove the degraded volume from the LSI controller: MegaCli64 -CfgLdDel -L0 -a0

To conclude this entire shit-show, it was time to shut down the system, remove the BarraCudas and put the case back together. After booting the system, the annoying beep was gone.

With the dust settled, it was time to take a quick looksie at the old drives. Popping the BarraCudas into a USB 3.0 SATA bridge revealed both drives to be functional. The drives weren't that old, with 2+ years of 24/7 runtime on them. To this day, I don't know exactly what happened. I only know the LSI stopped loving one of the drives for good.

Stiga.com hack

Saturday, January 11. 2025

In this blog, I've established two facts: I own domains, and I run my own mail server. Put those two together and I have mailboxes that completely ignore the left side of user@domain in an email address. This lets me use a unique email address for each and every purpose. When I start getting spam via some poor bastard, I can easily identify the source and attribute blame. This happens surprisingly often.
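
Once every service gets its own address, attribution is mechanical. A minimal sketch, assuming the leaked address appears in the To: header of the spam and that the local part names the service; the function name and the example.org address are made up for illustration.

```shell
#!/usr/bin/env bash
# Sketch: print the local part (left of "@") of the first address in the
# To: header of a message read from stdin. With a catch-all domain and one
# address per service, that local part names the service that leaked it.
recipient_local_part() {
    awk '
        /^$/ { exit }                                  # headers end at the first blank line
        tolower($1) == "to:" {
            if (match($0, /[A-Za-z0-9._%+-]+@/)) {
                print substr($0, RSTART, RLENGTH - 1)  # strip the trailing "@"
                exit
            }
        }
    '
}
```

Example: for a message with "To: Someone <stiga.com@example.org>", this prints stiga.com.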

Last autumn, such an incident happened. As the "poor bastard" in question was Stiga.com, I'm publishing the details here. With small organizations, I'm willing to give them the benefit of the doubt, as most don't have much skill or resources in information security. For anybody with €450 million in annual sales, don't expect me to hold back.

Timeline

September 2024

Spam:

Reply-To: info@cuscmm.com

From: Mossack Fonseca <d33858864@gmail.com>

Date: Mon, 23 Sep 2024 15:02:47 +0100

I hope this email finds you well. On behalf of Jeff Bezos, the CEO of Amazon, I am writing to inform you that you have been randomly selected to receive a donation from his fortune of $194.6 billion usd. Yes, you read that right!

The spam arrived twice, a couple of days apart. Please note: Google/Gmail has nothing to do with this. They're simply the transport medium.

As it was easy to attribute the fraud to Stiga, I immediately sent them feedback, demanding my right under Regulation (EU) 2016/679, aka GDPR, to know what was leaked.

October 2024

Sign of life. Stiga is alive!

You can imagine the drill: a "No, this wasn't us." and "No such thing can happen to us."-style email exchange. Once I laid out all the facts from the date I created my Stiga.com account, with every single detail on the timeline, the tone started to shift to "Please elaborate." and "Can you send us all the details, please?", which from my point of view was nice.

Obviously I assisted them with all the information I had.

November 2024

Boom! Announcement:

Notification pursuant to Article 34 of Regulation (EU) 2016/679

What has happened:

On 24.9.2024, STIGA's ICT team detected a security breach affecting our systems.

Specifically, the login details of one of our supplier were used inappropriately.

As a result, some of your personal information was temporarily disseminated without authorization.

Notice how the ICT team "detected" this incident a day after I received the first spam.

Finally

I haven't received any further spam on that address. It seems the fallout wasn't especially big.

Meanwhile in Finland ...

In late 2024, a similar incident occurred. Article: Cyber attack hits Valio, putting data of 5,000 at risk.

Pretty much the same story: a vendor got hit. My guess: a specific person at the vendor was working remotely from home, and their credentials to the customer system got leaked. Those stolen creds were then used to extract a dump of GDPR-protected personnel data.

Prediction

This seems to be a thing nowadays. Corpos are getting better and better at protecting their own data. However, the external parties they hire to maintain systems aren't.

Feel free to call me wrong on that.

Update: January 2025

I'm getting spam on my Stiga.com address again. What has leaked once cannot be un-leaked.

Old Computers and Hardware @ Museum of Technology, Finland

Friday, January 10. 2025

For a couple of months, there is an exhibition about "operators and automated data processing designers" at the Museum of Technology.

I visited the exhibit, as tons of old hardware had been shipped in from the Computer Museum of Finland in Jyväskylä. As there were so many interesting pieces of hardware, I'm presenting a few pictures here. In any typical blog post, I'd blanket my text with links. Here, I'm omitting them on purpose. "Do your own research," as conspiracy lunatics say!

Funet Cisco AGS+

Back in the day, in 1988, when you visited ftp.funet.fi, your traffic went through this exact router. The AGS line was Cisco Systems' first ever router.

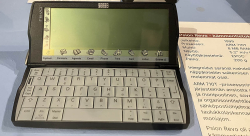

Psion Revo

I owned one. It was a magnificent piece of pocket-size computing power! On the minus side, any kind of data transfer required plugging the thing into a PC. This minor drawback didn't slow me down. Neither did the black-and-white screen.

Later, EPOC became Symbian OS, and Nokia eventually bought Symbian.

Nokia Communicator 9210

While this wasn't Nokia's first Communicator, it was the best one. Proper screen, good keyboard, Symbian OS 6.0. Ah.

Back in the day, I was poached by a company to write Symbian C++ code for this device. Fun times!

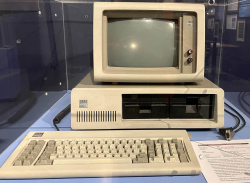

IBM PC

As in: the first one. Ever! From 1981.

Everybody on this globe owes a lot to this invention. If IBM had kept the system closed, there would have been no ecosystem for hardware manufacturers or software crafters. That ecosystem made all the following rounds of evolution possible, landing us where we are today.

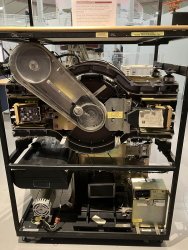

Ancient: IBM 3380 HDD

Moving on to antiques. Introduced in 1980, this refrigerator-sized thing was one of IBM's early gigabyte-class HDDs. Capacity was 2.5 gigabytes. In that era, personal computer RAM was measured in kilobytes, and floppy disks barely reached a megabyte.

Ancient: PDP-11

This DEC thing predates me. Those machines from the 70s were so rare, I'm sure not many ever reached the shores of Finland. The screen size is something from the 2020s. However, the display is 1 m x 1 m x 1 m and must weigh a ton!

Ancient: DEC VT102 Terminal for the PDP-11

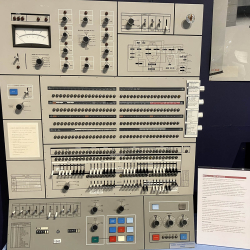

When you open a "terminal" in the OS of your choice, it's a software version of that. Funny thing is, VT102 is still a common terminal type to emulate.

Ancient: IBM System/360 Control Panel

Your Windows 7 had a Control Panel. This is the same thing, but for IBM S/360. That's how you'd manage your computer's settings back in the 60s.