Schneier's Six Lessons/Truisms on Internet Security

Monday, November 11. 2019

Mr. Bruce Schneier is one of the influencers I tend to follow in The Net. He is a sought-after keynote speaker and gives talks on a regular basis. More on what he does is on his site at https://www.schneier.com/.

Quite a few of his keynotes, speeches and presentations are made publicly available, and I regularly follow up on those. For the past couple of years he has given a keynote with roughly the same content. Here is what he wants you, an average Joe Internet user, to know, in the form of lessons. To draw this with a crayon: the lessons are from Bruce Schneier, the comments are mine.

These are things that are true and are likely to stay true for a while. They are not going to change anytime soon.

Truism 1: Most software is poorly written and insecure

The market doesn't want to pay for secure software.

Good / fast / cheap - pick any two! The market has generally picked fast & cheap over good.

Poor software is full of bugs and some bugs are vulnerabilities. So, all software contains vulnerabilities. You know this is true because your computers are updated monthly with security patches.

Whoa! I'm a software engineer and I don't write my software poorly and it's not insecure. Or ... that's what I claim. On second thought, that's how I want my world to be, but is it? I don't know!

Truism 2: Internet was never designed with security in mind

That might seem odd to say in 2019. But back in the 1970s, 80s and 90s it was conventional wisdom.

Two basic reasons: 1) Back then the Internet wasn't being used for anything important, 2) There were organizational constraints on who could access the Internet. For those two reasons the designers consciously made decisions not to add security into the fabric of the Internet.

We're still living with the effects of that decision, whether it's the domain name system (comment: DNS), the Internet routing system (comment: BGP), the security of Internet packets (comment: IP), or the security of email (comment: SMTP) and email addresses.

This truism I know from early days in The Net. We used completely insecure protocols like Telnet or FTP. Those were later replaced by SSH and HTTPS, but still the fabric of Internet is insecure. It's the applications taking care of the security. This is by design and cannot be changed.

Obviously there are known attempts to retrofit fixes in. DNS has an extension called DNSSEC, IP has an extension suite called IPsec, and mail transfer has a secure variant called SMTPS. Not a single one of those is fully in use. All of them are accepted, even widely used, but not every single user has them in their daily lives. We still have to support both the insecure and the secure Net.

Truism 3: You cannot constrain the functionality of a computer

A computer is a general-purpose device. This is the difference between computers and the rest of the world.

No matter how hard you try, a (landline) telephone cannot be anything other than a telephone. (A mobile phone) is, of course, a computer that makes phone calls. It can do anything.

This has ramifications for us. The designers cannot anticipate every use condition, every function. It also means these devices can be upgraded with new functionality.

Malware is a functional upgrade. It's one you didn't want, it's one that you didn't ask for, it's one that you don't need, one given to you by somebody else.

Extensibility. That's a blessing and a curse at the same time. As an example, owners of Tesla electric cars get firmware upgrades like everything else in this world. Sometimes the car learns new tricks and things it can do, but it can also be crippled with malware like any other computer. Again, there are obvious attempts to control, to some degree, what a computer can do at the operating system level. However, that layer is too far from the actual hardware. The hardware can still run malware, regardless of what security measures are taken at the higher levels of a computer.

Truism 4: Complexity is the worst enemy of security

The Internet is the most complex machine mankind has ever built, by a lot.

The defender occupies the position of the interior, defending a system. And the more complex the system is, the more potential attack points there are. The attacker has to find one way in; the defender has to defend all of them.

We're designing systems that are getting more complex faster than our ability to secure them.

Security is getting better, but complexity is growing faster, and we're losing ground even as we try to keep up.

Attack is easier than defense across the board. Security testing is very hard.

As a software engineer designing complex systems, I know that to be true. A classic programmer joke is "this cannot affect that!", but due to some miraculous chain, never designed by anyone, everything seems to depend on everything else. Dependencies and requirements in a modern distributed system are so complex that not even the best humans can ever fully comprehend them. We tend to keep some sort of 80:20 rule in place: it's enough to understand 80% of the system, as it would take too much time to learn the rest. So, we don't understand our systems and we don't understand the security of our systems. Security is done by plugging all the holes we know of and hoping nothing happens. Unfortunately.
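The "one way in versus all of them" asymmetry above can be put into rough numbers. This is only a back-of-the-envelope sketch: the function name, the 99% figure and the assumption that attack points fail independently of each other are mine, not Schneier's.

```python
def chance_all_hold(per_point_success: float, attack_points: int) -> float:
    """Probability that every one of `attack_points` defenses holds.

    Assumes (unrealistically) that each point holds independently
    with the same probability `per_point_success`.
    """
    if not 0.0 <= per_point_success <= 1.0:
        raise ValueError("per_point_success must be between 0 and 1")
    if attack_points < 0:
        raise ValueError("attack_points must not be negative")
    return per_point_success ** attack_points


# Hypothetical figure: each attack point is defended successfully 99% of the time.
for points in (1, 10, 100, 1000):
    print(f"{points:>5} attack points: {chance_all_hold(0.99, points):.3f}")
```

Even with a defender that gets every single point right 99% of the time, the chance of holding everything drops quickly as the number of attack points grows.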

Truism 5: There are new vulnerabilities in the interconnections

Vulnerabilities in one thing affect the other things.

2016: The Mirai botnet. Vulnerabilities in DVRs and CCTV cameras allowed hackers to build a DDoS system that was aimed at a domain name service and dropped about 20 to 30% of popular websites.

2013: Target Corporation, a US retailer, was attacked by someone who stole the credentials of their HVAC contractor.

2017: A casino in Las Vegas was hacked through their Internet-connected fish tank.

Vulnerability in Pretty Good Privacy (PGP): It was not actually a vulnerability in PGP, it was a vulnerability that arose because of the way PGP handled encryption and the way modern email programs handled embedded HTML. Those two together allowed an attacker to craft an email message to modify the existing email message that he couldn't read, in such a way that when it was delivered to the recipient (the victim in this case), a copy of the plaintext could be sent to the attacker's web server.

Such a thing happens quite often. Something is broken and it's nobody's fault. No single party can be pinpointed as responsible or guilty, but the fact remains: stuff gets broken because an interconnection exists. Getting the broken stuff fixed is hard or impossible.

Truism 6: Attacks always get better

They always get easier. They always get faster. They always get more powerful.

What counts as a secure password is constantly changing, simply because password crackers keep getting faster.

Attackers get smarter and adapt. When you have to engineer against a tornado, you don't have to worry about tornadoes adapting to whatever defensive measures you put in place. Tornadoes don't get smarter. But attackers against ATMs, cars and everything else do.

Expertise flows downhill. Today's top-secret NSA programs become tomorrow's PhD theses and the next day's hacker tools.

Agreed. This phenomenon can be easily observed by everybody. Encryption always gets more bits just because today's cell phone is capable of cracking last decade's passwords.
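To make the moving target concrete, here is a small arithmetic sketch of how long an exhaustive search over a password space takes at different guessing speeds. The guessing rates and the 8-character, 62-symbol password are made-up illustration values, not measurements of any real cracker.

```python
def seconds_to_exhaust(alphabet_size: int, length: int, guesses_per_second: float) -> float:
    """Worst-case time to try every password of the given length."""
    keyspace = alphabet_size ** length
    return keyspace / guesses_per_second


SECONDS_PER_DAY = 86_400

# Hypothetical guessing rates, chosen only to show the trend.
rates = (("slow cracker", 1e6), ("faster cracker", 1e9), ("much faster cracker", 1e12))

# 8 characters drawn from a-z, A-Z and 0-9 (62 symbols).
for label, rate in rates:
    days = seconds_to_exhaust(62, 8, rate) / SECONDS_PER_DAY
    print(f"{label:>20}: {days:,.2f} days")
```

The password stays the same, only the attacker's hardware changes, and the worst-case search time shrinks by the same factor the cracker speeds up.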

If you want to see The Man himself talking, go see Keynote by Mr. Bruce Schneier - CyCon 2018 or IBM Nordic Security Summit - Bruce Schneier | 2018, Stockholm. Good stuff there!

Deprecated SHA-1 hashing in TLS

Sunday, November 3. 2019

This blog post is about the TLS protocol. It is a constantly evolving beast. Mastering TLS is absolutely necessary, as it is one of the most common and widely used components keeping our information secure in The Net.

December 2018: A lot of obsolete TLS stuff deprecated

Nearly a year ago, Google, as the developer of the Chrome browser, deprecated SHA-1 hashes in TLS. In Chrome version 72 (December 2018) they actually did deprecate TLS 1.0 and TLS 1.1 among other things. See the 72 release notes for all the details.

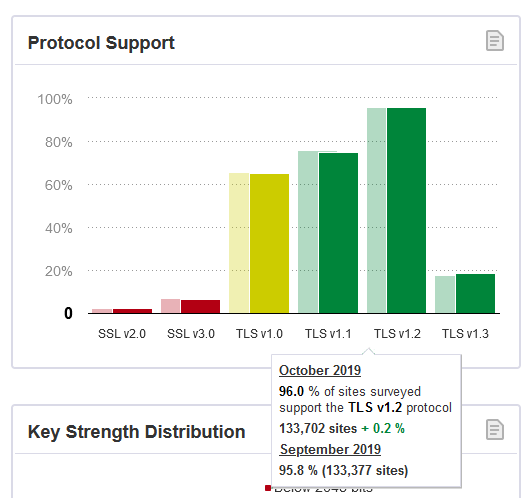

Making TLS 1.2 mandatory actually isn't too bad. Given the Qualys SSL Labs SSL Pulse stats:

96.0% of the servers tested (n=130,000) in the SSL Labs tester support TLS 1.2. So IMHO, with reasonable confidence we're at the point where the remaining 4% can be ignored.
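For your own client code, refusing the deprecated protocol versions can look roughly like this. It is a minimal sketch using Python's standard `ssl` module (Python 3.7 or newer assumed); the function name is my own, and it says nothing about how Chrome implements the same policy.

```python
import ssl


def make_modern_client_context() -> ssl.SSLContext:
    """Client-side TLS context that refuses anything older than TLS 1.2."""
    context = ssl.create_default_context()
    # TLS 1.0 and TLS 1.1 handshakes will fail with this context.
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    return context


context = make_modern_client_context()
print(context.minimum_version.name)
```

Any socket wrapped with this context fails the handshake against a server that only speaks TLS 1.0 or 1.1, which is a cheap way to find out whether you depend on one of the 4%.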

Transition

The change was properly announced early enough and a lot of servers did support TLS 1.2 at the time of this transition, so the actual deprecation went well. Not many people complained in The Net. Funnily enough, people (like me) make noise when things don't go their way and (un)fortunately in The Net that noise gets carried far. The amount of noise typically doesn't correlate with anything, but the phrase "where there is smoke, there's fire" seems to be true. In this instance, deprecating old TLS versions and SHA-1 hashing made no smoke.

Aftermath in Chrome

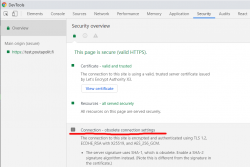

Now that we have been in a post-SHA-1 world for a while, funny stuff starts happening. On rare occasions, this happens in Chrome:

In Chrome developer tools, the Security tab has a complaint: Connection - obsolete connection settings. The details state: The server signature uses SHA-1, which is obsolete. (Note this is different from the signature in the certificate.)

Seeing that warning is uncommon, but others besides me have bumped into it. An example: How to change signature algorithm to SHA-2 on IIS/Plesk

I think I'm well educated in the details of TLS, but this one baffled me and lots of people. Totally!

What's not a "server signature" hash in TLS?

I did reach out to a number of people regarding this problem. 100% of them suggested checking my TLS cipher settings. In TLS, a cipher suite describes the algorithms and hashes used for encryption/decryption. The encrypted blocks are authenticated with a hash function.

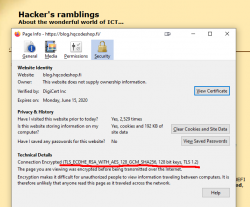

Here is an example of a cipher suite used in loading a web page:

That cipher suite is code 0xC02F in the TLS protocol (aka ECDHE-RSA-AES128-GCM-SHA256). Lists of all available cipher suites can be found, for example, at https://testssl.sh/openssl-iana.mapping.html.
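The same code-to-name mapping can be queried from the OpenSSL library your Python is linked against. This is a minimal sketch with the standard `ssl` module; the function name is mine, and the result depends on which suites your local OpenSSL build enables, so it may well return `None`.

```python
import ssl
from typing import Optional


def openssl_name_for_code(code: int) -> Optional[str]:
    """OpenSSL name for a two-byte TLS cipher suite code, or None if not enabled locally."""
    context = ssl.create_default_context()
    for suite in context.get_ciphers():
        # OpenSSL reports a 32-bit id; the low 16 bits are the code seen on the wire.
        if suite["id"] & 0xFFFF == code:
            return suite["name"]
    return None


print(openssl_name_for_code(0xC02F))
```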

But as I already said, the obsoletion error is not about SHA-1 hash in a cipher suite. There exists a number of cipher suites with SHA-1 hashes in them, but I wasn't using one.
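Ruling out SHA-1 in the cipher suites is easy to do from the names alone. The sketch below leans on the OpenSSL naming convention, where suites using SHA-1 end in plain `-SHA` while the newer ones end in `-SHA256` or `-SHA384`. The helper name and the sample list are my own illustration.

```python
def uses_sha1(openssl_suite_name: str) -> bool:
    """True if an OpenSSL-style cipher suite name ends in plain SHA, meaning SHA-1."""
    return openssl_suite_name.upper().endswith("-SHA")


# A hand-picked sample of OpenSSL-style names, not a complete list.
suites = [
    "ECDHE-RSA-AES128-GCM-SHA256",
    "ECDHE-RSA-AES128-SHA",
    "AES256-SHA",
    "ECDHE-RSA-AES256-GCM-SHA384",
]

sha1_suites = [name for name in suites if uses_sha1(name)]
print(sha1_suites)  # ['ECDHE-RSA-AES128-SHA', 'AES256-SHA']
```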

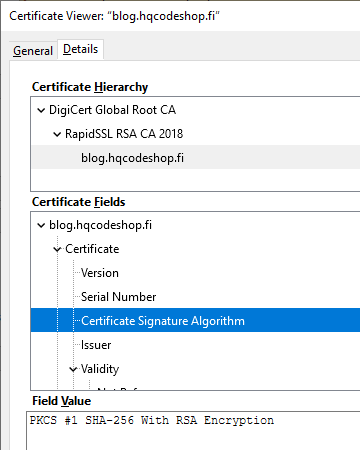

Second clue is from the warning text. It gives you a hint: Note this is different from the signature in the certificate ... This error wasn't about X.509 certificate hashing either. A certificate signature looks like this:

That is an example of the X.509 certificate used in this blog. It has a SHA-256 signature in it. Again: That is a certificate signature, not the server signature the complaint is about. SHA-1 hashes in certificates were already obsoleted in 2016; the SHA-1 obsoletion of 2018 is about the rest of TLS.
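If you want to double-check the certificate side without a full X.509 parser, a crude trick is to look for the signature algorithm's object identifier in the raw DER bytes. This is a rough sketch only: the function names are mine, the table covers just four common algorithms, and a byte scan like this is not a substitute for proper ASN.1 parsing.

```python
import ssl
from typing import Optional

# DER-encoded object identifiers of a few common certificate signature algorithms.
SIGNATURE_OIDS = {
    bytes.fromhex("06092a864886f70d010105"): "sha1WithRSAEncryption",
    bytes.fromhex("06092a864886f70d01010b"): "sha256WithRSAEncryption",
    bytes.fromhex("06072a8648ce3d0401"): "ecdsa-with-SHA1",
    bytes.fromhex("06082a8648ce3d040302"): "ecdsa-with-SHA256",
}


def guess_certificate_signature(der: bytes) -> Optional[str]:
    """Name of the first known signature algorithm OID found in a DER certificate."""
    hits = [(der.find(oid), name) for oid, name in SIGNATURE_OIDS.items() if oid in der]
    return min(hits)[1] if hits else None


def fetch_certificate_der(host: str, port: int = 443) -> bytes:
    """Download a server certificate and convert it from PEM to DER (needs network)."""
    pem = ssl.get_server_certificate((host, port))
    return ssl.PEM_cert_to_DER_cert(pem)
```

Calling `guess_certificate_signature(fetch_certificate_der("your.server.example"))` with a host of your choosing should print the algorithm name, or `None` if the certificate uses something outside the small table.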

What is a "server signature" hash in TLS?

As I'm not alone with this, somebody else has asked this question on Stack Exchange: What's the signature structure in TLS server key exchange message? That's the power of Stack Exchange in action: some super-smart person has written a proper answer to the question. From the answer I learned that apparently TLS 1.0 and TLS 1.1 used MD5 and/or SHA-1 signatures. The algorithms were hard-coded in the protocol. In TLS 1.2 the great minds doing the protocol spec decided to soft-code the chosen server signature algorithm, allowing secure future options to be added. Note: Later in 2019, IETF announced Deprecating MD5 and SHA-1 signature hashes in TLS 1.2, leaving the good ones still valid.

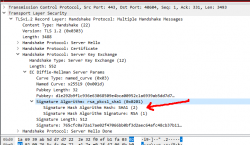

On the wire, a TLS server signature looks like this. Note how SHA-1 is used, which triggers the warning in Chrome:

During the TLS connection handshake, the Server Key Exchange block specifies the signature algorithm used. That particular server I used loved signing with SHA-1, making Chrome complain about the chosen (obsoleted) hash algorithm. Hard work finally paid off: I managed to isolate the problem.
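In TLS 1.2 that signature algorithm travels as two bytes, a hash identifier followed by a signature identifier, as defined in RFC 5246. Decoding the pair from a packet capture can be sketched like this. The function names are mine, and the tables cover only the TLS 1.2 values from RFC 5246, so anything newer shows up as unknown.

```python
from typing import Tuple

# HashAlgorithm and SignatureAlgorithm values from RFC 5246, section 7.4.1.4.1.
HASH_ALGORITHMS = {0: "none", 1: "md5", 2: "sha1", 3: "sha224",
                   4: "sha256", 5: "sha384", 6: "sha512"}
SIGNATURE_ALGORITHMS = {0: "anonymous", 1: "rsa", 2: "dsa", 3: "ecdsa"}
OBSOLETE_HASHES = {"md5", "sha1"}


def decode_signature_algorithm(pair: bytes) -> Tuple[str, str]:
    """Decode the two-byte (hash, signature) pair of a TLS 1.2 Server Key Exchange."""
    if len(pair) != 2:
        raise ValueError("expected exactly two bytes")
    hash_name = HASH_ALGORITHMS.get(pair[0], f"unknown({pair[0]})")
    signature_name = SIGNATURE_ALGORITHMS.get(pair[1], f"unknown({pair[1]})")
    return hash_name, signature_name


def is_obsolete(pair: bytes) -> bool:
    """True if the server signed with a hash that browsers now complain about."""
    return decode_signature_algorithm(pair)[0] in OBSOLETE_HASHES


print(decode_signature_algorithm(b"\x02\x01"), is_obsolete(b"\x02\x01"))
print(decode_signature_algorithm(b"\x04\x01"), is_obsolete(b"\x04\x01"))
```

The pair `02 01` is SHA-1 with RSA, the kind of value that triggers the warning, while `04 01` is SHA-256 with RSA.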

Fixing server signature hash

The short version is: It really cannot be done!

There is no long version. I looked long and hard. No implementation of Apache or Nginx on Linux, or IIS on Windows, that I found has a setting for the server signature algorithm. Since I'm heavily into cloud computing and working with all kinds of load balancers doing TLS offloading, I bumped into this even harder. I can barely affect the available TLS protocol versions and cipher suites, but changing the server signature is out of reach for everybody. After studying this, I still have no idea how the server signature algorithm is chosen in common web servers like Apache or Nginx.

So, you either have this working or not, and there is nothing you can do to fix it. Whoa!