Perl - The most disliked programming language?

Sunday, November 26. 2017

As you can see, the top three really stand out from the rest! You can easily disregard the 2nd and 3rd "best", as nobody really uses VBA or Delphi anymore. Unlike those, Perl is being used. Even your Linux has it installed. All but the tiniest distros pre-install it into the base image, and popular ones such as Mint have it at least as an option. The obvious reason why Perl is installed and used everywhere is its wide popularity back in the 90s. Perl pre-dates Linux and was pretty much the only scripting language in that era, if not counting Bash or tcsh scripting. Then times changed and Perl paved the way for PHP, Ruby, Python and the like.

I don't understand who would NOT love a programming language that can be written with shift-key pressed down all the time!

Here, I present some of the most beautiful pieces of code ever written in Perl (also known as Obfuscated Perl Contest):

- The 1st Annual Obfuscated Perl Contest, Best in "The Perl Journal" category:

package S2z8N3;{

$zyp=S2z8N3;use Socket;

(S2z8N3+w1HC$zyp)&

open SZzBN3,"<$0"

;while(<SZzBN3>){/\s\((.*p\))&/

&&(@S2zBN3=unpack$age,$1)}foreach

$zyp(@S2zBN3){

while($S2z8M3++!=$zyp-

30){$_=<SZz8N3>}/^(.)/|print $1

;$S2z8M3=0}s/.*//|print}sub w1HC{$age=c17

;socket(SZz8N3,PF_INET,SOCK_STREAM,getprotobyname('tcp'))&&

connect(SZz8N3,sockaddr_in(023,"\022\x17\x\cv"))

;S2zBN3|pack$age}

- The 4th Annual Obfuscated Perl Contest, 3rd in Do Something Powerful category:

$_=q(s%(.*)%$_=qq(\$_=q($1),$1),print%e),s%(.*)%$_=qq(\$_=q($1),$1),print%e

- The 5th Annual Obfuscated Perl Contest, Winner of The Old Standby category:

#:: ::-| ::-| .-. :||-:: 0-| .-| ::||-| .:|-. :||

open(Q,$0);while(<Q>){if(/^#(.*)$/){for(split('-',$1)){$q=0;for(split){s/|

/:.:/xg;s/:/../g;$Q=$_?length:$_;$q+=$q?$Q:$Q*20;}print chr($q);}}}print"\n";

#.: ::||-| .||-| :|||-| ::||-| ||-:: :|||-| .

Who would ever hate that?

Look! Even this kitten thinks, it's just a concise form of programming. Nothing to be hated.

Book club: The Art of Deception: Controlling the Human Element of Security

Sunday, November 19. 2017

About Humble Bundle

A couple of months ago there was a really good eBook deal at Humble Bundle. For those of you who don't know what Humble Bundle is, it's a for-profit subsidiary of IGN Entertainment (which in turn is a subsidiary of Ziff Davis). Unlike regular charities, which just make a plea for you to give them money, Humble Bundle makes deals with software vendors to sell products which are way past their prime money-making age. Their slice of the operation is ~20% and the rest goes to the software vendors and the charity of your choosing. In many cases you can choose the charity:software split anywhere in the range 0-100%, and if you feel like it, you can tip Humble Bundle with something extra.

They passed $100M USD donated in September 2017. As there are costs of running the business, they raked in more money than that, but so far nobody has proved that they wouldn't actually deliver on their charity promise. They are doing business with major corporations and if Humble Bundle were caught red-handed, they would face a horde of lawyers suing their asses. For the time being, I choose to believe that they keep their promises, and occasionally when I see something interesting on their mailing list, I keep sending my money to them.

About the author, Kevin Mitnick

Ok, enough Humble Bundle, this is supposed to be about the book.

Since the deal was sweet, I went for it and paid a couple of € for the deluxe bundle of security-related books (I think the actual amount was in the region of 25 €). They delivered the download link for unlocked PDFs instantly after my PayPal payment was accepted. So, now I'm a proud owner of 14 books about information security.

The one book that I really wanted was the famous Art of Deception by Kevin Mitnick, a reformed bad boy who turned to white-hat hacking. A warrant for his arrest was issued back in 1992 and he managed to evade the FBI till February 1995. Since there was no applicable legislation in the USA to convict him for the cracks he made into various US Government agencies and private corporations, the US Department of Justice managed to keep him incarcerated for four and a half years without bail or trial. When he was released, a judge slapped him with a 7-year ban on profiting from books or movies. Still, in 2002 he put together this book and managed to get it published. He also published The Art Of Intrusion: The Real Stories Behind The Exploits Of Hackers, Intruders, And Deceivers in 2005. Wired confirms that the ban on profiting ended in January 2007, but it's unclear to me whether he profited from the books at all before that. If anybody knows, please drop a comment.

About the book

Well, enough of the author, this is supposed to be about the book.

The stories about social engineering tricks, whether ones Mr. Mitnick pulled off himself or ones he merely describes in his book, are really intriguing. But at the same time the technical details are vastly outdated; remember, it was published in 2002 and the stories are from the 60s to the 90s. Reading stories about dial-up modems, faxes and landline voice mail makes me laugh in the wrong places. So, while reading, I was constantly wondering whether it would be possible to pull off a similar feat in the modern world.

The book is written in four parts. Parts 1 and 2 are more about how social engineering works. There is always somebody with poor awareness, either not doing what was instructed, or the instructions are missing or poorly written to begin with. Part 3 contains the "war stories" of how people were fooled into saying or doing something they wouldn't normally do, without understanding the value of their deed to the opposing party. To summarize, it's never a single piece of information which can compromise your organization, it's always a combination of things. To pull off such a social engineering hack, you must have detailed information about procedures and find a weak spot there. Also, most of the hacks are done to multi-site corporations. If your organization is geographically bound to a single location, it will be very hard to call in and request something "for my boss" at the remote site. My experience of small and medium-sized Finnish companies is that most people know everybody at least by name. Any such incoming call would immediately raise suspicion, and people would question the caller's true agenda and identity.

Part 4 contains instructions on how to prevent such social engineering attacks. For anybody not making security policies that part might be a little bit boring. Here is an example of a flowchart on how to train personnel:

Copyright © 2002 by Kevin D. Mitnick

Granted, in the modern world government organizations don't need to pull off such hacks to get your information. You've already volunteered all of that! All they need to do is capture it from your cloud service provider's hard drive. Also, generally speaking, personnel are more aware of the possibility of social engineering. In his CeBIT Global Conferences 2015 video he claims that, for example, dumpster diving still works. I personally wouldn't believe it. In Europe we don't need to print source code or passwords on paper, and if we were that stupid, there is a special secret-material-to-be-destroyed paper bin at the office for such confidential waste.

Also, I remember seeing a video (... which unfortunately I was unable to locate) of Mr. Mitnick describing an assignment he got from a company, where his social engineering failed. It so happened that the receptionist knew about it. When Mr. Mitnick was requesting a piece of information to get his assignment going forward, the receptionist actually responded: "Have you heard of Kevin Mitnick's book Art of Deception?". So, that was an example of well-trained personnel saving the day. But unfortunately the book doesn't have a single example of social engineering failing. I was kinda expecting to see some of those.

About recommendations: people already knowledgeable about social engineering and feats that Mr. Mitnick pulled off don't get that much out of the book, but for everybody else, the book offers mind-opening stories, which can be reflected in everybody's real life. When somebody asks you for something they should already know, it may be time to think about social engineering.

If you have 6 minutes to spare, here is a DEF CON 23 video of Mr. Mitnick pulling off a social engineering hack, with permission from the target corporation, targeted at a pre-selected employee. They lure the poor guy to go to a website using Internet Explorer, which at the time had a known security flaw in it. That way they get some sort of remote-access toolkit onto his computer. Nice! But it would not have been possible without that exploitable flaw being there.

F-Secure Ultralight Anti-Virus

Sunday, November 5. 2017

Which anti-virus software to use on a Windows 10?

There is plenty of software to choose from. Some are free, some are really good at detecting malware, some are award-winning, industry-recognized pieces of software and there is even one that comes with your Windows 10 installation.

For a couple of decades, my personal preference has been a product from F-Secure. For those of you expecting me to hand out a recommendation among the numerous F-Secure products: given the multiple computers I operate on a daily basis, picking a single specific product is not possible. Also, I'm a member of their Beta Program and run a couple of pieces of their software which are not flagged as production-quality.

Here is the part with a recommendation:

When Ultralight Anti-Virus (for Windows) gets released, that's the one I definitely urge you to try out. The user interface is an oddball, simple, but odd:

At first glance, the first question I had was: "OK, where are the settings? Where IS the user interface?!" But that's the beauty of the product, it has no more settings than the above screenshot contains. That's wildly out-of-the-box. Functional, yes. But something completely different. Naturally it's an F-Secure product, and they don't make any compromises with the ability to detect malware. It has no firewall or plugins to your browser or anything unnecessary.

When/If the product is ever released, go check it out!

Cygwin X11 with window manager

Saturday, November 4. 2017

Although I'm a Cygwin fan, I have to admit that the X11 port is not one of their finest works. Still, every once in a while I've been known to run it.

Although there are a number of window managers available for Cygwin, I found it surprisingly difficult to start using one. According to the docs (Chapter 3. Using Cygwin/X) and /usr/bin/startxwin, the XWin command is executed with the -multiwindow option. The XWin man page says: "In this mode XWin uses its own integrated window manager in order to handle the top-level X windows, in such a way that they appear as normal Windows windows."

As a default, that's ok. But what if somebody like me would like to use a real Window Manager?

When startxwin executes xinit, it optionally can run a ~/.xserverrc as a server instead of XWin. So, I created one, and made it executable. In the script, I replace -multiwindow with -rootless to not use the default window manager.

This is what I have:

#!/bin/bash

# If there is no Window Maker installed, just do the standard thing.

# Also, if xinit was called without a DISPLAY argument, do the same.

if [ ! -e /usr/bin/wmaker ] || [ -z "$1" ]; then

exec XWin "$@"

# This won't be reached.

fi

# Alter the arguments:

# Make sure, there is no "-multiwindow" -argument.

args_out=()

for arg; do

[ "$arg" == "-multiwindow" ] && arg="-rootless"

args_out+=("$arg")

done

exec XWin "${args_out[@]}" &

# It takes a while for the XWin to initialize itself.

# Use xset to check if it's available yet.

while ! DISPLAY="${args_out[0]}" xset q > /dev/null 2>&1; do

sleep 1

done

sleep 1

# Kick on a Window Manager

DISPLAY="${args_out[0]}" exec /usr/bin/wmaker &

wait

The script assumes, that there is a Window Maker installed (wmaker.exe). The operation requires xset.exe to exist. Please, install it from package xset, as it isn't installed by default.

Call of Duty: WWII launch

Thursday, November 2. 2017

Given that I work in the Activision/Blizzard/King corporation, every once in a while the job has nice perks.

Today, I got to go to CoD WWII launch party in Stockholm!

It was the first time I've ever been to a launch party of a game! Of course a corpo party is a corpo party. Lots of yada-yada, blah-blah and free booze. But a game launch party is of course about the game. Every party guest had the option to play the game on a PS4. They had also invited a couple of Swedish semi-celebs to play in a friendly eSports-style 6-vs-6 competition. Btw, the winners went home with brand new CoD WWII special edition PS4s. Insiders told me that all of the celeb-gamers had had an option to practice playing the unreleased game at the Activision office in Stockholm.

And of course, nobody went home with empty hands. Everybody was given a goodie-bag with a CoD WWII T-shirt and a PS4 store code for the game.

I'm not a huge fan of FPSs on console, so I don't think I'm going to play that one much. I'll wait for some PC Steam codes to float around the office (eventually they will) and then start playing.

Games: Gran Turismo Sport

Sunday, October 29. 2017

Yes!

The best-ever time sink is out again!

I'm a huge fan of the GT series and have been since the first Gran Turismo was published for PlayStation in 1997. Because the PSX doesn't support racing wheels, I played the game with a NeGcon controller. Most of you have never heard of it, because it supported only PSX and was discontinued around the time the PS2 was released in 2000. The PS2 has a USB port, which made generic USB 1.1 wheels available to the PlayStation/Gran Turismo world too. The NeGcon is one of the weirdest game controllers anybody has ever seen. It's like a normal game controller with a swivel in the middle. Twisting the controller makes it act as a steering wheel. It also has three analog buttons, making throttle and brake control reasonably accurate for race gaming.

Fast forward through GTs 2-6 to GT Sport, which is the latest, best, brightest and first GT for the PS4. The controller I'm using on my PS4 Pro is a Logitech G29, but I'd definitely like to give my NeGcon a go, if the game would support it somehow.

Now I'm wasting space for a Wheel Stand Pro, a NeGcon wouldn't require that!

Of course I have to put some miles to my GT Sport, so, not much happening here in my blogosphere.

Real-world Squid HTTP proxy hierarchy setup - Expat guide

Tuesday, October 17. 2017

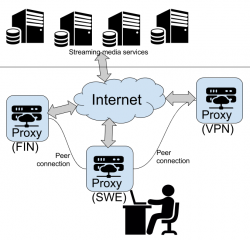

Half a year back I posted about moving to Sweden. I built a network and router setup to gain access to The Net. One of the obvious use cases for a connection is to play back various streaming media services. I have touched on the subject of configuring Squid for YLE Areena earlier.

As an expatriate, I like to see and hear some of the media services I got used to consuming in Finland. Those stupid distribution agreements make that unnecessarily difficult.

Spec

With my current setup, I need to access services:

- directly: Using no fancy routing, straight out of my Swedish IP address. It's faster and less error-prone. This is the majority of my HTTP traffic.

- from Finland: To access geo-IP blocked services from a Finnish IP. I have my own server there and I'm running Squid on it.

- from my VPN-provider: To access selected geo-IP blocked services from a country of my choosing

Of course I can go to my browser settings and manually change settings to achieve any of those three, but what if I want to access all of them simultaneously? To get that, I started browsing Squid configuration directive reference. Unfortunately that is what it says, a reference manual. Some understanding about the subject would be needed for a reference manual to be useful.

Getting the facts

Luckily I have access to Safari Books Online. From that I found an on-line book called Squid: The Definitive Guide:

That has a useful chapter in it about configuring a Squid to talk to other Squids, forming a hierarchy:

© for above excerpt: O'Reilly Media

Now I familiarized myself with Squid configuration directives like: cache_peer, cache_peer_access and always_direct.

... but. Having the book, reading it, reading the man-pages and lot of googling around left me puzzled. In the wild-wild-net there is lot of stumble/fall-situations and most people simply won't get the thing working and those who did, didn't configure their setup like I wanted to use it.

Darn! Yet again: if you want to get something done, you must figure it out by yourself. (again!)

Desired state

This is a diagram of what I wanted to have:

Implementation

But how to get there? I kept stumbling with always_direct allow never and never_direct allow always permutations while trying to declare some kind of ACLs or groupings for which URLs should be processed by which proxy.

After tons and tons of failure & retry & fail again -loops, I got it right! This is my home (Sweden) proxy config targeting the two other Squids to break out of geo-IP -jail:

# 1) Define cache hierarchy

# Declare peers to whom redirect traffic to.

cache_peer proxy-us.vpnsecure.me parent 8080 0 no-query no-digest name=vpnsecure login=-me-here!-:-my-password-here!-

cache_peer my.own.server parent 3128 0 no-query no-digest name=mybox default

# 2) Define media URLs

# These URLs will be redirected to one of the peers.

acl media_usanetwork dstdomain www.usanetwork.com www.ipaddress.com

acl media_netflix dstdomain .netflix.com

acl media_yle dstdomain .yle.fi areenapmdfin-a.akamaihd.net yletv-lh.akamaihd.net .kaltura.com www.ip-adress.com

acl media_ruutu dstdomain ruutu.fi geoblock.nmxbapi.fi

acl media_katsomo dstdomain .katsomo.fi

acl media_cmore dstdomain cmore.fi

# 3) Pair media URLs and their destination peers.

# Everything not mentioned here goes directly out of this Squid.

cache_peer_access vpnsecure allow media_usanetwork

cache_peer_access vpnsecure allow media_netflix

cache_peer_access mybox allow media_yle

cache_peer_access mybox allow media_ruutu

cache_peer_access mybox allow media_katsomo

cache_peer_access mybox allow media_cmore

never_direct allow media_usanetwork

never_direct allow media_yle

never_direct allow media_ruutu

never_direct allow media_katsomo

never_direct allow media_cmore

never_direct allow media_netflix

# 4) end. Done!

request_header_access X-Forwarded-For deny all

Simple, isn't it!

Nope. Not really.

Notes / Explanation

Noteworthy issues from above config:

- In my web browser, I configure it to use the home proxy for all traffic. That simply makes my life easy. All traffic goes through there and my local Squid makes the decisions about what goes where next, or not.

- In the above configuration:

  - I declare two peers (cache_peer), how and where to access them.

  - I declare ACLs by the URL's destination domain (acl dstdomain). It's fast and easy!

  - I associate ACLs with peers (cache_peer_access) to get HTTP routing.

  - I make sure that any traffic destined to be routed does not exit directly from Sweden (never_direct allow). Slipping with that will be disastrous for geo-IP checks!

  - I don't declare my traffic to be originating from an HTTP proxy (request_header_access X-Forwarded-For deny). Some media services follow up to the real client address and do their geo-IP checks on that.

- The VPN-service, I'm using is VPNSecure or VPN.S as they like to be called nowadays. Two reasons:

- No mandatory software installation needed. You can just configure your browser to use their proxy with proxy authentication. And if you are using Google Chrome as your browser, there is a VPN.S extension for super easy configuration!

- Price. It's very competitive. You don't have to pay the list prices. Look around and you can get ridiculous discounts on their announced list prices.

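- My Finnish proxy is just an out-of-the-box Squid with ACLs allowing my primary proxy to access it via the Internet. Other than default settings, it has request_header_access X-Forwarded-For deny in it.

- For testing my setup, I'm using display-your-IP-address services. The two that appear in the ACLs above are www.ipaddress.com (routed via the VPN peer) and www.ip-adress.com (routed via my Finnish proxy).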

- Finnish services C More and MTV Katsomo are pretty much the same thing, share user accounts and in future are merging more and more. To have C More working, both ACLs need to be declared.

- YLE Areena is using Akamai CDN extensively and the host names tend to change.

- Ruutu is using external service for geo-IP for added accuracy. They compare the results and assume the worst! Redirecting both is necessary to keep their checks happy.

- Netflix is using their own state-of-the-art CDN, which is very easy to configure. Regardless of what Mr. Hastings says publicly, they only care that the monthly recurring credit card payment passes. Using different countries for Netflix produces surprising results for availability of content.
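To make the routing logic in the config above easier to follow, here is a minimal Python sketch of the decision my home Squid ends up making. It only models the idea and is not how Squid is implemented internally: the names ACLS, PEER_FOR_ACL, dstdomain_matches and route_for are made up for this illustration. The one Squid behaviour it relies on is that a dstdomain entry with a leading dot matches the domain itself and all of its subdomains, while an entry without the dot matches only that exact host name.

```python
# Illustrative model only: ACLS, PEER_FOR_ACL, dstdomain_matches and
# route_for are hypothetical names, not part of Squid.

# ACL name -> dstdomain entries, copied from the config above.
ACLS = {
    "media_usanetwork": ["www.usanetwork.com", "www.ipaddress.com"],
    "media_netflix": [".netflix.com"],
    "media_yle": [".yle.fi", "areenapmdfin-a.akamaihd.net",
                  "yletv-lh.akamaihd.net", ".kaltura.com",
                  "www.ip-adress.com"],
    "media_ruutu": ["ruutu.fi", "geoblock.nmxbapi.fi"],
    "media_katsomo": [".katsomo.fi"],
    "media_cmore": ["cmore.fi"],
}

# ACL name -> peer name, mirroring the cache_peer_access lines.
PEER_FOR_ACL = {
    "media_usanetwork": "vpnsecure",
    "media_netflix": "vpnsecure",
    "media_yle": "mybox",
    "media_ruutu": "mybox",
    "media_katsomo": "mybox",
    "media_cmore": "mybox",
}


def dstdomain_matches(pattern, host):
    """Return True if host matches a dstdomain-style pattern."""
    host = host.lower().rstrip(".")
    pattern = pattern.lower()
    if pattern.startswith("."):
        # Leading dot: the domain itself and any subdomain match.
        return host == pattern[1:] or host.endswith(pattern)
    # No leading dot: exact host name only.
    return host == pattern


def route_for(host):
    """Return the peer a request for host would use, or 'direct'."""
    for acl, patterns in ACLS.items():
        if any(dstdomain_matches(p, host) for p in patterns):
            return PEER_FOR_ACL[acl]
    return "direct"


for name in ("www.netflix.com", "areena.yle.fi", "ruutu.fi", "example.org"):
    print(name, "->", route_for(name))
```

One thing the sketch makes visible: ruutu.fi and cmore.fi are declared without a leading dot, so only those exact host names are routed to the peer. If a service starts using a www. or other subdomain, the ACL entry would need the leading dot.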

Finally

This is working nicely!

Now moving from one media service to another is seamless and they can run simultaneously. Not that I typically would be watching two or more at the same time, but ... if I wanted to, I could!

WPA2 key exchange broken - KRACK attack

Monday, October 16. 2017

Mathy Vanhoef and Frank Piessens are both decorated security researchers from KU Leuven, Belgium. On an otherwise slow Sunday, 15th October 2017 Alex Hudson dropped a bombshell: Mr. Vanhoef and Mr. Piessens figured out a way to bend the authentication envelope, making WPA2 broken. The authors coined it a Key Reinstallation AttaCK, or KRACK. Whaat?! Darn!

Looks like the release was planned carefully. OpenBSD had the fix on 1st March 2017. That's over 6 months earlier. Also Microsoft got a whiff of this flaw early on, as they have managed to release a patch already. The complete vendor list can be seen @ cert.org. It's a sad read, that.

The information is at https://github.com/kristate/krackinfo and contains a very simple message:

Unless a known patch has been applied, assume that all WPA2 enabled Wi-fi devices are vulnerable.

Ok, that sounds really bad. To cut all the FUD and blah blah out, what's the big deal?

- This is a Wi-Fi client attack

- Explanation: The entire KRACK thing works by spoofing as your router to your client. After the attacker manages to lure your laptop or cell phone into connecting to a fake network, it acts as a man-in-the-middle reading your transmissions.

- You cannot hide your network information. If you transmit or read transmissions, somebody can read that. That's the bad part about radio transmissions, they travel to everyone.

- The only way to hide your radio transmissions is to turn Wi-Fi off.

- Anybody attacking YOU needs to be in the same physical area as you

- Explanation: A random guy from The Net cannot attack you. They need to travel to where you are.

- Regardless of what, attacker will not gain your WPA password

- Explanation: This attack is about fooling your laptop/cell phone/refrigerator to talk to a malicious wireless router. It does not extract your Wi-Fi network password while doing it.

- So, this is almost as bad as the WEP weakness back in 2001. In that particular incident any random person could just crack their way into your network by figuring out what your password is. And then it was possible to read any captured past or future transmission. KRACK works only forwards, your historical transmissions are safe.

- If you are using TKIP or don't know if you are using it - you're doomed!

- Explanation: TKIP, or Temporal Key Integrity Protocol is weak anyway. You shouldn't be using that in the first place!

- If you are using Android or Linux - you're most certainly doomed!

- Explanation: The implementation in Linux (Android is a Linux, you know) is especially flawed! The attacker has easy means of forcing any client onto a fake router and then forcing your encryption key to something the attacker can predict, thus being able to read your traffic in real-time or introduce new data packets and spoof them as originating from your Linux/Android. That's bad, really bad!

- If you are not using Linux, and are using AES-encryption - you're doomed!

- Explanation: Yes, if attacker can lure you to use the faked router, your transmissions can be read.

- Examples of non-Linux clients include, but are not limited to: Windows, macOS, iOS.

- My router has Linux or I got my router free when I got my Internet connection and I don't know what operating system it has - you're doomed!

- Explanation: This is not about your router. This is about attacker faking to be your router. Your router won't be attacked, but attacker will spoof traffic to it.

- If a random attacker can read all my traffic and can inject new transmissions spoofing as you, is that bad?

- Explanation: Yes it is. The attacker can attempt to stop you from using SSL/TLS and there are a number of scenarios where that can happen, so all your precious passwords, credit card numbers and other secrets can leak. However, there are also a number of scenarios where that is not possible. Still, if the attacker has your encrypted traffic, other means of decrypting it exist.

As you can see from the above list, there are quite a few scenarios which end you in the 'you're doomed!' -state. So, assume that you are or will be!

The relevant question remains: Is there anything that can be done?

Answer is: Not really

Just wish/pray that your particular vendor releases fixed firmware sometime soon. Most Android vendors won't.

macOS High Sierra upgrade from USB-stick

Sunday, October 15. 2017

Somebody at Apple really knows how to confuse users. I'm looking at Wikipedia article List of Apple operating systems:

- OS X Yosemite - 10.10

- OS X El Capitan - 10.11

- macOS Sierra - 10.12

- macOS High Sierra (Not to be confused with Sierra) - 10.13

Well ... too late! I'm already confused. Why the hell did they have to use the same name again? To keep me confused, I guess the next macOS will be Low Sierra?

Further details about the creation process can be found from my previous blog post about macOS Sierra, here.

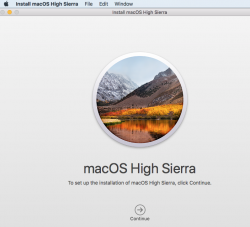

Get macOS High Sierra installer

Go to your Mac's app store, it should be there clearly visible. Start the installation and wait for the download to complete.

Option 1: Create your USB-stick from command line

You can always create your USB-stick from Terminal, as I've described in my previous posts:

Prepare USB-stick:

$ sudo diskutil partitionDisk /dev/disk9 1 GPT jhfs+ "macOS High Sierra" 0b

...

/dev/disk9 (external, physical):

#: TYPE NAME SIZE IDENTIFIER

0: GUID_partition_scheme *30.8 GB disk9

1: EFI EFI 209.7 MB disk9s1

2: Apple_HFS macOS High Sierra 30.4 GB disk9s2

Create installation media:

$ cd /Applications/Install\ macOS\ High\ Sierra.app/Contents/Resources/

$ sudo ./createinstallmedia \

--volume /Volumes/macOS\ High\ Sierra/ \

--applicationpath /Applications/Install\ macOS\ High\ Sierra.app/ \

--nointeraction

Option 2: Create your USB-stick with GUI app

Many people shy away from Terminal window and think the above (simple) commands are too complex. There is a nice solution for those people: DiskMaker X 7.

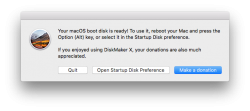

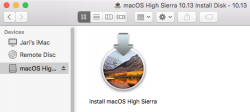

Download the .dmg, install the app and kick it on. You should see something like this:

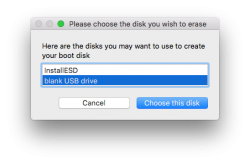

Next question is about type of disk to create (choose USB) and which USB to use:

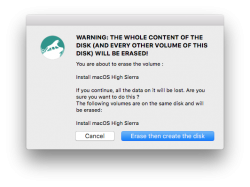

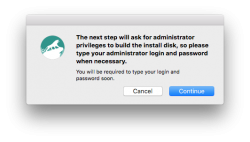

Then get past couple of warnings. Yes, you are about to destroy all data on the USB-stick:

The actual process will take a while and during the creation, plenty of notifications will flash on your screen:

After the long wait is over, your USB-stick is ready to go!

Go upgrade your macs

With your fresh USB-stick, you can continue the installation to any of your Macs.

Meanwhile, check What's new in High Sierra @ Apple.

Troubleshooting

I lost the High Sierra installation files, where can I get them?

Yup, that happened to me too. I had my Macs upgraded, but was requested to do a favor. Obviously my USB-stick was already overwritten with a Linux-image and I had to re-do the process. But whattahell!? The image files were gone! Only old OS X files were remaining. So, looks like after a successful upgrade, this one cleans up its own mess.

To re-load the installation, go to app store, search for "macos high sierra":

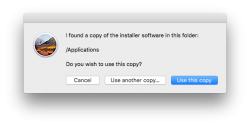

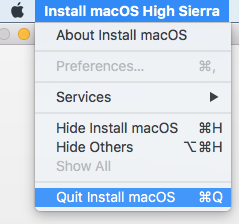

After the lengthy (re-)download, the installer will kick on:

Like always, that's your cue to quit the installer:

Done!

Finally

As the pre-release information from Apple indicated, the "upgrade" is kind of a letdown. It really doesn't provide much important stuff. The most critical update is the new APFS filesystem. This is a no-brainer, as HFS+ is nearing its 20 year mark. Like the ext2 filesystem on Linux (ext3, ext4), HFS+ had its upgrades, but now it's simply time to break free into something completely different.

After upgrade, I took a look at the partitions.

MacBook Pro, SSD, encrypted:

$ diskutil list

/dev/disk0 (internal, physical):

#: TYPE NAME SIZE IDENTIFIER

0: GUID_partition_scheme *251.0 GB disk0

1: EFI EFI 209.7 MB disk0s1

2: Apple_APFS Container disk1 250.1 GB disk0s2

/dev/disk1 (synthesized):

#: TYPE NAME SIZE IDENTIFIER

0: APFS Container Scheme - +250.1 GB disk1

Physical Store disk0s2

1: APFS Volume Macintosh HD 156.0 GB disk1s1

2: APFS Volume Preboot 20.6 MB disk1s2

3: APFS Volume Recovery 520.0 MB disk1s3

4: APFS Volume VM 2.1 GB disk1s4

On the physical disk, note the APFS container. On the synthesized disk, note how all macOS volumes have the APFS type.

iMac, fusion drive, no encryption:

$ diskutil list

/dev/disk0 (internal, physical):

#: TYPE NAME SIZE IDENTIFIER

0: GUID_partition_scheme *121.3 GB disk0

1: EFI EFI 209.7 MB disk0s1

2: Apple_CoreStorage Macintosh HD 121.0 GB disk0s2

3: Apple_Boot Boot OS X 134.2 MB disk0s3

/dev/disk1 (internal, physical):

#: TYPE NAME SIZE IDENTIFIER

0: GUID_partition_scheme *1.0 TB disk1

1: EFI EFI 209.7 MB disk1s1

2: Apple_CoreStorage Macintosh HD 999.3 GB disk1s2

3: Apple_Boot Recovery HD 650.1 MB disk1s3

/dev/disk2 (internal, virtual):

#: TYPE NAME SIZE IDENTIFIER

0: Apple_HFS Macintosh HD +1.1 TB disk2

Logical Volume on disk0s2, disk1s2

Unencrypted Fusion Drive

As announced, fusion drives won't have APFS yet. In the partition info, SSD and spinning platter are nicely displayed separately, forming a virtual drive.

Worthless update or not, now it's done. Like a true apple-biter, I have my gadgets running the latest stuff!

Finnish movie chain Finnkino and capacity planning

Tuesday, October 10. 2017

In Finland and Sweden, all major movie theaters are owned by Bridgepoint Advisers Limited. In Finland, the client-facing business is called Finnkino.

Since they run the majority of all movie screenings, they also sell the tickets for them. When a superbly popular movie opens for ticket sales, the initial flood happens online. What Finnkino is well known for is their inability to do capacity planning for online services. Fine examples of incidents where their ability to process online transactions was greatly impaired:

- 2015: Star Wars: The Force Awakens

- 2016: Rogue One: A Star Wars Story

- 2017: Star Wars: The Last Jedi

The sale of movie tickets happens through their website. I'd estimate that 100% of their sales, at either electronic or physical points-of-sale, are done via their sales software. The system they're using is MCS, or MARKUS Cinema System, which according to their website "can be deployed both on premise and on Microsoft Azure". Out of those two options, guess which mode of deployment Finnkino chose!

A quick analysis indicates that their site is running on their own /28 IPv4 network. Nice!
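For scale: a /28 prefix leaves 4 host bits, so it holds 2^4 = 16 addresses. Here is a quick check with Python's standard ipaddress module. The prefix 192.0.2.0/28 is a documentation-only stand-in, as the actual Finnkino network isn't listed here.

```python
import ipaddress

# 192.0.2.0/28 is a reserved documentation prefix (RFC 5737),
# used here as a stand-in. It is NOT Finnkino's real network.
net = ipaddress.ip_network("192.0.2.0/28")

# Total addresses in the block: 2 ** (32 - 28)
total = net.num_addresses

# Usable host addresses: the network and broadcast addresses are excluded
usable = len(list(net.hosts()))

print(f"{net}: {total} addresses, {usable} usable hosts")
```

That is not a lot of room for front-end servers when an entire country's Star Wars fans show up at the same minute.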

Based on eyewitness reports, their entire system is heavily geared toward serving the local points-of-sale, which were up and running, while both their online sales and vending machines were down. So, I'm speculating that their inability to do proper capacity planning is fully intentional. They choose to throw away the excess requests, keep serving people waiting in queue for the tickets and sell the tickets to avid fans later. That way they won't have to make heavy investments in their own hardware. And they escape from this nicely by apologizing on Twitter: "We're sorry (again)." And that's it. Done!

Hint to IT-staff of Finnkino: Consider cloud and/or hybrid-cloud options.

Google AdWords sending me free money?

Sunday, September 24. 2017

If you own your company and happen to use any Google services (I do both), then you're very likely to be approached by Google's AdWords marketing. They are pitching you initial free credits to use for your ads. Since my business really doesn't rely on advertising, or marketing or anything of such nature, I typically ignore any approaches regardless of who is contacting me.

Google has this approach that they send you snail-mail, and the letter will contain a credit-card sized laminated card containing my business' name and a discount code to be used during registration. As there are nice € sums of money in them, I never threw any of those away. And it so happened that since I kept ignoring Google's marketing machine, they just kept sending me those cards over and over again.

There is 1150,- € worth of free advertising in those. ![]()

You at Google:

Keep sending them, I'll keep collecting them. And amp up the values, I won't even flinch with your 150,- € coupons!

Go big! Go for 500,- € or even bigger!

Apple's new privacy policy against on-line ads

Monday, September 18. 2017

This is a rare event: somebody is actually improving my privacy and I don't have to do anything. Nice!

Apple is introducing intelligent tracking prevention technology in upcoming Safari 11. They're pretty radical in their cookie blocking, they're "deleting even first-party cookies if it's been more than 30 days since you interacted with the website that set the cookie". Naturally corporations depending on tracking you don't much like that, but Apple's response is: Tough luck, privacy comes first. So, it's natural that advertisers don't want you to use Safari or know how to make their tracking harder.
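The quoted 30-day rule boils down to a date comparison. Below is a minimal Python sketch of just that one rule, not of Safari's actual implementation. The names PURGE_AFTER and should_purge are made up for illustration.

```python
from datetime import datetime, timedelta

# Assumption: only the "more than 30 days since interaction" rule from the
# quote is modelled here. The real feature has more conditions than this.
PURGE_AFTER = timedelta(days=30)


def should_purge(last_interaction: datetime, now: datetime) -> bool:
    """Return True if cookies set by a site would be deleted under the quoted rule."""
    return now - last_interaction > PURGE_AFTER


today = datetime(2017, 9, 18)
print(should_purge(datetime(2017, 8, 1), today))  # last visit 48 days ago
print(should_purge(datetime(2017, 9, 1), today))  # last visit 17 days ago
```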

Safari 8, 9 and 10 will block 3rd party cookies by default. You can opt out of that, but it's just ridiculous. I strongly advise against that. Nobody really needs 3rd party cookies, they are never up to any good. A couple of years back a Swedish web shop I frequently purchased movies from changed their on-line payment vendor and they actually used 3rd party cookies for the payment transaction. I took a pause of over 2 years before doing business with them again. Their current payment system works correctly.

Firefox allows 3rd party cookies, but there is a setting:

![]()

Same thing with Chrome, it allows 3rd party cookies, but you can disable them from a well-hidden setting:

![]()

What's really cool with Safari 11 is that they'll move tracking protection to the next level. There is a "special" treatment for well-known 1st party cookies too. This is top notch stuff and it outperforms most add-ons and extensions. Really nice!

Fedora 26: SElinux-policy failing on StrongSWAN IPsec-tunnel

Monday, September 4. 2017

SElinux is a beautiful thing. I love it! However, the drawback of a very fine-grained security control is, that the policy needs to be exactly right. Almost right won't do it.

This bit me when I realized that systemd couldn't control StrongSWAN's charon IKE-daemon. It worked flawlessly when running a simple strongswan start, but failed on systemctl start strongswan. Darn! When a thing works, but doesn't work as a daemon, to me it has the instant smell of an SElinux permission being the culprit.

Very brief googling revealed, that other people were suffering from that same issue:

- Bug 1444607 - SELinux is preventing starter from execute_no_trans access on the file /usr/libexec/strongswan/charon.

- Bug 1467940 - SELinux is preventing starter from 'execute_no_trans' accesses on the file /usr/libexec/strongswan/charon.

Others had reached the same conclusion: it's an SElinux policy failure. The older bug report was from April. That's months before Fedora 26 was released! But neither bug report had a fix for it. I went to browse Bodhi and found out that there is a weekly release of the selinux-policy .rpm-file, but this hadn't gotten the love it desperately needed from RedHat guys.

Quite often self-help is the best help, so I ran audit2allow -i /var/log/audit/audit.log and deduced the following addition to my local policy:

#============= ipsec_t ==============

allow ipsec_t ipsec_exec_t:file execute_no_trans;

allow ipsec_t var_run_t:sock_file { unlink write };

I have no idea if that fix is ever going to be picked up by RedHat, but it definitely works for me. Now my IPsec tunnels survive a reboot of my server.
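For readers who haven't seen how a denial in the audit log turns into an allow rule, here is a rough Python sketch of the idea. It is not how audit2allow is actually implemented, and the sample log line is illustrative: its timestamp, pid, device and inode are made up, and only the command name, path, types and permission come from the bug reports and rules above.

```python
import re
from typing import Optional

# Illustrative AVC denial. Timestamp, pid, dev and ino are invented values.
SAMPLE = (
    'type=AVC msg=audit(1504512000.123:456): avc:  denied  { execute_no_trans } '
    'for  pid=1234 comm="starter" path="/usr/libexec/strongswan/charon" '
    'dev="dm-0" ino=123456 scontext=system_u:system_r:ipsec_t:s0 '
    'tcontext=system_u:object_r:ipsec_exec_t:s0 tclass=file permissive=0'
)

# Pick out the denied permissions, both security contexts and the object class.
AVC_RE = re.compile(
    r"avc:\s+denied\s+\{\s*(?P<perms>[^}]+?)\s*\}.*?"
    r"scontext=(?P<scontext>\S+)\s+tcontext=(?P<tcontext>\S+)\s+"
    r"tclass=(?P<tclass>\S+)"
)


def selinux_type(context: str) -> str:
    """Return the type field of a user:role:type[:level] security context."""
    return context.split(":")[2]


def denial_to_rule(line: str) -> Optional[str]:
    """Turn one AVC denial line into an allow rule, or None if it isn't one."""
    match = AVC_RE.search(line)
    if match is None:
        return None
    perms = match.group("perms").split()
    # A single permission is written bare, several are wrapped in braces.
    perm_text = perms[0] if len(perms) == 1 else "{ " + " ".join(perms) + " }"
    return "allow {} {}:{} {};".format(
        selinux_type(match.group("scontext")),
        selinux_type(match.group("tcontext")),
        match.group("tclass"),
        perm_text,
    )


print(denial_to_rule(SAMPLE))
```

The real tool does considerably more, such as merging rules from many denials. To actually load such rules, the usual route is to let audit2allow -M build a module package and install it with semodule -i.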

Update 10th Sep 2017:

Package selinux-policy-3.13.1-260.8.fc26.noarch.rpm has the following changelog entry:

2017-08-31 - Lukas Vrabec <lvrabec@redhat.com> - 3.13.1-260.8

- Allow ipsec_t can exec ipsec_exec_t

... which fixes the problem.

To test that, I dropped my own modifications out of local policy and tested. Yes, working perfectly! Thank you Fedora guys for this.

EPIC 5 Fedora 26 build

Sunday, September 3. 2017

Not many people IRC anymore. I've been doing that for the past 23 years and I have no intention of stopping. For other IRC fans, I recommend reading the Wikipedia article about EFnet. It contains a very nice history of IRC networks since 1990. For example, I didn't realize that our European IRC is a split of the original EFnet. Back in 1994 we really didn't have any names for the networks, there was just "irc".

Ok, back to the original topic. My weapon of choice has always been EPIC. Given the unpopularity of IRC-clients, Fedora Linux for example ships only a legacy EPIC 4. I've been running EPIC 5 myself for the past years, so I had to do some tinkering to get it properly packaged and running on my own box.

The latest version of EPIC5 is 1.2.0, but it won't compile on Fedora 26, as EPIC5 expects OpenSSL 1.0.2 libraries and Fedora 26 has OpenSSL 1.1.0. The options are to either drop SSL-support completely or patch the source code for 1.1.0 support. I chose the latter.

Fedora 26 binary is at: http://opensource.hqcodeshop.com/EPIC/5/RPMS/epic5-1.2.0-1.fc26.x86_64.rpm

source is at: http://opensource.hqcodeshop.com/EPIC/5/SRPMS/epic5-1.2.0-1.fc26.src.rpm

Any comments are welcome!

Twitch'ing with Larpdog - Assembly and donation of a gaming PC

Sunday, August 6. 2017

Today I was helping a friend with his Twitch-stream. Apologies to non-Finnish readers, the stream and accompanying information are in Finnish.

Mr. Larpdog has a pretty cool Twitch-studio:

I've never seen an Elgato Stream Deck before. But having witnessed it being used in a live stream, it sure makes management so much easier.

So, the idea of this particular stream was to assemble a PC and donate it to a follower of the stream. The money (I think 988 €) for the PC parts was donated by other followers.

The entire stream is at https://www.twitch.tv/videos/164849338, and I make a brief appearance there in the beginning.