Windows 8.1 upgrade and Media Center Pack

Wednesday, December 11. 2013

Earlier I wrote about upgrading my Windows 8 into Windows 8.1. What I didn't realize at the time was that the upgrade lost my Media Center Pack.

I only noticed when I needed to play a DVD on the laptop and found out that the OS could no longer do that. When Windows 8 was released, it didn't have many media capabilities. To fix that, a couple of months after the release Microsoft handed out Media Center Pack keys for free to anybody who requested one. I got a couple of the keys and installed one on my laptop.

Anyway, the 8.1 upgrade forgot to mention that it would downgrade the installation back to a non-media-capable edition. That should be an easy fix, right?

Wrong!

After the 8.1 upgrade was completed, I went to "Add features to Windows", said that I already had a key, but Windows told me that nope, "Key won't work". Nice.

At the time I had plenty of other things to take care of, and the media issue was silently forgotten. Now that I needed the feature, I went to add features again, and hey presto! It said that the key was OK. For a couple of minutes Windows did something magical and then ended the installation with a "Something went wrong" type of message. The option to add features was gone at that point, so I really didn't know what to do.

The natural thing to do next is to go googling. I found an article on the My Digital Life forums where somebody complained about having the same issue. The classic remedy for everything ever since Windows 1.0 has been a reboot. Windows sure likes to reboot. I did that and guess what, during shutdown there was an upgrade installing. The upgrade completed after the boot and there it was: Windows 8.1 had Media Center Pack installed. Everything worked, and that was that, until ...

Then came the second Tuesday of the month, traditionally the day for Microsoft security updates. I installed them and a reboot was requested. My Windows 8.1 started disliking me after that. The first thing it did after the reboot was complain about Windows not being activated! Oh, come on! I punched in the Windows 8 key and it didn't work. Then I typed in the Media Center Pack key and that helped. Nice. Luckily Windows 8 activation is in the stupid full-screen mode, so it is really easy to copy/paste a license key. NOT!

The bottom line is: Media Center Pack is really poorly handled. I'm pretty sure nobody on Microsoft's Windows 8 team ever installed the MCP. This is a typical case of end users doing all the testing. Darn!

Younited cloud storage

Monday, December 9. 2013

I finally got my account into younited. It is a cloud storage service by F-Secure, the Finnish security company. They boast that it is secure, can be trusted, and that the data is hosted in Finland, out of reach of those agencies with three-letter acronyms.

The service offers 10 GiB of cloud storage and plenty of clients for it. Currently you can get in only by invitation. The Windows client looks like this:

Looks nice, but ...

I've been using Wuala for a long time. Its functionality is pretty much the same: you put your files into a secure cloud and can access them via a number of clients. The UI on Wuala works, the transfers are secure, the data is hosted on Amazon in Germany, and the company is Swiss, owned by the French company LaCie. When compared with Younited, there is a huge difference, and it is easy to see which of the services has been around for years and which one is in open beta.

Given all the trustworthiness and security and all, the bad news is: in its current state Younited is completely useless. It would work if you had one picture, one MP3 and one Word document to store. Its only way of storing items is to sync them. I don't want to do only that! I want to create a folder and a subfolder under it, and store a folder full of files in that! I need my client storage and cloud storage to be separate entities. Sync is good for only a handful of things, but in F-Secure's mind that's the only way to go. They are in beta, but it would be good for them to start listening to their users.

If only Wuala would stop using Java in their clients, I'd stick with them.

CentOS 6 PHP 5.4 and 5.5 for Parallels Plesk Panel 10+

Friday, November 29. 2013

One of my servers is running Parallels Plesk Panel 11.5 on CentOS 6. CentOS is a good platform for web hosting, since it is robust, well maintained and gets updates for a very long time. The bad thing is that version numbers don't change during all those maintenance years. In many cases that is a very good thing, but when it comes to web development, once in a while it is nice to get upgraded versions and the new features that come with them.

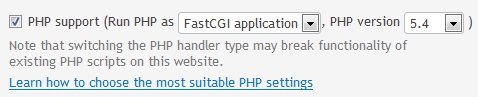

In version 10, Parallels Plesk introduced the possibility of choosing the PHP version. It is possible to run PHP via Apache's mod_php, but Parallels Plesk does not support that for the additional versions. The only supported option is to run PHP via CGI or FastCGI. Not having PHP via mod_php is not a real problem, as FastCGI actually performs better on a web box when the load gets high enough. The problem is that you cannot stack PHP installations on top of each other: different versions of a package tend to reside in the exact same physical directories. That's something every sysadmin learns early on in their learning curve.

CentOS, being an RPM distro, can have relocatable RPM packages. Still, if you install different versions of the same package into different directories, the package manager complains that a version has already been installed. To solve this and have multiple PHP versions in my Plesk, I had to prepare the packages myself.

I started with Andy Thompson's site webtatic.com. He has prepared CentOS 6 packages for PHP 5.4 and PHP 5.5. His source packages are mirrored at http://nl.repo.webtatic.com/yum/el6/SRPMS/. He did a really good job and the packages are excellent. However, the last problem remains: now we have a choice between the default CentOS PHP 5.3.3 and Andy's PHP 5.4/5.5, but only one of them can exist at a time, since they install into the same directories.

My packages are at http://opensource.hqcodeshop.com/CentOS/6 x86_64/Parallels Plesk Panel/ and they can co-exist with each other and with the standard CentOS PHP. The list of changes is:

- Interbase-support: dropped

- MySQL (the old one): dropped

- mysqlnd is there, you shouldn't be using anything else anyway

- Thread safe (ZTS) and embedded versions: dropped

- CLI and CGI/FastCGI are there, the versions are heavily optimized to be used in a Plesk box

- php-fpm won't work, guaranteed!

  - I did a sloppy job with that. In principle, you could run any number of php-fpm daemons on the same machine, but ... I didn't do the extra work required, as Plesk cannot benefit from that.

After a standard RPM install, you need to tell Plesk about the additional PHP. Read all about that in the Parallels Plesk Panel 11.5 Administrator's Guide, section Multiple PHP Versions. This is what I ran:

/usr/local/psa/bin/php_handler --add -displayname 5.4 \
-path /opt/php5.4/usr/bin/php-cgi \
-phpini /opt/php5.4/etc/php.ini \
-type fastcgi

After doing that, in the web hosting dialog there is a choice:

Note that I intentionally named PHP version 5.4.22 simply 5.4. My intention is to keep updating the 5.4 series and not to register a new PHP handler for each minor update.

Also in a shell:

-bash-4.1$ /usr/bin/php -v
PHP 5.3.3 (cli) (built: Jul 12 2013 20:35:47)
-bash-4.1$ /opt/php5.4/usr/bin/php -v
PHP 5.4.22 (cli) (built: Nov 28 2013 15:54:42)
-bash-4.1$ /opt/php5.5/usr/bin/php -v
PHP 5.5.6 (cli) (built: Nov 28 2013 18:20:00)

Nice! Now I can have a choice for each web site. Btw. Andy, thanks for the packages.
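If you register several versions, the php_handler call is easy to generate. Below is a minimal Python sketch that only builds and prints the command lines. It assumes every version follows the same /opt/phpX.Y layout as 5.4; for 5.5 only /opt/php5.5/usr/bin/php is shown above, so the php-cgi and etc/php.ini paths for it are an assumption. The display name is cut down to major.minor, following the naming choice described above.

```python
import shlex
import sys

PHP_HANDLER = "/usr/local/psa/bin/php_handler"


def display_name(full_version: str) -> str:
    """Cut e.g. '5.4.22' down to '5.4', so minor updates reuse one handler."""
    major, minor = full_version.split(".")[:2]
    return f"{major}.{minor}"


def handler_command(full_version: str) -> list[str]:
    """Build the php_handler --add arguments for a PHP under /opt/phpX.Y."""
    name = display_name(full_version)
    prefix = f"/opt/php{name}"  # assumption: every version uses this layout
    return [
        PHP_HANDLER, "--add",
        "-displayname", name,
        "-path", f"{prefix}/usr/bin/php-cgi",
        "-phpini", f"{prefix}/etc/php.ini",
        "-type", "fastcgi",
    ]


if __name__ == "__main__":
    # Only prints the commands; review them, then run them by hand as root.
    for version in sys.argv[1:] or ["5.4.22", "5.5.6"]:
        print(shlex.join(handler_command(version)))
```

Printing instead of executing is deliberate: registering a handler changes the panel configuration, so it is worth eyeballing the paths first.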

Parallels Plesk Panel: Disabling local mail for a subscription

Thursday, November 28. 2013

Disabling mail cannot be done via the GUI. Going to the subscription settings and unchecking the "Activate mail service on domain" setting does not do the trick. Mail cannot be disabled for a single domain; it has to be disabled for the entire subscription. See KB Article ID: 113937 about that.

I found a website saying that the domain command's -mail_service false setting would help. It does not. For example, this does not do the trick:

/usr/local/psa/bin/domain -u domain.tld -mail_service false

It looks like this in the Postfix log /usr/local/psa/var/log/maillog:

postfix/pickup[20067]: F2B5222132: uid=0 from=<root>
postfix/cleanup[20252]: F2B5222132: message-id=<20131128122425.F2B5222132@da.server.com>
postfix/qmgr[20068]: F2B5222132: from=<root@da.server.com>, size=4002, nrcpt=1 (queue active)
postfix-local[20255]: postfix-local: from=root@da.server.com, to=luser@da.domain.net, dirname=/var/qmail/mailnames
postfix-local[20255]: cannot chdir to mailname dir luser: No such file or directory
postfix-local[20255]: Unknown user: luser@da.domain.net
postfix/pipe[20254]: F2B5222132: to=<luser@da.domain.net>, relay=plesk_virtual, delay=0.04, delays=0.03/0/0/0, dsn=2.0.0, status=sent (delivered via plesk_virtual service)
postfix/qmgr[20068]: F2B5222132: removed

not cool.

However, KB Article ID: 116927 is more helpful. It offers the mail command. For example, this does do the trick:

/usr/local/psa/bin/mail --off domain.tld

Now my mail exits the box:

postfix/pickup[20067]: 5218222135: uid=10000 from=<user>
postfix/cleanup[20692]: 5218222135: message-id=<mediawiki_0.5297385c4d15f5.15419884@da.server.com>
postfix/qmgr[20068]: 5218222135: from=<user@da.server.com>, size=1184, nrcpt=1 (queue active)
postfix/smtp[20694]: certificate verification failed for aspmx.l.google.com[74.125.136.27]:25: untrusted issuer /C=US/O=Equifax/OU=Equifax Secure Certificate Authority
postfix/smtp[20694]: 5218222135: to=<luser@da.domain.net>, relay=ASPMX.L.GOOGLE.COM[74.125.136.27]:25, delay=1.1, delays=0.01/0.1/0.71/0.23, dsn=2.0.0, status=sent (250 2.0.0 OK 1385642077 e48si8942242eeh.278 - gsmtp)
postfix/qmgr[20068]: 5218222135: removed

Cool!
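The interesting part of both logs is the relay= field of the delivery line: relay=plesk_virtual means Plesk's local delivery swallowed the message, while an SMTP relay means it left the box. A minimal Python sketch that pulls those lines out of a maillog, assuming the default short hexadecimal Postfix queue IDs and the line format shown above; the script name in the usage comment is hypothetical.

```python
import re
import sys

# A delivery line, e.g.
# postfix/pipe[20254]: F2B5222132: to=<luser@da.domain.net>, relay=plesk_virtual, ... status=sent (...)
DELIVERY = re.compile(
    r"postfix/\w+\[\d+\]: (?P<qid>[0-9A-F]+): to=<(?P<to>[^>]*)>, "
    r"relay=(?P<relay>[^,]+),.*status=(?P<status>\w+)"
)


def deliveries(lines):
    """Yield (queue_id, recipient, relay, status) for every delivery line."""
    for line in lines:
        match = DELIVERY.search(line)
        if match:
            yield match.group("qid", "to", "relay", "status")


def is_local(relay: str) -> bool:
    """Plesk's own pipe transport means the mail never left the box."""
    return relay == "plesk_virtual"


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python relays.py /usr/local/psa/var/log/maillog
    with open(sys.argv[1], errors="replace") as log:
        for qid, to, relay, status in deliveries(log):
            where = "LOCAL " if is_local(relay) else "REMOTE"
            print(f"{where} {qid} {to} via {relay}: {status}")
```

Note that status=sent alone says nothing useful here: the locally "delivered" message in the first log was also marked sent, even though the mailbox did not exist.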

How not to process bug reports - The Red Hat way

Wednesday, November 27. 2013

Over 5 years ago I filed a bug report about GCC crashing during ImageMagick compilation on RHEL 5. Nobody at Red Hat cared about that until a couple of days ago.

Funny thing. At the time I had the issue, I simply kept the old ImageMagick and completed the project with that one. It would have been nice to have a more recent version, but since the new one would not compile, I just forgot about it.

Now the Red Hat guy Jeff is just being stupid. Why would anybody care anymore? Why did he have to do the obligatory works-for-me / need-more-information routine? At this point it's just insulting, since they ignored the issue when it was actually present. Who would use RHEL 5 anymore? Not me.

OS X Time Machine waking up from sleep to do a backup

Tuesday, November 26. 2013

I was pretty amazed to notice that my Mac actually wakes up for the sole purpose of running a scheduled backup and then goes back to sleep. Oh, but why?

Taking the issue to the web helped: I found an Apple Support Communities discussion with the topic "time machine wake up unwanted". I don't think my Mac did that before 10.9. Anyway, I can confirm that 10.9 does this rather stupid thing.

Luckily the discussion thread also offers the fix: "Time Machine won't wake up a Mac, unless another Mac is backing-up to a shared drive on it via your network, and the Wake for network access box is checked in System Preferences > Energy Saver". Definitely something for me to try.

It helped! I can confirm that there are no backups for the time my Mac was asleep. Soon after I woke it up, it started a Time Machine backup and completed it.

Thanks Pondini in Florida, USA!

Vim's comment line leaking is annoying! Part 2

Monday, November 25. 2013

This is a follow-up to my previous blog entry about vim's comment leaking.

It looks like my instructions are not valid anymore. When I launch a fresh vim and do the initial check:

:set formatoptions

As expected, it returns:

formatoptions=crqol

However, running my previously instructed command:

:set formatoptions-=cro

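Will not change the formatting. Darn! Vim's own help explains why: when an option is a list of flags, the value given to -= must appear exactly as it does in the option, and "crqol" does not contain the string "cro". The fix is to remove the flags one by one: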

:set formatoptions-=c formatoptions-=r formatoptions-=o

After that, the current status check will return:

formatoptions=ql

Now, the comments do not leak anymore.
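To make the rule concrete, here is a simplified Python model of :set with -= on a flag-list option, assuming (as Vim's :help for :set-= states) that the removed value must appear in the option exactly as written. It is not Vim's actual implementation, just that rule applied to plain strings.

```python
def vim_remove(value: str, flags: str) -> str:
    """Model of ':set opt-=flags' on a flag-list option: exact substring only."""
    start = value.find(flags)
    if start < 0:
        return value  # not found as written, so nothing is removed
    return value[:start] + value[start + len(flags):]


def remove_each(value: str, flags: str) -> str:
    """Model of ':set opt-=c opt-=r opt-=o', one flag at a time."""
    for flag in flags:
        value = vim_remove(value, flag)
    return value


if __name__ == "__main__":
    print(vim_remove("crqol", "cro"))   # crqol: "cro" is not a substring
    print(remove_each("crqol", "cro"))  # ql
    print(vim_remove("croql", "cro"))   # ql: works only if the flags happen to be in this order
```

This is why the combined form can appear to work on one setup and silently do nothing on another: it depends on the order in which the flags were set.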

Apache configuration: Exclude Perl-execution for a single directory

Wednesday, November 20. 2013

My attempt to distribute the Huawei B593 exploit tool failed yesterday. Apparently people could not download the source code and got an HTTP 500 error instead.

The reason for the failure was that Apache HTTPd actually executed the Perl script as a CGI script. Naturally it failed miserably due to missing dependencies. Also, the error output was not CGI-compliant, so Apache rejected it and returned an error instead. At the same time, my server does have runnable Perl scripts, for example the DNS tester. So the problem was: how to disable script execution for a single directory while still allowing it for the rest of the virtual host?

Solution:

With Google I found somebody asking something similar for ColdFusion (who uses that nowadays?) and adapted it to my needs. I created an .htaccess file with the following content:

<Files ~ (\.pl$)>
SetHandler text/html
</Files>

Ta daa! It does the trick! It overrides the global setting and serves the Perl code as-is instead of executing it, making the download possible.
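To see which files the override catches, the same regular expression can be tried out in Python. A small sketch, assuming Apache matches the <Files ~ ...> pattern against the file's base name and that this simple pattern behaves the same in Python's re as in Apache's regex engine; the file names other than B593cmd.pl are made up.

```python
import re

# The pattern from <Files ~ (\.pl$)>, tested against a file's base name.
PERL_FILE = re.compile(r"(\.pl$)")


def override_applies(basename: str) -> bool:
    """True if the SetHandler override in the .htaccess covers this file."""
    return PERL_FILE.search(basename) is not None


if __name__ == "__main__":
    for name in ("B593cmd.pl", "B593cmd.pl.txt", "README.html"):
        print(name, override_applies(name))
```

Because of the $ anchor, only names ending in .pl are affected; everything else in the directory keeps the virtual host's normal handling.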

Exploit: Running commands on B593 shell

Tuesday, November 19. 2013

Mr. Ronkainen at http://blog.asiantuntijakaveri.fi/ has done some really good research on the Huawei B593 web interface. He discovered that the ping command in the diagnostics page runs any command you like. Really! Any command.

Being a lazy person, I didn't want to use cURL to do all the hacking; that's way too much work for me. So I hacked together a quick Perl script to do the same thing. Get my script from http://opensource.hqcodeshop.com/Huawei%20B593/exploit/B593cmd.pl

To use my script, you'll need 3 parameters:

- The host name or IP-address of your router, typically it is 192.168.1.1

- The admin password, typically it is admin

- The command to run. Anything you want.

For example, the command B593cmd.pl 192.168.1.1 admin "iptables -nL INPUT" will yield:

Chain INPUT (policy ACCEPT)
target prot opt source destination
DROP all -- 0.0.0.0/0 0.0.0.0/0 state INVALID
ACCEPT all -- 0.0.0.0/0 0.0.0.0/0 state RELATED,ESTABLISHED
INPUT_DOSFLT all -- 0.0.0.0/0 0.0.0.0/0
INPUT_SERVICE_ACL all -- 0.0.0.0/0 0.0.0.0/0
INPUT_URLFLT all -- 0.0.0.0/0 0.0.0.0/0
INPUT_SERVICE all -- 0.0.0.0/0 0.0.0.0/0
INPUT_FIREWALL all -- 0.0.0.0/0 0.0.0.0/0

On my box SSHd does not work. No matter what I do, it fails to open a prompt. I'll continue investigating to see if it yields to a bigger hammer or something.

Happy hacking!

Telenor firmware for B593u-12

Saturday, November 16. 2013

I was looking for firmware for the s-22, and minutes after finding the Vodafone firmware, a Telenor one popped up. It must be a really new one, as I cannot find any comments about it on The Net. Firmware version V100R001C00SP070 can be downloaded from http://stup.telenor.net/huawei-b593/V100R001C00SP070/.

I didn't test this version either. If you do the upgrade, please drop me a comment. The version number and router compatibility information come from the extracted firmware header, which says (in hexdump -C):

# hexdump -C Telenor_fmk/image_parts/header.img | head
00000000 48 44 52 30 00 d0 8f 00 cf 3b 61 18 00 00 01 00 |HDR0.....;a.....|
00000010 1c 01 00 00 a4 14 16 00 00 00 00 00 42 35 39 33 |............B593|
00000020 2d 55 31 32 00 00 00 00 00 00 00 00 56 31 30 30 |-U12........V100|
00000030 52 30 30 33 43 30 33 42 30 30 38 00 00 00 00 00 |R003C03B008.....|
00000040 00 00 00 00 00 00 00 00 00 00 00 00 56 31 30 30 |............V100|
00000050 52 30 30 31 43 30 30 53 50 30 37 30 00 00 00 00 |R001C00SP070....|
00000060 00 00 00 00 00 00 00 00 00 00 00 00 56 65 72 2e |............Ver.|
00000070 42 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |B...............|
00000080 00 00 00 00 00 00 00 00 00 00 00 00 31 31 2e 34 |............11.4|
00000090 33 33 2e 36 31 2e 30 30 2e 30 30 30 00 00 00 00 |33.61.00.000....|

This is the obligatory warning: if you have an s-22, DON'T update with this firmware. If you don't know which version you have: DON'T update with this firmware. Nobody wants to brick the router, right? It is expensive and all.
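Instead of eyeballing the hexdump, the text fields can be pulled out with a few lines of Python, the same way the Unix strings tool works. This is a sketch under assumptions: the header layout is not documented here, and reading the B593-U12 string as the target model is an inference from the headers shown in these posts. A matching string alone does not prove an image is safe for your router.

```python
import re
import sys

# Runs of 4+ printable ASCII bytes, the same heuristic the Unix `strings` tool uses.
PRINTABLE_RUN = re.compile(rb"[\x20-\x7e]{4,}")


def header_strings(data: bytes) -> list[str]:
    """Return the text fields embedded in a firmware header.img."""
    return [m.group().decode("ascii") for m in PRINTABLE_RUN.finditer(data)]


def looks_like_model(data: bytes, model: str = "B593-U12") -> bool:
    """Assumption: the model name is stored as its own NUL-terminated field."""
    return model in header_strings(data)


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python fwstrings.py Telenor_fmk/image_parts/header.img
    with open(sys.argv[1], "rb") as f:
        header = f.read(0xA0)  # the 160 bytes shown by `hexdump -C | head`
    for text in header_strings(header):
        print(text)
    print("B593-U12 image:", looks_like_model(header))
```

For the Telenor header above this prints HDR0, B593-U12, V100R003C03B008, V100R001C00SP070, Ver.B and 11.433.61.00.000.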

Update 5th March 2014:

Mr. Bjørn Grønli shared his test results with us. Here is a spreadsheet of various B593u-12 firmware versions and their features.

Vodafone firmware for B593u-12

Saturday, November 16. 2013

I was looking for firmware for the s-22 and bumped into Vodafone's firmware. It seems to be in use at least at Vodafone Germany. Firmware version V100R001C35SP061 can be downloaded from http://vve.su/vvesu/files/misc/B593/.

Just to be clear, 3.dk's version for the u-12 is V100R001C26SP054. Another thing: I didn't test the new version, as my router currently runs just fine. If you do, please drop me a comment. The version number and router compatibility information come from the extracted firmware header, which says (in hexdump -C):

# hexdump -C Vodafone_fmk/image_parts/header.img | head
00000000 48 44 52 30 00 10 9b 00 04 da 6f d2 00 00 01 00 |HDR0......o.....|
00000010 1c 01 00 00 d4 14 16 00 00 00 00 00 42 35 39 33 |............B593|
00000020 2d 55 31 32 00 00 00 00 00 00 00 00 56 31 30 30 |-U12........V100|
00000030 52 30 30 33 43 30 33 42 30 30 38 00 00 00 00 00 |R003C03B008.....|
00000040 00 00 00 00 00 00 00 00 00 00 00 00 56 31 30 30 |............V100|
00000050 52 30 30 31 43 33 35 53 50 30 36 31 00 00 00 00 |R001C35SP061....|
00000060 00 00 00 00 00 00 00 00 00 00 00 00 56 65 72 2e |............Ver.|
00000070 42 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |B...............|
00000080 00 00 00 00 00 00 00 00 00 00 00 00 31 31 2e 33 |............11.3|
00000090 33 35 2e 33 33 2e 30 30 2e 30 30 30 00 00 00 00 |35.33.00.000....|

This is the obligatory warning: if you have an s-22, DON'T update with this firmware. If you don't know which version you have: DON'T update with this firmware. Nobody wants to brick the router, right? It is expensive and all.

Huawei B593 different models

Sunday, November 10. 2013

Just to clarify: my exact model of Huawei 4G router is CPE B593u-12.

So my previous writings about 3's firmware and Saunalahti's firmware are specific to that exact model. According to the 4G LTE Mall website, the following models exist:

- B593u-12: FDD 800/900/1800/2100/2600MHz

- B593s-22: TDD 2600 FDD 800/900/1800/2100/2600MHz (Speed to 150Mbps)

- B593s-82: TDD 2300/2600MHz

- B593s-58: TDD 1900/2300/2600MHz

- B593s-58b: TDD 1900/2300MHz

- B593u-91: TDD 2300/2600MHz

- B593u: LTE FDD 850/900/1800/1900/2600 MHz

- B593s: Band 42 (3400-3600MHz)

- B593u-513

- B593s-42

- B593u-501

- B593u-41

- B593s-601

In Finland the most common models are the first two: u-12 and s-22.

There are a number of discussions about getting new firmware (they even copy/paste stuff from my blog without crediting me as the author), but please carefully find out your exact model before upgrading. If you manage to flash incorrect firmware, it will most likely brick your device. I didn't try that and don't plan to.

What's funny is that Huawei does not publicly list a B593 in their product portfolio; apparently their only sales/support channel is via their client telcos, and they don't publish anything except the source code required by the GPL v2 license.

Ridiculously big C:\windows\winsxs directory

Tuesday, November 5. 2013

A lot of my Windows 7 testing is done in a virtual installation. I run it under KVM and aim for a small disk footprint, as I have a number of other boxes there too. One day I came to realize that my Windows 7 installation was running out of disk space. That was strange: I have literally nothing installed in it, and it still manages to eat up 30 GiB of space.

There is Microsoft KB Article ID: 2795190, "How to address disk space issues that are caused by a large Windows component store (WinSxS) directory", explaining this behavior.

The fix is to use the Deployment Image Servicing and Management tool, DISM. Running something like this as administrator will help:

C:\Windows\System32\Dism.exe /online /Cleanup-Image /SpSuperseded

It will say something like "Removing backup files created during service pack installation". It will free up more than 4 GiB of space. The rest of the garbage will stay on the drive, as it originates from regular Windows updates.

Upgrading into Windows 8.1

Friday, October 25. 2013

Why does everything have to be updated via download? That's completely fucked up! In the good old days you could download an ISO image and update whenever you wanted, as many things as you needed. The same plague is in Mac OS X, Windows and all the important applications. I hate this!

Getting the Windows 8.1 upgrade was annoying, since it failed to upgrade my laptop. It really didn't explain what happened; it just said "failed rolling back". Then I bumped into an article "How to download the Windows 8.1 ISO using your Windows 8 retail key". Nice! Good stuff there. I did that and got the file.

The next thing I did was take my trustworthy Windows 7 USB/DVD download tool and create a bootable USB stick from the 8.1 upgrade ISO file. I booted from the stick and got into the installer, which said that this is not the way to do the upgrade. Come on! A second boot back into Windows 8 and starting the upgrade from the USB stick resulted in "Setup has failed to validate the product key". I googled that and found a second article about getting the ISO file (Windows 8.1 Tip: Download a Windows 8.1 ISO with a Windows 8 Product Key), where a comment from Mr. Robin Tick had a solution for this: create a sources\ei.cfg file on the USB stick with the following contents:

[EditionID]
Professional
[Channel]
Retail
[VL]
0
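The same file can also be written with a short script. A minimal Python sketch, assuming the USB stick is mounted at a path you pass on the command line (the drive letter in the usage comment is only an example) and using Windows CRLF line endings to be on the safe side. The function names are hypothetical; only the three sections come from the recipe above, where [VL] 0 marks a non-volume-license (retail) image.

```python
import sys
from pathlib import Path


def ei_cfg_text(edition: str = "Professional", channel: str = "Retail") -> str:
    """The three sections of the recipe above; [VL] 0 means not volume licensed."""
    return f"[EditionID]\n{edition}\n[Channel]\n{channel}\n[VL]\n0\n"


def write_ei_cfg(usb_root: str, **kwargs: str) -> Path:
    """Write sources\\ei.cfg on the installer stick mounted at usb_root."""
    target = Path(usb_root) / "sources" / "ei.cfg"
    target.parent.mkdir(parents=True, exist_ok=True)
    # newline="\r\n" turns every "\n" into a Windows line ending on any platform
    with open(target, "w", encoding="ascii", newline="\r\n") as f:
        f.write(ei_cfg_text(**kwargs))
    return target


if __name__ == "__main__" and len(sys.argv) > 1:
    # e.g. python make_ei_cfg.py E:\ (E: being a hypothetical USB stick)
    print("Wrote", write_ei_cfg(sys.argv[1]))
```

The edition and channel must match the license you actually own; Professional/Retail is simply what the recipe above uses.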

Then the upgrade started to roll my way. To my amazement, BitLocker didn't stop the upgrade from proceeding. It didn't really ask for the PIN code or anything, but went through all the upgrade stages: Installing, Detecting devices, Applying PC settings, Setting up a few more things, Getting ready, and then it was ready to go. It was refreshing to experience success after the initial failure. I'm guessing that BitLocker made the downloaded upgrade fail.

The next thing I tried was upgrading my office PC. It is a Dell OptiPlex with OEM Windows 8. Goddamn it! It did the upgrade, but in the process my OEM Windows 8 was converted into a retail Windows 8.1. Was that really necessary? How much effort would it be to simply upgrade the operating system? Or at least give me a warning that in order to proceed with the upgrade, the OEM status will be lost. Come on, Microsoft!

Authenticating HTTP-proxy with Squid

Thursday, October 24. 2013

Every now and then you don't want to approach a website from your own address. There may be a number of "valid" reasons for doing that besides criminal activity. Even YouTube has geoIP limitations for certain live feeds. Or you may be a road warrior accessing the Internet from a different IP every time and want to even that out.

Anyway, there is a simple way of doing that: expose an HTTP proxy to the Net and configure your browser to use it. Whenever you choose to expose something to the wild-wild-net, be very aware that you're opening a door for The Bad Guys too. In this case, if somebody gets a hint that they can act anonymously via your server, they'd be more than happy to do so. To keep The Bad Guys out of your personal proxy, some sort of authentication mechanism is a very good idea.

Luckily, my weapon of choice, the Squid caching proxy, has a number of pluggable authentication mechanisms built into it. On Linux, the simplest one to use is the already existing Unix user accounts. BEWARE! This literally means sending your password over the wire in plain text with every request you make. At present only Google Chrome can access Squid via HTTPS to protect your proxy password, and even that is quirky: a proxy autoconfig file needs to be used. For example, a request for this support in Mozilla Firefox has existed since 2007, to no avail yet.
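To see what "plain text" means here: with Basic authentication the browser sends user:password merely base64-encoded in a Proxy-Authorization header (RFC 7617), and anybody who can see the traffic can decode it without any key. A minimal Python sketch; the user name and password are made up.

```python
import base64


def proxy_authorization(user: str, password: str) -> str:
    """The Proxy-Authorization header value a browser sends for Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Basic {token}"


def recover_credentials(header_value: str) -> tuple[str, str]:
    """Anybody who sees the header can reverse it; no key or secret needed."""
    scheme, _, token = header_value.partition(" ")
    if scheme != "Basic":
        raise ValueError(f"not a Basic credential: {scheme!r}")
    user, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return user, password


if __name__ == "__main__":
    value = proxy_authorization("joe", "s3cret")
    print("Proxy-Authorization:", value)  # Proxy-Authorization: Basic am9lOnMzY3JldA==
    print(recover_credentials(value))     # ('joe', 's3cret')
```

With PAM-backed accounts that decoded password is your real Unix login password, which is why the warning above matters.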

If you choose to go the unencrypted HTTP way and have your password exposed each time, this is the recipe for CentOS 6. First, edit your /etc/squid/squid.conf to contain the following (the default comments and the localnet, localhost and deny all rules should already be there):

# Example rule allowing access from your local networks.
# Adapt localnet in the ACL section to list your (internal) IP networks
# from where browsing should be allowed
http_access allow localnet
http_access allow localhost

# Proxy auth:
auth_param basic program /usr/lib64/squid/pam_auth
auth_param basic children 5
auth_param basic realm Authenticating Squid HTTP-proxy
auth_param basic credentialsttl 2 hours
cache_mgr root@my.server.com

# Authenticated users
acl password proxy_auth REQUIRED
http_access allow password

# And finally deny all other access to this proxy
# Allow for the (authenticated) world
http_access deny all

Reload Squid and your /var/log/messages will contain:

(pam_auth): Unable to open admin password file: Permission denied

This happens for the simple reason that Squid effectively runs as user squid, not as root, and that user cannot read the shadow password DB. LDAP would be much better for this (it is much better in all cases). The original permissions:

# ls -l /usr/lib64/squid/pam_auth
-rwxr-xr-x. 1 root root 15376 Oct 1 16:44 /usr/lib64/squid/pam_auth

The only reasonable fix is to run the authenticator as SUID root. This is generally a stupid and dangerous idea. For that reason we explicitly prevent anybody else from using the authenticator. Note that chgrp has to come first: on Linux, changing the group of an executable clears its SUID bit, even when root does it.

# chgrp squid /usr/lib64/squid/pam_auth
# chmod u+s /usr/lib64/squid/pam_auth
# chmod o= /usr/lib64/squid/pam_auth
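Before restarting, the result can be double-checked with a small Python sketch that inspects the mode and group. The intended end state is -rwsr-x--- with group squid; the function names are hypothetical and the check simply encodes the three conditions described above (SUID set, group squid may execute, others have no access).

```python
import grp
import os
import stat

AUTHENTICATOR = "/usr/lib64/squid/pam_auth"


def mode_ok(st_mode: int) -> bool:
    """SUID set, group may execute, others get no access at all."""
    return (
        bool(st_mode & stat.S_ISUID)
        and bool(st_mode & stat.S_IXGRP)
        and (st_mode & stat.S_IRWXO) == 0
    )


def check(path: str = AUTHENTICATOR, group: str = "squid") -> bool:
    """Print the ls-style mode and group of path; True if they are as intended."""
    st = os.stat(path)
    group_name = grp.getgrgid(st.st_gid).gr_name
    print(stat.filemode(st.st_mode), group_name, path)
    return mode_ok(st.st_mode) and group_name == group


if __name__ == "__main__" and os.path.exists(AUTHENTICATOR):
    print("OK" if check() else "permissions are NOT as intended")
```

If the SUID bit is missing, the chgrp most likely ran after the chmod u+s and cleared it; re-running chmod u+s fixes that.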

Restart Squid and the authentication will work for you.

Credit for this goes to the Squid documentation, the Squid wiki and Nikesh Jauhari's blog entry on Squid Password Authentication Using PAM.