CentOS 6 PHP 5.4 and 5.5 yum repository

Wednesday, February 19. 2014

I maintain RPM packages for PHP 5.4 and 5.5; see my earlier post about them.

As any sysadmin would expect, running the updates by hand was too much trouble. Since the native CentOS 6 way is a yum repository, I created one.

Get things going by installing the repository definition:

yum install \
  http://opensource.hqcodeshop.com/CentOS/6%20x86_64/Parallels%20Plesk%20Panel/plesk-php-repo-1.0-1.el6.noarch.rpm

After that, a simple yum install command:

yum install plesk-php55

... will yield something like this:

/opt/php5.5/usr/bin/php -v
PHP 5.5.9 (cli) (built: Feb 9 2014 22:04:05)
Copyright (c) 1997-2014 The PHP Group
Zend Engine v2.5.0, Copyright (c) 1998-2014 Zend Technologies

I'll be compiling new versions to keep my own box in shape.
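With the distro PHP and two extra versions on the same box, it is easy to lose track of which binary reports which version. A minimal Python sketch, assuming Python 3.7 or newer is available, that runs `php -v` on each binary path used in these posts and keeps the version number from the first output line. The function names are hypothetical helpers, not part of the packages.

```python
import re
import subprocess
from pathlib import Path

# The binaries shown in these posts; adjust the list to your box.
PHP_BINARIES = [
    "/usr/bin/php",
    "/opt/php5.4/usr/bin/php",
    "/opt/php5.5/usr/bin/php",
]

# First line of `php -v`, e.g. "PHP 5.5.9 (cli) (built: Feb 9 2014 22:04:05)".
VERSION_RE = re.compile(r"^PHP (\d+\.\d+\.\d+)")


def parse_php_version(output):
    """Return the version number from `php -v` output, or None."""
    match = VERSION_RE.match(output.strip())
    return match.group(1) if match else None


def installed_versions(paths=PHP_BINARIES):
    """Map every existing PHP binary in paths to the version it reports."""
    versions = {}
    for path in paths:
        if not Path(path).is_file():
            continue  # that PHP version is not installed
        result = subprocess.run([path, "-v"], capture_output=True, text=True)
        versions[path] = parse_php_version(result.stdout)
    return versions


if __name__ == "__main__":
    for path, version in installed_versions().items():
        print("%s: %s" % (path, version))
```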

Parallels Plesk Panel: Bug - DNS zone twice in DB

Sunday, February 16. 2014

Earlier I had trouble with a disabled DNS zone not staying disabled. I'm running version 11.5.30 Update #32.

The problem bugged me and I kept investigating. To debug, I enabled the DNS zone and actually transferred it with AXFR to an external server. There I realized that the SOA record was a bit strange. Further trials revealed that there was always one extra NS record. With that in mind, I went directly to the database to see what was stored there for the zone.

To access the MySQL database named psa, I needed its password; see KB article ID 170, "[How to] How can I access MySQL databases in Plesk?", for details. The database schema is not documented, but it has become familiar to me during all the years I've been sysadmining Plesk Panels. To get the ID for the DNS zone I ran:

SELECT *
FROM dns_zone
WHERE name = '-the-zone-';

And what do you know! There were two IDs for the given name. That is a big no-no. It's like having two heads. A freak of nature. It cannot happen. It is so illegal that there aren't even laws about it. To fix that, I renamed the one with the smaller ID:

UPDATE dns_zone
SET name = '-the-zone-_obsoleted', displayName = '-the-zone-_obsoleted'
WHERE id = -the-smaller-ID-;

After that, I manually refreshed the BIND records from the DB:

/usr/local/psa/admin/sbin/dnsmng --update -the-zone-

And confirmed the result from the raw BIND file:

less /var/named/chroot/var/-the-zone-

Now everything is in order. I'm hoping this will keep the zone disabled. It is now obvious to me why it happened: the database had become badly skewed.
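Since a duplicated name was the root cause, it may be worth scanning for other duplicated zone names before they bite. The core is a plain GROUP BY ... HAVING query, which should also run against psa on MySQL (MySQL has GROUP_CONCAT too). This Python sketch uses sqlite3 with a hypothetical, trimmed-down dns_zone table and made-up example.com rows only so that it runs without a Plesk box; the real table has more columns.

```python
import sqlite3

# Hypothetical stand-in for the psa.dns_zone table: only the columns used
# in this post (id, name, displayName) are modelled.
SCHEMA = "CREATE TABLE dns_zone (id INTEGER PRIMARY KEY, name TEXT, displayName TEXT)"

# Every zone name stored more than once, with the IDs of all copies.
FIND_DUPLICATES = """
SELECT name, COUNT(*) AS copies, GROUP_CONCAT(id) AS ids
FROM dns_zone
GROUP BY name
HAVING COUNT(*) > 1
"""


def find_duplicate_zones(conn):
    """Return (name, copies, ids) tuples for duplicated zone names."""
    return conn.execute(FIND_DUPLICATES).fetchall()


def demo_connection():
    """In-memory database with one duplicated zone (example data)."""
    conn = sqlite3.connect(":memory:")
    conn.execute(SCHEMA)
    conn.executemany(
        "INSERT INTO dns_zone (id, name, displayName) VALUES (?, ?, ?)",
        [
            (7, "example.com", "example.com"),
            (12, "example.com", "example.com"),
            (9, "example.net", "example.net"),
        ],
    )
    return conn


if __name__ == "__main__":
    print(find_duplicate_zones(demo_connection()))
```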

Triggering Adobe Flash Player update manually

Wednesday, February 12. 2014

No matter how much I think about it, it simply does not make any sense to me. Why on earth isn't there a button to manually update Adobe Flash Player? What good does it do to download the installer every single time you want to update? All the parts are already on your computer, but there is no reasonable way of telling it to:

Go! Update! Now!

With the help of an excellent tool, Windows Sysinternals Process Explorer, I snooped out the location and parameters of the update application.

On a 64-bit Windows

It is highly likely that your browser is 32-bit. You need to be some sort of hacker (like me) not to have a 32-bit browser. So the assumption is that this applies to you.

All the good parts are in C:\Windows\SysWOW64\Macromed\Flash\

On a 32-bit Windows

If your PC is old, then you'll have this. (Or, alternatively: you are a hacker running a 64-bit browser.)

All the good stuff is in C:\Windows\System32\Macromed\Flash\

Triggering the update

NOTE:

The version number of the application changes with each update. What I demonstrate here was valid at the time of writing, but I assure you the exact name of the application will be something else next month.

The location of the files is ...

For all browsers other than Internet Explorer:

FlashUtil32_12_0_0_43_Plugin.exe -update plugin

For Internet Explorer:

FlashUtil32_12_0_0_44_ActiveX.exe -update activex

Running that command as a regular user triggers the same process that would run during a Windows login. Since I rarely log in, the update almost never triggers for me: I simply put the computer to sleep, wake it up and unlock the screen, and that does not trigger the version check.
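Because the file name changes with every release, a hard-coded command will break next month. A minimal Python sketch, assuming Python 3 on Windows, that globs for whichever FlashUtil*_Plugin.exe or FlashUtil*_ActiveX.exe sits in the directories named above, picks the highest version number in the name, and runs it with the same arguments as the commands above. Treating the highest number as the one to run is an assumption, and the helper names are hypothetical.

```python
import glob
import os
import re
import subprocess

# Directories from this post: SysWOW64 on 64-bit Windows, System32 on 32-bit.
FLASH_DIRS = [
    r"C:\Windows\SysWOW64\Macromed\Flash",
    r"C:\Windows\System32\Macromed\Flash",
]

# Update arguments from this post, keyed by the suffix of the file name.
UPDATE_ARGS = {"Plugin": ["-update", "plugin"], "ActiveX": ["-update", "activex"]}

# Version part of e.g. FlashUtil32_12_0_0_43_Plugin.exe -> "12_0_0_43".
VERSION_RE = re.compile(r"FlashUtil\d+_([\d_]+)_(?:Plugin|ActiveX)\.exe$", re.IGNORECASE)


def version_key(path):
    """Numeric sort key, so that 12_0_0_100 sorts after 12_0_0_43."""
    match = VERSION_RE.search(os.path.basename(path))
    if not match:
        return ()
    return tuple(int(part) for part in match.group(1).split("_") if part)


def find_flash_util(directory, kind):
    """Return the highest-versioned FlashUtil*_<kind>.exe in directory, or None."""
    candidates = glob.glob(os.path.join(directory, "FlashUtil*_%s.exe" % kind))
    return max(candidates, key=version_key) if candidates else None


def update_command(kind):
    """Build the update command line for "Plugin" or "ActiveX", or None."""
    for directory in FLASH_DIRS:
        exe = find_flash_util(directory, kind)
        if exe:
            return [exe] + UPDATE_ARGS[kind]
    return None


if __name__ == "__main__":
    command = update_command("Plugin")
    if command:
        subprocess.call(command)  # runs as the current, regular user
```

Pass "ActiveX" instead of "Plugin" to update the Internet Explorer flavour.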

This isn't the only stupid thing Adobe does. They don't take your security seriously. Shame on them!

Advanced mod_rewrite: FastCGI Ruby on Rails w/ HTTPS

Friday, February 7. 2014

mod_rewrite comes in handy on a number of occasions, but once a rewrite goes beyond the most trivial cases, understanding exactly how the rules are processed is very, very difficult. The documentation is adequate, but the information is spread across a number of configuration directives, and it is a challenging task to put it all together.

RewriteRule order of processing

Apache has the following levels of configuration, from top to bottom:

- Server level

- Virtual host level

- Directory / Location level

- Filesystem level (.htaccess)

Typically, configuration directives take effect from bottom to top: a lower-level directive overrides any upper-level directive. This is also the case with mod_rewrite. A RewriteRule in a .htaccess file is processed first, and any rules on upper levels after it, in reverse order of level. See the documentation of the RewriteOptions directive; it clearly says: "Rules inherited from the parent scope are applied after rules specified in the child scope". Rules on the same level are executed from top to bottom within a file. You can think of all the Include directives as combining everything into one large configuration file, so the order can be determined quite easily.

However, rather surprisingly, this order of processing contradicts the most efficient order of execution. The mod_rewrite technical details documentation says:

Unbelievably mod_rewrite provides URL manipulations in per-directory context, i.e., within .htaccess files, although these are reached a very long time after the URLs have been translated to filenames. It has to be this way because .htaccess files live in the filesystem, so processing has already reached this stage. In other words: According to the API phases at this time it is too late for any URL manipulations.

This results in a looping approach for any .htaccess rewrite rules. The documentation of the RewriteRule directive's PT|passthrough flag says:

The use of the [PT] flag causes the result of the RewriteRule to be passed back through URL mapping, so that location-based mappings, such as Alias, Redirect, or ScriptAlias, for example, might have a chance to take effect.

and

The PT flag implies the L flag: rewriting will be stopped in order to pass the request to the next phase of processing.

Note that the PT flag is implied in per-directory contexts such as <Directory> sections or in .htaccess files.

What that means:

- The L flag does not stop everything; in particular, it does not stop RewriteRule processing in a .htaccess file. It only ends the current pass, and the rewritten request goes through the rules again.

- All RewriteRules, yes, all of them, are matched over and over again in a .htaccess file. That results in an endless loop if they keep matching. Use RewriteCond to stop that.

- A RewriteRule with the R flag pointing to the same directory will just make another loop. The R flag can be used to exit the looping by redirecting to some other directory.

- Outside per-directory context (.htaccess files and <Directory> sections), the L flag really does stop processing, and this looping does not happen.

So, the moral of all this is that doing any rewriting at the .htaccess level performs really badly and will cause unexpected results in the form of looping.
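A toy Python model of the looping described above may make it more concrete. It is not mod_rewrite's actual algorithm: it ignores per-directory prefix stripping, the -f check, flags and internal redirects, and simply re-runs the whole ruleset whenever a pass changed the path. The 10-pass cap is an assumption borrowed from Apache's default LimitInternalRecursion. It shows Rule 3 from the case study below piling up dispatch.fcgi/ prefixes when unguarded, and settling after a single rewrite once a RewriteCond-style check excludes already-dispatched paths.

```python
import re

# Assumption: stop after 10 passes, like Apache's default LimitInternalRecursion.
MAX_PASSES = 10


def run_per_dir(path, rules, max_passes=MAX_PASSES):
    """Toy model of per-directory (.htaccess) rewriting.

    rules is a list of (pattern, substitution, condition) tuples, where
    condition is None or a callable that gets the current path, playing
    the role of RewriteCond. Every pass applies all rules top to bottom;
    if the path changed, the request goes round again from the first rule.
    Returns (final_path, passes_used, hit_limit).
    """
    for passes in range(1, max_passes + 1):
        before = path
        for pattern, substitution, condition in rules:
            if condition is not None and not condition(path):
                continue
            if re.search(pattern, path):
                path = re.sub(pattern, substitution, path, count=1)
        if path == before:
            return path, passes, False
    return path, max_passes, True


# Rule 3 without and with its second RewriteCond (paths relative, as in .htaccess).
UNGUARDED = [(r"^(.*)$", r"dispatch.fcgi/\1", None)]
GUARDED = [(r"^(.*)$", r"dispatch.fcgi/\1", lambda p: "dispatch.fcgi" not in p)]

if __name__ == "__main__":
    print(run_per_dir("status/update", UNGUARDED))
    print(run_per_dir("status/update", GUARDED))
```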

Case study: Ruby on Rails application

The requirements are:

- The application is using Ruby on Rails

- Ruby is interfaced via mod_fcgid, which implements FastCGI

- All non-HTTPS requests should be redirected to HTTPS for security reasons

- There is one exception to that rule: a legacy entry point for status updates must not be forced to HTTPS

- The legacy entry point uses HTTP Basic authentication, which does not work very well with FastCGI.

That does not sound like much, but in practice it is.

Implementation 1 - failure

To get a Ruby on Rails application running via FastCGI, there are plenty of examples and other information available. Something like this in .htaccess will do the trick:

RewriteCond %{REQUEST_FILENAME} !-f
RewriteRule ^(.*)$ /dispatch.fcgi/$1 [QSA]

The dispatch.fcgi comes with the RoR application, and mod_rewrite is only needed to make the Front Controller pattern required by the framework function properly.

To get FastCGI (via mod_fcgid) working, a simple AddHandler fcgid-script .fcgi will do the trick.

With these, the application works. Then there is the HTTPS part. The hosting setup allows editing parts of the virtual host template, so I added my own section of configuration; the rest of the file cannot be changed:

<VirtualHost _default_:80 >
  <IfModule mod_rewrite.c>
    RewriteEngine On
    RewriteCond %{HTTP_HOST} ^www.my.service$ [NC]
    RewriteRule ^(.*)$ http://my.service$1 [L,R=301]
  </IfModule>
  RewriteCond %{HTTPS} !=on
  RewriteCond %{REQUEST_URI} !^/status/update
  RewriteRule ^(.*)$ https://%{HTTP_HOST}$1 [R=301,QSA,L]
</VirtualHost>

The .htaccess file was taken from the RoR application:

# Rule 1:
# Empty request
RewriteRule ^$ index.html [QSA]

# Rule 2:
# Append .html to the end.
RewriteRule ^([^.]+)$ $1.html [QSA]

# Rule 3:
# All non-files are processed by Ruby-on-Rails
RewriteCond %{REQUEST_FILENAME} !-f
RewriteRule ^(.*)$ /dispatch.fcgi/$1 [QSA]

It failed, mainly because the HTTPS rewriting was done too late. There was a lot of repetition in the rewritten URLs, and the HTTPS redirect was the last thing done, after /dispatch.fcgi/ had already been added, so the result looked rather funny and not even close to what I was hoping for.

Implementation 2 - success

After the failure, I started really studying how the rewrite mechanism works.

The first thing I did was move the HTTPS redirect out of the virtual host configuration down to the not-so-well-performing .htaccess level. The next thing was to get rid of the dispatch.fcgi/dispatch.fcgi/dispatch.fcgi that the looping kept adding. During testing I noticed that I hadn't accounted for the Basic authentication in any way.

The resulting .htaccess file is here:

# Rule 0:
# All requests should be HTTPS-encrypted,
# except: message reading and internal RoR-processing
RewriteCond %{HTTPS} !=on
RewriteCond %{REQUEST_URI} !^/status/update
RewriteCond %{REQUEST_URI} !^/dispatch.fcgi
RewriteRule ^(.*)$ https://%{HTTP_HOST}/$1 [R=301,QSA,skip=4]

# Rule 0.1
# Make sure that any HTTP Basic authorization is transferred to FastCGI env
RewriteRule .* - [E=HTTP_AUTHORIZATION:%{HTTP:Authorization}]

# Rule 1:
# Empty request
RewriteRule ^$ index.html [QSA,skip=1]

# Rule 2:
# Append .html to the end, but don't allow this thing to loop multiple times.
RewriteCond %{REQUEST_URI} !\.html$
RewriteRule ^([^.]+)$ $1.html [QSA]

# Rule 3:
# All non-files are processed by Ruby-on-Rails
RewriteCond %{REQUEST_FILENAME} !-f
RewriteCond %{REQUEST_URI} !/dispatch.fcgi
RewriteRule ^(.*)$ /dispatch.fcgi/$1 [QSA]

Now it fulfills all my requirements and it works!

Testing the thing

To develop this and make sure all the RewriteRules worked as expected and didn't interfere with each other in a bad way, I had to take a test-driven approach. I created a set of "unit" tests in the form of manually executed wget and curl requests. There was no automation, just eyeballing the results. My tests were:

- Test the index-page, must redirect to HTTPS:

- wget http://my.service/

- Test the index-page, no redirects, must display the page:

- wget https://my.service/

- Test the legacy entry point, must not redirect to HTTPS:

- curl --user test:password http://my.service/status/update

- Test an inner page, must redirect to HTTPS of the same page:

- Test an inner page, no redirects, must return the page:

Those tests cover all the functionality defined in the above .htaccess-file.
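The expected outcome of each test can also be written down as data. This Python sketch encodes what Rule 0 should do for a given URL; it does not talk to the server. Comparing its answer with the Location header printed by `curl -sI <url>` would replace some of the eyeballing. /some/page is a hypothetical inner page, since the post does not name one, and the function name is made up for illustration.

```python
from urllib.parse import urlsplit, urlunsplit

# Paths excluded by Rule 0's RewriteConds.
EXEMPT_PREFIXES = ("/status/update", "/dispatch.fcgi")


def expected_redirect(url):
    """Return the https:// URL Rule 0 should redirect to, or None.

    Plain-HTTP requests go to HTTPS unless the path starts with one of
    EXEMPT_PREFIXES; requests already on HTTPS are left alone. The query
    string is carried over.
    """
    parts = urlsplit(url)
    if parts.scheme == "https" or parts.path.startswith(EXEMPT_PREFIXES):
        return None
    return urlunsplit(("https", parts.netloc, parts.path or "/", parts.query, ""))


# The checks from the list above, plus a hypothetical inner page.
CASES = [
    ("http://my.service/", "https://my.service/"),
    ("https://my.service/", None),
    ("http://my.service/status/update", None),
    ("http://my.service/some/page?x=1", "https://my.service/some/page?x=1"),
    ("https://my.service/some/page", None),
]

if __name__ == "__main__":
    for url, expected in CASES:
        actual = expected_redirect(url)
        print("%-45s %s" % (url, "ok" if actual == expected else "MISMATCH"))
```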

Logging rewrites

Getting all this together would have been impossible without rewrite logging. The caveat is that logging must be defined at the virtual host level. This is what I did:

RewriteLogLevel 3
RewriteLog logs/rewrite.log

Reading a level-3 log file is very tedious. The rows are extremely long and all the good parts are at the end. Here is a single log line split into something humans can read:

1.2.3.219 - -
[06/Feb/2014:14:56:28 +0200]
[my.service/sid#7f433cdb9210]
[rid#7f433d588c28/initial] (3)
[perdir /var/www/my.service/public/]
add path info postfix:
/var/www/my.service/public/status/update.html ->
/var/www/my.service/public/status/update.html/update

It simply says that a .htaccess file was being processed and that it contained a rule which was applied. The log file clearly shows the order in which the rules are executed. However, most of the regular expressions are '^(.*)$', so it is impossible to tell the rules apart simply by reading the log file.
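Splitting those long rows by hand gets old quickly. A Python sketch that splits one RewriteLog line into named fields. The layout is inferred from the single example above, so other message types may need a tweak, and because the split example does not show whether a space separates the sid and rid blocks, the pattern accepts both. The function name is hypothetical.

```python
import re

# RewriteLog layout as seen in the example above:
# remote - - [time] [host/sid#...][rid#.../initial] (level) [perdir ...] message
LOG_RE = re.compile(
    r"^(?P<remote>\S+) \S+ \S+ "
    r"\[(?P<time>[^\]]+)\] "
    r"\[(?P<host>[^/\]]+)/sid#(?P<sid>[0-9a-f]+)\]\s*"
    r"\[rid#(?P<rid>[0-9a-f]+)/(?P<kind>[^\]]+)\] "
    r"\((?P<level>\d)\) "
    r"(?:\[perdir (?P<perdir>[^\]]+)\] )?"
    r"(?P<message>.*)$"
)

# The example line from this post, joined back into one row.
SAMPLE = (
    "1.2.3.219 - - [06/Feb/2014:14:56:28 +0200] "
    "[my.service/sid#7f433cdb9210][rid#7f433d588c28/initial] (3) "
    "[perdir /var/www/my.service/public/] add path info postfix: "
    "/var/www/my.service/public/status/update.html -> "
    "/var/www/my.service/public/status/update.html/update"
)


def split_rewrite_log_line(line):
    """Return the fields of one RewriteLog line as a dict, or None."""
    match = LOG_RE.match(line.rstrip("\n"))
    return match.groupdict() if match else None


if __name__ == "__main__":
    for key, value in split_rewrite_log_line(SAMPLE).items():
        print("%-8s %s" % (key, value))
```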

Final words

This is an advanced topic. Most sysadmins and developers never have to deal with complexity of this magnitude. If you do, I hope this helps. It took me quite a while to put all those rules together.

SplashID wasted my entire password database

Wednesday, February 5. 2014

I've been using SplashID as my password solution. See my earlier post about that. Today I tried to log into the application to retrieve a password, but it turned out my user account had been changed to null. Well... that's not reassuring.

After the initial shock, I filed a support ticket with them, but I'm not expecting any miracles. As far as I'm concerned, the database is lost. The next thing I did was check my trustworthy(?) Acronis True Image backups. I had them running on a daily rotation, and this turned out to be the first time I actually needed them in a real situation.

They hid the "Restore files and directories" option well. My laptop is configured to back up the entire disk, so the default recovery option is to restore the entire disk, which in this case seems a bit overkill. But behind the gear icon there actually is such an option. After discovering it (it took me a while reading the help), the recovery was user-friendly and intuitive enough. I chose to restore yesterday's backup to the original location. The recovery went fine, but the SplashID database was already broken at that point. I simply restored the two-day-old backup, and that one seemed to be intact.

Luckily, I don't recall any additions or changes to my passwords during the last two days. It looks like I walked away from this incident without harm.

Update 7th Feb 2014:

I got a reply to my support ticket. SplashData says that the password database was lost due to a bug (actually they didn't use that word, but they cannot fool me). The bug has been fixed in a later version of SplashID. Luckily I had a backup to restore from. IMHO the software should do a better job of notifying about new versions.

Parallels Plesk Panel: Disabling DNS for a domain

Tuesday, January 28. 2014

Parallels has "improved" their support policy. Now you need a support contract or pre-purchased incidents just to report a bug. Because my issue is not on my own box (where I have support) but on a customer's server, there is nobody left for me to complain to. So, here goes:

For some reason, on Parallels Plesk Panel 11.5.30 Update #30 (the latest version at the time of writing), one single domain, always the same one, adds a DNS zone to /etc/bind.conf. That would be fully understandable if that particular domain had DNS enabled. It doesn't. The web GUI clearly shows the DNS service for the domain as switched off.

I investigated this and found that a couple of commands will temporarily fix the issue:

/usr/local/psa/bin/dns --off -the-domain-

/usr/local/psa/admin/sbin/dnsmng --remove -the-domain-

The first command hits the DNS with a big hammer to make sure it is turned off. The second command cleans the leftovers out of /etc/bind.conf and properly notifies BIND about the configuration change. The problem is that the zone keeps popping back. I don't know exactly what makes it re-appear, but it has done so a couple of times for me. That is really, really annoying.

Parallels: You're welcome. Fix this for the next release, ok?

Rest of you: hopefully this helps. I spent a nice while debugging really misguided DNS queries just to figure out that a zone had DNS enabled.
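Since the zone keeps popping back, a check run from cron would at least notice when it happens. A hypothetical Python helper that looks for a zone "name" statement (standard BIND syntax) for the given domain in /etc/bind.conf, the path given in this post. It only reports; it does not run the fix commands. Printing instead of exiting with an error code is a deliberate choice: cron mails any output, which serves as the alert.

```python
import re
import sys

BIND_CONF = "/etc/bind.conf"  # path as given in this post


def zone_declared(conf_text, domain):
    """True if conf_text has a `zone "<domain>"` statement (trailing dot allowed)."""
    pattern = r'^\s*zone\s+"%s\.?"' % re.escape(domain)
    return re.search(pattern, conf_text, re.MULTILINE) is not None


if __name__ == "__main__" and len(sys.argv) == 2:
    with open(BIND_CONF) as conf:
        if zone_declared(conf.read(), sys.argv[1]):
            # Any output makes cron send mail, which is the alert.
            print("zone %s is back in %s" % (sys.argv[1], BIND_CONF))
```

Run it from cron with the domain as the only argument.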

Nginx with LDAP-authentication

Monday, December 23. 2013

I've been a fan of Nginx for a couple of years. It performs so much better than its main competitor, Apache HTTP Server. On the negative side, Nginx does not have all the bells and whistles of the software that has existed since the dawn of the Internet.

So I have to do a lot more myself to get the gain. I package my own Nginx RPM and have modified a couple of the add-on modules. My fork of the Nginx LDAP authentication module can be found at https://github.com/HQJaTu/nginx-auth-ldap.

It adds the following functionality to Valery's version:

- per-location authentication requirements, without defining the same server again for each different authorization requirement

- His version handles different requirements by defining new servers.

- They are not actually new servers; the same server just uses a different authorization requirement for an Nginx location.

- case sensitive user accounts, just like all other web servers have

- One of the services in my Nginx is Trac. It works like any other *nix software: user accounts are case sensitive.

- However, in LDAP pretty much nothing is. The default schema defines most things as case insensitive.

- The difference must be compensated for during authentication into Nginx. That's why I added :caseExactMatch: to the LDAP search filter.

- LDAP-users can be specified with UID or DN

- In Apache, a required user is typically specified as require user admin.

- In the LDAP-oriented approach, the module requires users to be specified as a DN (for the non-LDAP people: a DN is a unique name for an entry in the LDAP directory).

- LDAP does have the UID (user identifier), so in my version it is also a valid requirement.
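For reference, the caseExactMatch trick relies on the extensible-match filter syntax from RFC 4515, (attribute:matchingRule:=value). A Python sketch of building such a filter for a user name, with the RFC 4515 escaping of special characters applied to the value. The function names are hypothetical and this is not the module's code (which is C); it only shows the shape of the filter.

```python
def escape_filter_value(value):
    """Escape a value for use in an LDAP search filter (RFC 4515)."""
    replacements = {"\\": r"\5c", "*": r"\2a", "(": r"\28", ")": r"\29", "\0": r"\00"}
    return "".join(replacements.get(ch, ch) for ch in value)


def case_exact_filter(attribute, value):
    """Build an extensible-match filter that compares case-sensitively."""
    return "(%s:caseExactMatch:=%s)" % (attribute, escape_filter_value(value))


if __name__ == "__main__":
    print(case_exact_filter("uid", "Admin"))
```

With a plain (uid=admin) filter, "Admin" and "admin" would typically match the same entry under the default schema; the extensible match keeps them apart, matching Trac's view of user names.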

IMHO those changes make the authentication much, much more useful.

Thanks Valery for the original version!

Younited cloud storage

Monday, December 9. 2013

I finally got an account on younited. It is a cloud storage service by F-Secure, the Finnish security company. They boast that it is secure, can be trusted, and that the data is hosted in Finland, out of reach of those agencies with three-letter acronyms.

The service offers you 10 GiB of cloud storage and plenty of clients for it. Currently you can get in only by invitation. The Windows client looks like this:

Looks nice, but ...

I've been using Wuala for a long time. Its functionality is pretty much the same: you put your files into a secure cloud and can access them via a number of clients. The Wuala UI works, the transfers are secure, the data is hosted on Amazon in Germany, and the company is Swiss, owned by the French company LaCie. When compared with Younited, there is a huge difference, and it is easy to see which of the services has been around for years and which one is in open beta.

Given all the trustworthiness and security and all, the bad news is: in its current state, Younited is completely useless. It would work if you had one picture, one MP3 and one Word document to store. Its only idea of storing items is to sync them. I don't want to do only that! I want to create a folder and a subfolder under it and store a folder full of files there! I need my client storage and my cloud storage to be separate entities. Sync is good only for a handful of things, but in F-Secure's mind that's the only way to go. They are in beta, but it would be good to start listening to their users.

If only Wuala would stop using Java in their clients, I'd stick with them.

CentOS 6 PHP 5.4 and 5.5 for Parallels Plesk Panel 10+

Friday, November 29. 2013

One of my servers is running Parallels Plesk Panel 11.5 on CentOS 6. CentOS is a good platform for web hosting, since it is robust, well maintained and gets updates for a very long time. The bad thing is that version numbers don't change during all those maintenance years. In many cases that is a very good thing, but when it comes to web development, once in a while it is nice to get upgraded versions and the new features that come with them.

In version 10, Parallels Plesk introduced the possibility of choosing the PHP version. It is possible to run PHP via Apache's mod_php, but Parallels Plesk does not support that; the only supported options are running PHP via CGI or FastCGI. Not having mod_php is not a real problem, as FastCGI actually performs better on a web box once the load gets high enough. The problem is that you cannot stack PHP installations on top of each other: different versions of a package tend to reside in the exact same directories. That's something every sysadmin learns early on the learning curve.

CentOS, being an RPM distro, can have relocatable RPM packages. Still, if you install different versions of the same package into different directories, the package manager complains that a version has already been installed. To solve this and get multiple PHP versions into my Plesk, I had to prepare the packages myself.

I started with Andy Thompson's site webtatic.com. He has prepared CentOS 6 packages for PHP 5.4 and PHP 5.5. His source packages are mirrored at http://nl.repo.webtatic.com/yum/el6/SRPMS/. He did a really good job and the packages are excellent. However, the remaining problem is still there: now we have a choice between the default CentOS PHP 5.3.3 and Andy's PHP 5.4/5.5, but only one of them can be installed at a time, because they install into the same directories.

My packages are at http://opensource.hqcodeshop.com/CentOS/6 x86_64/Parallels Plesk Panel/ and they can co-exist with each other and CentOS standard PHP. The list of changes is:

- Interbase-support: dropped

- MySQL (the old one): dropped

- mysqlnd is there, you shouldn't be using anything else anyway

- Thread safe (ZTS) and embedded versions: dropped

- CLI and CGI/FastCGI are there, the versions are heavily optimized to be used in a Plesk box

- php-fpm won't work, guaranteed!

- I did a sloppy job with that. In principle, you could run any number of php-fpm daemons on the same machine, but ... I didn't do the extra work required, as Plesk cannot benefit from that.

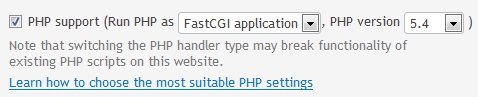

After a standard RPM install, you need to tell Plesk that there is another PHP. Read all about that in the Administrator's Guide, Parallels Plesk Panel 11.5, section Multiple PHP Versions. This is what I ran:

/usr/local/psa/bin/php_handler --add -displayname 5.4 \
  -path /opt/php5.4/usr/bin/php-cgi \
  -phpini /opt/php5.4/etc/php.ini \
  -type fastcgi

After doing that, in the web hosting dialog there is a choice:

Note how I intentionally named PHP version 5.4.22 just 5.4. My intention is to keep updating the 5.4 series, not to register a new PHP handler for each minor update.

Also in a shell:

-bash-4.1$ /usr/bin/php -v
PHP 5.3.3 (cli) (built: Jul 12 2013 20:35:47)
-bash-4.1$ /opt/php5.4/usr/bin/php -v
PHP 5.4.22 (cli) (built: Nov 28 2013 15:54:42)
-bash-4.1$ /opt/php5.5/usr/bin/php -v
PHP 5.5.6 (cli) (built: Nov 28 2013 18:20:00)

Nice! Now I can have a choice for each web site. Btw. Andy, thanks for the packages.

Parallels Plesk Panel: Disabling local mail for a subscription

Thursday, November 28. 2013

Disabling mail cannot be done via the GUI. Going to the subscription settings and unchecking the "Activate mail service on domain" setting does not do the trick. Mail cannot be disabled for a single domain; it has to be disabled for the entire subscription. See KB Article ID: 113937 about that.

I found a website saying that the domain command's -mail_service false setting would help. It does not. For example, this does not do the trick:

/usr/local/psa/bin/domain -u domain.tld -mail_service false

It looks like this in the Postfix log /usr/local/psa/var/log/maillog:

postfix/pickup[20067]: F2B5222132: uid=0 from=<root>
postfix/cleanup[20252]: F2B5222132: message-id=<20131128122425.F2B5222132@da.server.com>
postfix/qmgr[20068]: F2B5222132: from=<root@da.server.com>, size=4002, nrcpt=1 (queue active)
postfix-local[20255]: postfix-local: from=root@da.server.com, to=luser@da.domain.net, dirname=/var/qmail/mailnames
postfix-local[20255]: cannot chdir to mailname dir luser: No such file or directory
postfix-local[20255]: Unknown user: luser@da.domain.net
postfix/pipe[20254]: F2B5222132: to=<luser@da.domain.net>, relay=plesk_virtual, delay=0.04, delays=0.03/0/0/0, dsn=2.0.0, status=sent (delivered via plesk_virtual service)
postfix/qmgr[20068]: F2B5222132: removed

Not cool.

However, KB Article ID: 116927 is more helpful. It offers the mail command. For example, this does the trick:

/usr/local/psa/bin/mail --off domain.tld

Now my mail leaves the box:

postfix/pickup[20067]: 5218222135: uid=10000 from=<user>
postfix/cleanup[20692]: 5218222135: message-id=<mediawiki_0.5297385c4d15f5.15419884@da.server.com>
postfix/qmgr[20068]: 5218222135: from=<user@da.server.com>, size=1184, nrcpt=1 (queue active)
postfix/smtp[20694]: certificate verification failed for aspmx.l.google.com[74.125.136.27]:25: untrusted issuer /C=US/O=Equifax/OU=Equifax Secure Certificate Authority
postfix/smtp[20694]: 5218222135: to=<luser@da.domain.net>, relay=ASPMX.L.GOOGLE.COM[74.125.136.27]:25, delay=1.1, delays=0.01/0.1/0.71/0.23, dsn=2.0.0, status=sent (250 2.0.0 OK 1385642077 e48si8942242eeh.278 - gsmtp)
postfix/qmgr[20068]: 5218222135: removed

Cool!
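The telling difference between the two logs is the relay field: relay=plesk_virtual means Plesk delivered the message locally (where it hit Unknown user), while in the second log it went out to Google's MX. A Python sketch that pulls the queue ID, recipient, relay and status out of such delivery lines. The regular expression is written against the lines shown in this post, so other Postfix line variants may need adjusting; the helper names are hypothetical.

```python
import re
import sys

# A Postfix delivery line, e.g.
# postfix/pipe[20254]: F2B5222132: to=<...>, relay=plesk_virtual, ..., status=sent (...)
DELIVERY_RE = re.compile(
    r"postfix/\w+\[\d+\]: (?P<queue_id>[0-9A-F]+): "
    r"to=<(?P<to>[^>]*)>, relay=(?P<relay>[^,]+),.*?status=(?P<status>\w+)"
)


def deliveries(lines):
    """Yield (queue_id, recipient, relay, status) for every delivery line."""
    for line in lines:
        match = DELIVERY_RE.search(line)
        if match:
            yield match.group("queue_id", "to", "relay", "status")


def delivered_locally(relay):
    """Plesk's local delivery shows up as relay=plesk_virtual in these logs."""
    return relay == "plesk_virtual"


if __name__ == "__main__" and len(sys.argv) == 2:
    with open(sys.argv[1]) as log:
        for queue_id, to, relay, status in deliveries(log):
            where = "LOCAL" if delivered_locally(relay) else "remote"
            print("%s %s %s %s" % (queue_id, to, where, status))
```

Pass the log path, e.g. /usr/local/psa/var/log/maillog, as the only argument.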

Vim's comment line leaking is annoying! Part 2

Monday, November 25. 2013

Here is my previous blog entry about Vim's comment leaking.

It looks like my instructions are not valid anymore. When I launch a fresh vim and do the initial check:

:set formatoptions

As expected, it returns:

formatoptions=crqol

However, running my previous instruction:

:set formatoptions-=cro

does not change the formatting. Darn! I don't know what changed, but apparently you cannot remove multiple flags at once. The new way of doing it is:

:set formatoptions-=c formatoptions-=r formatoptions-=o

After that, the current status check will return:

formatoptions=ql

Now, the comments do not leak anymore.
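A likely explanation, going by Vim's own :help for :set-=: when an option is a list of flags, the value being removed must appear exactly as it does in the option, and the help recommends removing flags one by one. crqol contains c, r and o, but not the string cro, so nothing is removed; the old command only works when those flags happen to sit next to each other in that order. The Python function below is a simplified, hypothetical model of that rule, not Vim's code.

```python
def remove_flags(option, value):
    """Model of `:set option-=value` for a flag-list option such as formatoptions.

    value is removed only where it appears verbatim in option;
    otherwise option is returned unchanged, with no error.
    """
    index = option.find(value)
    if index == -1:
        return option
    return option[:index] + option[index + len(value):]


if __name__ == "__main__":
    print(remove_flags("crqol", "cro"))  # "cro" is not a substring: unchanged
    options = "crqol"
    for flag in "cro":  # one flag at a time, as the help recommends
        options = remove_flags(options, flag)
    print(options)
```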

Apache configuration: Exclude Perl-execution for a single directory

Wednesday, November 20. 2013

My attempt to distribute the Huawei B593 exploit tool failed yesterday. Apparently people could not download the source code and got an HTTP/500 error instead.

The reason for the failure was that Apache HTTPd actually executed the Perl script as a CGI script. Naturally, it failed miserably due to missing dependencies. Also, the error output was not very CGI-compliant, so Apache chose to dislike it and returned an error instead. At the same time, my server does have runnable Perl scripts, for example the DNS tester. So the problem was: how to disable script execution for a single directory while allowing it for the rest of the virtual host?

Solution:

With Google, I found somebody asking something similar for ColdFusion (who uses that nowadays?), and adapted it to my needs. I created a .htaccess file with the following content:

<Files ~ (\.pl$)>
  SetHandler text/html
</Files>

Ta daa! It does the trick! It overrides the global setting and treats the Perl code as regular HTML, making the download possible.

Authenticating HTTP-proxy with Squid

Thursday, October 24. 2013

Every now and then, you don't want to approach a web site from your own address. There may be a number of "valid" reasons for doing that besides criminal activity. Even YouTube has geoIP limitations for certain live feeds. Or you may be a road warrior reaching the Internet from a different IP every time and want to even that out.

Anyway, there is a simple way of doing that: expose an HTTP proxy to the Net and configure your browser to use it. Whenever you choose to expose something to the wild-wild-net, be very aware that you're also opening a door for The Bad Guys. In this case, if somebody gets a hint that they can act anonymously via your server, they'd be more than happy to do so. To keep all The Bad Guys out of your own personal proxy, some sort of authentication mechanism would be a very good idea.

Luckily, my weapon of choice, the Squid caching proxy, has a number of pluggable authentication mechanisms built in. On Linux, the simplest one to use is the already existing Unix user accounts. BEWARE! This literally means sending your password over the wire in plain text with every request you make. At present, only Google's Chrome can access Squid via HTTPS to protect your proxy password, and even that can be quirky: a proxy autoconfig needs to be used. For example, Mozilla Firefox has had a support request open since 2007, but to no avail yet.

If you choose to go the unencrypted HTTP way and have your password exposed each time, this is the recipe for CentOS 6. First, edit your /etc/squid/squid.conf to contain the following (the stock localnet, localhost and deny all rules should already be there):

# Example rule allowing access from your local networks.
# Adapt localnet in the ACL section to list your (internal) IP networks
# from where browsing should be allowed
http_access allow localnet
http_access allow localhost

# Proxy auth:
auth_param basic program /usr/lib64/squid/pam_auth
auth_param basic children 5
auth_param basic realm Authenticating Squid HTTP-proxy
auth_param basic credentialsttl 2 hours
cache_mgr root@my.server.com

# Authenticated users
acl password proxy_auth REQUIRED
http_access allow password

# And finally deny all other access to this proxy
# Allow for the (authenticated) world
http_access deny all

Reload Squid and your /var/log/messages will contain:

(pam_auth): Unable to open admin password file: Permission denied

This happens for the simple reason that Squid effectively runs as user squid, not as root, and that user cannot read the shadow password DB. LDAP would be much better for this (it is much better in all cases). The original permissions:

# ls -l /usr/lib64/squid/pam_auth
-rwxr-xr-x. 1 root root 15376 Oct 1 16:44 /usr/lib64/squid/pam_auth

The only reasonable fix is to run the authenticator as SUID root. This is generally a stupid and dangerous idea, so we explicitly disallow use of the authenticator by anybody else:

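# chgrp squid /usr/lib64/squid/pam_auth
# chmod u+s /usr/lib64/squid/pam_auth
# chmod o= /usr/lib64/squid/pam_auth

The group is changed first on purpose: on Linux, changing the group of an executable clears its set-user-ID bit, even when root does it.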

Restart Squid and the authentication will work for you.
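A SUID-root binary is exactly the kind of thing that should not silently drift, so a quick check of the intended end state can be handy: set-user-ID on, group execute on, nothing at all for others, and group squid. Starting from the original rwxr-xr-x, that is mode 4750 (rwsr-x---). A Python sketch with hypothetical helper names; it only reports and changes nothing.

```python
import grp
import os
import stat

AUTHENTICATOR = "/usr/lib64/squid/pam_auth"  # path from this post


def describe_mode(mode):
    """Report the permission bits the chgrp/chmod steps above aim for."""
    return {
        "setuid": bool(mode & stat.S_ISUID),
        "group_can_execute": bool(mode & stat.S_IXGRP),
        "others_have_no_access": (mode & stat.S_IRWXO) == 0,
    }


def check_authenticator(path=AUTHENTICATOR, group="squid"):
    """describe_mode() for path, plus whether its group is `group`."""
    info = os.stat(path)
    result = describe_mode(info.st_mode)
    result["group_is_" + group] = grp.getgrgid(info.st_gid).gr_name == group
    return result


if __name__ == "__main__" and os.path.exists(AUTHENTICATOR):
    for check, ok in sorted(check_authenticator().items()):
        print("%-22s %s" % (check, "yes" if ok else "NO"))
```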

Credit for this goes to the Squid documentation, the Squid wiki and Nikesh Jauhari's blog entry Squid Password Authentication Using PAM.

Parallels Plesk Panel 11 RPC API, part 2

Thursday, October 17. 2013

My adventures with Parallels Plesk Panel's API continued. My previous fumblings can be found here. A fully working application suddenly started to say:

11003: PleskAPIInvalidSecretKeyException : Invalid secret key usage. Please check logs for details.

Ok. What does that mean? Google (my new favorite company) found nothing with that phrase or error code. Where is the log they refer to?

After a while I bumped into /usr/local/psa/admin/logs/panel.log. It said:

2013-10-16T11:34:35+03:00 ERR (3) [panel]: Somebody tries to use the secret key for API RPC "-my-super-secret-API-key-" from "2001:-my-IPv6-address-"

Running:

/usr/local/psa/bin/secret_key --list

revealed that the secret key had been created for an IPv4 address, which used to work, but apparently one of those Micro-Updates changed the internal policy to use IPv6 when one is available.

Once I realized that, it was an easy fix. The log displayed the IP address, so I just created a new API key with the secret_key utility, and everything started to work again.
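A key registered for an IPv4 address will not match a request that now arrives over IPv6, and the panel.log line is where the address actually used shows up. A Python sketch that extracts that address and reports its family. 2001:db8::1 and 192.0.2.10 in the check are documentation addresses standing in for the elided real ones, and "KEY" stands in for the elided secret; the function name is hypothetical.

```python
import ipaddress
import re

# Tail of the panel.log line above: ... from "<client address>"
FROM_RE = re.compile(r'from "([^"]+)"\s*$')


def client_address(log_line):
    """Return the client address of a secret-key error line, or None."""
    match = FROM_RE.search(log_line)
    if not match:
        return None
    try:
        return ipaddress.ip_address(match.group(1))
    except ValueError:
        return None  # elided or malformed address


if __name__ == "__main__":
    line = ('2013-10-16T11:34:35+03:00 ERR (3) [panel]: Somebody tries to use the '
            'secret key for API RPC "KEY" from "2001:db8::1"')
    address = client_address(line)
    print(address, "is IPv%d" % address.version)
```

If the version printed is 6 while the key was created for an IPv4 address, the key needs to be created for the IPv6 address instead.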

Parallels: Document your changes and error codes, please.

Acronis True Image 2014 royal hang

Wednesday, October 2. 2013

My Acronis TI installation fucked up my laptop. Again. I was at a customer's office with my laptop when a backup was scheduled to run. That should be no biggie, right?

Wrong.

Acronis True Image 2013 did that too; I wrote about it earlier. Some update for 2013 fixed it, and at some point I stopped suffering from the issue. When it did happen, I misidentified the problem as being related to Windows Update; later I found out that it was caused by a stuck Acronis backup job.

That shouldn't be too difficult to fix? Acronis? Anyone?