Upgrading into Windows 8.1

Friday, October 25. 2013

Why does everything have to be updated via download? That's completely fucked up! In the good old days you could download an ISO image and update whenever you wanted, and as many things as you needed. The same plague is in Mac OS X, Windows, and all important applications. I hate this!

Getting a Windows 8.1 upgrade was annoying since it failed to upgrade my laptop. It didn't really explain what happened; it just said "failed rolling back". Then I bumped into an article "How to download the Windows 8.1 ISO using your Windows 8 retail key". Nice! Good stuff there. I did that and got the file.

The next thing I did was take my trustworthy Windows 7 USB/DVD download tool and create a bootable USB stick from the 8.1 upgrade ISO file. I booted from the stick and got into the installer, which said that this is not the way to do the upgrade. Come on! I booted back into Windows 8 and started the upgrade from the USB stick, which said that "Setup has failed to validate the product key". I googled that and found a second article about getting the ISO file (Windows 8.1 Tip: Download a Windows 8.1 ISO with a Windows 8 Product Key), where a comment from Mr. Robin Tick had a solution for this. Create the file sources\ei.cfg on the USB stick with contents:

[EditionID]
Professional
[Channel]
Retail
[VL]
0
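If you'd rather not type the file by hand, a minimal Python sketch for dropping it onto the stick could look like this. The function name, the CRLF line endings and the drive letter mentioned afterwards are my assumptions, not something the original comment specified; the demo writes into a temporary directory so it runs without a USB stick attached.

```python
import tempfile
from pathlib import Path

# Contents of sources\ei.cfg exactly as listed above.
# CRLF line endings are an assumption (the usual Windows convention).
EI_CFG = "[EditionID]\r\nProfessional\r\n[Channel]\r\nRetail\r\n[VL]\r\n0\r\n"


def write_ei_cfg(stick_root: Path) -> Path:
    """Create sources/ei.cfg under the given root and return its path."""
    target = stick_root / "sources" / "ei.cfg"
    target.parent.mkdir(parents=True, exist_ok=True)
    target.write_bytes(EI_CFG.encode("ascii"))
    return target


# Demo against a throw-away directory instead of a real stick.
with tempfile.TemporaryDirectory() as tmp:
    written = write_ei_cfg(Path(tmp))
    print(written.read_bytes().decode("ascii"))
```

On a real stick you would call something like write_ei_cfg(Path("E:/")), where E: is whatever drive letter (hypothetical here) your USB stick got.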

Then the upgrade started to roll my way. To my amazement, BitLocker didn't get in the way of the upgrade. It didn't ask for the PIN code or anything, but went through all the upgrade stages: Installing, Detecting devices, Applying PC settings, Setting up a few more things, Getting ready, and it was ready to go. It was refreshing to experience success after the initial failure. I'm guessing that BitLocker made the downloaded upgrade fail.

The next thing I tried was to upgrade my office PC. It is a Dell OptiPlex with OEM Windows 8. Goddamn it! It did the upgrade, but in the process my OEM Windows 8 was converted into a retail Windows 8.1. Was that really necessary? How much of an effort would it be to simply upgrade the operating system? Or at least give me a warning that in order to proceed with the upgrade, the OEM status will be lost. Come on, Microsoft!

Authenticating HTTP-proxy with Squid

Thursday, October 24. 2013

Every now and then you don't want to approach a web site from your own address. There may be a number of "valid" reasons for doing that besides any criminal activity. Even YouTube has geoIP-limitations for certain live feeds. Or you may be a road warrior approaching the Internet from a different IP every time and want to even that out.

Anyway, there is a simple way of doing that: expose an HTTP proxy to the Net and configure your browser to use it. Whenever you choose to expose something to the wild-wild-net, be very aware that you're opening a door for The Bad Guys also. In this case, if somebody gets a hint that they can act anonymously via your server, they'd be more than happy to do so. To keep The Bad Guys out of your own personal proxy, using some sort of authentication mechanism would be a very good idea.

Luckily, my weapon of choice, the Squid caching proxy, has a number of pluggable authentication mechanisms built into it. On Linux, the simplest one to use would be the already existing Unix user accounts. BEWARE! This literally means transmitting your password in plain text over the wire with each request you make. At present only Google's Chrome can access Squid via HTTPS to protect your proxy password, and even that can be quirky, as an autoconfig needs to be used. For example, Mozilla Firefox has had a request for this support open since 2007, but to no avail yet.
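To make the "plain text" warning concrete, here is a small Python sketch of what HTTP Basic authentication puts on the wire. The function names and the sample credentials are made up for illustration; the point is only that the header is Base64-encoded, not encrypted, so anybody sniffing the traffic can reverse it.

```python
import base64


def basic_proxy_header(user: str, password: str) -> str:
    """Build the Proxy-Authorization header a browser sends with Basic auth."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return f"Proxy-Authorization: Basic {token}"


def recover_credentials(header: str) -> tuple:
    """Do what an eavesdropper would do: decode the header back to user and password."""
    token = header.split("Basic ", 1)[1]
    user, _, password = base64.b64decode(token).decode("utf-8").partition(":")
    return user, password


# Hypothetical credentials, for demonstration only.
header = basic_proxy_header("alice", "s3cret")
print(header)
print(recover_credentials(header))
```

No key or secret is needed for the decoding step, which is why plain HTTP plus Basic auth exposes the Unix password on every request.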

If you choose to go unencrypted HTTP-way and have your password exposed each time, this is the recipe for CentOS 6. First edit your /etc/squid/squid.conf to contain (the gray things should already be there):

# Example rule allowing access from your local networks.
# Adapt localnet in the ACL section to list your (internal) IP networks
# from where browsing should be allowed
http_access allow localnet
http_access allow localhost

# Proxy auth:
auth_param basic program /usr/lib64/squid/pam_auth
auth_param basic children 5
auth_param basic realm Authenticating Squid HTTP-proxy
auth_param basic credentialsttl 2 hours
cache_mgr root@my.server.com

# Authenticated users
acl password proxy_auth REQUIRED
http_access allow password

# And finally deny all other access to this proxy
# Allow for the (authenticated) world
http_access deny all

Reload Squid and your /var/log/messages will contain:

(pam_auth): Unable to open admin password file: Permission denied

This happens for the simple reason that Squid effectively runs as user squid, not as root, and that user cannot access the shadow password DB. LDAP would be much better for this (it is much better in all cases). The original permissions:

# ls -l /usr/lib64/squid/pam_auth

-rwxr-xr-x. 1 root root 15376 Oct 1 16:44 /usr/lib64/squid/pam_auth

The only reasonable fix is to run the authenticator as SUID-root. This is generally a stupid idea and dangerous. For that reason we explicitly disallow usage of the authenticator for anybody but the squid group. The group is changed first, because on Linux changing a file's ownership can clear the SUID bit:

# chgrp squid /usr/lib64/squid/pam_auth
# chmod u+s /usr/lib64/squid/pam_auth
# chmod o= /usr/lib64/squid/pam_auth
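To double-check what the two chmod commands leave behind, the permission bits can be worked out with Python's stat module. This is only a sketch of the bit arithmetic on the mode shown in the ls output; it does not touch any file, and the function name is made up.

```python
import stat

# -rwxr-xr-x on a regular file, as in the ls output above.
ORIGINAL_MODE = stat.S_IFREG | 0o755


def lock_down(mode: int) -> int:
    """Apply the equivalent of 'chmod u+s' followed by 'chmod o='."""
    mode |= stat.S_ISUID    # u+s: run with the file owner's (root's) privileges
    mode &= ~stat.S_IRWXO   # o=: strip every permission from "others"
    return mode


new_mode = lock_down(ORIGINAL_MODE)
print(stat.filemode(new_mode), oct(stat.S_IMODE(new_mode)))
```

The result is mode 4750, shown by ls as -rwsr-x---: root owns the binary, members of the squid group may execute it, and nobody else can even read it.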

Restart Squid and the authentication will work for you.

Credit for this goes to the Squid documentation, the Squid wiki and Nikesh Jauhari's blog entry Squid Password Authentication Using PAM.

Mac OS X 10.9 Mavericks .ISO image

Wednesday, October 23. 2013

Since Apple made a decision not to publish their operating systems as ISO-images on OS X 10.8, everybody has to create their own excitement (the phrase used to mock Gentoo Linux).

With Google, I found a very nice recipe for creating an image at the point where App Store has downloaded your free 10.9 upgrade, but it is not running yet. See it at Apple Insider forums with topic HOWTO: Create bootable Mavericks ISO. I followed the recipe with a simple copy / paste attitude and it did the trick. No funny stuff, no weird things you needed to know, it just did the trick and ended up with the thing I need.

I'll copy / paste the excellent writing of Mr./Mrs./Ms. CrEOF here:

# Mount the installer image
hdiutil attach /Applications/Install\ OS\ X\ Mavericks.app/Contents/SharedSupport/InstallESD.dmg \
    -noverify -nobrowse -mountpoint /Volumes/install_app

# Convert the boot image to a sparse bundle
hdiutil convert /Volumes/install_app/BaseSystem.dmg -format UDSP -o /tmp/Mavericks

# Increase the sparse bundle capacity to accommodate the packages
hdiutil resize -size 8g /tmp/Mavericks.sparseimage

# Mount the sparse bundle for package addition
hdiutil attach /tmp/Mavericks.sparseimage -noverify -nobrowse -mountpoint /Volumes/install_build

# Remove Package link and replace with actual files
rm /Volumes/install_build/System/Installation/Packages
cp -rp /Volumes/install_app/Packages /Volumes/install_build/System/Installation/

# Unmount the installer image
hdiutil detach /Volumes/install_app

# Unmount the sparse bundle
hdiutil detach /Volumes/install_build

# Resize the partition in the sparse bundle to remove any free space
hdiutil resize -size `hdiutil resize -limits /tmp/Mavericks.sparseimage | \
    tail -n 1 | awk '{ print $1 }'`b /tmp/Mavericks.sparseimage

# Convert the sparse bundle to ISO/CD master
hdiutil convert /tmp/Mavericks.sparseimage -format UDTO -o /tmp/Mavericks

# Remove the sparse bundle
rm /tmp/Mavericks.sparseimage

# Rename the ISO and move it to the desktop
mv /tmp/Mavericks.cdr ~/Desktop/Mavericks.iso

Thanks!

Fixing Google's new IPv6 mail policy with Postfix

Friday, October 18. 2013

I covered Google's new & ridiculous e-mail policy in my previous post.

The author of my favorite MTA, Postfix, Mr. Wietse Venema, offered a piece of advice to another poor postmaster like me on the official Postfix Users mailing list, in the thread "disable ipv6 when sending to gmail?"

The idea is to use Postfix's SMTP reply filter feature. With that, a postmaster can rewrite something the remote server said into something that alters Postfix's behavior in a useful way. In this case, I'd prefer a retry using IPv4 instead of IPv6. Luckily the ability to drop down to IPv4 is already built in; the only issue is to convince Postfix that what Google said is not true. For the IPv6 issue they state that the e-mail in question cannot be delivered due to a permanent error: a reply code of 550 with the enhanced status code 5.7.1. What Wietse suggests is to rewrite the 550 5.7.1 into a 450 4.7.1, which indicates a temporary failure. This triggers the mechanism to do an IPv4 attempt immediately after the failure.

I added the following to /etc/postfix/main.cf:

# Gmail IPv6 retry:

smtp_reply_filter = pcre:/etc/postfix/smtp_reply_filter

Then I created the file /etc/postfix/smtp_reply_filter and made it contain:

# Convert Google Mail IPv6 complaint permanent error into a temporary error.
# This way Postfix will attempt to deliver this e-mail using another MX
# (via IPv4).
/^5(\d\d )5(.*information. \S+ - gsmtp.*)/ 4${1}4$2

Reload Postfix just to make sure the main.cf change is in effect, no need to postmap the PCRE-file.

Effectively, the last line of Google's error message:

550-5.7.1 [2001:-my-IPv6-address-here- 16] Our system has detected
550-5.7.1 that this message does not meet IPv6 sending guidelines regarding PTR
550-5.7.1 records and authentication. Please review
550-5.7.1 https://support.google.com/mail/?p=ipv6_authentication_error for more
550 5.7.1 information. dj7si12191118bkc.191 - gsmtp (in reply to end of DATA command))

will be transformed into:

450 4.7.1 information. dj7si12191118bkc.191 - gsmtp (in reply to end of DATA command))
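If you want to see what the filter does without sending any mail, the same regular expression can be tried out in Python. This is only a sketch: Python's re module stands in for Postfix's PCRE table, the function name is made up, and I'm assuming the filter sees one reply line at a time.

```python
import re

# Same pattern as in /etc/postfix/smtp_reply_filter.
PATTERN = re.compile(r"^5(\d\d )5(.*information. \S+ - gsmtp.*)")


def rewrite_reply(line: str) -> str:
    """Turn Google's permanent 5xx 5.x.x IPv6 complaint into a temporary 4xx 4.x.x."""
    # \g<1> is required here: a plain \1 followed by the digit 4 would be
    # read as a reference to group 14.
    return PATTERN.sub(r"4\g<1>4\2", line)


final_line = "550 5.7.1 information. dj7si12191118bkc.191 - gsmtp"
continuation = "550-5.7.1 records and authentication. Please review"

print(rewrite_reply(final_line))    # rewritten to a 450 4.7.1 reply
print(rewrite_reply(continuation))  # left untouched, no match
```

Only the final line (reply code followed by a space) matches. The continuation lines with a dash after the code pass through unchanged, and so does any other 5xx reply that doesn't end in Google's "information. ... - gsmtp" tail.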

And my mail gets delivered! Nice. Thanks Wietse! Shame on you Google!

Parallels Plesk Panel 11 RPC API, part 2

Thursday, October 17. 2013

My adventures with Parallels Plesk Panel's API continued. My previous fumblings can be found here. A fully working application started to say:

11003: PleskAPIInvalidSecretKeyException : Invalid secret key usage. Please check logs for details.

Ok. What does that mean? Google (my new favorite company) found nothing with that phrase or error code. Where is the log they refer to?

After a while I bumped into /usr/local/psa/admin/logs/panel.log. It said:

2013-10-16T11:34:35+03:00 ERR (3) [panel]: Somebody tries to use the secret key for API RPC "-my-super-secret-API-key-" from "2001:-my-IPv6-address-"

Doing a:

/usr/local/psa/bin/secret_key --list

revealed that previously they accepted an IPv4 address for the secret key, but apparently one of those Micro-Updates changed the internal policy to start using IPv6 if it is available.

Once I realized that, it was an easy fix. The log displayed the IP address, so I just created a new API key for it with the secret_key utility and everything started to work again.

Parallels: Document your changes and error codes, please.

Thanks Google for your new IPv6 mail policy

Wednesday, October 16. 2013

The short version is: Fucking idiots!

Long version:

Google Mail introduced a new policy sometime in August 2013 for receiving e-mail via IPv6. Earlier the policy was the same for IPv4 and IPv6, but they decided to make the Internet a better place by employing a much tighter policy for e-mail senders. Details can be found on their support pages.

For e-mail Authentication & Identification they state:

- Use a consistent IP address to send bulk mail.

- Keep valid reverse DNS records for the IP address(es) from which you send mail, pointing to your domain.

- Use the same address in the 'From:' header on every bulk mail you send.

- We also recommend publishing an SPF record

- We also recommend signing with DKIM. We do not authenticate DKIM using less than a 1024-bit key.

- The sending IP must have a PTR record (i.e., a reverse DNS of the sending IP) and it should match the IP obtained via the forward DNS resolution of the hostname specified in the PTR record. Otherwise, mail will be marked as spam or possibly rejected.

- The sending domain should pass either SPF check or DKIM check. Otherwise, mail might be marked as spam.

First: My server does not send bulk mail. It sends mail now and then. If the idiots label my box as a "bulk sender" (whatever that means), there is nothing I can do to help it.

Second: I already have done all of the above. I even checked my PTR-record twice. Yes, it is in the above list two times using different words.

Still, after jumping through all the hoops, crossing all the Ts and dotting all the Is: they don't accept email from my box anymore. They dominate the universe, they set new policies, start to enforce them without notice and fail to provide any kind of support. At minimum there should be a web page with a form where you fill in a couple of fields to test how they perceive your server, and which tells you what to fix. But no. They don't do that, they just stop accepting any email.

To provide matching words for their search engine, I post a log entry (wrapped to multiple lines) from my Postfix:

postfix/smtp[6803]: A82C94E6CE:
to=<my@sending.address.fi>,
orig_to=<the@recipient's.address.net>,
relay=aspmx.l.google.com[2a00:1450:4008:c01::1b]:25,
delay=0.76,
delays=0.04/0/0.35/0.37,
dsn=5.7.1,
status=bounced (host aspmx.l.google.com[2a00:1450:4008:c01::1b] said:
550-5.7.1 [2001:-my-IPv6-address- 16] Our system has detected
550-5.7.1 that this message does not meet IPv6 sending guidelines regarding PTR
550-5.7.1 records and authentication. Please review
550-5.7.1 https://support.google.com/mail/?p=ipv6_authentication_error for more
550 5.7.1 information. qc2si10501687bkb.307 - gsmtp (in reply to end of DATA command))

I'm not alone with my problem. It is easy to find a number of people complaining about the same issue: Gmail, why are you doing this to me? and Google, your IPv6-related email restrictions suck. Most people simply stop using IPv6 to deliver mail to Google. My choice is to fight to the bitter end.

While complaining about the unjustified treatment I get from Google, I got a piece of advice: "Why don't you check what Google's DNS thinks of your setup?". I was like "WHAAT? What Google DNS?"

In fact there is a public DNS offered by Google. It is described in article Using Google Public DNS. I did use that to confirm that my DNS and reverse-DNS were set up correctly. I typed this into a BASH-shell:

# dig -x 2001:-my-IPv6-address- @2001:4860:4860::8888

It yielded correct results. There was nothing more I could do to fix this issue.

As it turned out, I did not change anything but after a couple of days, they just seemed to like my DNS more and allowed my email to pass. Perhaps one of these days I'll write something similar to my open recursive DNS tester.
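For the record, the PTR rule in Google's list boils down to a "forward-confirmed reverse DNS" check: look up the PTR name of the sending IP, resolve that name forward, and see if you land on the same IP. Below is a rough Python sketch of that logic. The function names are mine, the default lookups use the system resolver (not Google's public DNS), and the demo uses fake lookups with documentation addresses so it runs without any network.

```python
import ipaddress
import socket


def reverse_name(ip: str) -> str:
    """Return the name that 'dig -x' queries, e.g. ...ip6.arpa for IPv6."""
    return ipaddress.ip_address(ip).reverse_pointer


def system_ptr_lookup(ip: str) -> str:
    """PTR lookup via the system resolver."""
    return socket.gethostbyaddr(ip)[0]


def system_forward_lookup(hostname: str) -> list:
    """A/AAAA lookup via the system resolver (any scope id is dropped)."""
    return [info[4][0].split("%")[0] for info in socket.getaddrinfo(hostname, None)]


def is_forward_confirmed(ip, ptr_lookup=system_ptr_lookup,
                         forward_lookup=system_forward_lookup) -> bool:
    """True if the PTR hostname of ip resolves back to the very same ip."""
    wanted = ipaddress.ip_address(ip)
    hostname = ptr_lookup(ip)
    return any(ipaddress.ip_address(addr) == wanted
               for addr in forward_lookup(hostname))


# Offline demo with made-up data (2001:db8::/32 is the documentation prefix).
def fake_ptr(ip):
    return "mail.example.com"


def fake_forward(hostname):
    return ["2001:0db8:0000:0000:0000:0000:0000:0025"]


print(reverse_name("2001:db8::25"))
print(is_forward_confirmed("2001:db8::25", fake_ptr, fake_forward))
print(is_forward_confirmed("2001:db8::26", fake_ptr, fake_forward))
```

Comparing parsed address objects instead of strings matters with IPv6, since the same address can be written in several ways.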

Idiots!

Bug in Linux 3.11: Netfilter MASQUERADE-target does not work anymore

Wednesday, October 9. 2013

This is something I've been trying to crack ever since I installed Fedora 19 alpha into my router. My HTTP-streams do not work. At all. Depending on the application and its retry-policy implementation some things would work, some won't. Examples:

- Playstation 3 updates: Updates load up to 30% and then nothing, this one I mistakenly thought was due to PS3 firmware update

- YLE Areena: No functionality after first 10-40 seconds

- Netflix: Poor picture quality, HD pretty much never kicks in, super-HD... dream on.

- Spotify: Works ok

- Ruutu.fi: Endless loop of commercials, the real program never starts

- Regular FTP-stream: Hang after first bytes

My Fedora 19 Linux is a router connecting to Internet and distributing the connection to my home LAN via NAT. The IPtables rule is:

iptables -t nat -A POSTROUTING -o em1 -j MASQUERADE

I found an article with title IPTables: DNAT, SNAT and Masquerading from LinuxQuestions.org. It says:

"SNAT would be better for you than MASQUERADE, but they both work on outbound (leaving the server) packets. They replace the source IP address in the packets for their own external network device, when the packet returns, the NAT function knows who sent the packet and forwards it back to the originating workstation inside the network."

So, I had to try that. I changed my NAT-rule to:

iptables -t nat -D POSTROUTING -o em1 -j MASQUERADE

iptables -t nat -A POSTROUTING -o em1 -j SNAT --to-source 80.my.source.IP

... and everything starts to work ok! I've been using the same masquerade-rule for at least 10 years without problems. Something must have changed in Linux-kernel.

I studied this problem further. On a remote server I did the following in a publicly accessible directory:

# dd if=/dev/urandom of=random.bin bs=1024 count=10240

10240+0 records in

10240+0 records out

10485760 bytes (10 MB) copied, 1.76654 s, 5.9 MB/s

It creates a random file of 10 MiB. For testing purposes, I can load the file with wget-utility:

# wget http://81.the.other.IP/random.bin

Connecting to 81.the.other.IP:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 10485760 (10M) [application/octet-stream]

Saving to: `random.bin'

100%[=================>] 10,485,760 7.84M/s in 1.3s

2013-10-08 17:06:02 (7.84 MB/s) - `random.bin' saved [10485760/10485760]

No problems. The file loads ok. The speed is good, nothing fancy there. I change the rule back to MASQUERADE and do the same thing again:

# wget http://81.the.other.IP/random.bin

Connecting to 81.the.other.IP:80... connected.

HTTP request sent, awaiting response... 200 OK

Length: 10485760 (10M) [application/octet-stream]

Saving to: `random.bin'

10% [=======> ] 1,090,200 --.-K/s eta 85m 59s

After waiting for 10 minutes, there was no change in the download. wget simply hung there and would not proceed without manual intervention. It's official: masquerade is busted.

It is very unlikely that I'd find a bug in the Linux kernel. I'm not a kernel developer or anything, but none of my searches found anything on the net. So I had to double-check and rule out the following:

- Hardware:

  - Transferring a similar file from the router-box to the client works fully. I tested a 100 MiB file. No issues with my LAN or the client computer.

  - Transferring a similar file from the outside-server to the router-box works fully. I tested a 100 MiB file. No issues with my Internet connection.

  - When not NATing, everything works ok. Based on this I don't suspect any hardware issues.

  - There is no difference in my home between using WLAN and Ethernet. The problem is related to my POSTROUTING-setting.

- IPv4:

  - I have a SixXS IPv6-tunnel at my disposal. Transferring a 100 MiB file from the outside-server via IPv6 to the same IPv4 NATed client works fully. No issues.

  - My original claim is that MASQUERADE is broken and SNAT works. A functioning IPv6 connection supports that claim.

To further see if it would be a Fedora-thing, or affecting entire Linux, I took official Linux 3.11.4 source code and Fedora kernel-3.11.3-201.fc19.src.rpm and ran a diff:

# diff -aur /tmp/linux.orig/linux-3.11.4/net/ipv4/netfilter \
    /tmp/linux.fc19/linux-3.11/net/ipv4/netfilter

Nothing. No differences encountered. Looks like I have to file a bug report to Fedora and possibly Netfilter-project. Looking at the change log of /net/ipv4/netfilter/ipt_MASQUERADE.c reveals absolutely nothing, the change must be somewhere else.

Why cloud platforms exist - Benchmarking Windows Azure

Tuesday, October 8. 2013

I got permission to publish a grayed out version of a project I was contracted to do this summer. Since the customer paid big bucks for it, you're not going to see all the details. I'm sorry to act as a greedy idiot, but you have to hire me to do something similar to see your results.

The subject of my project is something that personally is intriguing to me: how much better does a cloud-native application perform when compared to a traditional LAMP-setup. I chose the cloud platform to be Windows Azure, since I know that one best.

The Setup

There was a pretty regular web application for performing a couple of specific tasks. Exactly the same sample data was populated to Azure SQL for the IaaS test and Azure Table Storage for the PaaS test. People who complain about using Azure SQL can imagine a faster setup being used on a virtual machine and expect the thing to perform faster.

To simulate a real web application, memory cache was used. Memcache for IaaS and Azure Cache for PaaS. On both occasions using memory cache pushes the performance of the application further as there is no need to do so much expensive I/O.

Results

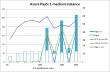

In the Excel charts the number of simulated users is on the horizontal axis. There are two vertical axes used for different items.

The following items can be read from the Excel charts:

- Absolute number of pages served for a given measurement point (right axis)

- Absolute number of pages returned, which returned erroneous output (right axis)

- Percentage of HTTP-errors: a status code which we interpret as an error was returned (left axis)

- Percentage of total errors: HTTP errors + requests which did not return a status code (left axis)

- Number of successful pages returned per second (left axis)
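For those wondering how the percentages in the list above relate to each other, here is a rough Python sketch. The exact denominators of the real charts are not given here, so I'm assuming every request either got some status code back (a served page, good or bad) or got none at all. The function name and the sample numbers are invented for illustration and are not the customer's results.

```python
def load_test_metrics(pages_served: int, http_errors: int,
                      no_status: int, duration_s: float) -> dict:
    """Derive the left-axis values from raw counts of one measurement point.

    pages_served: requests that returned any HTTP status code
    http_errors:  the subset of those whose status we interpret as an error
    no_status:    requests that never returned a status code
    """
    total_requests = pages_served + no_status
    successful = pages_served - http_errors
    return {
        "http_error_pct": 100.0 * http_errors / total_requests,
        "total_error_pct": 100.0 * (http_errors + no_status) / total_requests,
        "successful_pages_per_s": successful / duration_s,
    }


# Invented sample: 900 answered requests, 45 of them errors, 100 with no answer, in 60 s.
print(load_test_metrics(pages_served=900, http_errors=45, no_status=100, duration_s=60.0))
```

With that definition the total error percentage can never be lower than the HTTP error percentage, which is what you'd expect to see in the charts too.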

Results: IaaS

I took a ready-made CentOS Linux-image bundled with Nginx/PHP-FPM -pair and lured it to work under Azure and connect to ready populated data from Azure SQL. Here are the test runs from two and three medium instances.

Adding a machine to the service absolutely helps. With two instances, the application chokes completely at the end of test load. Added machine also makes the application perform much faster, there is a clear improvement on page load speed.

Results: PaaS

Exactly the same functionality was implemented with .Net / C#.

Here are the results:

Astonishing! Page load speed is so much higher on similar user loads, also no errors can be produced. I pushed the envelope with 40 times the users, but couldn't be sure if it was about test setup (which I definitely saturated) or Azure's capacity fluctuating under heavy load. The test with small role was also very satisfactory, it beats the crap out of running two medium instances on IaaS!

Conclusion

I have to state the obvious: the PaaS application performs much better. I just couldn't believe that it was impossible to get an exact measurement of the point where the application chokes on PaaS.

Why Azure PaaS billing cannot be stopped? - revisit

Monday, October 7. 2013

In my earlier entry about Azure PaaS billing, I was complaining about how to stop the billing.

This time I managed to do it. The solution was simple: delete the deployments, but leave the cloud service intact. Then Azure stops reserving any (stopped) compute units for the cloud service. Like this:

Here is the proof:

Zero billing. Nice!

Acronis True Image 2014 royal hang

Wednesday, October 2. 2013

My Acronis TI installation fucked up my laptop. Again. I was on customer's office with my laptop at the time a backup was scheduled to run. That should be no biggie, right?

Wrong.

True Image 2013 did that too; I wrote about it earlier. Some update for 2013 fixed it, and at some point I stopped suffering from the issue. When it first happened I misidentified the problem as being related to Windows Update; later I found out that it was because of a stuck Acronis backup job.

That shouldn't be too difficult to fix? Acronis? Anyone?

Why Azure PaaS billing cannot be stopped?

Tuesday, October 1. 2013

In Windows Azure, stopping an IaaS virtual machine stops the billing; there is no need to delete the stopped instance. When you stop a PaaS cloud service, the following happens:

Based on billing:

This is really true. On the 26th and 27th I had a cloud service running on Azure, but I stopped it. On the 28th and 29th there is billing for a service that has been stopped, and which I got the warning about. I don't know why on the 30th there is one core missing from the billing. Discount, perhaps?

My bottom line is:

Why? What possible reason could there be for requiring your PaaS cloud service to be deleted in order to stop billing? Come on Microsoft! Equal rules for both cloud services!