Making USB-bootable PLD RescueCD from your Linux

Tuesday, December 31. 2013

PLD RescueCD is my new favorite Linux rescue CD. It has a ton of stuff in it, even the ipmitool from OpenIPMI-project. One of these days it so happened that I lost my IPMI network access due to my own misconfiguration. I just goofed up the conf and oops, there was no way of reaching the management interface anymore. If the operating system on the box had been OK, it might have been possible to do some fixing via that, but I chose not to. Instead I got a copy of PLD and started working.

The issue is that PLD RescueCD comes as an ISO-image only. Well, erhm... nobody really boots CDs or DVDs anymore. Getting the thing to boot from a USB-stick turned out to be a rather simple task.

Prerequisites

- A working Linux with enough root-access to do some work with USB-stick and ISO-image

- The syslinux-utility installed. All distros have it, but not all of them install it by default. Confirm that you have it or you won't get any results.

- The GNU Parted -utility installed. All distros have it. If yours doesn't, you'll have to adapt with the partitioning weapon of your choice.

- A USB-stick with a capacity of 256 MiB or more. The rescue CD isn't very big for a Linux distro.

- WARNING! During this process you will lose everything on that stick. Forever.

- Not all old USB-sticks can be used to boot all systems. Any reasonably modern one will do. If you are failing, please try again with a newer stick.

- The PLD RescueCD ISO-file downloaded. I had RCDx86_13_03_10.iso

- You'll need to know the exact location (as in directory) for the file

- The system you're about to rescue must have a means of booting via USB. Any reasonably modern system does. With old ones that's debatable.

Assumptions used here:

- Linux sees the USB-stick as /dev/sde

- ISO-image is at /tmp/

- Mount location for the USB-stick is /mnt/usb/

- Mount location for the ISO-image is /mnt/iso/

- syslinux-package installs its extra files into /usr/share/syslinux/

- You will be using the 32-bit version of PLD Rescue

On your system these will most likely be different, so adjust them to match your setup and your own preferences.

Information about how to use syslinux can be found in the SYSLINUX HowTos.

Steps to do it

- Insert the USB-stick into your Linux-machine

- Partition the USB-stick

- NOTE: Feel free to skip this if you already have a FAT32-partition on the stick

- Steps:

- Start GNU Parted:

parted /dev/sde

- Create an MS-DOS partition table on the USB-stick:

mktable msdos

- Create a new 256 MiB FAT32 partition on the USB-stick:

mkpart pri fat32 1 256M

- Set the newly created partition as bootable:

set 1 boot on

- End partitioning:

quit

- Format the newly created partition:

mkfs.vfat -F 32 /dev/sde1

- Copy a syslinux-compatible MBR onto the stick:

dd if=/usr/share/syslinux/mbr.bin of=/dev/sde conv=notrunc bs=440 count=1

- Install syslinux:

syslinux /dev/sde1

- Mount the USB-stick to be written to:

mount /dev/sde1 /mnt/usb/

- Mount the ISO-image to be read:

mount /tmp/RCDx86_13_03_10.iso /mnt/iso/ -o loop,ro

- Copy the ISO-image contents to the USB-stick:

cp -r /mnt/iso/* /mnt/usb/

- Convert the CD-boot menu to work as a USB-boot menu:

mv /mnt/usb/boot/isolinux /mnt/usb/syslinux

- Take the 32-bit versions into use:

cp /mnt/usb/syslinux/isolinux.cfg.x86 /mnt/usb/syslinux/syslinux.cfg

- Unmount the USB-stick:

umount /mnt/usb

- Unmount the ISO-image:

umount /mnt/iso

- Unplug the USB-stick and test!
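The steps above can also be collected into a single script. What follows is only a minimal sketch using the assumptions listed earlier (/dev/sde, /tmp/, /mnt/usb/, /mnt/iso/ and /usr/share/syslinux/). The function name plan_usb is made up for this example, and using parted's -s (script) option instead of the interactive session is my own substitution. To stay on the safe side it only prints the commands, nothing is executed.

```shell
#!/bin/sh
# Dry-run sketch: prints the commands from the steps above instead of running them.
# plan_usb is a hypothetical helper name. Device, ISO path and mount points are
# assumptions and must be adjusted for your system.
plan_usb() (
    dev="$1"                                  # whole device, e.g. /dev/sde
    iso="$2"                                  # path to the downloaded ISO-image
    usb_mnt="${3:-/mnt/usb}"                  # mount location for the USB-stick
    iso_mnt="${4:-/mnt/iso}"                  # mount location for the ISO-image
    syslinux_dir="${5:-/usr/share/syslinux}"  # where mbr.bin lives
    part="${dev}1"                            # assumes sdX-style partition naming

    cat <<EOF
parted -s $dev mktable msdos
parted -s $dev mkpart pri fat32 1 256M
parted -s $dev set 1 boot on
mkfs.vfat -F 32 $part
dd if=$syslinux_dir/mbr.bin of=$dev conv=notrunc bs=440 count=1
syslinux $part
mount $part $usb_mnt
mount $iso $iso_mnt -o loop,ro
cp -r $iso_mnt/* $usb_mnt/
mv $usb_mnt/boot/isolinux $usb_mnt/syslinux
cp $usb_mnt/syslinux/isolinux.cfg.x86 $usb_mnt/syslinux/syslinux.cfg
umount $usb_mnt
umount $iso_mnt
EOF
)

plan_usb /dev/sde /tmp/RCDx86_13_03_10.iso
```

Read through the printed commands first. If they look right for your system, they can be run as root, for example by piping the output to sh. Remember the warning: everything on the stick will be lost.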

Result

Here is what a working boot menu will look like:

Like always, any comments or improvements are welcome. Thanks Arkadiusz for your efforts and for the great product you're willing to share with rest of us. Sharing is caring, after all! ![]()

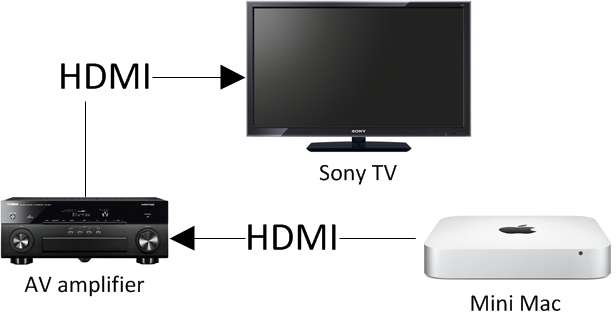

Mac OS X Dolby Digital 5.1 with Mac Mini [solved]

Monday, December 30. 2013

This is my second attempt at clarifying how to get Dolby Digital 5.1 output via HDMI. The previous attempt can be found here. All the information I can find on this subject shows that for some people multi-channel audio works fine and nothing special is required, but for some of us this is a pain and it's almost impossible to get this working.

Here is my setup:

The problem, as presented earlier, is depicted here:

The Audio MIDI setup clearly displays the Sony TV as the HDMI output device. The problem is that it actually isn't. Here is another screen capture of Audio MIDI setup from my brother's computer:

Whoa! His Mac Mini displays his A/V amp as the HDMI destination. Totally different from what my Mac displays. The only explanation for this is that my Yamaha takes the TV's spec from the HDMI and proxies it to the Mac, whereas his Onkyo doesn't pass anything on, it simply presents itself as the destination. If you ask me, Onkyo's solution is much better than Yamaha's. Either way, the amp has to pick up the audio signal to be sent to the loudspeakers and do a stereo mixdown of the multi-channel signal to be sent to the TV. So there will be a lot of processing at the amp anyway, why not declare itself as the destination for the Mac? Yamaha's approach just seems confusing.

The Solution

Here is what I did to get proper 5.1 channel sound working from my Mac Mini. The problem is, that I cannot get it back to the broken mode again, it simply stays fully functional no matter what I do. There must be something going on at the amp end and something else going on at the Mac end. For some reason they don't match or they do match and there is very little I can do to control it. But anyway, here are my steps with Audio MIDI Setup utility:

- In the Mac, set HDMI to Use this device for sound output and Play alerts and sound effects through this device

- Confirm that the speaker setup is correct and click the speakers to confirm that the test tone does not output as expected

- In the amp, make sure that the input HDMI is decoding multi-channel audio as expected

- Auto-detect or stereo won't work

- Previously my instructions stopped here

- In the Mac, at the HDMI, in Format set it as Encoded Digital Audio, the Hz setting is irrelevant

- This will effectively unset HDMI as output device and set Built-in Output as the output device. It also pretty much makes all sounds in the system non-functional.

- Again at the HDMI, in Format select 8ch-24bit Integer, it will reset the HDMI to Use this device for sound output and Play alerts and sound effects through this device

- Re-confirm that speaker setup is correct. At this point the test tone should work from the speaker correctly.

- You're done!

This fix, and pretty much everything about Mac Mini's HDMI audio output, is a bit fuzzy. Any real solution should be reproducible somehow. This isn't. But I can assure you that now my multi-channel audio really works as expected.

Any feedback about this solution is welcome!

Update 1st Jan 2014:

The number of channels configured into Audio Setup does not reflect the actual number of speakers you have. That is done in Configure Speakers. I have 8ch (or 8 speakers) configured in the Audio Setup, but this is a screenshot of my speaker setup:

They have a different number of speakers! It still works. That's how it is supposed to be.

Certificate Authority setup: Doing it right with OpenSSL

Friday, December 27. 2013

In my previous post about securing HTTP-connections, HTTP Secure: Is Internet really broken?, I was speculating about the current state of encryption security in web applications. This article is about how to actually implement a CA in detail and the requirements of doing so.

During my years as a server administrator, I've been setting up CAs in Windows domains. There, many of the really complex issues I'm describing below are handled so that you don't even notice them. I'm sure that using a commercial CA-software on Linux will yield similar results, but I just haven't really tested any. This article is about implementing a properly done CA with the OpenSSL sample minimal CA application. Out of the box it does many things minimally (as promised), but it is the most common CA. It ships with every Linux distro and the initial investment price (0 €) is about right.

Certificate what?

In cryptography, a certificate authority or certification authority (CA), is an entity that issues digital certificates.

— Wikipedia

Why would one want to have a CA? What is it good for?

For the purpose of cryptographic communications between web servers and web browsers, we use X.509 certificates. Generally we talk about SSL certificates (based on the original, now obsoleted standard) or TLS certificates based on the currently valid standard. The bottom line is: when we do HTTP secure or HTTPS we will need a certificate issued by a Certificate Authority.

Historically, HTTPS was developed by Netscape back in 1994. They took X.509 as the starting point and adapted it to function with HTTP, a standard which was developed by the well-known Tim Berners-Lee at CERN a couple of years before that. RFC 5280 describes X.509. It has the funny title "Internet X.509 Public Key Infrastructure Certificate and Certificate Revocation List (CRL) Profile", but it is the current version.

Wikipedia says: "In cryptography, X.509 is an ITU-T standard for a public key infrastructure (PKI) and Privilege Management Infrastructure (PMI). X.509 specifies, amongst other things, standard formats for public key certificates, certificate revocation lists, attribute certificates, and a certification path validation algorithm."

So essentially X.509 just dictates what certificates are and how they can be issued, revoked and verified. Netscape's contribution was simply very important at the time, as they added encryption of the HTTP data being transferred. The contribution was so important that the invention is still used today, and nobody has proposed anything serious to replace it.

So, enough history... let's move on.

Encryption: The ciphers

It is also important to understand, that a certificate can be used to encrypt the transmission using various cipher suites. Run openssl ciphers -tls1 -v to get a cryptic list of all supported ones. Examples:

RC4-MD5 SSLv3 Kx=RSA Au=RSA Enc=RC4(128) Mac=MD5

DES-CBC3-SHA SSLv3 Kx=RSA Au=RSA Enc=3DES(168) Mac=SHA1

AES128-SHA SSLv3 Kx=RSA Au=RSA Enc=AES(128) Mac=SHA1

AES256-SHA256 TLSv1.2 Kx=RSA Au=RSA Enc=AES(256) Mac=SHA256

The actual list is very long, but not all of them are considered secure, see the chart in Wikipedia about some of the secure and insecure ciphers.

The columns are as follows:

- Name (or identifier)

- The protocol version which added the support

- Kx=: Key exchange method: RSA, DSA, Diffie-Hellman, etc.

- Au=: Authentication method: RSA, DSA, Diffie-Hellman or none

- Enc=: Encryption method with the number of bits used

- Mac=: Message digest algorithm, for example MD5, SHA1 or SHA256
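To make the columns easier to read, a line of that output can be split into labelled fields. This is just a small sketch: parse_cipher is a name I made up, and it assumes the whitespace-separated layout shown in the examples above.

```shell
#!/bin/sh
# parse_cipher is a hypothetical helper: it reads lines in the format printed by
# `openssl ciphers -v` on stdin and prints one "field=value" pair per line.
parse_cipher() {
    awk '{
        print "name=" $1
        print "protocol=" $2
        for (i = 3; i <= NF; i++) {
            eq = index($i, "=")
            if (eq == 0) {
                # a bare word without "=", such as the "export" marker on old suites
                print "flag=" $i
            } else {
                print tolower(substr($i, 1, eq - 1)) "=" substr($i, eq + 1)
            }
        }
    }'
}

echo 'AES256-SHA256 TLSv1.2 Kx=RSA Au=RSA Enc=AES(256) Mac=SHA256' | parse_cipher
# name=AES256-SHA256
# protocol=TLSv1.2
# kx=RSA
# au=RSA
# enc=AES(256)
# mac=SHA256
```

On a real system the input would come straight from the command mentioned above: openssl ciphers -tls1 -v | parse_cipher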

Even without counting any possible bugs, a number of flaws have been found in TLS/SSL, especially when using weak cipher suites. One of the recent and well-known ones is the BEAST attack, which can easily be mitigated by limiting the available cipher suites. If BEAST is successfully applied, it can decrypt cookies from request headers. Thus, it is possible for somebody eavesdropping to gain access to your account even if you're using HTTPS. So essentially you've been doing exactly what every instruction about safe browsing tells you to, you've been using HTTPS, but still your traffic can be seen by observing parties.

Please note that when talking about cipher suites, there are two different keys which have a number of bits: the server key and the encryption key. The server key is the server's public/private key pair; it can have, for example, 2048 bits in it. With RSA key exchange (Kx=RSA above) the server key is used only to transmit the client-generated "real" encryption key to the server; that encryption key can have, for example, 256 bits in it. The server key is rarely changed, whereas the encryption key can have a lifetime of a couple of seconds.

The easy way: Self-signed certificates

Ok. Now we have established that there is a need for certificates to encrypt transmission and to provide a (false) sense of verified identity about the other party. Why not take the path of least resistance? Why bother with the complex process of building a CA when it is very easy to issue self-signed certificates? A self-signed certificate provides the required encryption. Your browser may complain about an invalid certificate, but even that complaint can be suppressed.

Most instructions tell you to run some magical openssl-commands to get your self-signed certificate, but in reality there exists a machine for that: Self-Signed Certificate Generator. It doesn't get any easier than that.

My personal recommendation is to never use self-signed certificates for anything.

An excellent discussion about when they could be used can be found in Is it a bad practice to use self-signed SSL certificates?, What's the risk of using self-signed SSL? and When are self-signed certificates acceptable?

Practically a self-signed certificate is slipped into use like this:

- A lazy developer creates the self-signed certificate. He does it for himself and he chooses to ignore any complaints by his browser. Typical developers don't care about the browser complaints anyway, nor do they know how to suppress them.

- In the next phase the error occurs: the software goes to internal testing and a number of people start using the bad certificate. Then they add a couple of the customer's people or external testers.

- Now a bunch of people have been trained not to pay any attention to certificate errors. They carry that trait for many decades.

I've not seen any self-signed certificates in production, so typically a commercial certificate is eventually purchased. What puzzles me is why they don't set up their own CA, or get a free certificate from CAcert or StartSSL.

CA setup

How to actually set up your own CA with OpenSSL has been discussed extensively for ages. One of the best instructions I've seen is by Mr. Jamie Nguyen. His multi-part instructions are: How to act as your own certificate authority (CA), How to create an intermediate certificate authority (CA) and How to generate a certificate revocation list (CRL) and revoke certificates.

I'd also like to credit the following, as they helped me with setting up my own CA:

- Howto: Make Your Own Cert And Revocation List With OpenSSL

- OpenSSL CA and non CA certificate

- Generate a root CA cert for signing, and then a subject cert

Other non-minimal CAs which may be interesting, but I didn't review them:

The good way: The requirements for a proper CA

My requirements for a proper CA are:

- 2-level CA (This is what Jamie instructed):

- Root CA: For security reasons, make sure this is not in the same machine as intermediate CA. Typically this needs to be protected well and is considered off-line, except for the weekly revocation list updates.

- Intermediate: The active CA which actually issues all certificates

- Passes Windows certutil.exe -verify

- All certificates are secure and have enough bits in them

- A certificate requested for www.domain.com implicitly also has domain.com in it

- A certificate identifies the authority that issued it

- A certificate has location of revocation information in it

- CA certificates (both root and intermediate) identify themselves as CA certificates with proper usage in them

Verifying an issued certificate

In the requirements list there is only one really difficult thing: getting Windows certutil.exe to verify an issued certificate. The reason is that pretty much all of the other requirements must be met in order to achieve that.

A successful run is very long, but will look something like this (this is already a reduced version):

PS D:\> C:\Windows\System32\certutil.exe -verify -urlfetch .\test.certificate.crt

Issuer:

C=FI

Subject:

CN=test.certificate

C=FI

Cert Serial Number: 1003

-------- CERT_CHAIN_CONTEXT --------

ChainContext.dwInfoStatus = CERT_TRUST_HAS_PREFERRED_ISSUER (0x100)

ChainContext.dwRevocationFreshnessTime: 24 Days, 20 Hours, 35 Minutes, 29 Seconds

SimpleChain.dwInfoStatus = CERT_TRUST_HAS_PREFERRED_ISSUER (0x100)

SimpleChain.dwRevocationFreshnessTime: 24 Days, 20 Hours, 35 Minutes, 29 Seconds

CertContext[0][0]: dwInfoStatus=102 dwErrorStatus=0

Element.dwInfoStatus = CERT_TRUST_HAS_KEY_MATCH_ISSUER (0x2)

Element.dwInfoStatus = CERT_TRUST_HAS_PREFERRED_ISSUER (0x100)

---------------- Certificate AIA ----------------

Verified "Certificate (0)" Time: 0

---------------- Certificate CDP ----------------

Verified "Base CRL (1005)" Time: 0

CertContext[0][1]: dwInfoStatus=102 dwErrorStatus=0

Element.dwInfoStatus = CERT_TRUST_HAS_KEY_MATCH_ISSUER (0x2)

Element.dwInfoStatus = CERT_TRUST_HAS_PREFERRED_ISSUER (0x100)

---------------- Certificate AIA ----------------

Verified "Certificate (0)" Time: 0

---------------- Certificate CDP ----------------

Verified "Base CRL (1008)" Time: 0

---------------- Base CRL CDP ----------------

No URLs "None" Time: 0

---------------- Certificate OCSP ----------------

No URLs "None" Time: 0

--------------------------------

CRL 1006:

CertContext[0][2]: dwInfoStatus=10a dwErrorStatus=0

Element.dwInfoStatus = CERT_TRUST_HAS_KEY_MATCH_ISSUER (0x2)

Element.dwInfoStatus = CERT_TRUST_IS_SELF_SIGNED (0x8)

Element.dwInfoStatus = CERT_TRUST_HAS_PREFERRED_ISSUER (0x100)

---------------- Certificate AIA ----------------

No URLs "None" Time: 0

---------------- Certificate CDP ----------------

Verified "Base CRL (1008)" Time: 0

---------------- Certificate OCSP ----------------

No URLs "None" Time: 0

--------------------------------

------------------------------------

Verified Issuance Policies: None

Verified Application Policies:

1.3.6.1.5.5.7.3.1 Server Authentication

Cert is an End Entity certificate

Leaf certificate revocation check passed

CertUtil: -verify command completed successfully.

The tool runs the entire certification chain from leaf [0][0] into intermediate CA [0][1] and to root CA [0][2] and reads the information, does the URL fetching and confirms everything it sees, knows about and learns about. Your setup really needs to be up to par to do that!

NOTE:

Your CA root certificate must be loaded into the Windows machine account. The verify does not work properly if you install the root certificate into the user store. Something like this, run with administrator permissions, will fix the verify:

C:\Windows\System32\certutil.exe -enterprise -addstore root Root-CA.cer

You'll just need to download the certificate file Root-CA.cer (in PEM-format) as a prerequisite.

The setup

I'm not going to copy here what Jamie instructed, but after you've done the basic setup my work starts.

The key to achieving all my requirements is in the v3 extensions. RFC 5280 lists the following standard extensions: Authority Key Identifier, Subject Key Identifier, Key Usage, Certificate Policies, Policy Mappings, Subject Alternative Name, Issuer Alternative Name, Subject Directory Attributes, Basic Constraints, Name Constraints, Policy Constraints, Extended Key Usage, CRL Distribution Points, Inhibit anyPolicy and Freshest CRL (a.k.a. Delta CRL Distribution Point). The private internet extensions, Authority Information Access and Subject Information Access, are also worth noting. I highlighted the good ones.

I have my own Bash-script for approving a request on my intermediate CA. A fragment of it generates an appendix for openssl.cnf:

# NOTE: Used for web certificate approval

[ v3_web_cert ]

# PKIX recommendation.

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid:always,issuer

# PKIX recommendation.

basicConstraints=CA:FALSE

# Key usage

# @link http://www.openssl.org/docs/apps/x509v3_config.html

keyUsage = digitalSignature, keyEncipherment, keyAgreement

extendedKeyUsage = serverAuth

authorityInfoAccess = caIssuers;URI:http://ca.myown.com/Intermediate-CA.cer

crlDistributionPoints = URI:http://ca.myown.com/Intermediate-CA.crl

subjectAltName = @alt_names

[alt_names]

DNS.1 = ${SUBJECT}

DNS.2 = ${ALT_NAME}

After generation, it is used in the script like this:

openssl ca -config ${CA_ROOT}/openssl.cnf \

-keyfile ${CA_ROOT}/private/intermediate.key.pem \

-cert ${CA_ROOT}/certs/intermediate.cert.pem \

-extensions v3_web_cert -extfile cert.extensions.temp.cnf \

-policy policy_anything -notext -md sha1 \

-in Input_file.req
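The generation step itself could be sketched roughly like this. This is not my actual script, only a minimal illustration: the function name write_web_extensions is made up, and deriving the second name by stripping a leading "www." is an assumption about how the www.domain.com / domain.com requirement could be met.

```shell
#!/bin/sh
# write_web_extensions is a hypothetical helper. It writes the v3_web_cert
# section shown above into an extension file for `openssl ca -extfile`.
#   $1 = subject host name (for example www.domain.com)
#   $2 = output file (defaults to cert.extensions.temp.cnf)
write_web_extensions() (
    subject="$1"
    out="${2:-cert.extensions.temp.cnf}"
    alt_name="${subject#www.}"    # www.domain.com -> domain.com (assumption)
    {
        cat <<EOF
[ v3_web_cert ]
subjectKeyIdentifier=hash
authorityKeyIdentifier=keyid:always,issuer
basicConstraints=CA:FALSE
keyUsage = digitalSignature, keyEncipherment, keyAgreement
extendedKeyUsage = serverAuth
authorityInfoAccess = caIssuers;URI:http://ca.myown.com/Intermediate-CA.cer
crlDistributionPoints = URI:http://ca.myown.com/Intermediate-CA.crl
subjectAltName = @alt_names

[alt_names]
DNS.1 = ${subject}
EOF
        # Add the bare domain only when it differs from the subject.
        if [ "$alt_name" != "$subject" ]; then
            echo "DNS.2 = ${alt_name}"
        fi
    } > "$out"
)
```

Calling write_web_extensions www.domain.com would produce a cert.extensions.temp.cnf with both www.domain.com and domain.com in the [alt_names] section, ready to be passed to the openssl ca command above.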

On my root CA I have for intermediate CA certificate:

# Used for intermediate CA certificate approval

[ v3_int_ca ]

# PKIX recommendation.

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid:always,issuer

# PKIX recommendation.

basicConstraints = critical,CA:true

# Key usage: this is typical for a CA certificate

# @link http://www.openssl.org/docs/apps/x509v3_config.html

keyUsage = critical, keyCertSign, cRLSign

authorityInfoAccess = caIssuers;URI:http://ca.myown.com/Root-CA.cer

crlDistributionPoints = URI:http://ca.myown.com/Root-CA.crl

Also on root CA I have for the root certificate itself:

# Used for root certificate approval

[ v3_ca ]

# PKIX recommendation.

subjectKeyIdentifier=hash

authorityKeyIdentifier=keyid:always,issuer

# PKIX recommendation.

basicConstraints = critical,CA:true

# Key usage: this is typical for a CA root certificate

# @link http://www.openssl.org/docs/apps/x509v3_config.html

keyUsage = critical, digitalSignature, keyEncipherment, keyAgreement, keyCertSign, cRLSign

crlDistributionPoints = URI:http://ca.myown.com/Root-CA.crl

Notice how root CA certificate does not have AIA (Authority Information Access) URL in it. There simply is no authority above the root level. For intermediate CA there is and that is reflected in the issued certificate. The CRL distribution points (Certificate Revocation Lists) are really important, that's the basic difference between the "minimum" and properly done CAs.

Your next task is to actually make sure that the given URLs have the indicated files in them. If you didn't get it: you will need a web server which can be accessed by your web browser to retrieve the files. The revocation lists can be auto-generated daily or weekly, but each list has a limited life span. Out of the box, OpenSSL has default_crl_days= 30 in its .cnf-file, so with the defaults a month is the absolute maximum.
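Since an expired CRL breaks verification, it is worth checking how many days a published list has left. Here is a minimal sketch of such a check. The helper name crl_days_left is made up, it expects the line printed by openssl crl with the -nextupdate option, and it relies on GNU date for parsing.

```shell
#!/bin/sh
# crl_days_left is a hypothetical helper. It takes the line printed by
#   openssl crl -in Intermediate-CA.crl -noout -nextupdate
# (add -inform DER if the file is DER-encoded), for example
#   nextUpdate=Jan 26 12:00:00 2014 GMT
# and prints the number of whole days left. Requires GNU date (-d option).
#   $1 = the nextUpdate line
#   $2 = "now" as seconds since the epoch (optional, defaults to the current time)
crl_days_left() (
    next="${1#nextUpdate=}"
    now="${2:-$(date +%s)}"
    expires="$(date -u -d "$next" +%s)" || exit 1
    echo $(( (expires - now) / 86400 ))
)

# Typical use, assuming the CRL file is in the current directory:
#   crl_days_left "$(openssl crl -in Intermediate-CA.crl -noout -nextupdate)"
```

A cron job could raise an alert when the number drops below a few days, well before clients start rejecting the list.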

I'll compile the list of the 4 URLs here for clarity:

- Certificate locations:

- authorityInfoAccess = caIssuers;URI:http://ca.myown.com/Root-CA.cer

- authorityInfoAccess = caIssuers;URI:http://ca.myown.com/Intermediate-CA.cer

- Revocation list locations:

- crlDistributionPoints = URI:http://ca.myown.com/Root-CA.crl

- crlDistributionPoints = URI:http://ca.myown.com/Intermediate-CA.crl

For simplicity I'll keep the public files in a single location. My CRL generation script verifies the existing certificates and creates alerts if the old ones do not match the current ones. Perhaps somebody changed them, or I made a mistake myself.
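The comparison part of such a check can be very small. The following is only a sketch of the idea, not my actual script: check_published is a made-up name, and it assumes the published copy has already been fetched from the web server into a local file.

```shell
#!/bin/sh
# check_published is a hypothetical helper: it compares the file the CA
# currently has with a copy fetched from the public web server.
#   $1 = current file on the CA
#   $2 = fetched copy of the published file
# Returns non-zero and writes an alert to stderr when they differ.
check_published() (
    current="$1"
    published="$2"
    if cmp -s "$current" "$published"; then
        echo "OK: $published matches"
    else
        echo "ALERT: $published differs from $current" >&2
        exit 1
    fi
)
```

A byte-by-byte comparison is enough here, because the published certificate files are not supposed to change at all between runs.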

Summary

The requirements have been met:

- 2-level CA

- Pass!

- Passes Windows certutil.exe -verify

- Pass!

- All certificates are secure and have enough bits in them

- Pass!

- The server keys are 2048 bits minimum

- The web servers where the certificates are used have

SSLCipherSuite ECDHE-RSA-AES128-SHA256:AES128-GCM-SHA256:RC4:HIGH:!MD5:!aNULL:!EDH

- A certificate requested for www.domain.com also has domain.com in it

- Pass!

- A script creates the configuration block dynamically to include the subjectAltName-list

- A certificate identifies the authority that issued it

- Pass!

- All certificates have the authorityInfoAccess in them

- A certificate has location of revocation information in it

- Pass!

- Intermediate and issued certificates have crlDistributionPoints in them

- CA certificates (both root and intermediate) identify themselves as CA certificates with proper usage in them

- Pass!

- CA certificates have basicConstraints=CA:TRUE in them
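The SSLCipherSuite string in the list above is an OpenSSL cipher list: entries are separated by colons, and an entry starting with ! is permanently excluded. A small sketch to break such a string apart is below. The name explain_cipher_list is made up, and it handles only plain names and the ! prefix, not the - and + operators.

```shell
#!/bin/sh
# explain_cipher_list is a hypothetical helper: it splits a cipher list
# string on ":" and labels each entry as included or excluded.
explain_cipher_list() {
    printf '%s\n' "$1" | tr ':' '\n' | while IFS= read -r token; do
        case "$token" in
            '!'*) echo "exclude ${token#?}" ;;   # drop the leading "!"
            *)    echo "include $token" ;;
        esac
    done
}

explain_cipher_list 'ECDHE-RSA-AES128-SHA256:AES128-GCM-SHA256:RC4:HIGH:!MD5:!aNULL:!EDH'
# include ECDHE-RSA-AES128-SHA256
# include AES128-GCM-SHA256
# include RC4
# include HIGH
# exclude MD5
# exclude aNULL
# exclude EDH
```

To see which actual suites the string expands to on a given system, it can be passed to openssl ciphers -v in single quotes.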

That's it! Feel free to ask for any details I forgot to mention here.

My experience is that only 1% of system admins have even a rough understanding of this entire issue. My favorite hobbies of cryptography, VPN-tunnels and X.509 certificates don't seem to light anybody else's fire like they do mine.

HTTP Secure: Is Internet really broken?

Wednesday, December 25. 2013

What is HTTP Secure

HTTP secure (or HTTPS) is HTTP + encryption. For the purpose of cryptographic communications between web servers and people accessing them, we use X.509 certificates issued by a Certificate Authority.

In cryptography, a certificate authority or certification authority (CA), is an entity that issues digital certificates.

— Wikipedia

Using HTTPS and assuming it's safe to do so

One of the most famous HTTPS critics is Mr. Harri Hursti. He is a pretty famous computer security expert and has successfully hacked a number of things. He currently works in his own company, SafelyLocked.

#SSL is so insecure it’s only worth a post-it note from the NSA.

- Harri Hursti in Slush 2013 as relayed by Twitter

In an interview with the Finnish newspaper Talouselämä he said:

SSL, one of the cornerstones in network security is broken and it cannot be fixed. It cannot be trusted.

That's a stiff claim to make.

What HTTPS certificates are for

I'm quoting a website http://www.sslshopper.com/article-when-are-self-signed-certificates-acceptable.html here:

SSL certificates provide one thing, and one thing only: Encryption between the two ends using the certificate.

They do not, and never been able to, provide any verification of who is on either end. This is because literally one second after they are issued, regardless of the level of effort that goes into validating who is doing the buying, someone else can be in control of the certificate, legitimately or otherwise.

Now, I understand perfectly well that Verisign and its brethren have made a huge industry out of scamming consumers into thinking that identification is indeed something that a certificate provides; but that is marketing illusion and nothing more. Hokum and hand-waving.

It all comes down to, can you determine that you are using the same crypto key that the server is? The reason for signing certificates and the like is to try to detect when you are being hit with a man-in-the-middle attack. In a nutshell, that attack is when you try to open a connection to your 'known' IP address, say, 123.45.6.7. Even though you are connecting to a 'known' IP address of a server you trust, doesn't mean you can necessarily trust traffic from that IP address. Why not? Because the Internet works by passing data from router to router until your data gets to it's destination.

Every router in between is an opportunity for malicious code on that router to re-write your packet, and you'd never know the difference, unless you have some way to verify that the packet is from the trusted server.

The point of a CA-signed certificate is to give slightly stronger verification that you are actually using the key that belongs to the server you are trying to connect to.

Long quote, huh. The short version of that is: do not assume that if somebody has been verified as somebody or something, they really are. You can safely assume that the traffic between you and that party is encrypted, but you really don't know who has the key to decrypt it.

If we ignore the dirty details of encryption keys and their exchange during encrypted request initiation and concentrate only on the encryption cipher suite, not all of the cipher suites used are secure enough. There exist pretty good ones, but the worst ones are pretty crappy and cannot be trusted at all.

Argh! Internet is broken!

To lend credibility to Mr. Hursti's claims I'll pick the cases of Comodo and DigiNotar. DigiNotar didn't survive the crack, their PR failed so miserably that they lost all of their business.

On March 15th 2011 an affiliate company was used to create a new user account into Comodo's system. That newly created account was used to issue a number of certificates: mail.google.com, www.google.com, login.yahoo.com, login.skype.com, addons.mozilla.org and login.live.com. The gain of having those domains is immediately clear. If combined with some other type of attack (most likely DNS cache poisoning) a victim can be lured into a rogue site for Google, Yahoo, Microsoft and Mozilla without them ever knowing that all of their data is compromised. Some of the possible attack vectors include injecting rogue software updates to victims' computers.

On July 1st 2011 DigiNotar's CA was compromised by allegedly Iranian crackers. The company didn't even notice the crack until the 19th of July. By then a valid certificate issued to Google was already in the wild. Again, these false certificates were combined with some other type of security flaw (most likely DNS poisoning) and victims were redirected to a fake "Google" site without them ever knowing that all the passwords and transmitted data were leaked.

Both of those cases scream: DON'T use certificates for verification. But in reality Microsoft Windows, Skype and Mozilla Firefox are built so that if a certificate on the other end is verified by the connecting client, all is good. The remote party is valid, trusted and verified. Remember from earlier: "They do not, and never been able to, provide any verification of who is on either end". Still, a lot of software is written to misuse certificates like the examples above do.

About trust in Internet

From the above incidents we learn the following: Who do you trust in Internet? Who would you consider a trustworthy party, so that you'd assume that what the other party says or does is so? Specifically in Internet context, if a party identifies themselves, do you trust that they are who they claim to be? Or if a party says, that they'll take your credit card details and agree to deliver goods which you purchased back to you, do you trust that they'll keep their promise?

Lots of questions, no answers. Trust is a complicated thing after all. Most people would say that they trust major service providers like Google or Microsoft or Amazon, but frown upon smaller ones with no reputable history to back up their actions. The big companies allegedly work with the NSA and gladly deliver your details and behavior for a US Government agency to study whether you're about to commit a serious crime. So you choose to trust them, right?

No.

Well then, are smaller parties any safer? Nobody really knows. They can be, or then again not.

Regular users really don't realize that the vendor of their favorite web browser and operating system has chosen, on their behalf as a user of the software, to trust a number of other parties. Here is a glimpse of the list of trusted certification authorities from Mozilla Firefox:

I personally don't trust companies like AddTrust AB or America Online Inc. or CNNIC or ... the list goes on. I don't know who they are, what they do or why they should be worth my trust. All I know for a fact is that they paid Mozilla money to include their root certificate in Mozilla's software product to establish implicit trust between them and me, when I access a website they chose to issue a certificate to. That's a tall order!

There really is no reliable way of identifying the other party you're talking or communicating with in the Internet. Many times I wish there was, but since the dawn of time, Internet has been designed to be an anonymous place and that's the way it stays until drastic changes are introduced into it.

Conclusions

No, the Internet is not broken, despite what Mr. Hursti claims. The Internet is much more than HTTP or encryption or HTTP + encryption. For the purpose of securing HTTP we just need some secure method of identifying the other party. Critical applications would be on-line banking and on-line shopping. Optimally all traffic would be encrypted all the time (see HTTP 2.0 details), but that is overkill after all. Encryption adds extra bytes to transfers and, for example, most large files don't contain any information that would need to be hidden. When moving credit card data or passwords or such, the amount of bytes transferred is typically small in comparison to downloading system updates or install images.

Also I'd like to point out that keeping a connection secure is mostly about the keys: keeping the keys safe and exchanging keys safely. The actual ongoing encryption during a session can be thought to be secure enough to be trusted. However, a great deal of thought must be put into allowing or disallowing a cipher suite. And still, all that is based on current knowledge. Once in a while some really smart people find out that a cipher that was thought to be secure isn't, because of a flaw or a mathematical breakthrough which renders a cipher suite not secure enough to be used.
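As an illustration of what "allowing or disallowing a cipher suite" looks like in practice, here is a small Python sketch for the client side. The function name `strict_client_context` and the chosen policy (TLS 1.2 or newer, ECDHE key exchange with AES-GCM) are my assumptions for the example, not a recommendation that will stay valid forever. That is exactly the point made above.

```python
import ssl


def strict_client_context():
    """Client context limited to TLS 1.2+ and ECDHE key exchange with AES-GCM."""
    context = ssl.create_default_context()
    context.minimum_version = ssl.TLSVersion.TLSv1_2
    # OpenSSL cipher string: ECDHE key exchange combined with AES-GCM.
    # TLS 1.3 suites are configured separately by OpenSSL and stay enabled.
    context.set_ciphers("ECDHE+AESGCM")
    return context


if __name__ == "__main__":
    # Show which suites survive the policy on this machine.
    for cipher in strict_client_context().get_ciphers():
        print(cipher["protocol"], cipher["name"])
```

When a suite falls out of favor, the policy string is the only thing that needs to change.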

We need encrypted HTTP but not the way they implemented it in 1994. It is not the task of Verisign (or any other CA-issuer) to say: "The other party has been verified by us, they are who they claim to be". Also there must be room for longer and longer keys and new cipher suites to keep the secure Internet reliable.

Nginx with LDAP-authentication

Monday, December 23. 2013

I've been a fan of Nginx for a couple of years. It performs so much better than its main competitor, Apache HTTP Server. On the negative side, Nginx does not have all the bells and whistles of software which has existed since the dawn of the Internet.

So I have to do a lot more myself to get the gain. I package my own Nginx RPM and have modified a couple of the add-on modules. My fork of the Nginx LDAP authentication module can be found at https://github.com/HQJaTu/nginx-auth-ldap.

It adds the following functionality to Valery's version:

- per-location authentication requirements, without defining the same server again for a different authorization requirement

  - His version handles different requirements by defining new servers.

  - They are not actual new servers; the same server is just using a different authorization requirement for an Nginx location.

- case-sensitive user accounts, just like all other web servers have

  - One of the services in my Nginx is Trac. It works like any other *nix software: user accounts are case sensitive.

  - However, in LDAP pretty much nothing is. The default schema defines most things as case insensitive.

  - The difference must be compensated for during authentication into Nginx. That's why I added the :caseExactMatch: into the LDAP search filter.

- LDAP users can be specified with UID or DN

  - In Apache, a required user is typically specified as require user admin.

  - In the LDAP-oriented approach the module requires users to be specified as a DN (for the non-LDAP people, a DN is a unique name for an entry in LDAP).

  - LDAP does have the UID (user identifier), so in my version it is also a valid requirement.
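To show what the :caseExactMatch: part does, here is a minimal Python sketch that builds such an extensible-match filter. It is not the code from the module (that one is written in C), and the exact filter the module sends is not reproduced here. The function names and the default `uid` attribute are assumptions made for the illustration.

```python
def escape_filter_value(value: str) -> str:
    """Escape the characters that are special in an LDAP search filter."""
    replacements = {
        "\\": r"\5c",
        "*": r"\2a",
        "(": r"\28",
        ")": r"\29",
        "\x00": r"\00",
    }
    return "".join(replacements.get(ch, ch) for ch in value)


def case_exact_uid_filter(uid: str, attribute: str = "uid") -> str:
    """Build an extensible-match filter that compares case-sensitively."""
    # Syntax: (attribute:matchingRule:=value)
    return f"({attribute}:caseExactMatch:={escape_filter_value(uid)})"


if __name__ == "__main__":
    print(case_exact_uid_filter("Admin"))
```

With a plain (uid=admin) filter a typical directory would also match Admin and ADMIN. With the matching rule spelled out, only the exact spelling matches.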

IMHO those changes make the authentication much, much more useful.

Thanks Valery for the original version!

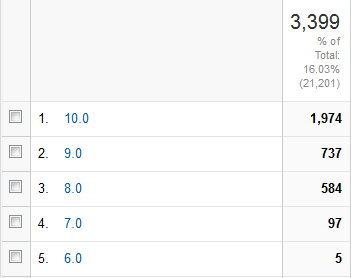

Downgrading Internet Explorer 9 into 8 on Windows 7

Wednesday, December 18. 2013

That should be an easy task, right? You guessed it. If I'm writing about it, it isn't. Once in a while I still test with a real IE8. The IE10 and IE11 emulators should be good enough, but they aren't. Here are the stats from this blog:

People seem to run with IE8 a lot. They shouldn't but ... they do.

Here is what I did:

- List of Windows updates installed on the computer:

- Managed to find Internet Explorer 9 in it (on Windows 7, that's pretty normal):

- Un-install starts:

- Yep. It took a while and hung. Darn!

- I waited for 20 minutes and rebooted the hung piece of ...

- Reboot did some mopping up and here is the result. No IE anywhere:

- Guess who cannot re-install it. On a normal installation it is listed in Windows features, like this:

In my case, no:

No amount of reboots, running sfc.exe or anything I can think of will fix this. This is what I already tried:

- Attempt fix with Windows Resource Checker:

PS C:\Windows\system32> sfc /scannow

Beginning system scan. This process will take some time.

Beginning verification phase of system scan.

Verification 100% complete.

Windows Resource Protection did not find any integrity violations.

It simply fails to restore the files, because all the bookkeeping says that IE8 shouldn't be there!

- Let's just download the installation package and re-install manually. Download Center - Internet Explorer 8

- Ok, we're not going to do that, because the IE 8 installation package for Windows 7 does not exist. The reason is very simple: Win 7 came with IE8. It is an integral part of the OS. You simply cannot run Windows 7 without some version of IE. No installation packages necessary, right? Internet Explorer 8 for Windows 7 is not available for download

- Right then. I took the Windows Vista package and ran the installation anyway. All the compatibility modes and such yield the same result. Internet Explorer 8 is not supported on this operating system

- Re-installation instructions, part 1: Reinstalling IE8 on Windows 7. Not much of a help. The

- Re-installation instructions, part 2: How to Reinstall Internet Explorer in Windows 7 and Vista. No help there either.

- Registry tweaking into HKEY_LOCAL_MACHINE\SOFTWARE\Microsoft\Windows NT\CurrentVersion\Winlogon\GPExtensions to see if {7B849a69-220F-451E-B3FE-2CB811AF94AE} and {CF7639F3-ABA2-41DB-97F2-81E2C5DBFC5D} are still there as suggested by How to Uninstall IE8. Nope. No help with that either. That article mentions "European Windows 7". What could the difference be?

- Perhaps re-installing Service Pack 1 would help? But well... in Windows 7, SP1 cannot be re-installed like it was possible in Windows XP. That actually did fix a lot of operating systems gone bad in the XP era.

The general consensus seems to be that you simply cannot lose IE from Windows 7. Magically I did.

Just based on the Wikipedia article about removing IE, it is possible.

One of the symptoms I'm currently having is Windows Explorer Refuses to Open Folders In Same Window. Some of the necessary DLLs are not there and Windows Explorer behaves funnily.

I don't know what to attempt next.

Barcode weirdness: Exactly the same, but different

Tuesday, December 17. 2013

I was transferring an existing production web-application to a new server. There was a slight gap in the operating system version and I ended up refactoring a lot of the code to compensate for the newer libraries in use. One of the issues I bumped into and spent a while fixing was barcode printing. It was a pretty standard Code 39 barcode for the shipping manifest.

The old code was using a commercial proprietary TrueType font file. I don't know when or where it was purchased by my client, but it was in production use in the old system. Looks like such fonts sell for $99. Anyway, the new library had barcode printing in it, so I chose to use it.

Mistake. It does somewhat work. It prints a perfectly valid barcode. Example:

![]()

The issue is, that it does not read. I had a couple of readers to test with, and even the really expensive laser-one didn't register the code very well. So I had to re-write the printing code to simulate the old functionality. The idea is to read the TTF-font, convert it into some internal form and output a ready-to-go file into a data directory, so that conversion is needed only once. After that the library can read the font and produce nice looking graphics including barcodes. Here is an example of the TTF-style:

![]()

The difference is amazing! Any reader reads that easily. Both barcodes contain the same data in them, but they look so much different. Wow!

Perhaps one day a barcode wizard will explain the difference between them to me.
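For anybody going the font route, one detail is worth spelling out. With a typical Code 39 font the data is not printed as-is: the start and stop character is typed as an asterisk on both sides, and only 43 characters can be encoded. Below is a minimal Python sketch of that preparation step. Whether my client's font follows these conventions in every detail (the space character in particular varies between fonts) is an assumption, and the function names are made up for the example.

```python
# The 43 encodable characters, in the order used for the check character.
CODE39_CHARSET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ-. $/+%"


def code39_check_char(data: str) -> str:
    """Optional modulo-43 check character for Code 39 data."""
    total = sum(CODE39_CHARSET.index(ch) for ch in data)
    return CODE39_CHARSET[total % 43]


def code39_font_text(data: str, with_check: bool = False) -> str:
    """Return the string to render with a Code 39 font: *DATA*."""
    data = data.upper()
    invalid = sorted(set(data) - set(CODE39_CHARSET))
    if invalid:
        raise ValueError(f"not encodable in Code 39: {invalid}")
    if with_check:
        data += code39_check_char(data)
    # The asterisks become the start and stop patterns of the barcode.
    return f"*{data}*"


if __name__ == "__main__":
    print(code39_font_text("abc-123"))
```

The check character is optional in Code 39, so leave it off unless the reader at the other end expects it.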

Worst mobile app ever? Danske Bank's mobile pay

Monday, December 16. 2013

Danske has a huge ad campaign here in Finland about the new mobile payment system. You can send and receive money simply by using a phone number. I'm not going to delve into the security issues of such a system today, because what could possibly go wrong!

Ok, I'll give them that they have limited the damage by building in a cap on the amount you can transfer: 250,- € per day and 15.000,- € per year. So, in any unfortunate event people are not going to lose much (if 250,- € is all you have, then ... it's another story).

Anyway. I got the app from the App Store and started their registration process. It's long. It's tedious. It'll drive you crazy. Looks like they don't want your business.

The information they ask during registration:

- First name, last name

- E-mail address

- Phone number

- Credit card number

- IBAN-number of your bank account

Not a problem. I have all of those. But guess who has all the information in the same phone where you're supposed to enter the data. Typically that's not a problem. A simple task switch to the password vault software, copy the numbers and back to registration.

Now the idiots who designed and wrote the app expect everybody to know and type long series of input data. Nobody ever does that! That's what mobile computers are for: they store data and make it possible to copy and paste it between apps. But these design geniuses chose not to use anything standard. If you switch apps during registration, the entire process needs to be started over. Nice!

Really nice thinking. The paste won't work anyway, so ...

This is definitely a good example of how not to write apps.

Getting the worst of Windows 7 - Install updates automatically (recommended)

Friday, December 13. 2013

Why doesn't my setting of NOT installing important updates automatically stick? Every once in a while it seems to pop itself back to the stupid position and does all kinds of nasty things in the middle of the night. From now on I'll keep a counter of how many times I have to go there to reset the setting back to the one I, as system administrator, chose.

All this rant is for a simple reason: I've lost data and precious working time trying to recover it. This morning I woke up and, while roaming in the house in a semi-conscious state attempting to regain a thought, I noticed a couple of LEDs glimmering in a place where there shouldn't be any. My desktop PC was on and it shouldn't have been. On closer inspection, at 3 am it had chosen to un-sleep for the sake of installing Windows updates. This is yet another stupid thing for a computer to be doing (see my post about OS X waking up).

The bottom line is that the good people (fucking idiots) at Microsoft don't respect my decisions. They choose to force feed me theirs based on the assumption that I accidentally chose not to do a trivial system administration task automatically. I didn't. I don't want to lose my settings, windows, documents, the list goes on.

The only real option for me would be to set up a Windows Domain. In Active Directory there would be a possibility of fixing the setting and making it un-changeable. I just don't want to do it for a couple of computers. Idiots!

Windows 8.1 upgrade and Media Center Pack

Wednesday, December 11. 2013

Earlier I wrote about upgrading my Windows 8 into Windows 8.1. At the time I didn't realize it, but the upgrade lost my Media Center Pack.

I only noticed when I needed to play a DVD on the laptop and found that the OS is not capable of doing that anymore. When Windows 8 was released it didn't have much in the way of media capabilities. To fix that, a couple of months after the release Microsoft distributed Media Center Pack keys for free to anybody who wanted to request one. I got a couple of the keys and installed one into my laptop.

Anyway, the 8.1 upgrade forgot to mention that it would downgrade the installation back to non-media capable. That should be an easy fix, right?

Wrong!

After the 8.1 upgrade was completed, I went to "Add Features to Windows", said that I already had a key, but Windows told me that nope, "Key won't work". Nice.

At the time I had plenty of other things to take care of and the media-issue was silently forgotten. Now that I needed the feature, again I went to add features, and hey presto! It said, that the key was ok. For a couple of minutes Windows did something magical and ended the installation with "Something went wrong" type of message. The option to add features was gone at that point, so I really didn't know what to do.

The natural thing to do next is to go googling. I found an article at the My Digital Life forums, where somebody complained about having the same issue. The classic remedy for everything ever since Windows 1.0 has been a reboot. Windows sure likes to reboot.

I did that and guess what, during shutdown there was an upgrade installing. The upgrade completed after the boot and there it was, the Windows 8.1 had Media Center Pack installed. Everything worked, and that was that, until ...

Then came the 2nd Tuesday, which traditionally is the day for Microsoft security updates. I installed them and a reboot was requested. My Windows 8.1 started disliking me after that. The first thing it did after the reboot was to complain about Windows not being activated! Aw, come on! I punched in the Windows 8 key and it didn't work. Then I typed the Media Center Pack key and that helped. Nice. Luckily Windows 8 activation is in the stupid full-screen mode, so it is really easy to copy/paste a license key. NOT!

The bottom line is: Media Center Pack is really poorly handled. I'm pretty sure nobody at Microsoft's Windows 8 team ever installed the MCP. This is the typical case of end users doing all the testing. Darn!

Younited cloud storage

Monday, December 9. 2013

I finally got my account into younited. It is a cloud storage service by F-Secure, the Finnish security company. They boast that it is secure, can be trusted and that the data is hosted in Finland, out of reach of those agencies with three-letter acronyms.

The service offers you 10 GiB of cloud storage and plenty of clients for it. Currently you can get in only by invite. Windows-client looks like this:

Looks nice, but ...

I've been using Wuala for a long time. Its functionality is pretty much the same. You put your files into a secure cloud and can access them via a number of clients. The UI on Wuala works, the transfers are secure, the data is hosted on Amazon in Germany and the company is from Switzerland, owned by the French company LaCie. When compared with Younited, there is a huge difference and it is easy to see which one of the services has been around for years and which one is in open beta.

Given all the trustworthiness and security and all, the bad news is: in its current state Younited is completely useless. It would work if you have one picture, one MP3 and one Word document to store. The only ideology for storing items is to sync them. I don't want to do only that! I want to create a folder and a subfolder under it and store a folder full of files into that! I need my client storage and cloud storage to be separate entities. Sync is good only for a handful of things, but in F-Secure's mind that's the only way to go. They are in beta, but it would be good for them to start listening to their users.

If only Wuala would stop using Java in their clients, I'd stick with them.