Led Lenser K2 vs. MagLite Solitaire LED

Saturday, February 8. 2014

A while ago a friend sent me a link to the Jamie and Adam Tested YouTube channel. I'm a fan of Mythbusters, so he knew that I'd love their stuff. One of the videos they have there is Inside Adam Savage's Cave: Hacking a Flashlight for Adam's EDC. So, I felt that I should blog about flashlights too.

Last year my old and trustworthy MagLite Solitaire broke down after serving me well for 18 years, and I had to get a replacement. My old Solitaire became unfixable due to some sort of stress in the inside plastic parts. They broke into a number of new pieces that didn't fit together anymore. Apparently my key chain with a number of keys on it caused stress to the flashlight's guts.

In the above video Adam is doing a hack on his JETBeam. Being a Leatherman man, I went for a Led Lenser (apparently they are owned by the same company), model K2 to be specific. However, it turned out to be a mistake. The LED is bright, it really is, and the flashlight is really tiny, but its aluminum body is not built to be hung on a key chain and stuffed into a pocket over and over again. It broke after 8 months of "usage". Actually I didn't use the lamp that much, but ... it broke. Aw, come on! My previous lamp lasted for 18 years!

Here is a pic of the broken Led Lenser K2 (the short one) next to my new flashlight:

Thankfully my favorite flashlight company is back! I don't know what MagLite did for 15 years or so, but they certainly lost the market leader position by not releasing any new products for a very, very long time. So... after failing with Led Lenser I went back to MagLite. Their new LED products are really good, and I got one of their new releases, a Solitaire LED. I'm hoping it lasts a minimum of 18 years!

Advanced mod_rewrite: FastCGI Ruby on Rails /w HTTPS

Friday, February 7. 2014

mod_rewrite comes in handy on a number of occasions, but when the rewrite deviates from the most trivial things, understanding exactly how the rules are processed is very difficult. The documentation is adequate, but the information is spread around a number of configuration directives, and it is a challenging task to put it all together.

RewriteRule Order of processing

Apache has the following levels of configuration from top to bottom:

- Server level

- Virtual host level

- Directory / Location level

- Filesystem level (.htaccess)

Typically configuration directives have effect from bottom to top. A lower level directive overrides any upper level directive. This is also the case with mod_rewrite. A RewriteRule in a .htaccess file is processed first, and any rules on upper layers follow in reverse order of the level. See the documentation of the RewriteOptions directive, it clearly says: "Rules inherited from the parent scope are applied after rules specified in the child scope". The rules on the same level are executed from top to bottom in a file. You can think of all the Include directives as combining everything into one large configuration file, so the order can be determined quite easily.

However, this order of processing rather surprisingly contradicts the most effective order of execution. The technical details documentation of mod_rewrite says:

Unbelievably mod_rewrite provides URL manipulations in per-directory context, i.e., within .htaccess files, although these are reached a very long time after the URLs have been translated to filenames. It has to be this way because .htaccess files live in the filesystem, so processing has already reached this stage. In other words: According to the API phases at this time it is too late for any URL manipulations.

This results in a looping approach for any .htaccess rewrite rules. The documentation of RewriteRule Directive PT|passthrough says:

The use of the [PT] flag causes the result of the RewriteRule to be passed back through URL mapping, so that location-based mappings, such as Alias, Redirect, or ScriptAlias, for example, might have a chance to take effect.

and

The PT flag implies the L flag: rewriting will be stopped in order to pass the request to the next phase of processing.

Note that the PT flag is implied in per-directory contexts such as <Directory> sections or in .htaccess files.

What that means:

- The L-flag only ends the current pass through the rules. In a .htaccess file it does not stop RewriteRule processing, because the rewritten URL is fed back in and the rules are run again.

- All RewriteRules, yes all of them, are matched over and over again in a .htaccess file. That results in an endless loop if they keep matching. RewriteCond should be used to stop that.

- A RewriteRule with the R-flag pointing to the same directory just starts another round. The R-flag can be used to exit the looping by redirecting to some other directory.

- Outside the .htaccess context, the L-flag works as expected and the looping does not happen.

So, the moral of all this is that any rewriting done on the .htaccess level performs really badly and will cause unexpected results in the form of looping.
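The looping can be illustrated with a toy model. The following is a minimal Python sketch, not Apache's actual algorithm: it assumes that after any pass that changes the path, the whole rule set is run again from the top, and it gives up after ten passes as a stand-in for Apache's limit on internal redirects. The function name run_htaccess and the (guard, pattern, substitution) tuple layout are made up for this illustration.

```python
import re

# Assumed stand-in for Apache's internal redirect limit.
MAX_PASSES = 10


def run_htaccess(path, rules, max_passes=MAX_PASSES):
    """Re-run all rules until one full pass leaves the path unchanged.

    rules is a list of (guard, pattern, substitution) tuples. guard is
    None or a regex that must NOT match for the rule to apply, which
    plays the role of a negated RewriteCond.
    Returns (final_path, passes_used, gave_up).
    """
    passes = 0
    while passes < max_passes:
        passes += 1
        before = path
        for guard, pattern, substitution in rules:
            if guard is not None and re.search(guard, path):
                continue  # the condition blocks this rule
            match = re.search(pattern, path)
            if match:
                # As in mod_rewrite, the substitution replaces the whole path.
                path = match.expand(substitution)
        if path == before:
            return path, passes, False
    return path, passes, True


if __name__ == "__main__":
    # Unguarded front controller rule: keeps matching its own output.
    unguarded = [(None, r"^(.*)$", r"dispatch.fcgi/\1")]
    print(run_htaccess("status", unguarded))

    # Same rule guarded by a condition: settles on the second pass.
    guarded = [(r"^dispatch\.fcgi", r"^(.*)$", r"dispatch.fcgi/\1")]
    print(run_htaccess("status", guarded))
```

In this model the unguarded rule keeps prepending dispatch.fcgi/ until the pass limit is hit, while the guarded rule rewrites once and then leaves the path alone.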

Case study: Ruby on Rails application

The requirements are:

- The application is using Ruby on Rails

- Interface for Ruby is mod_fcgid to implement FastCGI

- All non-HTTPS requests should be redirected to HTTPS for security reasons

- There is one exception for that rule, a legacy entry point for status updates must not be forced to HTTPS

- The legacy entry point is using Basic HTTP authentication. It does not work with FastCGI very well.

That does not sound like much, but in practice it is.

Implementation 1 - failure

To get Ruby on Rails application running via FastCGI, there are plenty of examples and other information. Something like this in .htaccess will do the trick:

RewriteCond %{REQUEST_FILENAME} !-f

RewriteRule ^(.*)$ /dispatch.fcgi/$1 [QSA]

The dispatch.fcgi comes with the RoR-application and mod_rewrite is only needed to make the Front Controller pattern required by the application framework to function properly.

To get the FastCGI (via mod_fcgid) working a simple AddHandler fastcgi-script .fcgi will do the trick.

With these, the application does work. Then there is the HTTPS part. The hosting setup allows editing parts of the virtual host template, so I added my own section of configuration; the rest of the file cannot be changed:

<VirtualHost _default_:80 >

<IfModule mod_rewrite.c>

RewriteEngine On

RewriteCond %{HTTP_HOST} ^www.my.service$ [NC]

RewriteRule ^(.*)$ http://my.service$1 [L,R=301]

</IfModule>

RewriteCond %{HTTPS} !=on

RewriteCond %{REQUEST_URI} !^/status/update

RewriteRule ^(.*)$ https://%{HTTP_HOST}$1 [R=301,QSA,L]

</VirtualHost>

The .htaccess file was taken from RoR-application:

# Rule 1:

# Empty request

RewriteRule ^$ index.html [QSA]

# Rule 2:

# Append .html to the end.

RewriteRule ^([^.]+)$ $1.html [QSA]

# Rule 3:

# All non-files are processed by Ruby-on-Rails

RewriteCond %{REQUEST_FILENAME} !-f

RewriteRule ^(.*)$ /dispatch.fcgi/$1 [QSA]

It failed, mainly because the HTTPS rewriting was done too late. There was a lot of repetition in the replaced URLs, and the HTTPS redirect was the last thing done after /dispatch.fcgi/, so the result looked rather funny and not even close to what I was hoping for.

Implementation 2 - success

After the failure I started really studying how the rewrite-mechanism works.

The first thing I did was to move the HTTPS redirect out of the virtual host configuration to the not-so-well-performing .htaccess level. The next thing was to get rid of the loop-added dispatch.fcgi/dispatch.fcgi/dispatch.fcgi addition. During testing I noticed that I hadn't accounted for the Basic authentication in any way.

The resulting .htaccess file is here:

# Rule 0:

# All requests should be HTTPS-encrypted,

# except: message reading and internal RoR-processing

RewriteCond %{HTTPS} !=on

RewriteCond %{REQUEST_URI} !^/status/update

RewriteCond %{REQUEST_URI} !^/dispatch.fcgi

RewriteRule ^(.*)$ https://%{HTTP_HOST}/$1 [R=301,QSA,skip=4]

# Rule 0.1

# Make sure that any HTTP Basic authorization is transferred to FastCGI env

RewriteRule .* - [E=HTTP_AUTHORIZATION:%{HTTP:Authorization}]

# Rule 1:

# Empty request

RewriteRule ^$ index.html [QSA,skip=1]

# Rule 2:

# Append .html to the end, but don't allow this thing to loop multiple times.

RewriteCond %{REQUEST_URI} !\.html$

RewriteRule ^([^.]+)$ $1.html [QSA]

# Rule 3:

# All non-files are processed by Ruby-on-Rails

RewriteCond %{REQUEST_FILENAME} !-f

RewriteCond %{REQUEST_URI} !/dispatch.fcgi

RewriteRule ^(.*)$ /dispatch.fcgi/$1 [QSA]

Now it fills all my requirements and it works!

Testing the thing

To develop the thing and make sure all the RewriteRules worked as expected and didn't interfere with each other in a bad way, I had to take a test-driven approach. I created a set of "unit" tests in the form of manually executed wget requests. There was no automation, just simple eyeballing of the results. My tests were:

- Test the index-page, must redirect to HTTPS:

- wget http://my.service/

- Test the index-page, no redirects, must display the page:

- wget https://my.service/

- Test the legacy entry point, must not redirect to HTTPS:

- curl --user test:password http://my.service/status/update

- Test an inner page, must redirect to HTTPS of the same page:

- Test an inner page, no redirects, must return the page:

Those tests cover all the functionality defined in the above .htaccess-file.
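The eyeballing could be automated with a few lines of Python. This is only a sketch under assumptions: it uses the my.service host name from the examples above, expects a 301 for every forced redirect, and leaves out the inner-page tests because their URLs are not listed here. The names fetch_without_redirects, check and CASES are invented for this example.

```python
import http.client
from urllib.parse import urlsplit

REDIRECT_CODES = (301, 302, 303, 307, 308)


def fetch_without_redirects(url, timeout=10):
    """Do one GET and return (status, Location header or None)."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    try:
        conn.request("GET", parts.path or "/")
        response = conn.getresponse()
        return response.status, response.getheader("Location")
    finally:
        conn.close()


def check(expectation, status, location):
    """Compare a response against ("redirect", url) or ("no-redirect", None)."""
    kind, target = expectation
    if kind == "redirect":
        return status == 301 and location == target
    return status not in REDIRECT_CODES


# Hypothetical test table built from the first three manual tests.
CASES = [
    ("http://my.service/", ("redirect", "https://my.service/")),
    ("https://my.service/", ("no-redirect", None)),
    ("http://my.service/status/update", ("no-redirect", None)),
]

if __name__ == "__main__":
    for url, expectation in CASES:
        status, location = fetch_without_redirects(url)
        verdict = "ok" if check(expectation, status, location) else "FAIL"
        print(verdict, url, status, location)
```

The legacy entry point is requested without credentials here, so a 401 response still counts as "not redirected".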

Logging rewrites

The process of getting all this together would have been impossible without rewrite logging. The caveat is that logging must be defined in virtual host -level. This is what I did:

RewriteLogLevel 3

RewriteLog logs/rewrite.log

Reading the logfile of level 3 is very tedious. The rows are extremely long and all the good parts are at the end. Here is a single line of log split into something humans can read:

1.2.3.219 - -

[06/Feb/2014:14:56:28 +0200]

[my.service/sid#7f433cdb9210]

[rid#7f433d588c28/initial] (3)

[perdir /var/www/my.service/public/]

add path info postfix:

/var/www/my.service/public/status/update.html ->

/var/www/my.service/public/status/update.html/update

It simply says that a .htaccess file was being processed and that it contained a rule which was applied. The log file clearly shows the order of rules being executed. However, most of the regular expressions are '^(.*)$', so it is impossible to distinguish the rules from each other simply by reading the log file.
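Splitting the rows by hand gets old quickly. Below is a small Python sketch that breaks one row into named fields. The regular expression is derived only from the single sample row above, so treat the field layout as an assumption; the function name parse_rewrite_log_line is made up for this example.

```python
import re

# Field layout assumed from the one sample row shown above.
LOG_LINE = re.compile(
    r"^(?P<client>\S+) \S+ \S+ "
    r"\[(?P<time>[^\]]+)\] "
    r"\[(?P<vhost>[^/\]]+)/sid#(?P<sid>[0-9a-f]+)\] "
    r"\[rid#(?P<rid>[0-9a-f]+)/(?P<request_type>[^\]]+)\] "
    r"\((?P<level>\d+)\) "
    r"(?:\[perdir (?P<perdir>[^\]]+)\] )?"
    r"(?P<message>.*)$"
)

SAMPLE = (
    "1.2.3.219 - - [06/Feb/2014:14:56:28 +0200] "
    "[my.service/sid#7f433cdb9210] [rid#7f433d588c28/initial] (3) "
    "[perdir /var/www/my.service/public/] add path info postfix: "
    "/var/www/my.service/public/status/update.html -> "
    "/var/www/my.service/public/status/update.html/update"
)


def parse_rewrite_log_line(line):
    """Return a dict of the fields in one log row, or None if it does not match."""
    match = LOG_LINE.match(line.rstrip("\n"))
    return match.groupdict() if match else None


if __name__ == "__main__":
    entry = parse_rewrite_log_line(SAMPLE)
    # Print only the parts that matter when following the rule order.
    print(entry["rid"], entry["request_type"], entry["perdir"])
    print("   ", entry["message"])
```

Grouping the output by the rid field keeps the rows of one request together, which makes the order of the applied rules easier to follow.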

Final words

This is an advanced topic. Most sysadmins and developers never have to face complexity of this magnitude. If you do, I'm hoping this helps. It took me quite a while to put all those rules together.

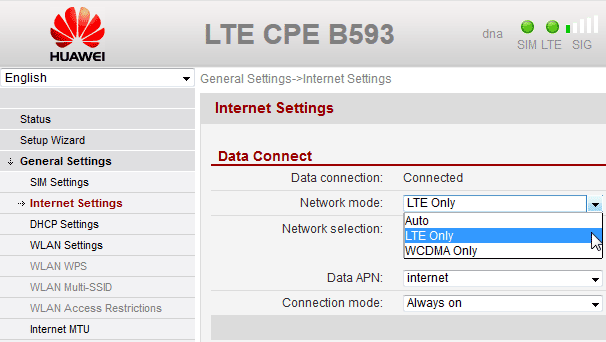

Huawei B593: Forcing 4G LTE mode

Thursday, February 6. 2014

First I'd like to apologize. At least twice I've said that it is impossible to force B593 to stay out of 3G-mode and force it to stay on 4G LTE. That is not true. It is an incorrect statement by me and I'm sorry that I didn't investigate the facts before making such statements.

Here is a (slightly photoshopped) screenshot of my own device:

There actually is such an option in General Settings --> Internet Settings --> Network mode. And you can select LTE Only and it will work as expected.

For device hackers, the /var/curcfg.xml will have the setting:

<?xml version="1.0" ?>

<InternetGatewayDeviceConfig>

<InternetGatewayDevice>

<WANDevice NumberOfInstances="3">

<WANDeviceInstance InstanceID="2">

<WANConnectionDevice NumberOfInstances="1">

<WANConnectionDeviceInstance InstanceID="1">

<WANIPConnection NumberOfInstances="2">

<WANIPConnectionInstance InstanceID="1"

X_NetworkPriority="LTE Only"

Valid options for X_NetworkPriority are "AUTO", "WCDMA Only" and "LTE Only".
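If you have pulled a copy of the file off the device, the current value can be read with a few lines of Python. This is a sketch only: the SAMPLE document below is a hypothetical, closed-off version of the fragment above (the real file contains much more), and the function name network_priorities is invented for this example.

```python
import xml.etree.ElementTree as ET

VALID_PRIORITIES = ("AUTO", "WCDMA Only", "LTE Only")

# Hypothetical minimal document: the fragment above with its tags closed.
SAMPLE = """<?xml version="1.0" ?>
<InternetGatewayDeviceConfig>
  <InternetGatewayDevice>
    <WANDevice NumberOfInstances="3">
      <WANDeviceInstance InstanceID="2">
        <WANConnectionDevice NumberOfInstances="1">
          <WANConnectionDeviceInstance InstanceID="1">
            <WANIPConnection NumberOfInstances="2">
              <WANIPConnectionInstance InstanceID="1"
                X_NetworkPriority="LTE Only"/>
            </WANIPConnection>
          </WANConnectionDeviceInstance>
        </WANConnectionDevice>
      </WANDeviceInstance>
    </WANDevice>
  </InternetGatewayDevice>
</InternetGatewayDeviceConfig>
"""


def network_priorities(xml_text):
    """Return every X_NetworkPriority value found in the document."""
    root = ET.fromstring(xml_text)
    values = []
    for element in root.iter():
        value = element.get("X_NetworkPriority")
        if value is not None:
            values.append(value)
    return values


if __name__ == "__main__":
    for value in network_priorities(SAMPLE):
        status = "valid" if value in VALID_PRIORITIES else "unknown value"
        print(value, "(" + status + ")")
```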

After changing the setting my connection has been more stable than ever (on Danish 3's firmware). There have been occasions where my connection has dropped to 2.5G, see the blog post about it, but after fixing the LTE-only mode the connection became the most robust it has ever been.

SplashID wasted my entire password database

Wednesday, February 5. 2014

I've been using SplashID as my password solution. See my earlier post about that. Today I tried to log in to the application to retrieve a password, but it turned out my user account had been changed into null. Well... that's not reassuring.

After the initial shock I filed a support ticket with them, but I'm not expecting any miracles. In my books the database is lost. The next thing I did was check my trustworthy(?) Acronis True Image backups. I had them running on a daily rotation, and this turned out to be the first time I actually needed them in a real situation.

They hid the "Restore files and directories" option well. My laptop is configured to run backups for the entire disk, so the default recovery option is to restore the entire disk. In this case that seems like a bit of an overkill. But behind the gear icon, there actually is such an option. After discovering the option (it took me a while reading the help), the recovery was user friendly and intuitive enough. I chose to restore yesterday's backup to the original location. The recovery went fine, but the SplashID database was already corrupted at that point. I simply restored a two days old backup, and that seemed to be an intact one.

Luckily I don't recall any additions or changes to my passwords during the last two days. It looks like I walked away with this incident without harm.

Update 7th Feb 2014:

I got a reply to my support ticket. SplashData says that the password database was lost due to a bug (actually they didn't use that word, but they cannot fool me). The bug has been fixed in a later version of SplashID. Luckily I had a backup to restore from. IMHO the software should have better notifications about new versions.

Managing PostgreSQL 8.x permissions to limit application user's access

Wednesday, February 5. 2014

I was working with a legacy project with a PostgreSQL 8 installation. A typical software developer simply does not care about DBA work enough to think more than once about the permissions setup. The thinking is that for the purpose of writing lines of working code which execute really nice SQL queries, a user with lots of power up its sleeve is a good thing. This is something I bump into a lot. It would be a nice eye-opener if every coder had to investigate a server which has been cracked into once or twice early in their programming career. I'm sure that would improve the quality of code and improve security thinking.

Anyway, the logic for ignoring security is ok for a development box, given the scenario that it is pretty much inaccessible outside the development team. When going to production things always get more complicated. I have witnessed production boxes which are running applications that have been configured to access the DB with admin permissions. That happens in an environment where any decent programmer/DBA can spot a number of other ignored things. Thinking about security is far above both the pay grade and the skill envelope of your regular coder.

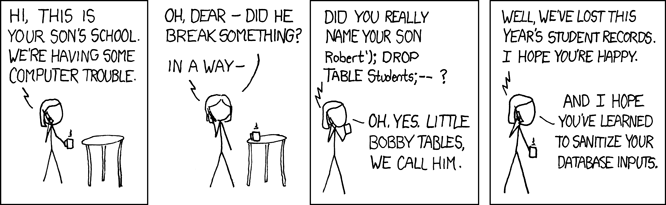

In an attempt to do things the-right-way(tm), it is a really good idea to create a specific user for accessing the DB. An even better idea is to limit the permissions so that the application user cannot run the classic "; DROP TABLE users; -- " because it lacks the permission to drop tables. We still remember Exploits of a Mom, right?

Image courtesy of xkcd.com.

Back to reality... I was on a production PostgreSQL and evaluated the situation. The database was owned by postgres and the schema public was owned by postgres, but all the tables, sequences and views were owned by the application user. So any exploit would allow the application user to drop all tables. Not cool, huh!

To solve this three things are needed: first, the owner of everything in the schema must be postgres. Second, the application user needs only enough permissions for CRUD operations, nothing more. And third, the schema must not allow users to create new items in it. By default everybody can create new tables and sequences, but if somebody really pops your box and can run anything on your DB, creating new items (besides temporary tables) is not a good thing.

On PostgreSQL 8 some trickery is needed. Version 9.0 introduced "GRANT ... ON ALL TABLES IN SCHEMA", but I didn't have that at my disposal. To get around the entire thing I created two SQL queries which were crafted to output SQL queries. I could simply copy/paste the output and run it in the pgAdmin III query window. Nice!

The first query gathers all tables and sequences and changes the owner to postgres:

SELECT 'ALTER TABLE ' || table_schema || '.' || table_name ||' OWNER TO postgres;'

FROM information_schema.tables

WHERE

table_type = 'BASE TABLE' and

table_schema NOT IN ('pg_catalog', 'information_schema')

UNION

SELECT 'ALTER SEQUENCE ' || sequence_schema || '.' || sequence_name ||' OWNER TO postgres;'

FROM information_schema.sequences

WHERE

sequence_schema NOT IN ('pg_catalog', 'information_schema')

It will output something like this:

ALTER TABLE public.phones OWNER TO postgres;

ALTER SEQUENCE public.user_id_seq OWNER TO postgres;

I ran those, and owner was changed.

NOTE: that effectively locked the application user out of DB completely.

So it was time to restore access. This is the query to gather information about all tables, views, sequences and functions:

SELECT 'GRANT ALL ON ' || table_schema || '.' || table_name ||' TO my_group;'

FROM information_schema.tables

WHERE

table_type = 'BASE TABLE' and

table_schema NOT IN ('pg_catalog', 'information_schema')

UNION

SELECT 'GRANT ALL ON ' || table_schema || '.' || table_name ||' TO my_group;'

FROM information_schema.views

WHERE

table_schema NOT IN ('pg_catalog', 'information_schema')

UNION

SELECT 'GRANT ALL ON SEQUENCE ' || sequence_schema || '.' || sequence_name ||' TO my_group;'

FROM information_schema.sequences

WHERE

sequence_schema NOT IN ('pg_catalog', 'information_schema')

UNION

SELECT 'GRANT ALL ON FUNCTION ' || nspname || '.' || proname || '(' || pg_get_function_arguments(p.oid) || ') TO my_group;'

FROM pg_catalog.pg_proc p

INNER JOIN pg_catalog.pg_namespace n ON pronamespace = n.oid

WHERE

nspname = 'public'

It will output something like this:

GRANT ALL ON public.phones TO my_group;

GRANT ALL ON SEQUENCE public.user_id_seq TO my_group;

NOTE: you need to find/replace my_group with something that fits your needs.

Now the application was running smoothly again, but with reduced permissions in effect. The problem with all this is that TRUNCATE (or DELETE FROM -tablename-) still works. To get the maximum out of enhanced security, some classification of data would be needed. But the client wasn't ready to do that (yet).
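One way to tighten this further would be to grant only the four CRUD privileges instead of ALL. The sketch below generates such statements in Python from a list of (schema, table) pairs. It is an illustration, not what was run on the client's server: the helper names are invented, the my_group role is the placeholder from above, and it assumes a server version where TRUNCATE is a separate table privilege (8.4 or later, as far as I know). DELETE FROM would of course still work.

```python
CRUD_PRIVILEGES = ("SELECT", "INSERT", "UPDATE", "DELETE")


def quote_ident(name):
    """Quote an SQL identifier by doubling any embedded double quotes."""
    return '"' + name.replace('"', '""') + '"'


def crud_grants(tables, role, privileges=CRUD_PRIVILEGES):
    """Build one GRANT statement per (schema, table) pair."""
    privilege_list = ", ".join(privileges)
    statements = []
    for schema, table in tables:
        statements.append(
            "GRANT {} ON {}.{} TO {};".format(
                privilege_list,
                quote_ident(schema),
                quote_ident(table),
                quote_ident(role),
            )
        )
    return statements


if __name__ == "__main__":
    # The table names would come from information_schema.tables,
    # just like in the queries above.
    for statement in crud_grants([("public", "phones")], "my_group"):
        print(statement)
```

Sequences are left out on purpose, since they take a different set of privileges than tables.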

The third thing is to limit schema permissions so that only usage is allowed for the general public:

REVOKE ALL ON SCHEMA public FROM public;

GRANT USAGE ON SCHEMA public TO public;

Now only postgres can create new things there.

All there is to do at this point is to test the application. There should be errors for DB access if something went wrong.

Tar: resolve failed weirdness

Tuesday, February 4. 2014

The ancient tar is the de facto packing utility in all *nixes. Originally it was used for tape backups, but since tape backups are pretty much in the past, it is used solely for file transfers. Pretty much everything distributed for a *nix on the net is a single compressed tar archive. However, there is a hidden side effect in it. Put a colon character (:) in the filename and tar starts misbehaving.

Example:

tar tf 2014-02-04_12\:09-59.tar

tar: Cannot connect to 2014-02-04_12: resolve failed

What resolve! The filename is there! Why is there a need to resolve anything?

Browsing the tar manual at chapter 6.1 Choosing and Naming Archive Files reveals the following info: "If the archive file name includes a colon (‘:’), then it is assumed to be a file on another machine" and also "If you need to use a file whose name includes a colon, then the remote tape drive behavior can be inhibited by using the ‘--force-local’ option".

Right. Good to know. The man-page reads:

Device selection and switching:

--force-local

archive file is local even if it has a colon

Let's try again:

tar --force-local tf 2014-02-04_12\:09-59.tar

tar: You must specify one of the `-Acdtrux' or `--test-label' options

Hm.. something wrong there. Another version of that would be:

tar -t --force-local f 2014-02-04_12\:09-59.tar

Well, that hung until I hit Ctrl-d. Next try:

tar tf 2014-02-04_12\:09-59.tar --force-local

Whooo! Finally some results.

I know that nobody is going to change the tar command to behave reasonably. But who really would use it against another machine (without an SSH pipe)? That legacy feature makes things overly complex and confusing. You'll get my +1 for dropping the feature or changing the default.
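My reading of the failed attempts is that the dash-less option bundle (tf) is only recognized as the very first argument, so anything placed before it breaks the parsing. Using dashed options avoids that. Here is a small Python sketch that builds the command as an argument list and adds --force-local only when the name contains a colon. The function names are made up for this example, and it assumes a tar that supports --force-local.

```python
import subprocess


def tar_list_command(archive):
    """Build the argument list for listing an archive."""
    command = ["tar"]
    if ":" in archive:
        # Without this, tar treats the part before the colon as a host name.
        command.append("--force-local")
    command += ["-t", "-f", archive]
    return command


def list_archive(archive):
    """Run tar and return the member names, one per list item."""
    result = subprocess.run(
        tar_list_command(archive),
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.splitlines()


if __name__ == "__main__":
    print(" ".join(tar_list_command("2014-02-04_12:09-59.tar")))
```

Because the command is passed as a list and no shell is involved, the colon does not need a backslash in front of it.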

Installing own CA root certificate into openSUSE

Monday, February 3. 2014

This puzzled me for a while. It is almost impossible to install the root certificate of your own CA into openSUSE Linux and make it stick. Initially I tried the classic /etc/ssl/certs/ directory, which works for every OpenSSL installation. But in this case it looks like some sort of script cleans out all weird certificates from it, so effectively my own changes won't last beyond a couple of weeks.

This issue is really poorly documented. Searching the Net yields hardly any usable results either. I found something usable in Nabble from a discussion thread titled "unify ca-certificates installations". There they pretty much confirm the fact that there is a script doing the updating. Luckily they give a hint about the script.

To solve this, the root certificate needs to be in /etc/pki/trust/anchors/. When the certificate files (in PEM-format) are placed there, do the update with update-ca-certificates -command. Example run:

# /usr/sbin/update-ca-certificates

2 added, 0 removed.

The script, however, does not process revocation lists properly. I didn't find anything concrete about them, except manually creating symlinks to /var/lib/ca-certificates/openssl/ -directory.

Example of verification failing:

# openssl verify -crl_check_all test.certificate.cer

test.certificate.cer: CN = test.site.com

error 3 at 0 depth lookup:unable to get certificate CRL

To get this working, we'll need a hash of the revocation list. The hash value is actually the same as the certificate hash value, but this is how you'll get it:

openssl crl -noout -hash -in /etc/pki/trust/anchors/revoke.crl

Then create the symlink:

ln -s /etc/pki/trust/anchors/revoke.crl \

/var/lib/ca-certificates/openssl/-the-hash-.r0

Now test the verify again:

# openssl verify -crl_check_all test.certificate.cer

test.certificate.cer: OK

Yesh! It works!
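The two manual steps can be wrapped into a small helper. This is a Python sketch using the same paths as above; the function names are invented for this example, and it needs to be run as root to be able to write into the directory.

```python
import os
import subprocess

OPENSSL_DIR = "/var/lib/ca-certificates/openssl"


def crl_hash(crl_path):
    """Ask openssl for the hash of a revocation list."""
    result = subprocess.run(
        ["openssl", "crl", "-noout", "-hash", "-in", crl_path],
        check=True,
        capture_output=True,
        text=True,
    )
    return result.stdout.strip()


def crl_link_path(hash_value, directory=OPENSSL_DIR, index=0):
    """Return the symlink name for a CRL hash, e.g. <hash>.r0."""
    return os.path.join(directory, "{}.r{}".format(hash_value, index))


def link_crl(crl_path, directory=OPENSSL_DIR):
    """Create the hash-named symlink unless it already exists."""
    link = crl_link_path(crl_hash(crl_path), directory)
    if not os.path.islink(link):
        os.symlink(crl_path, link)
    return link


if __name__ == "__main__":
    print(link_crl("/etc/pki/trust/anchors/revoke.crl"))
```

The index is there because a second list with the same hash would need to be linked as .r1, but this sketch does not try to detect that case.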

Funny how openSUSE chose a completely different way of handling this... and then chose not to document it enough.

Change iCloud account in iOS 7 - Is it possible?

Sunday, February 2. 2014

The way Apple chose to implement changing the iCloud account is far from making any sense at all. The phrase "Delete Account" puts every user's imagination into high gear. By clicking this red button, what could possibly go wrong! Does it implode your entire iCloud account with all the data in it so that everything is gone permanently and forever? Or does it simply disconnect that particular iOS device from Apple's cloud?

Image courtesy of http://assets.ilounge.com/images/articles_jdh/ask-20121114-1.jpg


Apparently it is the latter one. The user interface is really poorly designed, no matter what. I think the idea was to scare users from testing what happens if they click it.

The discussion-thread in Apple's forums (HT4895 How do I change my iCloud account to my new apple ID?) is one of the sources for confirmation, that it does not wipe your account. It just detaches that particular device from your cloud-account.

Actually changing the device to use a new iCloud account is much trickier, as the article points out. And on top of that, iMessage, Facetime and AppStore still need to be re-connected separately. Luckily that's not a big deal at that point.

However, if you combine changing the account with taking a new iPad into use, then you see a flood of e-mail from Apple. The e-mails come from different systems at Apple, and it certainly made me laugh for a while. There are e-mails from Find My iPhone (my device was an iPad), then there are security notifications about the Apple ID being used on a new device, and when all is set up, there is the welcome-to-a-new-device mail. It would sound like a better idea to switch the account into some sort of changing-devices mode, but they don't have that yet.

The good thing is that it is possible to change accounts. The bad thing is that they implemented the bare minimum of it.