DLMS - Reading data from a utility meter

Thursday, July 11. 2024

DLMS/COSEM, or Device Language Message Specification / Companion Specification for Energy Metering, is defined by the IEC 62056 set of standards. The use case is to enable a consumer to access the readings of a smart meter. The technology is robust; it was introduced in the Netherlands nearly 30 years ago. Accessing data is also very straightforward, DLMS.dev has instructions for this.

Port and magnet-attaching reader look like these:

Simple & robust. Then there is the but -part. (there always is one)

My electricity meter (let's state the obvious: provided by my utility company) looks like this:

Unit is a Landis+Gyr E450 and it has the port ... aaand the port doesn't work. In my books "work" would indicate some sort of data flow. To sort this out, I contacted tech support with questions. The reply I got was astonishing! DLMS is disabled for all units because of expensive license fee. The greedy bastards at Landis+Gyr want more money for (EU) 2019/944 given consumer rights. As the price is steep, my provider chose not to comply, which translates into no data for me.

Given EU laws and regulations, the story does not end there. In their infinite wisdom, L+G license fee for HAN P1 interface makes commercial sense, so:

Now I'm running a Landis+Gyr E360.

Obviously, the HAN P1 doesn't work yet. It needs to be enabled from network control. For the mentioned license fee. I'll get back to this when I have any data.

DisplayPort Cables - Follow up

Sunday, July 7. 2024

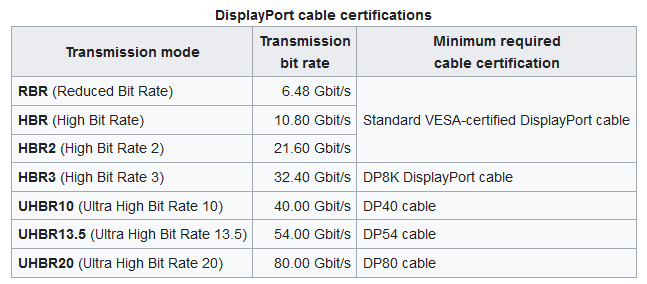

Last month I bumped into an incompatible DisplayPort -cable. There are many different cable speeds, and DP 2.0 demands much more of your cable to reach the speeds that 4K, 5K and 8K monitors require.

A famous phrase states "Go big or go home!" So I did that. Went big:

These two cables with 8K and 10K spec should have the oomph required to run any of my future monitors.

Color-coding is puzzling to me:

No matter how much I do looking & searching, there isn't anything I can find on those colors. My obvious assumption is for the manufacturer Deltaco to mark 8K with a red connector and 10K with a blue. Exactly what cable speed that translates into, I dunno. My speculation is with UHBR13.5 and UHBR20, but that's only my guess.

In my previous post on the topic I did complain about the lack of markings. The boxes have semi-reasonable markings, cables have none. Besides the undocumented color coding.

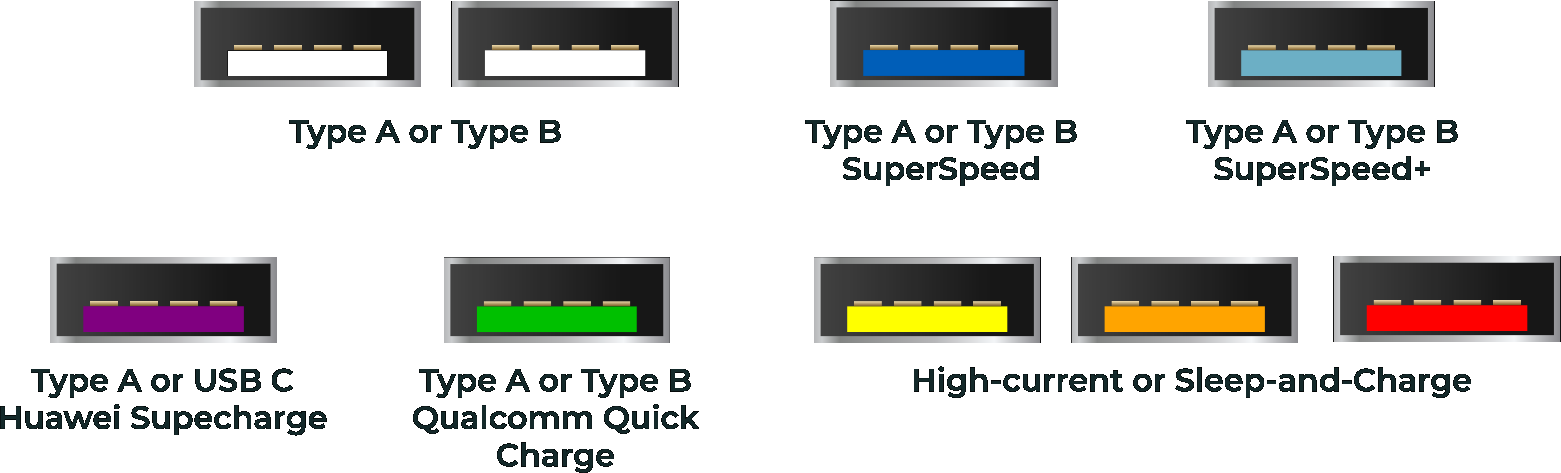

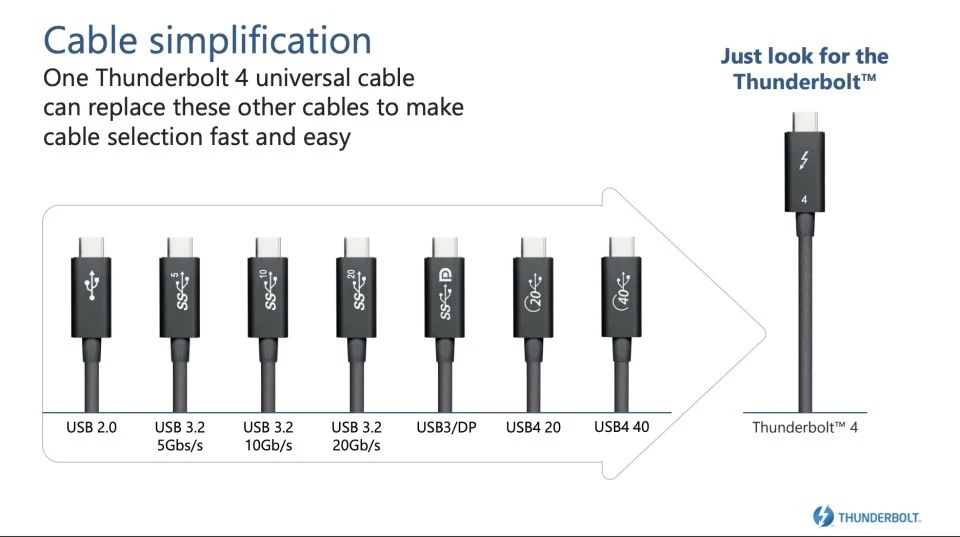

If you'd compare USB-A -connectors:

Or USB4-connectors:

Both have a well-documented system. DisplayPort, not so much.

On Technology Advancement - Does anybody really use generative AI?

Monday, June 17. 2024

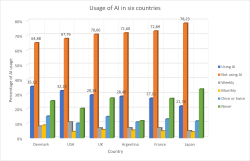

According to this, not.

(Ref.: What does the public in six countries think of generative AI in news? survey)

AI is still in its infancy. Those who lived through the IT-bubble of 2000 can relate.

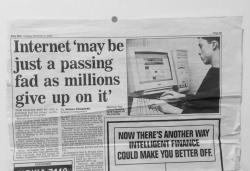

British newspaper Daily Mail wrote in its December 5th 2000 issue about Internet. At that point, it was obvious: Internet wasn't a passing fad. Publishing that article back in -95 might have been sane. Just before the bubble was about to burst, not so much.

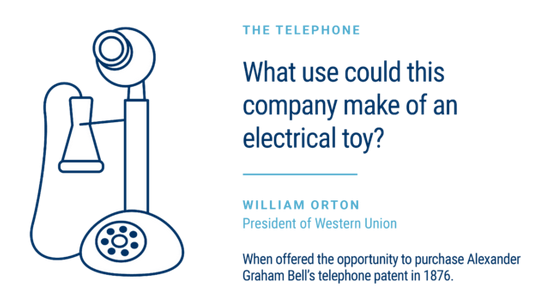

Let's dig up more examples. When we're faced with technology leap, our reactions can be surprising.

In hindsight (that's always 20/20), it would have made sense to see the vastly improved user experience on communications. Spoken word versus written word isn't a fair fight. Spoken word wins when communication target is time-sensitive and message is brief. Today, most of us don't use our pocket computers for phoning, we use them for writing and reading messages.

How about transportation?

If I had asked people what they wanted, they would have said faster horses.

A phrase Henry Ford did not say. There is a Harvard Business Review story about this.

Not that the line makes sense in any case.

Because “faster” wasn’t the selling point of cars over horses.

Speed wasn’t the problem.

Spending huge amounts of money, time, and space on keeping horses alive and dealing with the literal horse shit was the problem.

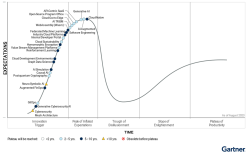

So, yes. We will use AI. We are already using AI. It's just like with Internet, telephone or cars. We're learning how to use them. Gartner seems to place generative AI on top of their Hype Cycle for Emerging Technologies 2023:

That translates into falling flat with most ridiculous use-cases of AI and sane ones remaining. Before that happens I'll keep using AI, chuckling when it makes a silly mistake and nodding in acceptance when it speeds up my task-at-hand.

New monitor DisplayPort trouble: Flickering / Blackout

Sunday, April 28. 2024

I spend a lot of time doing stuff with a computer, or computers to be exact. As a heavy-duty user, I love to have good displays to do the computing with. As with everything in consumer electronics, monitor technology has improved a lot.

One of these days, I wanted a new monitor with really good spec.

Delivery guy brought me one, I installed it into my VESA monitor arm and then everything turned sour.

My expensive monitor "kinda" worked. Picture was there, it was crisp, backlight was really good, HDR-colors were really vivid until the monitor chose to flicker a bit and black out. This unfortunate blackout was a totally random event. It could occur three times per minute, or alternatively there could easily be 20 minutes without problems. Such random problems are very difficult to troubleshoot. In any easy case, reproducibility is the key. No such joy here.

When in doubt - Google the problem!

Obviously, I went online with a description of the symptoms. Quite soon, this is what I found from Reddit: I'm having screen flickering/blackout via Displayport on my new 1440p 144 hz monitor. The suggestions pointed towards testing different cables and discussion about DisplayPort versions. Good ideas!

GPU

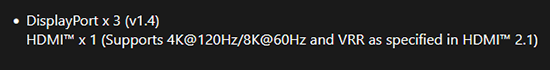

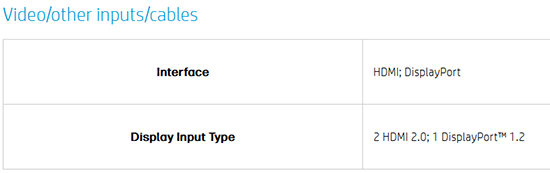

From GPU spec:

Confirmed GPU DisplayPort to be at version 1.4.

Previous Monitor

Spec says:

Confirmed old monitor DisplayPort to be at version 1.2. Hm. Everything worked at 4K resolution, no 144Hz though.

New Monitor

Spec:

Confirmed monitor DisplayPort to be at version 1.4. Equal to GPU.

Maybe the problem IS with the cable as suggested in Reddit!

Theory: DisplayPort Cable

Doing research on DisplayPort: DisplayPort 1.4 vs. 1.2: What's the Difference?

DisplayPort 1.2, originally released in 2010, offers more bandwidth than all but the latest HDMI standards.

DisplayPort 1.4 is a much more capable standard, with limited competition from even the latest and greatest

DisplayPort 1.4 supports resolutions of up to 8K at 60Hz or 4K at 240Hz

DisplayPort 1.2 supports resolutions of up to 4K at 60Hz

Doing research on cabling: How to Tell the Difference Between Display Port 1.2 and 1.4 Cables

Just to be clear, DisplayPort cables are not classified by version, they are classified by the amount of bandwidth they can handle.

Good thing there was a DisplayPort cable with the monitor. Changed it into use and oh yes! Flickering was gone.

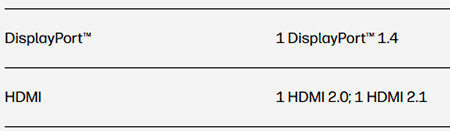

Wikipedia says in DisplayPort -article:

There are seven (7) different specs for a cable. Well, that's a surprise.
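To make "classified by bandwidth" a bit more concrete, here is a rough Python sketch that estimates which of those seven transmission modes a given video mode would need. The data rates are my recollection of the Wikipedia table and should be treated as assumptions, and the estimate ignores blanking intervals and DSC compression, so it is a lower bound rather than an exact requirement.

```python
# Maximum payload data rate per DisplayPort transmission mode in Gbit/s.
# Assumption: values as recalled from the Wikipedia DisplayPort article.
DATA_RATE_GBPS = {
    "RBR": 5.184,
    "HBR": 8.64,
    "HBR2": 17.28,
    "HBR3": 25.92,
    "UHBR10": 38.69,
    "UHBR13.5": 52.22,
    "UHBR20": 77.37,
}


def pixel_rate_gbps(width, height, refresh_hz, bits_per_pixel=24):
    """Raw pixel data rate in Gbit/s, ignoring blanking and compression."""
    return width * height * refresh_hz * bits_per_pixel / 1e9


def slowest_sufficient_mode(width, height, refresh_hz, bits_per_pixel=24):
    """Return the slowest mode whose data rate covers the raw pixel rate."""
    needed = pixel_rate_gbps(width, height, refresh_hz, bits_per_pixel)
    for mode, rate in DATA_RATE_GBPS.items():  # dict keeps insertion order
        if rate >= needed:
            return mode
    return None  # nothing in the table is fast enough


for video_mode in [(2560, 1440, 144), (3840, 2160, 60), (3840, 2160, 144)]:
    print(video_mode, slowest_sufficient_mode(*video_mode))
```

With these numbers, a 1440p 144 Hz mode like the one in the Reddit thread already falls outside plain HBR, which would fit the theory of a slow cable causing the blackouts.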

Practice: DisplayPort Cable

Let's look at those cables a bit closer. This is the non-functioning one:

There are zero clues on cable spec. Nothing! It has WEEE label and CE marking, that's all. Given reality, I'm guessing it is HBR / HBR2 -spec.

Still no idea of cable details. DisplayPort-logo with 8K suggests spec to be at least HBR3.

Reality Check

There is really no way of telling how fast a DisplayPort-cable is by hooking it into a computer or eyeballing it.

Beware: Most DisplayPort cables aren't sold with correct information

Aow come on! This is horrible.

Good thing is my money wasn't wasted on a faulty unit.

On VAT Calculation / Rounding Monetary Values

Thursday, April 18. 2024

Yesterday API design was hard, today creating invoice line items with VAT on them is hard.

When calculating taxes on items, a very careful approach must be taken on rounding. Line items of an invoice need to be stand-alone units and special care must be taken when calculating a sum of rounded values. For an unaware developer, this could be trickier than expected.

Products

I'll demonstrate the problem with a fictitious invoice. Let's say I have five different products to sell with following unit prices:

- Product 1 1.24 €

- Product 2 2.77 €

- Product 3 3.31 €

- Product 4 4.01 €

- Product 5 5.12 €

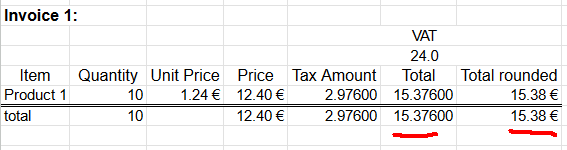

Example invoice #1

A happy customer bought ten of Product 1. Following invoice could be generated from the transaction:

Rather trivial arithmetic with value added tax being 24% can be made. 10 units costing 1,24€ each will total 12,40€. Adding 24% tax on them will result in a VAT amount of 2,976€. As €-cents really need to be between 0 and 99, rounding is needed on the total amount. Minor numeric cruelty will be needed to make the result feasible as money. That's ok. The end result is correct and everybody is happy.

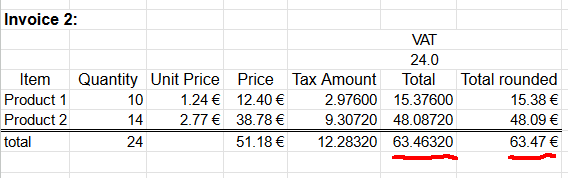

Example invoice #2

Second happy customer bought same as the first customer and additionally 14 of Product 2. Following invoice could be generated from the transaction:

Looking at columns total and total rounded reveals the unpleasant surprise. If rounding is done once, after the sum, the rounded value isn't 63,47 as expected. Rounding the total sum of 63,4632 into two decimals results in 63,46. There is a cent missing! Where did it go? Or ... alternatively, where did the extra cent in the total rounded column come from?

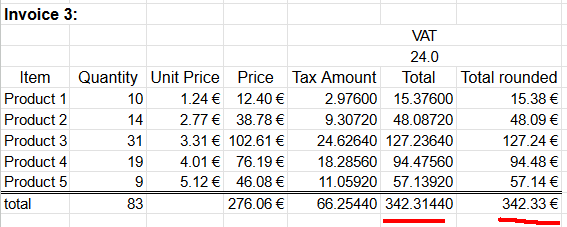

Example invoice #3

Let's escalate. A really good customer bought everything. Lots of everything. Invoice will look like this:

Whoooo! It escalates. Now we're missing two cents already. Total sum of 342,3144€ will round down to 342,31€.

Lesson Learned

Doing one rounding at the end is mathematically sound. Arithmetic really works like that. However, when working with money, there are additional constraints. Each line needs to be real and could be a separate invoice. Because of this constraint, we're rounding on each line. Calculating a sum of rounded values stops making sense mathematically.

Please note, these numbers were semi-randomly selected to demonstrate the problem. In real world scenario roundings can easily cancel each other and detecting this problem will be much more difficult.
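The missing cent of example invoice #2 can be reproduced with a few lines of Python. This is only a sketch: it assumes half-up rounding to whole cents (the invoices don't state a rounding mode) and uses the unit prices, quantities and 24% VAT from above.

```python
from decimal import Decimal, ROUND_HALF_UP

VAT_RATE = Decimal("0.24")  # 24% VAT, as in the example invoices
CENT = Decimal("0.01")


def to_cents(amount: Decimal) -> Decimal:
    """Round to two decimals, half-up (assumed rounding mode)."""
    return amount.quantize(CENT, rounding=ROUND_HALF_UP)


# (unit price, quantity) for the two lines of example invoice #2
lines = [(Decimal("1.24"), 10), (Decimal("2.77"), 14)]

# Line totals including VAT, not yet rounded: 15.3760 and 48.0872
gross = [price * qty * (1 + VAT_RATE) for price, qty in lines]

round_once = to_cents(sum(gross, Decimal("0")))
round_per_line = sum((to_cents(g) for g in gross), Decimal("0"))

print(round_once)      # 63.46
print(round_per_line)  # 63.47
```

Using Decimal instead of float keeps binary floating-point noise out of the picture, so the one-cent difference seen here comes purely from where the rounding happens.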

Modeling real-world into computer software is surprisingly tricky.

- Anonymous

On API design

Wednesday, April 17. 2024

API design is hard.

If your software makes the behavior hard to change, your software thwarts the very reason that software exists.

- Robert C. Martin

ref. Sandia LabNews on February 14, 2019

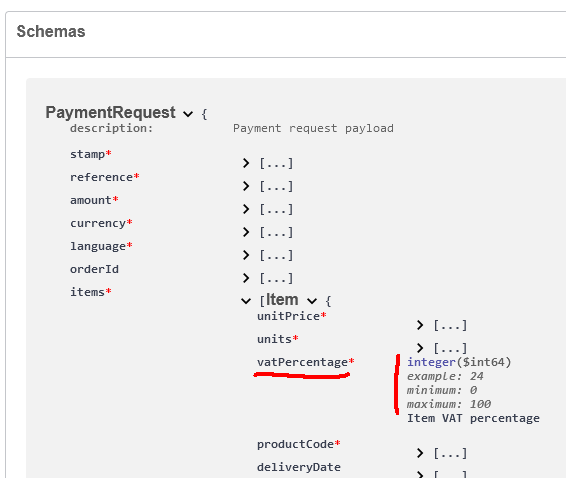

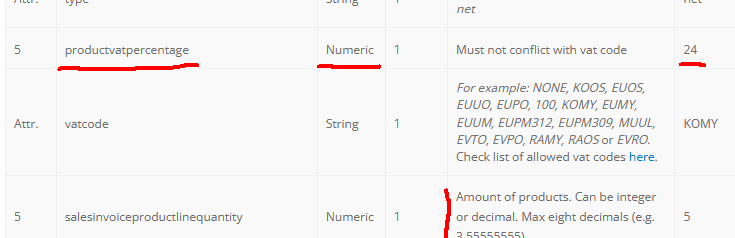

What happens when in a design meeting, you're sure that a thing will NEVER happen? Until it does!

What if you were adamant, a value added tax percentage will NEVER be anything else than an integer? Until it does!

From news on 16th Apr 2024: Reports: Finnish government to raise general value-added tax rate to 25.5%

Meanwhile...

At Paytrail API (https://docs.paytrail.com/#/):

At Visma Netvisor API (https://support.netvisor.fi/en/support/solutions/articles/77000554152-import-sales-invoice-or-order-salesinvoice-nv):

I don't think there is much to do. You take out your humility hat and start eating the bitter oat porridge to get the thing fixed.

Btw. thanks to Afterdawn on bringing this to our attention.

Bottom line: It shouldn't be too hard to figure out what politicians do. They don't think rational (thoughts nor numbers).

Examples from France and Monaco (VAT rates 20%, 10%, 5.5%, 2.1%), Ireland (VAT rates 23%, 13.5%, 9%, 4.8%), Liechtenstein (VAT rates 8.1%, 2.6%, 3.8%), Slovenia (VAT rates 22%, 9.5%, 5%) or Switzerland (VAT 8.1%, 2.6%, 3.8%).
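For illustration, here is a minimal Python sketch of a price calculation that doesn't care whether the VAT percentage is an integer. The function name gross_price is hypothetical and has nothing to do with the Paytrail or Netvisor APIs; half-up rounding to cents is an assumption.

```python
from decimal import Decimal, ROUND_HALF_UP


def gross_price(net: Decimal, vat_percent: Decimal) -> Decimal:
    """Add VAT to a net price and round to whole cents (half-up assumed)."""
    gross = net * (Decimal("1") + vat_percent / Decimal("100"))
    return gross.quantize(Decimal("0.01"), rounding=ROUND_HALF_UP)


# Integer and non-integer percentages go through the same code path.
for rate in ("24", "25.5", "13.5", "2.1"):
    print(rate, gross_price(Decimal("100.00"), Decimal(rate)))
```

Passing the rate around as a decimal string (or storing it as tenths or hundredths of a percent) is one way to avoid baking the "always an integer" assumption into a schema.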

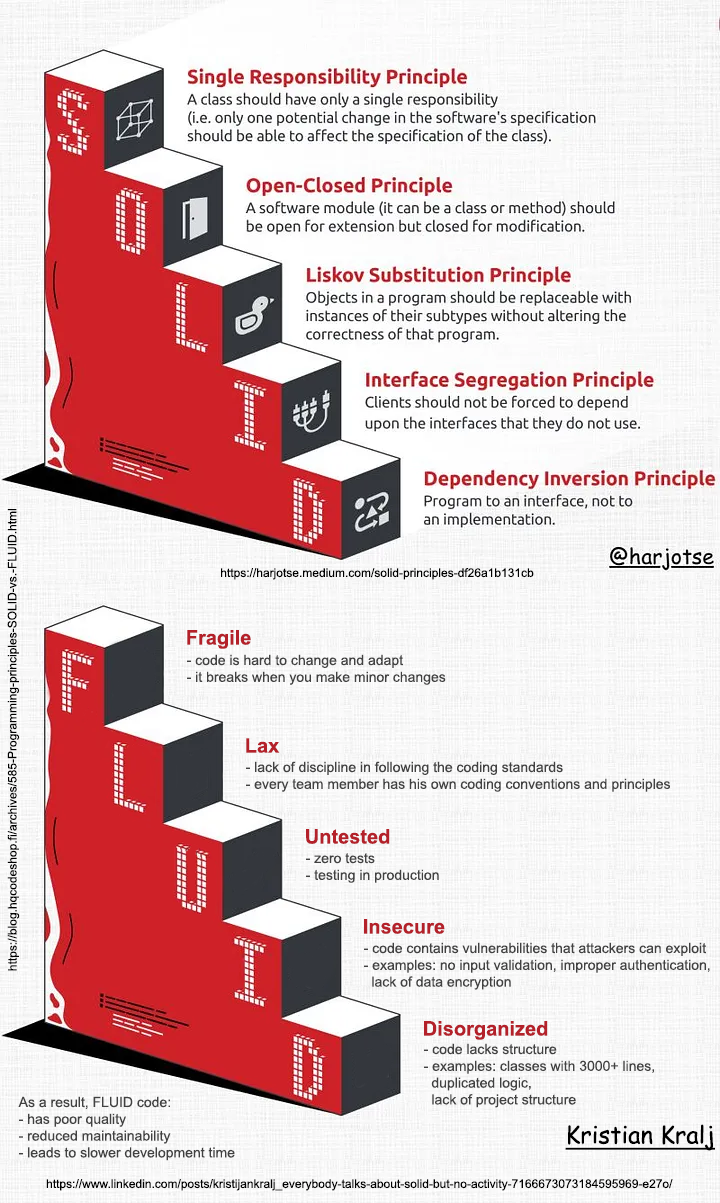

Programming principles: SOLID vs. FLUID

Sunday, March 3. 2024

As Mr. Kralj puts it:

Everybody talks about S.O.L.I.D.

But no one mentions the opposite principles

Well said!

Classic principle is defined as:

- Single Responsibility

- Open-Closed (this one is hard to grasp!)

- Liskov Substitution (this one is even harder!)

- Interface Segregation

- Dependency Inversion

This new(er) principle defines the opposite, for crappy code:

- Fragile

- Lax

- Untested

- Insecure

- Disorganized

Alternate F.L.U.I.D. clarifies original S.O.L.I.D. and is from 2011:

- Functional

- Loose

- Unit Testable

- Introspective

- (i)Dempotent

However, I found Mr. Henney's concept of re-doing S.O.L.I.D. with clarifications and went with Mr. Kralj's derivative of defining the opposite instead.

Credits to Mr. Harjot Singh for his original artwork at https://harjotse.medium.com/solid-principles-df26a1b131cb and Kristian Kralj for his idea!

System update of 2024

Thursday, February 29. 2024

I've been way too busy with my dayjob to do any blogging or system maintenance.

Ever since S9y 2.4.0 update, my blog has been in a disarray. This has been a tough one for me as I'd love to run my system with much better quality.

Ultimately I had to find the required time and do tons of maintenance to get out of the sad state.

Mending activities completed:

- Changed hosting provider to Hetzner

- Rented a much beefier VM for the system

- Changed host CPU:

  - manufacturer from Intel to ?

  - architecture from AMD64 into ARMv8

  - generation from Pentium 4 into something very new

- Upgraded OS into Oracle Linux 9

- Upgraded DB into PostgreSQL 16

- Allocated more RAM for web server

- Tightened up security even more

- Made Google Search happier

- ... fixed a ton of bugs in Brownpaper skin

Qualys SSL Report still ranks this site as A+ having HTTP/2. Netcraft Site report still ranks this site into better half of top-1-Million in The World.

Now everything should be so much better. Now this AI-generated image portrays a fixed computer system:

Happy New Year 2024!

Sunday, December 31. 2023

I've been really busy working with a number of things other than my daily job. This, unfortunately, translates into not much time to do any blogging.

One of the things I've been tinkering with is generative AI. The buzzword you keep bumping into everywhere. A really good example of what AI can do for you is to improve my non-existing artistic talent. The above image is generated with Nightcafe. Go like it there!

Fedora Linux 39 - Upgrade

Sunday, November 12. 2023

Twenty years with Fedora Linux. Release 39 is out!

I've run Red Hat since the 90s. Version 3.0.3 was released in 1996 and ever since I've had something from them running. As they scrapped Red Hat Linux in 2003 and went for Fedora Core / RHEL, I've had something from those two running. For the record: I hate that semi-working everything-done-only-half-way Debian/Ubuntu crap. When I want to toy around with something that doesn't quite fit, I choose Arch Linux.

To get your Fedora in-place-upgraded, process is rather simple. Docs are in article Performing system upgrade.

First you make sure the existing system is upgraded to all the latest stuff with a dnf --refresh upgrade. Then make sure upgrade tooling is installed: dnf install dnf-plugin-system-upgrade. Now you're good to go for downloading all the new packages with: dnf system-upgrade download --releasever=39

Note: This workflow has existed for a long time. It is likely to work also in future. Next time all you have to do is replace the release version with the one you want to upgrade into.

Now all prep is done and you're good to go for the actual upgrade. To state the obvious: this is the dangerous part. Everything before this has been a warm-up run.

dnf system-upgrade reboot

If you have a console, you'll see the progress.

Installing the packages and rebooting into the new release shouldn't take too many minutes.

When back in, verify: cat /etc/fedora-release ; cat /proc/version

This resulted in my case:

Fedora release 39 (Thirty Nine)

Linux version 6.5.11-300.fc39.x86_64 (mockbuild@d23353abed4340e492bce6e111e27898) (gcc (GCC) 13.2.1 20231011 (Red Hat 13.2.1-4), GNU ld version 2.40-13.fc39) #1 SMP PREEMPT_DYNAMIC Wed Nov 8 22:37:57 UTC 2023

Finally, you can do optional clean-up: dnf system-upgrade clean && dnf clean packages

That's it! You're done for at least the next 6 months.
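As the workflow stays the same from release to release, the steps can be sketched as a tiny Python wrapper. This is my illustration, not part of the Fedora docs: the function names are made up, it needs to run as root to do anything real, and it defaults to a dry run that only prints the commands.

```python
import subprocess


def upgrade_commands(releasever: int) -> list[list[str]]:
    """The dnf steps from this post, in order, for the given release."""
    return [
        ["dnf", "--refresh", "upgrade"],
        ["dnf", "install", "dnf-plugin-system-upgrade"],
        ["dnf", "system-upgrade", "download", f"--releasever={releasever}"],
        ["dnf", "system-upgrade", "reboot"],  # the dangerous part
    ]


def run_upgrade(releasever: int, dry_run: bool = True) -> None:
    """Print each command; execute it only when dry_run is False."""
    for command in upgrade_commands(releasever):
        print("+", " ".join(command))
        if not dry_run:
            # check=True stops the sequence if any step fails
            subprocess.run(command, check=True)


run_upgrade(39)  # dry run: prints the four commands, changes nothing
```

Next time, calling run_upgrade with the new release number and dry_run=False would walk through the same steps, with dnf still asking for its usual confirmations.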

Bare Bones Software - BBEdit - Indent with tab

Saturday, October 28. 2023

As a software developer I've pretty much used all text editors there are. Bold statement. Fact remains there aren't that many of them commonly used.

On Linux, I definitely use Vim, rarely Emacs, almost never Nano (any editor requiring Ctrl-s over SSH is crap!).

On Windows, mostly Notepad++ rarely Windows' own Notepad. Both use Ctrl-s, but won't work over SSH-session.

On Mac, mostly BBEdit.

Then there is the long list of IDEs available on many platforms I've used or am still using to work with multiple programming languages and file formats. Short list with typical ones would be: IntelliJ and Visual Studio.

Note: VScode is utter crap! People who designed VScode were high on drugs or plain old-fashioned idiots. They have no clue what a developer needs. That vastly overrated waste-of-disc-space is a last-resort editor for me.

BBEdit 14 homepage states:

It doesn’t suck.®

Oh, but it does! It can be made less sucky, though.

Here is an example:

In above example, I'm editing a JSON-file. It happens to be in a rather unreadable state and sprinkling bit of indent on top of it should make the content easily readable.

Remember, earlier I mentioned a long list of editors. Virtually every single one of those has functionality to highlight a section of text and indent selection by pressing Tab. Not BBEdit! It simply replaces entire selection with a tab-character. Insanity!

Remember the statement on not sucking? There is a well-hidden option:

The secret option is called Allow Tab key to indent text blocks and it was introduced in version 13.1.2. Why this isn't default ... correction: Why this wasn't default behaviour from get-go is a mystery.

Now the indentation works as expected:

What puzzles me is the difficulty of finding the option for indenting with a Tab. I googled wide & far. No avail. Those technical writers @ Barebones really should put some effort on making this option better known.

Wi-Fi Router Lifespan: A Threat to National Security?

Sunday, October 15. 2023

Wireless LAN, or Wi-Fi, is a topic I've written a lot about. Router hardware is common. Most end-user appliances people use are wireless. Wi-Fi combined with a proper Internet connection has tons of bandwidth and is responsive. From a hacking perspective, quite many of those boxes run Linux or a thing with hackable endpoints. Or alternatively, on the electronics board, there are interesting pins with which a person with knowledge can lure the box into doing things the manufacturer didn't expect to happen. Oh, I love hardware hacks!

Routers are exploitable

Back in 2016 these routers were harnessed to a new use. From hacker's perspective, there exists a thing which works perfectly, but doesn't do the thing hacker wishes it to do. So, after little bit of hacking, the device "learns" a new skill. This new skill was to participate in criminal activity as a DDoS traffic generator. Geekflare article How to Secure Your Router Against Mirai Botnet Attacks explains as follows:

According to Paras Jha, one of the authors of the Mirai bot, most of the IoT devices infected and used by the Mirai Botnet were routers.

A word from national intelligence organization

Fast forward seven years to 2023. Things really haven't changed much. There exist even more Wi-Fi routers. The routers are manufactured in huge volumes, designed to make maximum profit for the manufacturer and are lacking a ton of security features. Combine these hackable routers with all the geopolitical tension in the World right now, and our beloved routers have become the interest of Finnish Security and Intelligence Service (or Supo, acronym in Finnish).

This month, in their annual National Security Overview 2023, in Threats to national security are continually evolving, they issued a warning: "Cyber espionage exploits unprotected consumer devices". Actually, they did pretty much the same thing back in March -21 with following statement: "Supo has observed that the intelligence services of authoritarian states have been exploiting dozens of network devices and servers of Finnish individuals and businesses in cyber espionage operations."

How?

Having a national intelligence service to warn about crappy network hardware is a big deal. They don't do the same warning about your toaster or dish washer or cheap Android phone. Same characteristics don't really apply to anything else. A device needs to be:

- On-line / Internet connected

  - See Mr. Hyppönen's book: If It’s Smart, It’s Vulnerable

  - And btw., all routers are computers. Any computer is considered as a "smart" device.

- Insecure

  - Yeah. Even the expensive Wi-Fi routers have crappy manufacturer firmware in them. Cheap ones are especially vulnerable. And even the good ones expire after couple of years as manufacturer loses interest and ceases publishing new upgrades.

- Exist in masses

  - Literally every home and business has at least one. I don't know the total number of homes and businesses in the World, but it must be a big number!

On those three characteristics, following things are true:

- Every single vulnerable device can be found easily.

  - On the Internet, there are 3,706,452,992 public IPv4 addresses. That seems like a big number, but in reality it isn't. (Actually, the scale of the number is same as the number of homes+businesses.)

  - In 2013 the entire address space could be scanned in 44 minutes. (See Washington Post article Here’s what you find when you scan the entire Internet in an hour)

- Every single vulnerable device can be cracked wide open without human interaction by automated tools in a split second.

- Every single cracked device can be made to do whatever the attacker wants.

  - A typical scenario is some sort of criminal activity.
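To put the scanning claim into perspective, here is a back-of-the-envelope calculation in Python. It only divides the two figures quoted in the list (address count and 44 minutes); the real scan rate of any tool depends on things not covered here.

```python
PUBLIC_IPV4_ADDRESSES = 3_706_452_992  # figure quoted in the list above
SCAN_MINUTES = 44                      # 2013 scan time quoted in the list above

# Average probe rate needed to touch every address once in that time
probes_per_second = PUBLIC_IPV4_ADDRESSES / (SCAN_MINUTES * 60)

print(f"{probes_per_second:,.0f} addresses per second")
```

That works out to roughly 1.4 million addresses per second, a rate a single well-connected machine could reach back then according to the article, which is why "found easily" is not an exaggeration.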

Why?

100% of all lay-persons I've talked to state "I don't care if I'm hacked. I'm not an important person and have nothing to hide." What they miss is, attacker wanting to use their paid connection while impersonating as them to commit crimes.

We also have learned not all attackers are after money, some of them are state actors doing espionage. There are multiple types of attackers ranging from teenagers smearing their school's website to cybercriminals stealing money to government sponsored spies stealing state secrets.

Now we're getting to the reason why intelligence services are issuing warnings!

Scaring consumers - There is profit to be made

Since these intelligence service warnings have existed for a couple of years, in May -23 a major ISP / Telco in Finland, DNA, issued a press release (this is in Finnish, sorry) stating the following:

Does your home have a router over four years old? An expert reveals why it can be a risk.

Translated quote:

As a rule of thumb I'd say: a four year old router for basic user is aged. Advanced users may replace their routers every two years.

Going open-source!

For clarity: I'm not disputing the fact that an aged router which has never been upgraded to the latest available firmware is a security risk. It is! What I, as a hacker, am disputing is the need to purchase a new one. Gen. pop. will never ever be able to hack their devices into running OpenWrt or DD-WRT, that's for sure. Instead, educating people on the risks involved with cheap consumer electronics and offering advice on smart choices would be preferred.

Here is my advice:

- Router manufacturers (and ISPs) are commercial entities targeting to maximize their profit. Their intent is to sell you a new router even when the hardware of your old device is still ok.

- Part of profit maximizing is to abandon the device a couple of years after its release. There exist manufacturers which have never released a single security patch. Profit maximizing is very insecure for you as a consumer.

- Hardware doesn't expire as fast as software does. There are exceptions to this. Power supplies and radio frequency electronics take the greatest wear&tear on your 24/7 enabled device; sometimes getting a new box is the best option.

- Your old hardware may be eligible for re-purposing with open-source options. Ask your local hacker for details.

- Open-source firmware gets upgrades for both features and security for any foreseeable future. This can happen as open-source firmware unifies all various hardware under a single umbrella.

- Make a habit of upgrading your open-source firmware every now and then. New firmwares will be made available on a regular basis.

Personally, for the past 19 years I've only purchased Wi-Fi routers which have OpenWrt or DD-WRT -support. Typically, after unboxing the thing, factory firmware runs only those precious minutes to get a proper Linux running into them. This is what I recommend everybody else to do!

PS. Kudos to those manufacturers who skipped the part with creating something and abandoning firmware of their own and license open-source solutions. There aren't many of you. Keep it up!

More USB Ports for iMac - HyperDrive 5-in-1 USB-C Hub

Sunday, September 3. 2023

Apple computers are known to have not-so-many ports. Design philosophy is for a machine to be self-sufficient and to not need any extensions nor ports for extensions. Reality bites and eats any ideology for breakfast. I definitely need the extensions!

So, for my new iMac, I went shopping for more ports. Whenever I need to do this, I'll first check Hyper. Their products are known to be of highest quality and well designed to meet the specific requirements of a Mac. To be clear: on an iMac there are four ports: two USB-C and two Thunderbolt 4. This ought to be enough for everybody, right? Nope. All of them are in the back of the computer. What if you need something with easy access?

This is what's in a HyperDrive for iMac box:

Those changeable covers are designed to match the colour of the iMac. This reminds me of 1998 when Nokia introduced the Xpress-on Covers for 5110:

Image courtesy of nokiaprojectdream.com.

This is how the USB-hub clamps into iMac:

Now I don't have to blindly touchy/feely for the location of a port behind the computer. On my desk, back of the iMac is definitely not visible nor available. Also, it is noteworthy, somebody might declare the setup "ugly" or complain of my choice of express-on cover color. The iMac is silver, but I have a yellow cover on the HyperDrive. That's how I roll!

New toys - Apple iMac

Monday, August 28. 2023

Summer here in Finland is over. It's windy and raining cats&dogs. Definitely beginning of autumn.

For me, summer is typically time to do lots of other things than write blog posts. No exceptions this time. I did tinker around with computers some: new rack server to replace the old Intel Atom, some USB-probing via DLMS, some Python code, etc. etc. I may post something on those projects later.

And: I got a new iMac. Here are some pics:

Back-in-the-days, there used to be 21.5" and 27" iMac. Actually, my old one is the small one. Since Apple abandoned Intel CPUs, it's one-size-fits-all: only the 24" option is available. Also, the iMacs I have are VESA-mounted ones. There is no room on my desk.

Apple's magic mouse is for somebody else. I hate the thing! Good thing I still have a perfectly working MX Anywhere 2. On my other computer I (obviously) use a MX Anywhere 3 and have plans to upgrade it into a 3S.

Cabling in an iMac is not-so-standard:

Ethernet RJ-45 -socket is in the PSU, which has your standard IEC C5 "Mickey Mouse" connector. On the other end, there is a Magsafe. With Ethernet in it! It has to be some sort of USB-C / Thunderbolt 4 -thingie with really weird magnetic connector.

Transferring settings and data from OS X to a modern macOS works like a charm. Nothing in Windows can touch that level of easiness. Also, now I have an OS that can do Time Machine backups to a Linux/Samba. Nice! Maybe I should post something about that setup also.

Next: Run the thing for 10+ years. Btw. the old one is for sale, it really has no monetary value, but it works and I don't need it anymore.

Nuvoton NCT6793D lm_sensors output

Monday, July 3. 2023

LM-Sensors is a set of libraries and tools for accessing your Linux server's motherboard sensors. See more @ https://github.com/lm-sensors/lm-sensors.

Have you ever wondered why in Windows it is tricky to get readings of your CPU-fan rotation speed or core temperatures from your fancy GPU without manufacturer utilities? Obviously vendors do provide all the possible readings in their utilities, but for people who want to read, record and store the data for their own purposes, things get hairy. Nothing generic exists and, for an unknown reason, such an API isn't even planned.

In Linux, The One toolkit to use is LM-Sensors. On kernel side, there exists The Linux Hardware Monitoring kernel API. For this stack to work, you also need a kernel module specific to your motherboard providing the requested sensor information via this beautiful API. It's also worth noting, your PC's hardware will have multiple sensors data providers. An incomplete list would include: motherboard, CPU, GPU, SSD, PSU, etc.
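To get a feel for what the kernel side exposes, here is a small Python sketch reading the hwmon sysfs tree directly, without LM-Sensors. It assumes the usual layout under /sys/class/hwmon (a name file plus *_input files per chip) and the usual units (millidegrees Celsius and millivolts for temp* and in*); the function name read_hwmon is my own.

```python
from pathlib import Path


def read_hwmon(root: str = "/sys/class/hwmon") -> dict[str, dict[str, float]]:
    """Collect all *_input readings per hwmon chip found under root."""
    readings = {}
    for chip_dir in sorted(Path(root).glob("hwmon*")):
        name_file = chip_dir / "name"
        if not name_file.is_file():
            continue  # layout differs from what this sketch assumes
        chip = f"{chip_dir.name}:{name_file.read_text().strip()}"
        values = {}
        for sensor in sorted(chip_dir.glob("*_input")):
            try:
                raw = int(sensor.read_text().strip())
            except (OSError, ValueError):
                continue  # sensor not readable right now
            if sensor.name.startswith(("temp", "in")):
                values[sensor.name] = raw / 1000  # milli-units to units
            else:
                values[sensor.name] = float(raw)  # e.g. fan RPM, kept as-is
        readings[chip] = values
    return readings


for chip, values in read_hwmon().items():
    print(chip, values)
```

On a machine without the tree (or without readable sensors) this simply prints nothing. The raw values carry no labels or limits, which is the part LM-Sensors and its configuration add on top.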

Now that sensors-detect found all your sensors, confirm sensors will output what you'd expect it to. In my case there was a major malfunction. On a boot, following thing happened when system started sensord (in case you didn't know, kernel-stuff can be read via dmesg):

systemd[1]: Starting lm_sensors.service - Hardware Monitoring Sensors...

kernel: nct6775: Enabling hardware monitor logical device mappings.

kernel: nct6775: Found NCT6793D or compatible chip at 0x2e:0x290

kernel: ACPI Warning: SystemIO range 0x0000000000000295-0x0000000000000296 conflicts with OpRegion 0x0000000000000290-0x0000000000000299 (_GPE.HWM) (20221020/utaddress-204)

kernel: ACPI: OSL: Resource conflict; ACPI support missing from driver?

systemd[1]: Finished lm_sensors.service - Hardware Monitoring Sensors.

This conflict resulted in no available mobo readings! NVMe, GPU and CPU-cores were ok, the part I was mostly looking for was fan RPMs and mobo temps just to verify my system health. No such joy.

Uff.

It seems, this particular Linux kernel module has issues. Or another way to state it: mobo manufacturers have trouble implementing Nuvoton chip into their mobos. On Gentoo forums, there is a helpful thread: [solved] nct6775 monitoring driver conflicts with ACPI

Disclaimer: For ROG Maximus X Code -mobo adding acpi_enforce_resources=no into kernel parameters is the correct solution. Results will vary depending on what mobo you have.

Such ACPI-setting can be permanently enforced by first querying about the Linux kernel version being used (I run a Fedora): grubby --info=$(grubby --default-index). The resulting kernel version can be updated by: grubby --args="acpi_enforce_resources=no" --update-kernel DEFAULT. A reboot shows fix in effect, ACPI Warning is gone and mobo sensor data can be seen.
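After the reboot, the active kernel parameters can be read from /proc/cmdline. Here is a minimal Python sketch for checking that the setting really is in effect; the helper names are hypothetical, and the parsing is naive (it splits on whitespace and doesn't handle quoted values).

```python
from pathlib import Path
from typing import Optional


def kernel_params(cmdline: str) -> dict[str, Optional[str]]:
    """Split a kernel command line into key/value pairs (flags map to None)."""
    params = {}
    for token in cmdline.split():
        key, separator, value = token.partition("=")
        params[key] = value if separator else None
    return params


def acpi_enforcement_relaxed(cmdline: str) -> bool:
    """True when acpi_enforce_resources=no is on the command line."""
    return kernel_params(cmdline).get("acpi_enforce_resources") == "no"


cmdline_file = Path("/proc/cmdline")
if cmdline_file.is_file():
    print(acpi_enforcement_relaxed(cmdline_file.read_text()))
```

If this prints False after the grubby update and a reboot, the parameter most likely went to a different boot entry than the one actually booted.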

As a next step you'll need userland tooling to interpret the raw data into human-readable information with semantics. A few years back, I wrote about Improving Nuvoton NCT6776 lm_sensors output. It's mainly about bridging the flow of zeros and ones into something having meaning to humans. This is my LM-Sensors configuration for ROG Maximus X Code:

chip "nct6793-isa-0290"

# 1. voltages

ignore in0

ignore in1

ignore in2

ignore in3

ignore in4

ignore in5

ignore in6

ignore in7

ignore in8

ignore in9

ignore in10

ignore in11

label in12 "Cold Bug Killer"

set in12_min 0.936

set in12_max 2.613

set in12_beep 1

label in13 "DMI"

set in13_min 0.550

set in13_max 2.016

set in13_beep 1

ignore in14

# 2. fans

label fan1 "Chassis fan1"

label fan2 "CPU fan"

ignore fan3

ignore fan4

label fan5 "Ext fan?"

# 3. temperatures

label temp1 "MoBo"

label temp2 "CPU"

set temp2_max 90

set temp2_beep 1

ignore temp3

ignore temp5

ignore temp6

ignore temp9

ignore temp10

ignore temp13

ignore temp14

ignore temp15

ignore temp16

ignore temp17

ignore temp18

# 4. other

set beep_enable 1

ignore intrusion0

ignore intrusion1

I'd like to credit Mr. Peter Sulyok on his work about ASRock Z390 Taichi. This mobo happens to use the same Nuvoton NCT6793D -chip for LPC/eSPI SI/O (I have no idea what those acronyms are for, I just copy/pasted them from the chip data sheet). The configuration is in GitHub for everybody to see: https://github.com/petersulyok/asrock_z390_taichi

Also, I'd like to state my ignorance. After reading less than 500 pages of the NCT6793D data sheet, I have no idea what is:

- Cold Bug Killer voltage

- DMI voltage

- AUXTIN1, or exactly what temperature measurement it serves

- PECI Agent 0 temperature

- PECI Agent 0 Calibration temperature

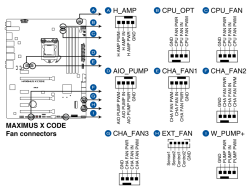

Remember, I did mention semantics. From sensors-command output I can read a reading, but what it translates into, no idea! Luckily some of the readings are easy to understand and interpret. As an example, fan RPMs are really easy to identify by unplugging a fan from its connector. Here is an excerpt from my mobo manual to explain fan-connectors:

As data quality is taken care of and output is meaningful, next step is to start recording data. In LM-Sensors, there is sensord for that. It is a system service taking a snapshot at regular intervals (you can define the frequency) and storing it for later use. I'll enrich the stored data points with system load averages; this enables me to estimate the relation between high temperatures and/or fan RPMs and how much my system is working.
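The idea of a snapshot enriched with load averages can be sketched in Python too. To be clear, this is not how sensord does it: the sketch assumes a reasonably recent lm-sensors where sensors -j prints JSON, assumes that JSON is shaped as chip, feature, subfeature, and uses function names of my own.

```python
import json
import os
import subprocess
import time


def flatten(sensors_json: dict) -> dict[str, float]:
    """Keep only the *_input values, keyed by 'chip/label'."""
    flat = {}
    for chip, features in sensors_json.items():
        for label, subfeatures in features.items():
            if not isinstance(subfeatures, dict):
                continue  # e.g. the "Adapter" description string
            for key, value in subfeatures.items():
                if key.endswith("_input"):
                    flat[f"{chip}/{label}"] = value
    return flat


def snapshot() -> dict:
    """One data point: timestamp, load averages and sensor readings."""
    output = subprocess.run(
        ["sensors", "-j"], capture_output=True, text=True, check=True
    ).stdout
    return {
        "time": int(time.time()),
        "load": list(os.getloadavg()),  # 1, 5 and 15 minute averages
        "sensors": flatten(json.loads(output)),
    }
```

Calling snapshot() at a fixed interval and appending the result to a file or database would give data points where sensor readings and system load share a timestamp.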

Finally, all data gathered into a RRDtool database can be easily visualized with rrdcgi into HTML + set of PNG-images to present a web page like this:

Nice!