Let's Encrypt Transitioned to ISRG's Root

Sunday, January 10. 2021

My previous post @ Let's Encrypt Transitioning to ISRG's Root.

Let's Encrypt's announcement: Standing on Our Own Two Feet. Also note their support plan for old Android phones, Extending Android Device Compatibility for Let's Encrypt Certificates, which keeps the IdenTrust root available as an alternative source.

To put it short, plans made back in 2019 were refined and executed. The certificates issued by Let's E now chain to their own root CA certificate and no longer depend on a partner organization's root.

I'm getting certs from Let's E, why should I care?

If you're lucky, no need to.

If you're like me, stuff stops working.

An example: my OpenLDAP slapd is configured to serve LDAPS (that's TLS-wrapped LDAP) from TCP/636 with a Let's E cert I'm getting from them every 60 days. Last week I ran the update and the renewed cert was issued by their new R3 intermediate, as X3 has been phased out.

To state the obvious, a lot of stuff in Linux depends on the ability to access users. Now that this access was gone, the previously mentioned "lot of stuff" ceased to function.

The exact message I managed to dig out with slapd -d 3 was:

TLS trace: SSL_accept:TLSv1.3 early data

TLS trace: SSL_accept:error in TLSv1.3 early data

5ff9a0ec connection_get(23): got connid=1008

5ff9a0ec connection_read(23): checking for input on id=1008

TLS trace: SSL3 alert read:fatal:unknown CA

TLS trace: SSL_accept:error in error

TLS: can't accept: error:14094418:SSL routines:ssl3_read_bytes:tlsv1 alert unknown ca.

5ff9a0ec connection_read(23): TLS accept failure error=-1 id=1008, closing

Note: fatal:unknown CA

Ok, my stuff got broken, what now?

Luckily the fix is easy: go get the new R3 cert from https://letsencrypt.org/certs/lets-encrypt-r3.pem. To see all of the certs in the Let's E chain of trust, go to https://letsencrypt.org/certificates/

When targeting specifically OpenLDAP and slapd, I went to /etc/openldap/certs/ and symlinked lets-encrypt-r3.pem from /etc/pki/tls/certs/ which is the standard Fedora/CentOS/RedHat location for certificate PEM-files.

After downloading the cert, next thing was to get the hash of the downloaded R3-cert:

# openssl x509 -hash -noout -in /etc/pki/tls/certs/lets-encrypt-r3.pem

Which output the following (your result must be identical to this):

8d33f237

A symlink named after this hash needs to point to the actual PEM-file:

# ln -s /etc/pki/tls/certs/lets-encrypt-r3.pem 8d33f237.0

That should be the fix. Next, run systemctl restart slapd and observe a functioning OpenLDAP-server.

After any changes to my LDAP-configuration, I'll verify the result with a query similar to what my Linux system would do, with a direct LDAP-search of:
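The hash-named symlink can also be created from a script. Below is a minimal Python sketch, not part of the original fix: the function name link_cert_by_hash is hypothetical, and the hash is taken as input (as printed by the openssl command above) rather than computed in Python.

```python
import os
import tempfile


def link_cert_by_hash(pem_path: str, subject_hash: str, cert_dir: str) -> str:
    """Create <cert_dir>/<subject_hash>.0 as a symlink to pem_path.

    subject_hash is the value printed by `openssl x509 -hash -noout`.
    An existing symlink with the same name is replaced.
    """
    link_path = os.path.join(cert_dir, subject_hash + ".0")
    if os.path.islink(link_path):
        os.remove(link_path)
    os.symlink(pem_path, link_path)
    return link_path


if __name__ == "__main__":
    # Demonstration in a throw-away directory instead of /etc/openldap/certs/.
    with tempfile.TemporaryDirectory() as demo_dir:
        pem = os.path.join(demo_dir, "lets-encrypt-r3.pem")
        open(pem, "w").close()
        print(link_cert_by_hash(pem, "8d33f237", demo_dir))
```

On a real system the call would be made as root with cert_dir set to /etc/openldap/certs/, followed by a restart of slapd.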

$ ldapsearch -H "ldaps://my.ldap.server.example.com/" -x \
    -b ou=People,dc=example,dc=com \
    "(cn=Jari Turkia)"

and system wrapper for above:

$ getent passwd jatu

Confirmed as working!

Done.

Final thoughts

I and everybody else had over a year to prepare for this. Did I? Nope. Had other more "important" things to do instead. Fail!

Advent of Code 2020

Saturday, December 26. 2020

As I don't have too many projects on my hands during this COVID-19 ridden year, I decided to go for an ultimate time-sink of AoC 2020.

For the curious, here are my stats:

| Day | Part 1 Time | Part 1 Rank | Part 2 Time | Part 2 Rank |
| --- | --- | --- | --- | --- |
| 23 | 03:35:13 | 5086 | - | - |
| 19 | 09:53:13 | 8934 | 09:53:26 | 5961 |
| 18 | 03:08:25 | 6521 | 04:04:25 | 6063 |
| 17 | 16:12:13 | 16057 | 16:12:23 | 15108 |
| 16 | 03:01:21 | 9251 | 03:52:53 | 6641 |
| 15 | 02:14:07 | 8224 | 02:16:33 | 6855 |
| 14 | 02:54:23 | 8940 | 03:52:58 | 7359 |
| 13 | 04:20:46 | 13423 | 06:15:57 | 7818 |
| 12 | 04:26:10 | 12452 | 04:55:22 | 10616 |
| 11 | 02:34:45 | 9354 | 03:22:14 | 8110 |
| 10 | 02:46:44 | 15237 | 04:17:26 | 10408 |
| 9 | 01:52:12 | 11970 | 02:13:22 | 11396 |
| 8 | 01:49:09 | 12056 | 03:06:07 | 12907 |
| 7 | 04:12:28 | 14520 | 04:12:38 | 11238 |
| 6 | 03:30:29 | 17152 | 03:46:03 | 16033 |
| 5 | 04:28:02 | 18252 | 05:15:07 | 19367 |
| 4 | 02:17:40 | 14478 | 02:38:02 | 10416 |
| 3 | 02:41:11 | 16008 | 02:53:35 | 15164 |
| 2 | 04:30:05 | 23597 | 04:37:14 | 21925 |
| 1 | >24h | 77025 | >24h | 72031 |

My weapon-of-choice was Python. I'm a fan of IntelliJ, so I wrote my code with that.

As you can see, I didn't complete all of them. It's mostly about the time required to complete the later ones. As an example, 19 took way too many hours on a Saturday, so I chose to opt out at that point. I did have time to complete the first part of 23.

1-9 were really trivial ones. The task in 7 was really badly worded, but manageable after a couple of failures. 10 was very tricky because of the optimization requirement. It is possible to populate an entire tree, but that is so heavy on resources and so time-consuming that going for the math was the better way. 11 and anything after it were no longer trivial. 13 was a huge math problem and it took a while to solve. 17 was a 3D game-of-life (a 2D GoL was done in 11 already) and required really careful work. 18 involved solving reverse polish notation calculations and I considered that rather easy. Then came 19, which involves parsing a set of rules, but given the references to other rules, the approach soon becomes tricky and tangled. I completed it and decided it would take too much of my daily hours to complete any subsequent tasks. However, for 23 I did spend a couple of minutes just to realize my approach was badly optimized for any large set of data. At that point I churned.

Initially I did enjoy the tasks, but when the complexity ramped up I was torn. I didn't want to quit just because there was complexity, but on the other hand, spending hours writing code that would be discarded wasn't the best use of my time while Christmas was nearing. At that point I didn't enjoy the tasks anymore, they were more like chores I "had" to do.

Next year, the AoC will probably be arranged as it has been since 2015. I may not participate in that one.
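To illustrate the "going for the math" remark about day 10, here is a minimal Python sketch of one common way to count adapter arrangements without building the whole tree. It is not the code written during the event; it assumes distinct joltage values and a maximum step of 3, and the function name count_arrangements is made up for this example.

```python
def count_arrangements(adapters: list[int]) -> int:
    """Count chains from 0 up to the highest adapter with steps of 1..3."""
    joltages = sorted(adapters)
    if not joltages:
        return 1
    # ways[j] = number of distinct chains that end exactly at joltage j.
    ways = {0: 1}  # the outlet itself
    for j in joltages:
        ways[j] = ways.get(j - 1, 0) + ways.get(j - 2, 0) + ways.get(j - 3, 0)
    # The device sits 3 above the highest adapter, so it adds no new choices.
    return ways[joltages[-1]]


if __name__ == "__main__":
    sample = [16, 10, 15, 5, 1, 11, 7, 19, 6, 12, 4]
    print(count_arrangements(sample))  # prints 8
```

Each joltage is visited once, so the work grows linearly with the input after sorting, instead of exponentially as with a fully populated tree.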

Merry Christmas 2020!

Friday, December 25. 2020

Merry Christmas!

Happy Holidays!

Hyvää Joulua!

Btw. as the maps by Jakub Marian are so cool, here is another one:

Full attribution to his work. Go see the originals at https://jakubmarian.com/merry-christmas-in-european-languages-map/ and https://jakubmarian.com/christmas-gift-bringers-of-europe/. Mr. Marian fully deserves all the possible credit for permission to use his material with attribution and also for the really cool stuff he has made. Check it out yourself!

podman - Running containers in Fedora 31+

Tuesday, November 10. 2020

To clarify, I'll put the word here: Docker

Naming confusion

Next, I'll go and fail at explaining why Docker isn't Docker anymore. There is an article from 2017, OK, I give up. Is Docker now Moby? And what is LinuxKit?, which tries to do the explaining and nearly succeeds. In that article, the word "docker" appears a number of times in different contexts. The word "docker" might mean the company, Docker Inc., the commercial technology with open source packaging, Docker CE, or the paid version, Docker EE. I'll add my own twist: there might be a command docker in your Linux which may or may not have something to do with Docker Inc.'s product.

In short: What you and I both call Docker isn't anymore. It's Moby.

Example, in Fedora 33:

# rpm -q -f /usr/bin/docker

moby-engine-19.03.13-1.ce.git4484c46.fc33.x86_64

Translation: Command docker, located in /usr/bin/, is provided by an RPM-package called moby-engine.

Further, running dnf info moby-engine in Fedora 33:

Name : moby-engine

Version : 19.03.13

Release : 1.ce.git4484c46.fc33

Architecture : x86_64

Size : 158 M

Source : moby-engine-19.03.13-1.ce.git4484c46.fc33.src.rpm

Repository : @System

From repo : fedora

Summary : The open-source application container engine

URL : https://www.docker.com

License : ASL 2.0

Description : Docker is an open source project to build, ship and run any

: application as a lightweight container.

This moby-thingie is good old docker after all!

Fedora confusion

Installing Docker into a Fedora 33 with dnf install docker, making sure the daemon runs with systemctl start docker, pulling an image and then attempting to look inside the container image with a classic:

docker run -it verycoolimagenamehere /bin/bash

... will blow up in your face! What!?

The error message you'll see states the following:

docker: Error response from daemon: OCI runtime create failed: this version of runc doesn't work on cgroups v2: unknown.

Uh. Ok?

- Docker-daemon returned an error.

- OCI runtime create failed (btw. What's an OCI runtime?)

- runc failed (btw. What's a runc?)

- doesn't work on cgroups v2 (btw. What's cgroups and what other versions exist than v2?)

Lot of questions. No answers.

Why is there Fedora confusion?

A Google search will reveal the following information: cgroups is the mechanism which makes Docker tick. There exist versions 1 and 2 of it.

The real nugget is the article Fedora 31 and Control Group v2 by RedHat. I'm not going to copy/paste the contents entirely here, but to put it briefly: In Fedora 31 a decision was made to fall forward into cgroups v2. However, there is a price for doing this, and part of it is broken backwards-compatibility. cgroups v1 and v2 cannot co-exist at the same time. Running v2 has lots of benefits, but the major drawback is with the specific software by Docker Inc., which will not work with this newer tech and apparently will not start working in the near future.

Part of the confusion is that nobody else besides Fedora has the balls to do this. All other major distros are still running cgroups v1. This will probably change sometime, but not soon. Whenever the most popular distros go for v2, all others will follow suit. We've seen this happen with systemd and other similar advances.
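To find out which cgroups version a given machine runs, one heuristic is to look for the cgroup.controllers file at the top of the cgroup mount, which exists on a v2 unified hierarchy. The Python sketch below assumes the conventional mount point /sys/fs/cgroup; the function name cgroup_version is made up for this example, and hybrid setups are simply reported as v1.

```python
import os


def cgroup_version(root: str = "/sys/fs/cgroup") -> int:
    """Return 2 if root looks like a cgroups v2 unified hierarchy, else 1.

    On a v2 system the file cgroup.controllers sits directly in the mount
    point; a v1 system has per-controller subdirectories instead.
    """
    marker = os.path.join(root, "cgroup.controllers")
    return 2 if os.path.isfile(marker) else 1


if __name__ == "__main__":
    print("cgroups v%d" % cgroup_version())
```

On a Fedora 31 or later installation with default settings this would be expected to report v2.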

Mitigating Fedora confusion

When Fedora-people chose to fall forward, they had some backing for it. They didn't simply throw us users out of the proverbial airplane without a parachute. For Fedora 31 (and 32 and 33 and ...) there exists a software package that is a replacement for docker. It is called podman. The website is at https://podman.io/ and it contains more details. Source code is at https://github.com/containers/podman and it has the explanation: "Podman (the POD MANager): A tool for managing OCI containers and pods". In short: It's docker by RedHat.

Installing podman and running it feels like running Docker. Even the commands and their arguments match!

Something from earlier:

podman run -it verycoolimagenamehere /bin/bash

... will work! No errors! Expected Bash-prompt! Nice.

Mitigating differences

There exists a lot of stuff in this world that fully expects the command docker and its configuration ~/.docker/config.json.

A good example is Google Cloud Platform SDK accessing GCP Container Registry. (Somebody from the back row is yelling: AWS ECR! ... which I'll be skipping today. You'll have to figure out how aws ecr get-login-password works by yourself.)

Having installed GCP SDK and running command gcloud auth configure-docker (note! in Fedora 33: CLOUDSDK_PYTHON=python2 gcloud auth configure-docker, to confirm Python 2.x is used) will modify the Docker config-file with appropriate settings. Podman won't read any of that! Uff. Doing a podman pull or podman login into GCR will politely ask for credentials. And nope, don't enter them. That's not a very secure way of going forward.

Throwing a little bit of GCP-magic here:

- (skip this, if you already logged in) Log into GCP:

gcloud auth login

- Display logged in GCP-users with a:

gcloud auth list

- Display the (rather long) OAuth2 credential:

gcloud auth print-access-token '<account-id-here!>'

- Glue this into a podman-command:

podman login \
  -u oauth2accesstoken \
  -p "$(gcloud auth print-access-token '<account-id-here!>')" \
  https://gcr.io

- Success: Login Succeeded!

Now you have successfully authenticated and a podman pull will work from your private container repo.
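The same login can be wrapped into a small script. The following Python sketch is only an illustration under a few assumptions: gcloud and podman are on the PATH, a gcloud login already exists, and the helper names podman_login_argv and gcr_login are hypothetical. Unlike the command above, it feeds the token through podman's --password-stdin option so that it does not appear on the command line.

```python
import subprocess


def podman_login_argv(registry: str = "https://gcr.io") -> list[str]:
    """Build the podman login command; the token is supplied via stdin."""
    return ["podman", "login", "-u", "oauth2accesstoken",
            "--password-stdin", registry]


def gcr_login(registry: str = "https://gcr.io") -> None:
    """Fetch a short-lived token from gcloud and hand it to podman."""
    token = subprocess.run(
        ["gcloud", "auth", "print-access-token"],
        check=True, capture_output=True, text=True,
    ).stdout.strip()
    subprocess.run(podman_login_argv(registry), input=token,
                   check=True, text=True)


if __name__ == "__main__":
    # Only show the command here; call gcr_login() to actually log in.
    print(" ".join(podman_login_argv()))
```

As the access token is short-lived, the login needs to be repeated once the token expires.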

Finally

Lot of confusion.

Lot of questions.

Hopefully you'll find some answers to yours.

Getting rid of Flash from Windows - For good

Wednesday, October 28. 2020

Today, Microsoft released KB4577586, Update for the removal of Adobe Flash Player. This is wonderful news! I've been waiting for this moment to happen for many many years. Many organizations will cease to support Adobe Flash end of this year and this release is an anticipated step on that path. Goodbye Flash!

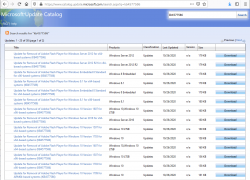

Updates need to be manually downloaded from Microsoft Update Catalog, link: https://www.catalog.update.microsoft.com/search.aspx?q=kb4577586. The reason for this is the earliness. If you're not as impatient as I, the update will go its natural flow and eventually be automatically offered by your Windows.

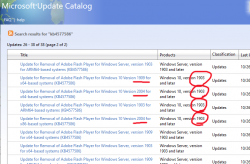

As you can see from the listing, you need to be super-careful when picking the correct file to download. Also, to make things worse, there are some discrepancies in the descriptions:

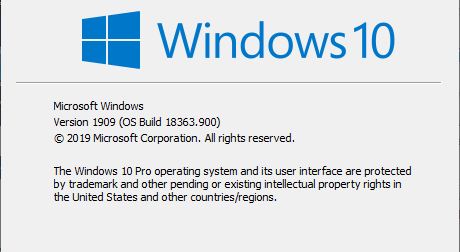

To get the exact version of your Windows, run winver. It will output something like this:

In that example, the package required is for Windows 10 release 1909. To download the correct package, you also need to know the processor architecture. Whether it's AMD-64, Intel-32 or ARM-64 cannot be determined from above information. If you have no idea, just go with x64 for processor architecture, that's a very likely match. Those not running x64 will know the answer without querying.
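For those with Python at hand, the processor architecture can also be read programmatically. The sketch below is an assumption-laden illustration and not the PowerShell helper-script mentioned in this post: it maps the values Python's platform.machine() commonly reports on Windows to the architecture names used in the update listing, and the names CATALOG_ARCH and catalog_arch are made up.

```python
import platform

# Mapping from platform.machine() values commonly seen on Windows to the
# architecture names used in the update listing (an assumption, verify
# against your own machine).
CATALOG_ARCH = {"AMD64": "x64", "x86": "x86", "ARM64": "ARM64"}


def catalog_arch(machine: str) -> str:
    """Translate a platform.machine() string to a catalog architecture."""
    try:
        return CATALOG_ARCH[machine]
    except KeyError:
        raise ValueError("unrecognized architecture: " + machine) from None


if __name__ == "__main__":
    # Print the raw values; on Windows, catalog_arch(platform.machine())
    # gives the name to look for in the listing.
    print(platform.system(), platform.release(), platform.version())
    print(platform.machine())
```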

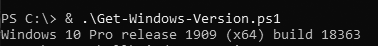

As a software engineer, I obviously wanted to extract all the required information programmatically. I automated the process of getting to know the exact version your particular Windows is running by writing and publishing a helper-script for PowerShell Core. If you are able to run PowerShell Core, the script is available at: https://gist.github.com/HQJaTu/640d0bb0b96215a9c5ce9807eccf3c19. Result will look something like this:

Result will differ on your machine, but that's the output on my Windows 10 Pro release 1909 (x64) build 18363. Couple of other examples are:

Windows 10 Pro release 2004 (x64) build 20241 on my Windows Insider preview

Windows 8.1 Pro with Media Center (x64) build 9600 on my Windows 8.1

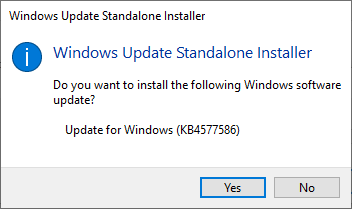

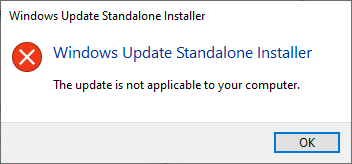

If you managed to pick out the correct .msu-file, on running it, output will query if you want to proceed with installation:

In case of a mismatch, error will say something about that particular update not being applicable to your computer:

After installation, go verify the results of Flash being removed. Running your regular Chrome (or Firefox) won't do the trick. They won't support Flash anyways. What you just did was remove Flash from Windows, aka. Internet Explorer. Go to https://www.whatismybrowser.com/detect/is-flash-installed and observe results:

On a Windows, where KB4577586 hasn't been successfully applied, message will indicate existence of Adobe Flash player:

That's it. Flash-b-gone!

Tracking your location via Mobile network

Sunday, October 25. 2020

Privacy, especially your/mine/everybody's, has been a constantly topical subject ever since The Internet began its commercial expansion in the early 90s. As it is an important topic, the thinking has been that everybody should have the right to privacy. In the EU, the latest regulation is called "on the protection of natural persons with regard to the processing of personal data and on the free movement of such data", or General Data Protection Regulation. In California, USA, the corresponding law is the California Consumer Privacy Act.

Privacy protection gone wrong

Both of the above-mentioned regulations have good intentions. Still, forcing websites to bombard all of their users with stupid questions is a complete waste of bandwidth! Everybody is tired of seeing popups like this while surfing:

Somehow the good intention turned on itself. Literally nobody wants to make those choices before entering a site. There should be a setting in my browser and all those sites should read the setting and act on it without bothering me.

Mobile phone tracking

Meanwhile, your cell service provider is using your data and you won't be offered a set of checkboxes to tick.

As an example, Telia’s anonymized location data helps Finnish Government fight the coronavirus (April 2020). This corporation has a product called Crowd Insights. Anybody with a reasonable amount of money (the actual price of the service is not known) can purchase location data of actual persons moving around a city. There is a brief investigation by the Finnish Chancellor of Justice stating that the service is legal and won't divulge any protected data. The decision (unfortunately, only in Finnish), Paikannustietojen hyödyntäminen COVID-19 –epidemian hillinnässä, states that the service's data is daily or hourly, and while a reasonably accurate location of a mobile device can be obtained, location data that doesn't identify a person is not protected by any laws.

On the topic of COVID-19, Future of Privacy Forum has published an article A Closer Look at Location Data: Privacy and Pandemics, where they raise points from ethics and privacy perspective of such tracking. A good read, that!

Application of mobile movement tracking

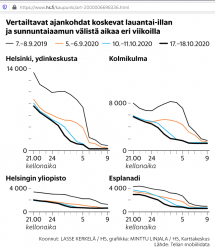

Here is one. A newspaper article titled "Puhelinten sijaintitiedot paljastavat, kuinka ihmismassojen liikkuminen yöllisessä Helsingissä muuttui" (https://www.hs.fi/kaupunki/art-2000006698336.html):

For non-Finnish-speaking readers: this article is about the movement of people in the center of Helsinki. As a reference point, there is Crowd Insights data from September 2019, a time before COVID-19. Movement data from the pandemic is from September 5th, October 10th and 17th. To state the obvious: in 2019 between Saturday 9pm and Sunday 9am people moved a lot. What's also visible is how this global pandemic changed this behaviour. In September 2020 there were no strict regulations for night clubs and bars, which is clearly visible in the data.

Anyway, this is the kind of data that can easily be gathered of you walking around your hometown streets with your mobile in your pocket. Doing the same walk without a mobile device connected to a cell network wouldn't show in that data set.

What! Are they tracking my movements via my cell phone?

Short: Yes.

Long: Yes. Your cell network provider knows at every second which cell tower each device in their network is connected to. They also know the exact location of that cell tower. This coarse location information may or may not be used by somebody.

Everybody knows the Hollywood movie cliché where a phone call is being tracked and somebody throws the phrase "Keep them talking longer, we haven't got the trace yet!". In reality they'll know your location if your phone is turned on. It doesn't have to have an ongoing call or message being received. This is how all cell networks are designed and that's how they have always operated. They know your coarse location at all times. How exact a "coarse" location is, depends. Who has access to the location information is protected by multiple laws. The point is: they have the information. At all times.

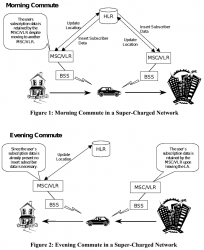

Example illustration from 3GPP spec TR 23.912 Technical report on Super-Charger:

I'm skipping most of the TLAs (Three-Letter Acronyms) in the pic, but the main concept is having the car (with a phone in it) moving around the network. An HLR (or Home Location Register) will always keep track of which BSS (note: I think it's called Radio Network Subsystem, RNS, in UMTS and LTE) the mobile device talks to. This BSS (or RNS) will send updates on any jumping between the serving cells.

To simplify this further: Just replace the car with a phone in your pocket and this fully applies to people bar-hopping in center of Helsinki.

Database of cell towers

As the cell tower locations are the key component when pinpointing somebody's location, we need to know which cell towers exist and their exact locations. Unfortunately telcos think that's a trade secret and won't release such information to the general public. At the same time, from our phones we can see the identifier of the cell tower a phone is connected to and some hints of neighbouring cells. I wrote about iPhone field test mode a couple of years back. What a phone also has is a GPS pinpointing the exact location from which a particular cell tower and a couple of its friends can be seen. Combined with the knowledge that a phone typically connects to the tower with the best signal, it is possible to apply some logic. By gathering a couple more data points, it is possible to calculate a coarse location of the cell tower your phone connects to.

Being an iPhone user, I'm sorry to say an iPhone is not technically suitable for such information gathering. Fortunately, an Android, being much more open (to malware), is. The necessary interfaces exist in the Android system to query for cell tower information with an app like Tower Collector. With this kind of software it is possible to create records of cell tower information and send them to OpenCelliD to be further processed and distributed to other interested parties.
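As a rough illustration of that logic, here is a Python sketch of one very simple estimator: a signal-weighted centroid of GPS fixes taken while connected to the same cell. This is an assumption for illustration only, not a description of how OpenCelliD or any telco actually calculates tower positions. The function name estimate_tower and the sample coordinates are made up.

```python
def estimate_tower(
    observations: list[tuple[float, float, float]],
) -> tuple[float, float]:
    """Estimate a tower position as a signal-weighted centroid.

    Each observation is (latitude, longitude, signal_dbm), recorded by a
    phone while connected to the same cell. A stronger signal (closer to
    0 dBm) gets a larger weight.
    """
    if not observations:
        raise ValueError("need at least one observation")
    # Convert dBm to linear milliwatts so that stronger readings dominate.
    weights = [10 ** (dbm / 10.0) for _, _, dbm in observations]
    total = sum(weights)
    lat = sum(w * o[0] for w, o in zip(weights, observations)) / total
    lon = sum(w * o[1] for w, o in zip(weights, observations)) / total
    return lat, lon


if __name__ == "__main__":
    # Made-up GPS fixes and signal strengths, for demonstration only.
    fixes = [(61.050, 28.180, -75.0), (61.060, 28.200, -95.0),
             (61.055, 28.190, -85.0)]
    print(estimate_tower(fixes))
```

Averaging latitudes and longitudes directly is only reasonable over the short distances a single cell covers, and the result gets better as more data points from different directions are added.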

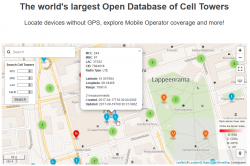

OpenCelliD website contains an interactive map:

The above example is from my home town of Lappeenranta, Finland. What it depicts is an approximation of an LTE cell tower location having the following attributes:

- MCC: 244

- MNC: 91

- LAC: 37322

- CID: 7984918

MCC and MNC indicate the telco. CellID Finder has following information for Finland at https://cellidfinder.com/mcc-mnc#F:

| MCC | MNC | Network | Operator or brand name | Status |
| --- | --- | --- | --- | --- |
| 244 | 3 | DNA Oy | DNA | Operational |
| 244 | 5 | Elisa Oyj | Elisa | Operational |
| 244 | 9 | Finnet Group | Finnet | Operational |
| 244 | 10 | TDC Oy | TDC | Operational |
| 244 | 12 | DNA Oy | DNA | Operational |
| 244 | 14 | Alands Mobiltelefon AB | AMT | Operational |
| 244 | 15 | Samk student network | Samk | Operational |
| 244 | 21 | Saunalahti | Saunalahti | Operational |
| 244 | 29 | Scnl Truphone | | Operational |
| 244 | 91 | TeliaSonera Finland Oyj | Sonera | Operational |

What a LAC (Location Area Code) and CID indicate cannot be decoded without a database like OpenCelliD. The Wikipedia article GSM Cell ID gives some hints about LAC and CID. The page also lists other databases you may want to take a look at.
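The MCC/MNC part of the lookup is simple enough to do in code. The Python sketch below copies the Finnish networks from the table above into a dictionary; the names FINNISH_NETWORKS and network_name are made up for this example, and LAC and CID are deliberately left out as they need a real database.

```python
# (MCC, MNC) -> network name, copied from the CellID Finder table above.
FINNISH_NETWORKS = {
    (244, 3): "DNA Oy",
    (244, 5): "Elisa Oyj",
    (244, 9): "Finnet Group",
    (244, 10): "TDC Oy",
    (244, 12): "DNA Oy",
    (244, 14): "Alands Mobiltelefon AB",
    (244, 15): "Samk student network",
    (244, 21): "Saunalahti",
    (244, 29): "Scnl Truphone",
    (244, 91): "TeliaSonera Finland Oyj",
}


def network_name(mcc: int, mnc: int) -> str:
    """Return the network for an MCC/MNC pair, or 'unknown'."""
    return FINNISH_NETWORKS.get((mcc, mnc), "unknown")


if __name__ == "__main__":
    # The cell from the example above: MCC 244, MNC 91.
    print(network_name(244, 91))  # prints TeliaSonera Finland Oyj
```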

COVID-19 tracking

Apple and Google, being the manufacturers of the major operating systems for mobile devices, combined forces and created Exposure Notifications. This technology does NOT utilize cell towers or GPS. It works only on Bluetooth LE.

As mentioned in appropriate Wikipedia article, the protocol is called Decentralized Privacy-Preserving Proximity Tracing (or DP-3T for short).

Finally

The key takeaway from all this is:

Location of your mobile device is always known.

Your location, movements between locations and the timestamps of when you did the moving (or not moving) are actively being used to track every one of us. That's because the technology in mobile networks requires the information.

If this information is shared to somebody else, that's a completely different story.

Mountain biking in Lappeenranta /w GoPro

Friday, October 23. 2020

To test my new GoPro, I published a track of some bicycling into Jälki.fi.

GPS-track is at https://jalki.fi/routes/4070-tyyskan-rantareitti-2020-09-24.

4K video is at https://youtu.be/TUIbstiFisE.

Advance-fee scam - 2.0 upgrade /w Bitcoin

Thursday, October 22. 2020

From Wikipedia https://en.wikipedia.org/wiki/Advance-fee_scam:

An advance-fee scam is a form of fraud and one of the most common types of confidence tricks. The scam typically involves promising the victim a significant share of a large sum of money, in return for a small up-front payment, which the fraudster requires in order to obtain the large sum.

Any Internet user knows this beloved scam is very common, is used actively all the time and has a number of aliases, including Nigerian scam and 419 scam.

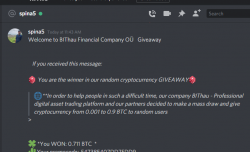

I was on my computer minding my own business when a bot approached me in Discord (that chat-thing gamers use):

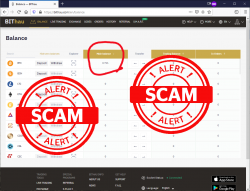

Basically what they're saying is that I'd get ~7000 € worth of Bitcoins by going to their scam-site, registering as a new user and applying the given promo code.

Ok. For those whose bullshit detector hasn't started dinging already, think about this for a second:

Why would a complete stranger offer you 7k€ in Discord!!

no

they

wouldn't.

Being interested in their scam, I went for it. Clicked the link to their website, registered a new account, followed instructions and applied the promo code. Hey presto! I was rich!

I was a proud owner of 0.711 BTC. Serious money that!

Further following the instructions:

Obviously I wanted to access my newly found riches. Those precious Bitcoins were calling my name and I wanted to transfer them out to a wallet I controlled and could use them for buying new and shiny things.

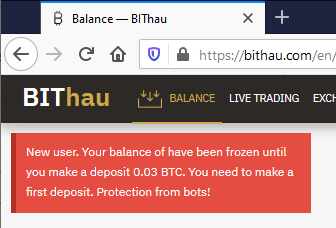

Not minding the 0.005 BTC transaction fee, this is what happens when you try accessing your Bitcoin giveaway prize:

Now they're claiming my new account has been frozen, because they think I'm a bot. Thawing my funds would be easy, simply transfer ~300€ worth of my money to them! As I wanted to keep my own hard-earned money, I did not send them the requested 0.03 BTC. I'm 100% sure they'll keep inventing "surprising" costs and other things requiring subsequent transfers of funds. I would never ever be able to access the fake prize they awarded me.

Nice scam!

Custom X.509 certificate in Windows 10 Remote Desktop Service

Wednesday, October 21. 2020

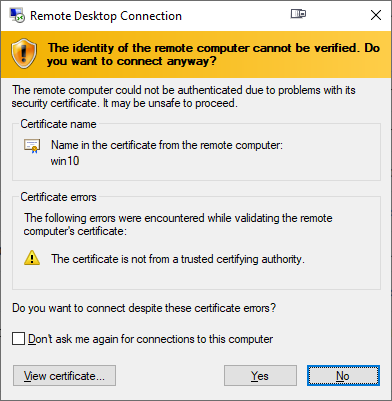

On a non-AD environment, this is what your average Windows 10 Remote Desktop client will emit on a connection:

Those who are AD-domain admins may have seen this only rarely. If an AD has Certification Authority installed, it is easy and typical to deploy certs from it to workstations and never see the above message. The Net is full of instructions like Replace RDP Default Self Sign Certificate.

Problem that needs to be solved

For anybody like me, not running an AD-domain, simply having couple of Windows 10 -boxes with occasional need to RDP into them, that popup becomes familiar.

Note: I'll NEVER EVER click on Don't ask me again -prompts. I need to know. I want to know. Suppressing such information is harmful. Getting to know and working on the problem is The WayⓇ.

Gathering information about solution

If this was easy, somebody would have created simple instructions for updating RDP-certificates years ago. Decades even. But no. No proper and reasonably easy solution exists. Searching The Net far & wide results only in bits and pieces, but no real tangible turn-key(ish) solution.

While in quest for information, given existence of The Net, I find other people asking the same question. A good example is: How to provide a verified server certificate for Remote Desktop (RDP) connections to Windows 10.

As presented in the above StackExchange answer, the solution is a simple one (I think not!!). These five steps need to be done to complete the update:

- Purchase a genuine verified SSL-certificate

  - Note: It's a TLS-certificate! The mentioned protocol has been deprecated for many years. Even TLS 1 and TLS 1.1 have been deprecated. So it's a X.509 TLS-certificate.

  - Note 2: Ever heard of Let's Encrypt? ZeroSSL? Buypass? (and many others) They're giving away perfectly valid and trusted TLS-certificates for anybody who shows up and can prove they have control over a domain. No need to spend money on that.

- Wrap the precious certificate you obtained in step 1) into a PKCS#12-file. A .pfx as Windows imports it.

  - Note: Oh, that's easy! I think PKCS#12 is the favorite file format of every Regular Joe computer user. Not!

- Install the PKCS#12 from step 2) into Windows computer account and make sure user NETWORK SERVICE has access to it.

  - Note: Aow come on! Steps 1) and 2) were tedious and complex, but this is wayyyyyy too hard to even begin to comprehend! Actually doing it is beyond most users.

- Open a Registry Editor and add the SHA-1 fingerprint of the certificate into HKEY_LOCAL_MACHINE\SYSTEM\CurrentControlSet\Control\Terminal Server\WinStations\RDP-Tcp\ into a binary value called SSLCertificateSHA1Hash.

  - Note: Oh really! Nobody knows what's a SHA-1 fingerprint nor how to extract that from a certificate in a format suitable to a registry binary value!

- Reboot the Windows!

  - Note: As all Windows-operations, this requires a reboot.

Mission accomplished! Now the annoying message is gone. Do you want to guess what'll happen after 90 days pass? That's the allotted lifespan of a Let's Encrypt -certificate. Yup. You'll be doing all of the above again. Every. Single. Painstaking. Step.
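To take some of the mystery out of the SHA-1 fingerprint step: the fingerprint is simply the SHA-1 digest of the certificate in its binary (DER) form, and the registry binary value is those 20 raw bytes. Here is a minimal Python sketch using only the standard library. It assumes the PEM text contains exactly one certificate, and the function names sha1_fingerprint and registry_bytes are hypothetical.

```python
import hashlib
import ssl
import sys


def sha1_fingerprint(pem_text: str) -> str:
    """Return the SHA-1 fingerprint of a single PEM certificate as hex."""
    der = ssl.PEM_cert_to_DER_cert(pem_text)
    return hashlib.sha1(der).hexdigest()


def registry_bytes(fingerprint_hex: str) -> bytes:
    """Convert a hex fingerprint (colons or spaces allowed) to 20 raw bytes."""
    cleaned = fingerprint_hex.replace(":", "").replace(" ", "")
    raw = bytes.fromhex(cleaned)
    if len(raw) != 20:
        raise ValueError("a SHA-1 fingerprint is exactly 20 bytes")
    return raw


if __name__ == "__main__":
    # Usage: python fingerprint.py path/to/certificate.pem
    if len(sys.argv) > 1:
        with open(sys.argv[1], "r", encoding="ascii") as pem_file:
            print(sha1_fingerprint(pem_file.read()))
```

The printed hex string can be compared against the value reported for the currently installed RDP-certificate.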

Problems needing to be solved

Let's break this down. As the phrase goes, an elephant is a mighty big creature and eating one is a big task. It needs to be done one bit at a time.

0: Which cert is being used currently?

If you simply want to get the SHA-1 hash of the currently installed RDP-certificate, a simple (or maybe not?) command of:

wmic /namespace:"\\root\cimv2\TerminalServices" PATH "Win32_TSGeneralSetting" get "SSLCertificateSHA1Hash"

... will do the trick. No admin-permissions needed or anything fancy.

To state the obvious problem: you'll be presented with a hex-string, but you have zero idea what it points to and what to do with this information.

Hint:

You can browse the Windows Certificate Machine Store. System certificates are not stored in your personal Certificate Store, so carefully point to the correct container. By default certificates are listed by subject, not SHA-1 hash. Luckily the self-signed RDP-cert is located in a folder "Remote Desktop", narrowing down the set.

To state the second obvious problem: WMI is a tricky beast. Poking around it from CLI isn't easy.

1: The certificate

Ok. With that you're on your own. If you cannot figure how Let's Encrypt works, doing this may not be your thing.

2: PKCS#12 wrapping

In most scenarios, a certificate is delivered in a PEM-formatted file or a set of two files (one for the public and a second for the private key). PEM-format is native in *nix environments and all of the tooling there can handle the data with ease. Converting the PEM-data into an interim (Microsoft created) format for the Microsoft-world can be done, but is a bit tricky in a *nix. The use of this PKCS#12-formatted data is ephemeral: the certificate will stay in the file for only a short while before being imported to Windows, after which there is no need for the file anymore. A cert can be re-packaged if needed as long as the original PEM-formatted files exist. Also, the certificate data can be exported from Windows back to a file, if needed.

While PEM-format is native in *nix for certs, it is completely unsupported in Windows. A simple operation of "here is the PEM-file, import it to Windows" is literally impossible to accomplish! There is a reason why instructions have a rather complex spell with the openssl-command to get the job done.
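For reference, the openssl spell in question usually boils down to a single pkcs12 -export invocation. Below is a Python sketch that builds and runs such a command. It assumes openssl is on the PATH and that the export password is supplied in an environment variable; the file names, the variable name PFX_PASSWORD and the helper names are hypothetical.

```python
import subprocess


def pkcs12_export_argv(cert_pem: str, key_pem: str, out_pfx: str,
                       password_env: str = "PFX_PASSWORD") -> list[str]:
    """Build an `openssl pkcs12 -export` command line.

    The export password is read by openssl from the environment variable
    named in password_env, so it never appears on the command line.
    """
    return ["openssl", "pkcs12", "-export",
            "-in", cert_pem, "-inkey", key_pem,
            "-out", out_pfx,
            "-passout", "env:" + password_env]


def wrap_as_pkcs12(cert_pem: str, key_pem: str, out_pfx: str) -> None:
    """Run the export; raises CalledProcessError if openssl fails."""
    subprocess.run(pkcs12_export_argv(cert_pem, key_pem, out_pfx), check=True)


if __name__ == "__main__":
    # Only print the command; the file names here are placeholders.
    print(" ".join(pkcs12_export_argv("cert.pem", "privkey.pem", "rdp.pfx")))
```

The resulting .pfx is the ephemeral file described above: it can be deleted right after the import, as it can always be re-created from the PEM-files.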

2.1: What others have done

When talking about PowerShell-scripting and PEM-format, I'll definitely have to credit people of PKISolutions. They publish a very nice library of PSPKI (source code is at https://github.com/PKISolutions/PSPKI) with PEM-import capability. As Windows Certificate Store is a complex beast, that code doesn't directly work as I'd need it to be for importing into Machine Store. Given existence of source code, the logic they wrote can be lifted, modified and re-used to do what is needed for RDP-cert installation process.

Among PKISolutions' excellent work is blog post by Vadims Podāns, Accessing and using certificate private keys in .NET Framework/.NET Core. There he explains in detail dark/bright/weird ages about how Microsoft's libraries have approached the subject of PKI and how thing have evolved from undefined to well-behaving to current situation where everything is... well... weird.

I mention this because it is imperative for a practical approach. PSPKI-library works perfectly in PowerShell 5.x, which is built on Microsoft .NET Framework 4.5. That particular framework version is a bit old and, given its age, it falls into the bright bracket of doing things.

However, not living in the past, the relevant version of PowerShell is PowerShell Core. At the time of writing the LTS (or Long-Term-Support) version is 7.0. Version 7.1 is in preview and version 6 is still actively used. Those versions obviously run on modern .NET Core, an open-source version of .NET running on Windows, Linux and macOS. In the transition of Microsoft .NET into open-source .NET Core, most operating system -dependent details have changed while bumping things from a closed-source-Windows-only thingie. This most definitely includes implementation and interface details of Public Key Infrastructure.

In short: PSPKI doesn't work anymore! It did in "bright ages" but not anymore in current "weird ages".

2.2: What I need to get done

Sticking with an obsoleted PowerShell-version is a no-go. I need to get stuff working with something I actually want to run.

In detail, this involves figuring out how .NET Core's System.Security.Cryptography.CngKey can import an existing key into Machine Store as an exportable one. That was a bit tricky even in .NET Framework's System.Security.Cryptography.RSACryptoServiceProvider. Also note that when I talk about PKI, I most definitely mean RSA and other practical algorithms like ECDSA, which is fully supported in Cryptography Next Generation (CNG). The biggest problem with CNG is the lack of usable documentation and practical examples.

Having elliptic curve support is important. RSA is absolutely not obsoleted and I don't see it being so in near future. It's the classic: having options. This is something I already addressed in a blog post this spring.

Most people are using only RSA and can get their job done using old libraries. I ran out of luck as I needed to do something complex. Using new libraries was the only way of getting forward. That meant lots of trial and error. Something R&D is about.

3: Import the PKCS#12-packaged certificate into a proper certificate store of a Windows-machine

When the very difficult part is done and a PKCS#12-file exists and contains a valid certificate and the private key of it, importing the information is surprisingly easy to accomplish with code.

On the other hand, this one is surprisingly complex to accomplish manually. Good thing I wasn't aiming for that.

4: Inform RDP-services which certificate to use

Getting a SHA-1 hash of an X.509 certificate is trivial. Stamping a well-known value into registry is easy. Having correct permissions set was nearly impossible, but ultimately it was doable.
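
To show how trivial the hash part is: the thumbprint is simply the SHA-1 digest of the DER-encoded certificate, and a PEM-file is the same DER data in Base64 between two armor lines. A minimal sketch, assuming the file contains exactly one certificate (the function names are mine, for illustration only):

```python
import base64
import hashlib


def pem_to_der(pem_text):
    """Strip the -----BEGIN/END----- armor lines and Base64-decode the rest.

    Assumption: pem_text holds a single PEM block.
    """
    body = [line.strip() for line in pem_text.splitlines()
            if line.strip() and not line.startswith("-----")]
    return base64.b64decode("".join(body))


def sha1_thumbprint(pem_text):
    """Return the SHA-1 digest of the DER bytes as 40 upper-case hex characters."""
    return hashlib.sha1(pem_to_der(pem_text)).hexdigest().upper()
```

The upper-case hex string is the same form of value as the thumbprint printed in the example run further down.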

5: Make sure RDP-services will use the certificate

For this, there are a number of ways. Many of them will involve a reboot or restarting the service with a PowerShell-spell of:

Restart-Service -DisplayName "Remote Desktop Services" -Force

Surprisingly, on a closer look there is a way to accomplish this step without rebooting anything. It's just not well known nor well documented, but Windows Management Instrumentation (or wmic) can do that too! Assuming the SHA-1 thumbprint of the certificate was in variable $certThumbprint, running this single-line command will do the trick:

wmic /namespace:"\\root\cimv2\TerminalServices" PATH "Win32_TSGeneralSetting" Set "SSLCertificateSHA1Hash=$certThumbprint"

It will update registry to contain appropriate SHA-1 hash, confirm the access permissions and inform RDP-service about the change. All of which won't require a reboot nor an actual restart of the service. Imagine doing the cert update via RDP-session and restarting the service. Yup. You will get disconnected. Running this WMI-command won't kick you out. Nice!

Solution

Set of tools I wrote is published in GitHub: https://github.com/HQJaTu/RDP-cert-tools

As usual, this is something I'm using for my own systems, so I'll maintain the code and make sure it keeps working in this rapidly evolving world of ours.

Example run

On the target Windows 10 machine, this is how updating my cert would work (as a user with Administrator permissions):

PS C:\> .\update-RDP-cert.ps1 `

-certPath 'wildcard.example.com.cer' `

-keyPath 'wildcard.example.com.key'

Output will be:

Loaded certificate with thumbprint 1234567890833251DCCF992ACBD4E63929ABCDEF

Installing certificate 'CN=*.example.com' to Windows Certificate Store

All ok. RDP setup done.

That's it. You're done!

Example run with SSH

As I mentioned earlier, I'm using Let's Encrypt. There is a blog post about how I approach getting the certificates in my Linux with Acme.sh. There is an obvious gap between getting certs from LE with a Linux and using the cert in Windows 10. Files in question need to be first transferred and then they can be used.

Realistic example command I'd run to first transfer the newly issued LE-cert from my Linux box to be used as RDP-cert would be:

PS C:\> .\get-RDP-cert.ps1 `

-serverHost server.example.com `

-serverUser joetheuser `

-serverAuthPrivateKeyPath id_ecdsa-sha2-nistp521 `

-remotePrivateKeyPath 'certs/*.example.com/*.example.com.key' `

-remoteCertificatePath 'certs/*.example.com/*.example.com.cer'

The obvious benefit is a simple single command to get and install an RDP-certificate from Linux to Windows. All of the complexity will be taken out. My script will even clean the temporary files to not leave any private key files floating around.

Finally

Enjoy!

Admins/users: If you enjoy this tool, let me know. Drop me a comment.

Developers: If you love my CNG-import code, feel free to use it in your application. The more people know how it works, the better.

OpenSSH 8.3 client fails with: load pubkey invalid format - Part 2

Sunday, September 13. 2020

The original blog post is here.

Now Mr. Stott approached me with a comment. He suggested to check the new OpenSSH file format.

I was like "What?! New what? What new format!".

The obvious next move was to go googling the topic. And yes, indeed there exist two common formats for stored OpenSSH keys. The two articles I found most helpful were The OpenSSH Private Key Format and Openssh Private Key to RSA Private Key. The ssh-keygen man-page states:

-m key_format

Specify a key format for key generation, the -i (import), -e (export) conversion options, and the -p change passphrase operation.

The latter may be used to convert between OpenSSH private key and PEM private key formats.

The supported key formats are: “RFC4716” (RFC 4716/SSH2 public or private key), “PKCS8” (PKCS8 public or private key) or “PEM” (PEM public key).

The two commonly used formats can be identified by the first line of the private key. The old format has the header of

-----BEGIN EC PRIVATE KEY-----. Obviously, for those who are using RSA keys, the word "EC" would be "RSA". Ed25519 keys are always stored in the new format. I've been using ECDSA for a while and am considering moving forward with Ed25519 when all of my clients and servers have proper support for it.
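
Telling the formats apart from the first line can be sketched in a few lines. This is only an illustration of the header check, the function name and the returned labels are my own:

```python
def private_key_format(first_line):
    """Classify a private key file by its first (armor) line."""
    header = first_line.strip()
    if header == "-----BEGIN OPENSSH PRIVATE KEY-----":
        return "new OpenSSH format"
    # Covers headers such as "EC PRIVATE KEY" and "RSA PRIVATE KEY".
    if header.startswith("-----BEGIN ") and header.endswith(" PRIVATE KEY-----"):
        return "old PEM-style format"
    return "unknown"
```

Feeding it the first line of each file in ~/.ssh/ gives a quick inventory of which keys are stored in which format.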

I've always "loved" (to hate) SSH's (non-)intuitive user experience. As suggested by all sources, to convert my existing key to the new format all I need is to whip up a key generator and use it to change the passphrase. Yeah.

As my OpenSSH-client is OpenSSH_8.3p1 31 Mar 2020, its ssh-keygen will default to output keys in the new format. Depending on your version, the defaults might vary. Anyway, if you're on a really old version, you won't be having the mentioned problem in the first place.

Warning: Changing the private key passphrase will execute an in-place replace of the file. If you'll be needing the files in the old format, best back them up first. Also, you can convert the format back if you want. It won't produce an exact copy of the original file, but it will be in the old format.

For those not using passphrases in their private files: you can always enter the same passphrase (nothing) to re-format the files. The operation doesn't require the keys to have any.

Example conversion:

$ ssh-keygen -p -f my-precious-SSH-key

Enter old passphrase:

Enter new passphrase (empty for no passphrase):

Enter same passphrase again:

Your identification has been saved with the new passphrase.

Now the first line of the private key stands at:

-----BEGIN OPENSSH PRIVATE KEY-----

Notice how the key type has been changed into "OPENSSH". The key is still an ECDSA-key, but has been stored in a different format.

Testing with the new key indicates a success. No more warnings, but connectivity is still there. After the conversion, my curves are still elliptic in the right places for the server to grant access! Nice.

Summer pastime - construction

Tuesday, September 8. 2020

Every summer I tend to do some construction work. By construction, I don't mean writing software or fiddling around with computers. By this I actually mean the act of building something from timber and bricks by attaching stuff together with screws and nails to form something new. Any person who owns property knows there is always something needing fixing, facelift or demolition. Also, anybody who has taken such a venture will also know how you can sink your time and money while at it. In case you didn't get the hint: what I'm trying to do here is explain my absence of blogging.

This year, I tore down the back terrace and re-built it. While at it (btw. it's not completed yet), I found a number of analogies with software engineering. Initially I had a perfectly good back terrace which (almost) served its purpose. It wasn't perfectly architected nor implemented, it was kinda thrown together like your basic PHP-website. It kinda worked, but there were a few kinks here and there. And to be absolutely clear: I didn't architect nor implement the original one. I just happened to be there, use it and eventually alter the original spec.

On moving in, I ordered really nice glassing to the terrace. Everything worked fine for many years and I was happy. This same thing happens with your really cheap hosting provider: years pass by and eventually it will hit the fan. When it happens, you're left alone without any kind of support wondering what happened and how you're going to fix the site. I found out that by adding the terrace glassing, I had altered the requirements. Now there existed an implicit requirement for the terrace to stay level, as in not move. At all. Any minuscule movement will be ... well ... not good for your glassing, making the glasses not slide in their assigned rails as well as originally intended. Exactly like your cheap website, I had no idea how the entire thing was architected. And any new requirements would de-rail the implementation (in this case: literally) making reality hit me in the forehead (in this case: literally). During those years of successful living the terrace had moved and sunk a bit into soft sand. Not much, but enough for the glassings to mis-fit.

Upon realizing this, there was no real alternative. The old design had to go and a new one needed to be made. Like in a software project, I began by investigating what was implemented. In construction you would read this as: removing already constructed materials enough to be able to determine how the terrace was founded and how it was put together. In software engineering investigation is always easier and less intrusive, leaving no gaping holes in the structure. In this project I simply took a crowbar and let it rip. Also, during the re-thinking period I came up with completely new requirements. Obviously I didn't want the thing to be sinking nor moving, and I also wanted to have the bottom rails of the glassing on top of something hard instead of wood. Any organic material, like wood, has the tendency to twist, warp, shrink, expand and rot. When talking about millimeter accuracy of a glassing, that's not an optimal attribute in a construction material. Experience has shown that when wood does all of the mentioned things, it does them in the wrong way, making your life miserable. So, no more wood. More bricks.

This is what it nearly looked like in the beginning and how it looks like now (I'm skipping the in-between pics simply because they're boring):

Now everything is back and my new spec has been implemented. During the process of demolition, I yanked out couple of kilos of rusty nails:

Personally, I don't use many nails, not even with a nail gun. My preference, when it makes sense, is always to attach everything with a screw and I think an equal amount of screws has been put into appropriate places to hold the thing tightly together.

Moving forward, I obviously want to complete the new terrace extension. Also, I'd love to get back to the computer. Blogging, Snowrunner and such.

Memory lane: My C-64 source code from -87

Saturday, July 25. 2020

When I was a kid growing up with computers, there was one (1) definite medium for a Finnish nerd to read. MikroBitti.

Wikipedia describes MikroBitti as:

a Finnish computer magazine published in Helsinki, Finland

For any youngster it will be extremely difficult to comprehend an era of computing without Internet. It did exist, I did live (and almost survive) through it.

Among the scarce resources was code. Magazines printed on paper published source code for platforms of that era. Regular people copied the code by typing (mostly incorrectly) in an attempt to obtain more working software. As we know, a single mis-typed character in a 10000 character code will crash the entire thing, at minimum produce unpredictable results. Then came modems and era of BBSes. Before that happened, I was sure to learn everything I could from those magazine-published codes. I did that even for platforms I didn't own nor have access to.

Cover and contents.

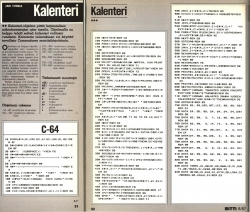

A trivial C-64 BASIC application by an unknown software engineer wanna-be producing a calendar for a given year. It even could print the calendar if you owned a printer.

Background info:

The code was written with a C-128 in C-64 mode. I was aiming for a larger audience C-64 had at the time. I don't remember the fee I received from this, but in my imagination it must have been something around 300 FIM. By using the Value of money converter @ stat.fi, 300 FIM in 1987 would equal to ~95 € in 2019. At the time, the low amount didn't matter! That was the first ever monetary compensation I received for doing something I was doing anyway. For all day, every day.

The brief intro for the calendar app was cut half by editor. What remains is a brief Finnish introduction about the purpose of the app and for other wanna-be software engineers a description what the variables in the code do.

Enjoy! If you find bugs, please report.

Arpwatch - Upgraded and explained

Friday, July 24. 2020

For many years I've run my own systems at home. It's a given that most of you do much less system running than I do. There are servers and network switches and wireless routers, battery-backed power supplies and so on. Most of that I've written about in this blog earlier.

There are many security aspects any regular Jane lay-person won't spend a second thinking of. One of them is: What hardware runs on my home network? In my thinking that question is in the top 3 -list.

The answer to that one is very trivial and can be found easily from your own network. Ask the network! It knows.

ARP - Address Resolution Protocol

This is in the basics of IPv4 networking. A really good explanation can be found from a CCNA (Cisco Certified Network Associate) study site https://study-ccna.com/arp/: a network protocol used to find out the hardware (MAC) address of a device from an IP address. Well, to elaborate on that. Every single piece of hardware has a unique identifier in it. You may have heard of IMEI in your 3G/4G/5G phone, but as your phone also supports Wi-Fi, it needs to have an identifier for Wi-Fi too. A MAC-address.

Since Internet doesn't work with MAC-addresses, a translation to an IP-address is needed. Hence, ARP.

Why would you want to watch ARPs?

Simple: security.

If you know every single MAC-address in your own network, you'll know which devices are connected into it. If you think of it, there exists a limited set of devices you WANT to have in your network. Most of them are most probably your own, but what if one isn't? Wouldn't it be cool to get an alert quickly every time your network sees a device it has never seen before. In my thinking, yes! That would be really cool.

OUIs

Like in shopping-TV, there is more! A 48-bit MAC-address uniquely identifies the hardware connected to an Ethernet network, but it also identifies the manufacturer. Since IEEE is the standards body for both wired and wireless Ethernet (aka. Wi-Fi), they maintain a database of Organizationally unique identifiers.

An organizationally unique identifier (OUI) is a 24-bit number that uniquely identifies a vendor, manufacturer, or other organization.

OUIs are purchased from the Institute of Electrical and Electronics Engineers (IEEE) Registration Authority by the assignee (IEEE term for the vendor, manufacturer, or other organization).

The list is freely available at http://standards-oui.ieee.org/oui/oui.csv in CSV-format. Running couple sample queries for hardware seen in my own network:

$ fgrep "MA-L,544249," oui.csv

MA-L,544249,Sony Corporation,Gotenyama Tec 5-1-2 Tokyo Shinagawa-ku JP 141-0001

$ fgrep "MA-L,3C15C2," oui.csv

MA-L,3C15C2,"Apple, Inc.",1 Infinite Loop Cupertino CA US 95014
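
The same lookup can be done programmatically. Here is a minimal sketch, assuming the CSV starts with a header row of Registry, Assignment, Organization Name, Organization Address (the header is my assumption, the two data rows are the ones above). The function names and the example MAC-addresses are made up:

```python
import csv
import io


def load_oui_table(csv_file):
    """Map the 24-bit assignment (upper-case hex) to the organization name."""
    table = {}
    for row in csv.reader(csv_file):
        # Only MA-L rows are used; the header row is skipped by this check.
        if len(row) >= 3 and row[0] == "MA-L":
            table[row[1].upper()] = row[2]
    return table


def vendor_for_mac(mac, table):
    """Look up the vendor by the first three octets (the OUI) of a MAC-address."""
    oui = mac.replace(":", "").replace("-", "").upper()[:6]
    return table.get(oui, "unknown")


SAMPLE = '''Registry,Assignment,Organization Name,Organization Address
MA-L,544249,Sony Corporation,Gotenyama Tec 5-1-2 Tokyo Shinagawa-ku JP 141-0001
MA-L,3C15C2,"Apple, Inc.",1 Infinite Loop Cupertino CA US 95014
'''

if __name__ == "__main__":
    table = load_oui_table(io.StringIO(SAMPLE))
    print(vendor_for_mac("54:42:49:00:00:01", table))  # hypothetical device address
```

Using the csv module instead of plain string splitting matters here, because names like "Apple, Inc." contain a comma inside quotes.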

As we all know, CSV is handy but ugly. My favorite tool Wireshark does pre-process the ugly CSV into something it can chew without gagging. In Wireshark source code there is a tool, make-manuf.py producing output file of manuf containing the information in a more user-friendly way.

Same queries there against Wireshark-processed database:

$ egrep "(54:42:49|3C:15:C2)" manuf

3C:15:C2 Apple Apple, Inc.

54:42:49 Sony Sony Corporation

However, arpwatch doesn't read that file, a minor tweak is required. I'm running following:

perl -ne 'next if (!/^([0-9A-F:]+)\s+(\S+)\s+(.+)$/); print "$1\t$3\n"' manuf

... and it will produce a new database usable for arpwatch.
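
For those who don't speak Perl, the one-liner keeps the first and third column of each matching line and drops everything else. A rough Python equivalent with the same regular expression (the function name is mine):

```python
import re

# Same pattern as in the Perl one-liner: prefix, short name, long name.
MANUF_LINE = re.compile(r"^([0-9A-F:]+)\s+(\S+)\s+(.+)$")


def manuf_to_arpwatch(lines):
    """Yield 'prefix<TAB>long name' for every line matching the manuf layout."""
    for line in lines:
        match = MANUF_LINE.match(line.rstrip("\n"))
        if match:
            yield f"{match.group(1)}\t{match.group(3)}"
```

Comment lines and anything else not matching the pattern are silently skipped, just like with next if in the Perl version.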

Trivial piece of information: Apple, Inc. has 789 OUI-blocks in the manuf-file. Given 24-bit addressing they have 789 times 16M addresses available for their devices. That's over 13 billion device MAC-addresses reserved. Nokia has only 248 blocks.
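
The arithmetic behind those numbers, for anybody wanting to check: each OUI-block leaves 24 bits for the device part, so one block holds 2^24 addresses.

```python
ADDRESSES_PER_OUI = 2 ** 24  # 24 bits left for the device part of a 48-bit MAC


def addresses_for(blocks):
    """Total number of MAC-addresses available in the given number of OUI-blocks."""
    return blocks * ADDRESSES_PER_OUI


print(f"{addresses_for(789):,}")  # 13,237,223,424
print(f"{addresses_for(248):,}")  # 4,160,749,568
```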

Practical ARP with a Blu-ray -player

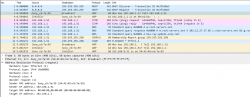

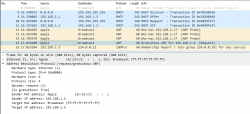

Let's take a snapshot of traffic.

This is a typical boot sequence of a Sony Blu-ray player BDP-S370. What happens is:

- (Frames 1 & 2) Device will obtain an IPv4-address with DHCP, Discover / Offer / Request is missing the middle piece. Hm. weird.

- (Frame 3) Instantly after knowing the own IPv4-address, the device will ARP-request the router (192.168.1.1) MAC-address as the device wants to talk into Internet.

- (Frames 5 & 6) Device will ping (ICMP echo request) the router to verify its existence and availability.

- (Frames 7-9) Device won't use DHCP-assigned DNS, but will do some querying of its own (discouraged!) and check if a new firmware is available at blu-ray.update.sony.net.

- (Frame 12) Device starts populating its own ARP-cache and will query for a device it saw in the network. Response is not displayed.

- (Frames 13 & 14) Router at 192.168.1.1 needs to populate its ARP-cache and will query for the Blu-ray player's IPv4-address. Device will respond to request.

- Other parts of the capture will contain ARP-requests going back and forth.

Practical ARP with a Linux 5.3

Internet & computers do evolve. What we saw there in a 10 year old device is simply the old way of doing things. This is how ARP works in a modern operating system:

This is a typical boot sequence. I omitted all the weird and unrelated stuff and that makes the first frame #8. What happens in the sequence is:

- (Frames 8-11) Device will obtain an IPv4-address with DHCP, Discover / Offer /Request / Ack -sequence is captured in full.

- (Frames 12-14) Instantly after knowing the own IPv4-address, the device will ARP-request the IPv4 address assigned into it. This is a collision-check to confirm nobody else in the same LAN is using the same address.

- (Frame 15) Go for a Gratuitous ARP to make everybody else's life easier in the network.

  - Merriam-Webster will define "gratuitous" as: not called for by the circumstances : not necessary, appropriate, or justified : unwarranted

  - No matter what, Gratuitous ARP is a good thing!

- (Frame 16) Join IGMPv3 group to enable multicast. This has nothing to do with ARP, though.

The obvious difference is the existence of Gratuitous ARP "request" the device did instantly after joining the network.

- A gratuitous ARP request is an Address Resolution Protocol request packet where the source and destination IP are both set to the IP of the machine issuing the packet and the destination MAC is the broadcast address ff:ff:ff:ff:ff:ff. A new device literally is asking questions regarding the network it just joined from itself! However, the question asking is done in a very public manner, everybody in the network will be able to participate.

- Ordinarily, no reply packet will occur. There is no need to respond to an own question into the network.

- In other words: A gratuitous ARP reply is a reply to which no request has been made.

- Doing this seems not-so-smart, but gratuitous ARPs are useful for four reasons:

- They can help detect IP conflicts. Note how Linux does aggressive collision checking by its own too.

- They assist in the updating of other machines' ARP tables. Given Gratuitous ARP, in the network capture there is nobody doing traditional ARPing for the new device. They already have the information. The crazy public-talking did the trick.

- They inform switches of the MAC address of the machine on a given switch port. My LAN-topology is trivial enough for my switches to know which port is hosting which MAC-addresses, but when eyeballing the network capture, sometimes switches need to ARP for a host to update their MAC-cache.

- Every time an IP interface or link goes up, the driver for that interface will typically send a gratuitous ARP to preload the ARP tables of all other local hosts. This sums up reasons 1-3.
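
To make the description above concrete, here is a sketch of what a gratuitous ARP request looks like as raw bytes. Nothing is sent to any network, the function only builds the 42-byte Ethernet frame. Setting the target hardware address to all zeros is my assumption for the illustration, and the MAC and IP used in the example are made up:

```python
import socket
import struct

BROADCAST_MAC = bytes.fromhex("ffffffffffff")


def mac_to_bytes(mac):
    """Convert 'aa:bb:cc:dd:ee:ff' into 6 raw bytes."""
    return bytes.fromhex(mac.replace(":", ""))


def gratuitous_arp_request(sender_mac, sender_ip):
    """Build an Ethernet frame carrying a gratuitous ARP request."""
    mac = mac_to_bytes(sender_mac)
    ip = socket.inet_aton(sender_ip)
    # Ethernet header: broadcast destination, own source, EtherType 0x0806 (ARP)
    ethernet = BROADCAST_MAC + mac + struct.pack("!H", 0x0806)
    # ARP header: Ethernet (1), IPv4 (0x0800), address lengths 6 and 4, opcode 1 (request)
    arp = struct.pack("!HHBBH", 1, 0x0800, 6, 4, 1)
    arp += mac + ip        # sender hardware and protocol address
    arp += bytes(6) + ip   # target: zeroed MAC (assumption) and the SAME IP as the sender
    return ethernet + arp


if __name__ == "__main__":
    frame = gratuitous_arp_request("54:42:49:00:00:01", "192.168.1.50")
    print(len(frame), frame.hex())
```

The thing to notice is the sender and target IP being identical. That is the "asking questions from itself" part.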

How can you watch ARPs in a network?

Simple: run arpwatch in your Linux-router.

Nice people at Lawrence Berkeley National Laboratory (LBNL) in Berkeley, California have written a piece of software and are publishing it (among others) at https://ee.lbl.gov/. This ancient, but maintained, daemon has been packaged into many Linux-distros since dawn of time (or Linux, pick the one which suits you).

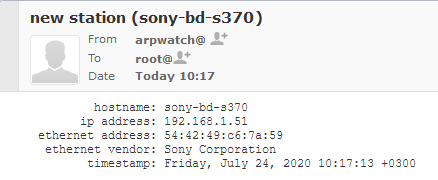

As already established, all devices will ARP on boot. They will ARP also later during normal operations, but that's beside the point. All a device needs to do is to ARP once and its existence is revealed. When the daemon sees a previously unknown device in your network, it will emit a notification in the form of an email. Example:

Here, my router running arpwatch saw a Sony Blu-ray player BDP-S370. The ethernet address contains the 24-bit OUI-part of 54:42:49 and remaining 24-bits of a 48-bit MAC will identify the device. Any new devices are recorded into a time-stamped database and no other notifications will be made for that device.

Having the information logged into a system log and receiving the notification enables me to ignore or investigate the device. Devices I know can be ignored, but anything suspicious I'll always track.

IPv6 and ARP

Waitaminute! IPv6 doesn't do ARP, it does Neighbor Discovery Protocol (NDP).

True. Any practical implementation does use dual-stack IPv4 and IPv6 making ARP still a viable option for tracking MAC-addresses. In case you use a pure-IPv6 -network, then go for addrwatch https://github.com/fln/addrwatch. It will support both ARP and NDP in same tool. There are some shortcomings in the reporting side, but maybe I should take some time to tinker with this and create a patch and a pull-request to the author.

Avoiding ARP completely?

Entirely possible. All a stealth device needs to do is to piggy-back an existing device's MAC-address in the same wire (or wireless) and impersonate that device to remain hidden-in-plain-sight. ARP-watching is not foolproof.

Fedora updated arpwatch 3.1 RPM

All these years passed and nobody at Fedora / Red Hat did anything to arpwatch.

Three big problems:

- No proper support for /etc/sysconfig/ in systemd-service.

- Completely outdated list of Organizationally Unique Identifiers (OUIs) used as Ethernet manufacturers list, displaying as unknown anything not 10 years old.

- Packaged version was 2.1 from year 2006. Latest is 3.1 from April 2020.

Here you go. Now there is an updated version available, Bug 1857980 - Update arpwatch into latest upstream contains all the new changes, fixes and latest upstream version.

Given systemd, for running arpwatch my accumulated command-line seems to be:

/usr/sbin/arpwatch -F -w 'root (Arpwatch)' -Z -i eth0

That will target only my own LAN, both wired and wireless.

Finally

Happy ARPing!

openSUSE Leap 15.2 in-place upgrade

Sunday, July 12. 2020

Most operating systems have a mechanism to upgrade the existing version into a newer one. In most cases even thinking about upgrading without a fresh install-as-new makes me puke. The upgrade process is always complex and missing something while at it is more than likely to happen. These misses typically aren't too fatal, but may make your system emit weird messages while running or leave weird files into weird subdirectories. I run my systems clean and neat, so no leftovers for me, thanks.

There are two operating systems, which are exceptions to this rule of mine:

Windows 10 is capable of upgrading itself into a newer build successfully (upgrading a Windows 7 or 8 into a 10 is crap, do that to get your license transferred, then do a fresh install) and openSUSE. Upgrading a macOS is kinda working. It does leave weird files and weird subdirectories, but the resulting upgraded OS is stable. Linuxes other than openSUSE are simply incapable of doing a good enough job of upgrading and I wouldn't recommend doing that. They'll leave turd, residue and junk behind from the previous install and the only reasonable way is taking backups and doing a fresh install. openSUSE engineers seem to have mastered the skill of upgrade to my satisfaction, so that gets my thumbs up.

As openSUSE Leap 15.2 saw daylight on 2nd July, I felt the urge to update my MacBook Pro into it. Some stories about my install of 15.1 are available here and here.

The system upgrade of an openSUSE is well documented. See SDB:System upgrade for details.

To assess what needs to change, run zypper repos --uri and see the list of your current RPM-repositories. Pretty much everything you see in the list will have an URL with a version number in it. If it doesn't, good luck! It may or may not work, but you don't know beforehand. My repo list has something like this in it:

# | Alias | Name

---+---------------------------+-----------------------------------

2 | google-chrome | google-chrome

3 | home_Sauerland | Sauerland's Home Project (openSUSE

4 | openSUSE_Leap_15.1 | Mozilla Firefox

5 | packman | packman

6 | repo-debug | Debug Repository

7 | repo-debug-non-oss | Debug Repository (Non-OSS)

8 | repo-debug-update | Update Repository (Debug)

9 | repo-debug-update-non-oss | Update Repository (Debug, Non-OSS)

10 | repo-non-oss | Non-OSS Repository

11 | repo-oss | Main Repository

12 | repo-source | Source Repository

13 | repo-source-non-oss | Source Repository (Non-OSS)

14 | repo-update | Main Update Repository

15 | repo-update-non-oss | Update Repository (Non-Oss)

What I always do, is a backup of the repo-configurations. Commands like this run as root will do the trick and create a file repos-15.1-backup.tar into /etc/zypp/repos.d/:

# cd /etc/zypp/repos.d/

# tar cf repos-15.1-backup.tar *.repo

Next, upgrade versions in the static URLs with a carefully crafted sed-run:

# sed -i 's/15\.1/15.2/g' /etc/zypp/repos.d/*.repo
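
A word of caution on the pattern: in a regular expression an unescaped dot matches any character, so a careless pattern can also hit strings that merely look like the version number. A small sketch of a safer replace, with the function name being my own and the version numbers as defaults:

```python
import re


def bump_release(text, old="15.1", new="15.2"):
    """Replace the literal old version string; re.escape() keeps the dot literal."""
    pattern = re.compile(re.escape(old))
    # A function as replacement avoids any backslash interpretation in 'new'.
    return pattern.sub(lambda _match: new, text)
```

With the dot escaped, a string like 15x1 is left alone, while an unescaped 15.1 pattern would happily rewrite it.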

A non-static .repo-file (example: /etc/zypp/repos.d/repo-oss.repo) will contain something like this:

[repo-oss]

name=Main Repository

enabled=1

autorefresh=1

baseurl=https://download.opensuse.org/distribution/leap/$releasever/repo/oss/

path=/

type=rpm-md

keeppackages=0

Notice the variable $releasever. No amount of editing or running sed will change that. Luckily there is an easier way. Run zypper with an argument of --releasever 15.2 to override the value of the variable. More about repository variables like $releasever is in the documentation at https://doc.opensuse.org/projects/libzypp/HEAD/zypp-repovars.html. zypper arguments are in the man page at https://en.opensuse.org/SDB:Zypper_manual_(plain).
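
Conceptually the override is a plain variable substitution into the URL. This sketch only illustrates the idea with Python's string.Template. It is not how libzypp implements it, and the real thing supports more syntax than this:

```python
from string import Template


def expand_repo_url(baseurl, releasever):
    """Substitute $releasever in a baseurl; other variables are left untouched."""
    return Template(baseurl).safe_substitute(releasever=releasever)


if __name__ == "__main__":
    url = "https://download.opensuse.org/distribution/leap/$releasever/repo/oss/"
    print(expand_repo_url(url, "15.2"))
```

The same .repo-file keeps working for the next release, which is the whole point of having the variable there.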

Additional:

As my system is depending on stuff found in Sauerland-repo, I did this to upgrade the entries:

# zypper removerepo home_Sauerland

# zypper addrepo https://download.opensuse.org/repositories/home:Sauerland/openSUSE_Leap_15.2/home:Sauerland.repo

Now all the repo URLs are set. As documented, do some preparations:

# zypper --gpg-auto-import-keys ref

# zypper --releasever 15.2 refresh

Finally going for the actual distro update:

# zypper --releasever 15.2 dist-upgrade --download-in-advance

This will resolve all conflicts between old and new packages. If necessary you'll need to decide a suitable course of action. When all is set, a lengthy download will start. When all the required packages are on your computer, the following prompt will be presented to you:

The following product is going to be upgraded:

openSUSE Leap 15.1 15.1-1 -> 15.2-1

The following 7 packages require a system reboot:

dbus-1 glibc kernel-default-5.3.18-lp152.20.7.1 kernel-firmware libopenssl1_1 systemd udev

2210 packages to upgrade, 14 to downgrade, 169 new, 54 to remove, 2 to change arch.

Overall download size: 1.40 GiB. Already cached: 0 B. After the operation, additional 475.5 MiB will be used.

Note: System reboot required.

Continue? [y/n/v/...? shows all options] (y): y

Going for a Yes will start the actual process:

Loading repository data...

Reading installed packages...

Warning: You are about to do a distribution upgrade with all enabled repositories. Make sure these repositories are compatible before you continue. See 'man zypper' for more information about this command.

Computing distribution upgrade...

When everything is done, following message will be displayed:

Core libraries or services have been updated.

Reboot is required to ensure that your system benefits from these updates.

This is your cue. Reboot the system.

If your upgrade went ok, you'll end up in a successfully upgraded system. To confirm the version of openSUSE, you can as an example query which package owns /etc/motd:

# rpm -q -f /etc/motd

The expected answer should be something like: openSUSE-release-15.2-lp152.575.1.x86_64

Also, second thing you need to verify is the version of Linux kernel your system is running with a:

# cat /proc/version

In openSUSE Leap 15.2 you'll get something like: Linux version 5.3.18-lp152.20.7-default (geeko@buildhost). If your kernel version isn't in the 5.3-series, something went wrong. 15.2 will use that. If you see that version, you're golden.
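
If you have more than one machine to check, the eyeballing can be scripted. A minimal sketch parsing the beginning of /proc/version, with function names of my own invention:

```python
import re


def kernel_series(proc_version):
    """Extract (major, minor) from a /proc/version style string."""
    match = re.match(r"Linux version (\d+)\.(\d+)", proc_version)
    if match is None:
        raise ValueError("unrecognized /proc/version content")
    return int(match.group(1)), int(match.group(2))


def is_leap_15_2_kernel(proc_version):
    """True when the running kernel is in the 5.3-series used by Leap 15.2."""
    return kernel_series(proc_version) == (5, 3)


if __name__ == "__main__":
    sample = "Linux version 5.3.18-lp152.20.7-default (geeko@buildhost)"
    print(kernel_series(sample), is_leap_15_2_kernel(sample))
```

On a real system, feed it the contents of /proc/version instead of the sample string.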

Congratulations! You did it!

Quite fast and painless, wasn't it?

OpenSSH 8.3 client fails with: load pubkey invalid format

Saturday, July 11. 2020

Update 13th Sep 2020:

There is a follow-up article with key format conversion information.

Ever since updating into OpenSSH 8.3, I started getting this on a connection:

$ ssh my-great-linux-server

load pubkey "/home/me/.ssh/id_ecdsa-my-great-linux-server": invalid format

Whaaaat!

Double what on the fact that the connection works. There is no change in the connection besides the warning.

The 8.3 release notes don't mention anything about that (OpenSSH 8.3 released (and ssh-rsa deprecation notice)). My key-pairs have been elliptic for years and this hasn't bothered me. What's going on!?

Adding verbosity to output with a -vvv reveals absolutely nothing:

debug1: Connecting to my-great-linux-server [192.168.244.1] port 22.

debug1: Connection established.

load pubkey "/home/me/.ssh/id_ecdsa-ecdsa-my-great-linux-server": invalid format

debug1: identity file /home/me/.ssh/id_ecdsa-ecdsa-my-great-linux-server type -1

debug1: identity file /home/me/.ssh/id_ecdsa-ecdsa-my-great-linux-server-cert type -1

debug1: Local version string SSH-2.0-OpenSSH_8.3

Poking around, I found this article from Arch Linux forums: [SOLVED] openssh load pubkey "mykeyfilepath": invalid format

Apparently the OpenSSH client now expects both the private AND public keys to be available when connecting. Mathematically the public key isn't a factor. Why would it be needed? I cannot understand the decision to throw a warning about an assumed missing key. I do have the key, but as I won't need it in my client, I don't have it available.

Simply touching an empty file with correct name won't clear the warning. The actual public key of the pair needs to be available to make the ridiculous message go away.
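If the public half isn't at hand, it can be derived from the private key with ssh-keygen -y. Below is a minimal sketch, assuming the client looks for the public key at the private key's path with .pub appended; restore_pubkey is a name invented for this example.

```shell
#!/bin/sh
# Hypothetical helper: recreate a missing or empty <keyfile>.pub.
# $1: path to the private key, e.g. ~/.ssh/id_ecdsa-my-great-linux-server
restore_pubkey() {
    key="$1"
    pub="${key}.pub"

    # Nothing to do when a non-empty public key file already exists.
    [ -s "$pub" ] && return 0

    tmp="${pub}.tmp.$$"
    # ssh-keygen -y reads a private key file and prints the matching
    # public key to stdout. It asks for the passphrase if the key has one.
    if ssh-keygen -y -f "$key" > "$tmp"; then
        mv "$tmp" "$pub"
    else
        # Don't leave an empty file behind; an empty .pub won't help.
        rm -f "$tmp"
        return 1
    fi
}
```

Writing to a temporary file first means a failed run (wrong passphrase, unreadable key) never leaves behind the kind of empty .pub file that doesn't clear the warning anyway.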

A little bit of debugging points to the problem in ssh.c:

check_load(sshkey_load_public(cp, &public, NULL),

filename, "pubkey");

Link: https://github.com/openssh/openssh-portable/blob/V_8_3_P1/ssh.c#L2207

Tracking the change:

$ git checkout V_8_3_P1

$ git log -L 2207,2207:ssh.c

.. points to commit 5467fbcb09528ecdcb914f4f2452216c24796790 (Github link), which was made exactly two years ago, on July 11th 2018, to introduce this check of the loaded public key and to emit a hugely misleading error message on failure.

To repeat:

Connecting to a server requires only the private key. The public key is used only at the server end and is not mathematically required to establish an encrypted connection from a client.

So, this change is nothing new. Still, the actual reason for introducing the check_load() call with a most likely non-existent public key is a mystery. None of the changes made in the mentioned commit or before it explain this addition, nor are there any significant changes in the actual public key loading. A check is added, nothing more.

Fast forward two years to the present day. Now that 8.3 is actually used by a LOT of people, the problem was fixed less than a month ago. Commit c514f3c0522855b4d548286eaa113e209051a6d2 (Github link) fixes the problem by simulating a POSIX ENOENT when the public key is not found in the expected locations. More details about that error are in the errno(7) man page.

Problem solved. All we need to do is wait for this change to propagate to the new clients. Nobody knows how long that will take, as I just updated this.