Formula 1 messing with Apps

Wednesday, October 24. 2018

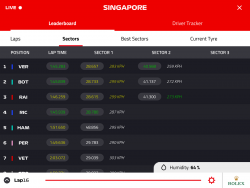

As a Formula 1 fan, I've been using the real-time information feed at https://www.formula1.com/en/f1-live.html while watching F1 Grands Prix pretty much since it was released. If memory serves me correctly, that must have been around the 2008 season. After getting my first iPad (yes, the 1st gen one) in 2011, I went for the beautiful paid app by Soft Pauer, which flooded me with all kinds of information during the race. I assumed that I had all the same information available as the TV commentators.

In 2014 something happened. The app was the same, but the publisher changed to Formula One Digital Media Limited, making it not 3rd party software, but an official F1 product. At the same time, the free timing on the website was removed, causing a lot of commotion; as an example, see Why did FIA dumb-down the Live Timing on F1.com? Also, in a review (Official F1 Live Timing App 2014 reviewed), the paid app was considered very pricey and not worth the money. The years from 2015 onwards made it evident that, in the loving care of the Formula One Group, the app kept improving: they added content, more content, improved the value and finally added TV to it. It was possible to actually watch the race via the app. All this ultimately resulted in a must-have app for every F1 fan.

This year, for the Singapore weekend, they did something immensely stupid.

ARGH!

Did they really think nobody would notice? To put it shortly, their change was a drastic one. I ditched the new timing app after 5 minutes of failed attempts to get anything useful out of it. The people doing the design for that piece of software completely dropped the ball. They simply whipped up "something". Probably in their bright minds it was the same thing as before; it kinda looked the same, right? Obviously, they had zero idea what any form of motor racing is about. What information would be useful to anybody spectating a motor racing event was completely out of their grasp. They just published this change to the App Store and went on happily.

Guess what will happen, when you take an expensive piece of yearly subscribed software and remove all the value from it. You will, but the authors of the software didn't.

IT MAKES PEOPLE MAD!

It didn't take too long (the next race, to be exact) before they silently dropped the timing feature and released the old app as a spin-off titled F1 Live Timing. Obviously, informing any paying customers of such a thing wasn't very high on their list of priorities. In their official statement they went for the official mumbo-jumbo: "The launch of our new F1 app didn't quite live up to your expectations, and live timing didn't deliver the great mobile F1 experience you previously enjoyed." By googling, I found tons of articles like Formula 1 makes old live timing app available again after problems.

As a friendly person, I'll offer my list of things to remember for FOM personnel in charge of those apps:

- Do not annoy paying customers. They won't pay much longer.

- If you do annoy paying customers, make sure they won't be really annoyed. They will for sure stop paying.

- If you make your paying customers mad, have the courtesy of apologizing about that.

- Also, make sure all of your paying customers know about new relevant apps, which are meant to fix your own failures.

- Improve on software design. Hire somebody who actually follows the sport to the design and testing teams.

Thank you.

Facebook security flaw - New accounts created on my email

Thursday, October 18. 2018

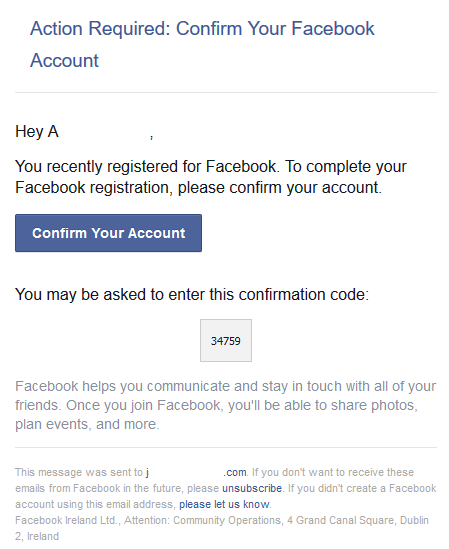

A couple of days ago at 8pm, I got an email from Facebook. Personally, I think Facebook is a criminal organization and I want absolutely nothing to do with the idiots. Given the nature of the email, I started investigating:

Yeah, right. Like I would create an account there. And if I would, I wouldn't use any of my proper emails. Their information gathering from non-users is criminal and they do know pretty much everything there is to know about me. Still, I wouldn't give them anything too easily.

I did confirm the time. I wasn't even using a computer when above mail was received. So, I'm claiming, it wasn't me!

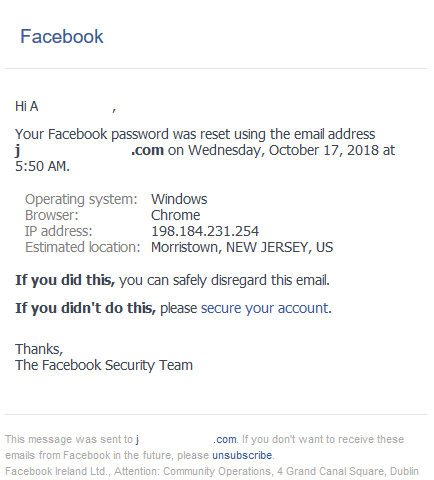

Things started getting really weird, when these arrived:

Somebody from New Jersey, USA had reset the password for this newly created account. How nice of them!

Also, there was some trouble logging in. Well that can happen, when you're hacking.

Given the option in the mail, I did click the "If you didn't do this, please secure your account". The first thing they want from me is my phone number. Again, they need to steal that information from me, I'm not going to GIVE it to them. Without a verified mobile number, the face-jerks let me do nothing. I cannot report the security incident. I cannot request closing the account. There are absolutely no options before entering my phone number. Nobody at super-smart Facebook anticipated things like this happening. I really love their service design there!

Just to make sure, I did check my email logins at my service provider. No suspicious activity there. I did reply to security@facebookmail.com regarding this security incident, but I really don't have my hopes up. If somewhere there's a nipple visible or just a regular business presentation, then the jerks at FB will censor the material in 5 minutes. When something really serious happens, they don't care. Their only motivator is the stock price. Visible nipples can threaten their revenues, security incidents not so much.

My guess is that after their last incident (see: Relax, just 30 million Facebook accounts were compromised after all), there are a lot of people wanting to crack the site. To me it looks like somebody is progressing with the attempt and using my mail account for it.

Cleaning out old PCs

Sunday, October 14. 2018

Now that my new PC is done (see my previous post and Larpdog's Twitch https://www.twitch.tv/videos/319110553):

It's finally time to make some space in my old junk storage. I found two of my previous PCs there, which finally need to go to SER.

When reading this, please understand that I do own other PCs too. Also, I have owned more PCs than the ones I currently run plus these two heading out; it's just that I don't have those anymore to write a blog post about.

New PC - 2018 - Reference

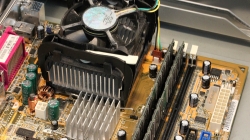

Just to show the difference, I'll post a couple of bad still images from my new PC:

Both pictures are actually crappy for a number of reasons. First, a Noctua NH-D15 CPU-cooler is huge. It weighs 1.3 kg and is 16 cm tall measured from top of CPU to top of Noctua's fans. It takes 16 x 15 x 15 cm space from the PC case, just to make sure there is proper air flow for the heatpipes coming from the CPU. For photography, it means everything else is hidden by it. Ufffff!

Then the Asus motherboard has aluminium shielding covering everything. That's most likely for EMC protection and heat management. In a properly cooled PC-case, black aluminium transfers heat out of the motherboard faster. Also, a Fractal Design PC-case is pitch black. What I have here is a black picture of blackness on black.

Finally, the graphics card is also quite a beast. It is 30 cm in length and over 5 cm thick, yet again covering everything inside the PC that would be worth looking at. So, this 2018 PC is visually quite boring. Maybe that's why people love having some sort of light show inside their transparent case. Ufffff! My case doesn't have any transparent parts in it, no PC-disco for me, thanks.

PC 1 - Pentium 4 from years 2002-2008

This one I used in a couple of hardware configurations for many, many years. Mostly with Windows XP.

After serving me well, I decommissioned this around end of year 2008. There are changed files in early 2009, but it looks like I haven't used this PC since. It has been mostly gathering dust in storage.

CPU

Spec:

- Intel® Pentium® 4 Processor 2.40 GHz, 512K Cache, 533 MHz FSB

- Launch: 2002/Q1

- Cores: 1

- 512KiB Cache

- 2.4 GHz

- 533 MHz FSB

- Socket 478

Details are at Ark Intel.com https://ark.intel.com/products/27438/Intel-Pentium-4-Processor-2-40-GHz-512K-Cache-533-MHz-FSB

It was my last PC not running the 64-bit AMD64 instruction set, at the end of the 32-bit era. Mighty powerful at the time, still less powerful than my iPhone 8 today. It looks like this with Intel's boxed CPU-cooler:

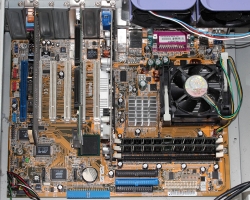

Board

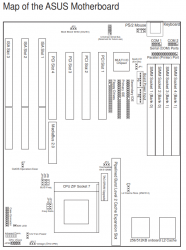

The motherboard for this project is an Asus P4PE:

More details are at Asus' website: https://www.asus.com/supportonly/P4PE-XTE/HelpDesk_Manual/

Graphics card

Given the fact that I never like putting enormous amounts of money into GPUs, this one has a Club-3D Radeon 9800 PRO 128MB DDR GDDR:

The manufacturer's fan on top of the GPU was a piece of junk. I had to replace it in a year or so. Also, note the S-Video output between standard VGA D15 and DVI-D. Before HDMI, hooking up a PC to an everyday TV was tricky. S-Video was one of the supported connectors in TVs having multiple SCART-connectors.

Also, looking at the pics of the CPU-cooler and the graphics card makes me laugh. There is almost no cooling at all in either. Also, the simplicity of the graphics card is something that really sticks out. Modern cooling, especially in graphics cards, looks HUGE!

One notable thing to mention is that this graphics card connects to the MoBo via AGP, or Accelerated Graphics Port, and has DirectX 9.0 hardware acceleration, making it pretty fast (at the time). If you've never heard of AGP, don't worry, it was just an attempt to make GPUs connect to the CPU/RAM-package faster. It was a little bit faster than PCI, but not much. Obviously, it wasn't such a great invention and was quickly surpassed by PCIe, which seems to stand the test of time. For the curious ones, I googled up some specs for the card: https://icecat.biz/en-in/p/club3d/cga-p988tvd/graphics-cards-RADEON+9800+PRO+128MB+DDR-113233.html

PC 2 - Pentium from years 1994-1998

Ok, this baby is an old one! To make it really ancient, it even runs IBM OS/2 as its operating system. Those padawans who don't know what OS/2 is, just head to Wikipedia at https://en.wikipedia.org/wiki/OS/2. During its active years in service, I recall upgrading the CPU once, doubling the RAM once and swapping the GPU-card for a faster one. This is the oldest hardware I have, and I didn't create any records at the time. For anything newer, I have proper records of the history in my wiki.

CPU

Spec:

- Intel® Pentium® Processor 150 MHz, 60 MHz FSB

- Launch: 1996/01

- Cores: 1

- 8 + 8 KiB Cache

- 150 MHz

- 60 MHz FSB

- Socket 7

Details are at Ark Intel.com https://ark.intel.com/products/49958/Intel-Pentium-Processor-150-MHz-60-MHz-FSB

This old puppy has one distinguishing feature: it is Spectre/Meltdown-proof! As it executes instructions strictly in order, without the speculative execution those attacks exploit, it cannot be fooled. Actually, there is very little in it to make it run faster. It is a product of an era when everything was made faster by adding megahertz (note: not gigahertz) to the base frequency.

The installed CPU with Intel's boxed cooler looks like this:

Please notice how the heatsink and the fan on top of it are almost 20 mm high!

Also, don't confuse this Pentium with any of the modern day CPUs Intel calls Pentium. This one was really one of the first Pentiums ever manufactured. Initially this had a slower Pentium in it (I think 90 MHz); getting an upgrade was simply inexpensive at the time.

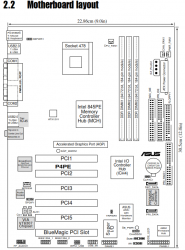

Board

The motherboard is an Asus P/I-P55T2P4. Some specs of it can be found at: https://www.asus.com/supportonly/PI-P55T2P4/HelpDesk_CPU

Note how this is clearly a PCI-era board, but it still has three ISA-slots in it. And please, don't confuse PCI with the modern PCI Express. That's probably why the word "conventional" is used in the Wikipedia article on PCI: https://en.wikipedia.org/wiki/Conventional_PCI

GPU-card

Oh, this is a trip down memory lane. The card is an S3 Trio64V+. Some information about this relic is at https://en.wikipedia.org/wiki/S3_Trio and http://www.vgamuseum.info/index.php/cpu/item/359-s3-trio64v.

Note how I have Scotch Tape on two holes of the mounting bracket. The reason is simple: back in the 1990s we didn't know how air in a PC case should flow and we had it wrong. Sucking air through graphics cards (or any other necessary components) is not smart and that's why it's done differently today.

This particular card first appeared in 1995 and I used it for a couple of years around that time. S3 was a really successful graphics company and their products were really good at the time. Also, at the time companies did design and manufacture their own cards. Today GPU makers just release specs and reference designs for the actual card manufacturers to do the heavy lifting. Also, for old geezers like me, it is soooo weird to see a graphics card running on just-the-regular PCI.

For a brief history of GPUs in the 90s, read something like From Voodoo to GeForce: The Awesome History of 3D Graphics @ PC Gamer. Just like CPUs, GPUs have also taken giant leaps during the past 20 years.

If you've never heard of a company called S3 Graphics, go educate yourself at https://en.wikipedia.org/wiki/S3_Graphics. Brief highlights: January 1989, the founding of the company; November 2000, the point where S3 had officially been outrun by the competition and changed its name to SONICblue; and finally March 2003, when it filed for Chapter 11 bankruptcy. They had their moment, but given the fierce competition from Nvidia, ATI, Matrox and many others, they just couldn't keep up with the technological advances fast enough to be able to offer interesting products to customers. Today, what remains of S3 is part of HTC, the Taiwanese cellphone manufacturer.

Ok, the junk's gone - What then?

Yes, the cleaning up doesn't end here. I still have ~1000 floppy disks in storage. Now that the PCs having a 5.25" or 3.5" drive are gone, I'll keep cleaning some more. Maybe I should take a peek into some of those floppies to see if there is anything valuable on them.

Also, I have the images of the OS/2 hard drives. Next I need to figure how to read them.

Twitch'ing with Larpdog - Assembly of my new PC

Wednesday, October 3. 2018

Next Saturday, on 6th October, I'll be joining (again) Larpdog on his Twitch-channel https://www.twitch.tv/larpdog to assemble my new PC. The stream language will be Finnish and we will start at 17:00 Finnish summer time, making it 14:00 UTC.

This is something similar we did last year (see the post about it).

Unfortunately Twitch-videos are kept only for 14 days, so that recording link has gone sour.

Update:

Link to the stream is https://www.twitch.tv/videos/319110553

RouterPing - Gathering ICMPv4 statistics about a router

Monday, September 24. 2018

When talking about the wonderful world of computers, networking and all the crap we enjoy tinkering with, sometimes, somewhere, things don't go as planned. Thinking about this a bit closer, I think that's the default. Okok. The environment is distributed and in a distributed environment, there are tons of really nice places that can be misconfigured or flaky.

My fiber connection (see the details from an old post) started acting weird. Sometimes it failed to transmit anything in or out. Sometimes it failed to get a DHCP-lease. The problem wasn't that frequent, once or twice a month for 10-15 minutes. As the incident happened at any random moment, I didn't pay much attention to it. Then my neighbor asked if my fiber was flaky, because his was. Anyway, he called the ISP helpdesk and a guy came to evaluate if everything was ok. The cable-guy said everything was "ok", but he "cleaned up" something. A mighty obscure description of the problem and the fix, right?

Since the trust was gone, I started thinking: why isn't there a piece of software you can run that would actually gather statistics on whether your connection works and how well the ISP's router responds? So I wrote one. The project is at https://github.com/HQJaTu/RouterPing. Go see it!

How to use the precious router_ping.py

Oh, that's easy!

First you need to establish a point in the network you want to measure. My strong suggestion is to measure your ISP's router. That will give you an idea if your connection works. You can also measure a point away from you, like Google or similar. That would indicate if your connection to a distant point works. Sometimes there has been a flaw in networking preventing access to a specific critical resource.

On a Linux prompt, you can run ip route show. It will display your default gateway IP-address. It will say something like:

default via 62.0.0.1 dev enp1s0

That's the network gateway your ISP suggests you use to make sure your Internet traffic gets routed to reach distant worlds. And that's precisely the point you want to measure. If you cannot reach the nearest point in the cold Internet-world, you cannot reach anything.
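As a minimal sketch (not part of RouterPing itself), picking the gateway address out of that output can be done with a few lines of Python. The function name `default_gateway` and the second line of the sample output are made up for illustration.

```python
import re
from typing import Optional


def default_gateway(route_output: str) -> Optional[str]:
    """Return the gateway IP from `ip route show` output, or None if absent."""
    for line in route_output.splitlines():
        # The default route line looks like: "default via 62.0.0.1 dev enp1s0"
        match = re.match(r"default via (\S+)", line.strip())
        if match:
            return match.group(1)
    return None


# First line is the example from the text; the second line is invented filler.
sample = (
    "default via 62.0.0.1 dev enp1s0\n"
    "62.0.0.0/24 dev enp1s0 proto kernel scope link src 62.0.0.42\n"
)
print(default_gateway(sample))
```

On a real machine, the input string could come from `subprocess.run(["ip", "route", "show"], capture_output=True, text=True).stdout`.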

When you know what to measure, just run the tool.

Running the precious router_ping.py

Run the tool (as root):

./router_ping.py <remote IP>

To get help about available options --help is a good option:

optional arguments:

-h, --help  show this help message and exit

-i INTERVAL, --interval INTERVAL  Interval of pinging

-f LOGFILE, --log-file LOGFILE  Log file to log into

-d, --daemon  Fork into background as a daemon

-p PIDFILE, --pid-file PIDFILE  Pidfile of the process

-t MAILTO, --mail-to MAILTO  On midnight rotation, send the old log to this email address.

A smart suggestion is to store the precious measurements into a logfile. Also running the thing as a daemon would be smart, there is an option for it. Also, the default interval to send ICMP-requests is 10 seconds. Go knock yourself out to use whatever value (in seconds) is more appropriate for your measurements.

If you're creating a logfile, it will be auto-rotated at midnight of your computer's time. That way you'll get a bunch of files instead of one huge one. The out-rotated file will be suffixed with a date-extension describing the particular day the log is for. You can also auto-email the out-rotated logfile to any interested recipient, that's what the --mail-to option is for.
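For the curious, Python's standard library can do this kind of midnight rotation on its own. The sketch below shows the general technique with `TimedRotatingFileHandler`; whether router_ping.py is implemented exactly this way is an assumption, and the logger name and `make_logger` helper are hypothetical. The format string also leaves out the timezone name seen in the real log lines.

```python
import logging
from logging.handlers import TimedRotatingFileHandler


def make_logger(path: str) -> logging.Logger:
    """Create a logger writing to `path`, rotated at local midnight."""
    logger = logging.getLogger("router_ping_sketch")  # hypothetical name
    logger.setLevel(logging.INFO)
    # when="midnight" rolls the file over once a day; the rotated file
    # gets a date suffix (%Y-%m-%d by default) appended to its name.
    handler = TimedRotatingFileHandler(path, when="midnight", backupCount=30)
    handler.setFormatter(
        logging.Formatter("%(asctime)s - %(levelname)s - %(message)s")
    )
    logger.addHandler(handler)
    return logger
```

Calling `make_logger("router_ping.log").info("62.0.0.1 is up, RTT 0.000695")` would then append a timestamped INFO line to the file. Emailing the rotated file is not covered here.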

Analysing the logs of the precious router_ping.py

Outputted logfile will look like this:

2018-09-24 09:09:25,105 EEST - INFO - 62.0.0.1 is up, RTT 0.000695

2018-09-24 09:09:35,105 EEST - INFO - 62.0.0.1 is up, RTT 0.000767

2018-09-24 09:09:45,105 EEST - INFO - 62.0.0.1 is up, RTT 0.000931

The above log excerpt should be pretty self-explanatory. There is a point in time when an ICMP-request to the ISP-router was made and the response time (in seconds) was measured.

When something goes south, the log will indicate the following:

2018-09-17 15:01:42,264 EEST - ERROR - 62.0.0.1 is down

The log-entry type is ERROR instead of INFO and the log will clearly indicate the failure. Obviously, when no ICMP-reply arrives, there is no round-trip time to measure. The failure is defined as "we waited a while and nobody replied". In this case, my definition of "a while" is one (1) second. Given the typical measurement being around 1 ms, a thousand times that is plenty.
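Once a few days of logs have piled up, a short script can turn them into numbers. This is a sketch, assuming every line follows exactly the two formats shown above; the `summarize` function and its result keys are made up for illustration and are not part of RouterPing.

```python
import re
from statistics import mean

# Matches both "<ip> is up, RTT <seconds>" and "<ip> is down" lines.
LINE_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2}),\d{3} \S+ - "
    r"(?P<level>INFO|ERROR) - (?P<host>\S+) is "
    r"(?:up, RTT (?P<rtt>[\d.]+)|down)$"
)


def summarize(lines):
    """Count replies and failures, and compute RTT statistics in ms."""
    rtts, failures = [], []
    for line in lines:
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip anything that is not a measurement line
        if m.group("rtt") is not None:
            rtts.append(float(m.group("rtt")))
        else:
            failures.append(m.group("ts"))
    return {
        "replies": len(rtts),
        "failures": len(failures),
        "mean_rtt_ms": mean(rtts) * 1000 if rtts else None,
        "max_rtt_ms": max(rtts) * 1000 if rtts else None,
        "failure_times": failures,
    }


# The four log lines quoted in this post.
SAMPLE = [
    "2018-09-24 09:09:25,105 EEST - INFO - 62.0.0.1 is up, RTT 0.000695",
    "2018-09-24 09:09:35,105 EEST - INFO - 62.0.0.1 is up, RTT 0.000767",
    "2018-09-24 09:09:45,105 EEST - INFO - 62.0.0.1 is up, RTT 0.000931",
    "2018-09-17 15:01:42,264 EEST - ERROR - 62.0.0.1 is down",
]
print(summarize(SAMPLE))
```

The list of failure timestamps is the interesting part when talking to an ISP helpdesk: it says exactly when the router stopped answering.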

Finally

Unfortunately ... or fortunately, depending on how you view the case, the cable guy fixed the problem. My system hasn't caught a single failure since. When it happens again, I'll have proof of it.

DocuSign hacked: Fallout of leaked E-mail addresses

Sunday, September 23. 2018

Over a year ago, DocuSign was hacked (again). Initially they denied the entire thing, but eventually they had to come clean about their userbase being leaked. I think their wording was that only email addresses were stolen, but ... given their lack of transparency I wouldn't take their word on that. Read my post about the 2017 incident.

After the May 2017 leak, I've received at least a dozen emails to my DocuSign address. For the jerks pulling the userbase, it surely has been a source of joy, a gift that keeps on giving.

A couple of days ago I got one of these:

Looks like a perfectly valid DocuSign you've-got-mail announcement, except it has a really funny recipient address; DocuSign knows my real name. Also, the link won't land on the DocuSign website. The Sign Invoice link doesn't even have an HTTPS address, which is pretty much mandatory after July 24th 2018, so without a doubt it is a fake. I'm not sure if it's sensible to publish the UID of CRIQQABU2AHOQ0TUYBUD or the code E1ABA59517. Doing that might bite me later, but it's done already.

At the time of writing, GoDaddy had taken the entire target site down. Obviously, some innocent website (most likely a WordPress) got re-purposed to act as a DocuSign "mirror", harvesting data of click-baitable victims and offering them malware and/or junk. GoDaddy is notorious for taking down domains and websites on a hint of a complaint, so I really cannot comprehend why anybody would want to use their services. Given their enormous size, most of their paying customers won't realize that anybody can take any GoDaddy-hosted site down in a jiffy. But that's the way the World works, you harvest money from the unaware.

DocuSign is learning, slowly, but it looks like the direction is correct. Their website (https://www.docusign.com/trust) has the words "Transparency is essential" in it. Yup. That's right. Your mess, own it! This time they actually do own it, they published an alert ALERT:09/19/2018 @ 9.03 AM Pacific Time - New Phishing Campaign Observed Today. That's what you do when somebody pwns you and your entire userbase gets stolen. Good job!

It remains to be seen, if those buggers dare to deny their next leak. So far I'm not trusting those liars, but I'm liking their new approach.

QNAP Stopping Maintenance of TS-419P II (again)

Saturday, August 25. 2018

This is a weird one. At the beginning of this year, Qnap made a choice to EOL my NAS-box. I posted about that at the time. Well, that happens. It's just that my current box works for me and all, so I don't necessarily need a new one.

Without any announcement, I got a firmware upgrade for it! Actually, I got two. Initially I assumed I was hallucinating or something. The second time, I had to check my original blog post to confirm that the EOL really did happen.

Today on their EOL-page (or Product Support Status) https://www.qnap.com/en/product/eol.php they state:

Model: TS-419U II

Hardware Repair or Replacement: Full

OS and Firmware Updates and Maintenance: 2017-12 (QTS 4.3.3)

Technical Support and Security Updates: 2020-12

What the ... happened? Now they're announcing (or NOT announcing, informing) to provide full support until end of year 2020. Well, maybe I should go purchase a new one anyway.

Summer pastime - flying a quadcopter

Sunday, August 12. 2018

The summer here in Finland has been extremely warm. Given that, I've mostly not been inside doing computer-things, but outside doing outdoorsy things. Here we get a couple of good months per year, if we're lucky, so I decided to enjoy them fully.

Besides SUPping around the Lake Saimaa, I got a DJI Phantom 3 quadcopter to test out. At the time of writing, I already returned the loaner.

The thing looks like this, when the controller has my iPad attached to it:

Here is some sample footage:

YouTube link: https://youtu.be/w-ISgv08ad0

Just getting the thing flying is pretty easy, but controlling it in a sensible fashion so that the 4K-camera would actually capture a beautiful video is very hard. The above video is a first run and it has tons of camera operator mistakes in it. For some of my unpublished videos I did 3-4 runs to get it right. Still, flying the thing was tons of fun.

Automating IPMI 2.0 management Let's Encrypt certificate update

Thursday, July 12. 2018

Today, I'm combining two previous posts into a new one. I've written earlier about going fully Let's Encrypt and the problems I have with them. Now that I'm using them, and those certs have a ridiculously short life-span, I need to keep automating all possible updates. That would include the IPMI 2.0 interface on my Supermicro SuperServer.

Since Aten, the manufacturer of the IPMI-chip, chose not to make the upload of a new certificate automateable (is that a word?), I had to improvise something. I chose to emulate a web browser in a simple Python-script, first doing the user login via the HTTP-interface and then uploading the new X.509 certificate and the appropriate private key for it. Finally the IPMI BMC will be rebooted. Now it's automated!

So, the resulting script is at https://gist.github.com/HQJaTu/963db9af49d789d074ab63f52061a951. Go get it!

Parsing multi-part sitemap.xml with Python

Wednesday, July 11. 2018

A perfectly valid sitemap.xml can be split into multiple files. For that, see the specs at https://www.sitemaps.org/protocol.html. This is likely to happen in any content management system. For example, WordPress loves to split your sitemaps into multiple dynamically generated parts to reduce the size of a single XML-download.

It should be an easy task to slurp in the entire sitemap containing all the child-maps, right? I wouldn't be blogging about it if it were that easy.

The best solution I bumped into was by Viktor Petersson and his Python gist. I had to fix his sub-sitemap parsing algorithm first to get the desired result. What he didn't account for was the fact that a sub-sitemap address can have URL-parameters in it. Those dynamically generated ones do sometimes have that.

Go get my version from https://gist.github.com/HQJaTu/cd66cf659b8ee633685b43c5e7e92f05
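To illustrate the idea (this is a sketch, not the code from either gist), one way to avoid guessing from the address is to decide by the XML root element: `sitemapindex` means child sitemaps, `urlset` means actual pages. Then it doesn't matter whether a sub-sitemap address ends in .xml or carries URL-parameters. The `fetch` callable and the example.com addresses below are hypothetical stand-ins for real HTTP downloads.

```python
import xml.etree.ElementTree as ET

NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def parse_sitemap(xml_text):
    """Return ("index", [child sitemap URLs]) or ("urlset", [page URLs])."""
    root = ET.fromstring(xml_text)
    kind = root.tag.rsplit("}", 1)[-1]  # strip the "{namespace}" prefix
    if kind == "sitemapindex":
        locs = root.findall("sm:sitemap/sm:loc", NS)
        return "index", [loc.text.strip() for loc in locs]
    if kind == "urlset":
        locs = root.findall("sm:url/sm:loc", NS)
        return "urlset", [loc.text.strip() for loc in locs]
    raise ValueError(f"not a sitemap document: <{kind}>")


def collect_urls(start_url, fetch):
    """Walk a sitemap and its sub-sitemaps; `fetch` maps a URL to XML text."""
    pages, queue, seen = [], [start_url], set()
    while queue:
        url = queue.pop(0)
        if url in seen:
            continue  # guard against sitemaps referencing each other
        seen.add(url)
        kind, locs = parse_sitemap(fetch(url))
        if kind == "index":
            queue.extend(locs)
        else:
            pages.extend(locs)
    return pages


# In-memory stand-in for a website; note the URL-parameters in the child map.
FAKE_SITE = {
    "https://example.com/sitemap.xml": (
        '<sitemapindex xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<sitemap><loc>https://example.com/index.php?sitemap=posts&amp;page=1</loc></sitemap>"
        "</sitemapindex>"
    ),
    "https://example.com/index.php?sitemap=posts&page=1": (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.com/a</loc></url>"
        "<url><loc>https://example.com/b</loc></url>"
        "</urlset>"
    ),
}
print(collect_urls("https://example.com/sitemap.xml", FAKE_SITE.__getitem__))
```

With a real site, `fetch` would be replaced by something that downloads the URL and returns the response body as text.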

My thanks go to Mr. Petersson and Mr. Addyman for creating the initial versions.

User experience (or ux) in a nutshell

Monday, July 9. 2018

Two Zoltáns, Gócza and Kollin, are maintaining a superbly good website about UX myths, uxmyths.com.

On the site, they list common misbeliefs people have regarding websites and thoroughly debunk them with references to actual measured information.

Just by reading their list we learn, that

- People don't read the text you post (myth #1)

- Navigation isn't about 3 clicks, it's about usability of the navigation (myth #2)

- People do scroll, on all devices (myth #3)

- Accessibility doesn't make your website look bad and is not difficult (myths #5 and #6)

- Having graphics, photos and icons won't help (myths #7, #8 and #13)

- IT'S ALWAYS ABOUT THE DETAILS! (myth #10)

- More choices make users confused (myths #12 and #23)

- You're nothing like your users and never will be! (myths #14, #24, #29 and #30)

- User experience is about how user feels your site (myth #27)

- Simple is not minimal is not clarity, they are three different things! (myths #25 and #34)

- ... aaaand many more

Go see all the studies, research and details in their site.

And thank you for your work Mr. Gócza and Mr. Kollin. Thanks for sharing with all of us.

Refurbishing APC Replacement Battery Cartridge #7

Sunday, May 20. 2018

Roughly 4 years ago, I blogged about a battery change to my UPS. The post is at APC Smart-UPS battery change. My unit eats APC Replacement Battery Cartridge #7 as a replacement, and they are generally available on the net. The price point is steep, though: such a replacement easily costs 250,- €. Much more, if you're not careful.

A couple of years after publication, Mr. Oliver commented on my post (https://blog.hqcodeshop.fi/archives/195-APC-Smart-UPS-battery-change.html#c2000) about getting a pair of Yuasa NP7-12 batteries. In his comment, he posted a PDF-spec http://www.yuasabatteries.com/pdfs/NP_7_12_DataSheet.pdf. Just by eyeballing the details, it became obvious that there is no way in freezing hell to use that particular battery unit as a replacement.

While I dismissed the suggestion quickly, Mr. Oliver successfully incepted the idea (if you haven't seen Inception, you missed my point there). In life, there are situations where the plan is a crappy one to begin with. On the other hand, sometimes the plan is rock solid, but the implementation falls short. For best results, both a good plan and a good implementation are needed. So, I decided to investigate this battery replacement thingie and come up with a good plan. Initially it was more like a wish, I had no way of knowing how my chips would fall.

The Investigation

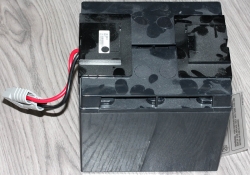

So, when yanked out of the UPS, an APC Replacement Battery Cartridge #7 looks like this:

During the 4 years of running, it gathered some amount of dust. If I would care, I would have cleaned the worn out unit before taking the pics, but ... naah. And if you want to know how to actually yank it out, see my previous post.

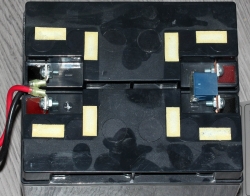

At a glance, the #7 doesn't have any moving parts in it. There is nothing to remove, nothing to un-screw. But a closer inspection reveals some plastic covers just attached to the battery with two-sided tape:

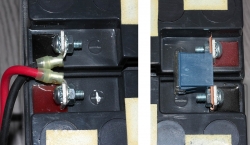

Yes! I'm getting somewhere here. A close-up of the battery connectors:

The battery connectors have holes in them and there is an M6-screw running through them. A 10mm wrench and a PH2 screwdriver will do magic there.

Finally I had all the parts separated:

There was some adhesive tape to make the two batteries stick together. As all the connector bits were removed, I just applied brute force to separate the lead acid batteries from each other.

The Plan

The batteries inside turned out to be GP12170 units by Hitachi Chemical Energy Technology Co. Ltd. Spec is at: http://www.csb-battery.com/english/01_product/02_detail.php?fid=5&pid=13

My simple plan was to:

- Find out if a suitable replacement battery was available. Mr. Oliver suggested that the price range would be £30,-

- Get the replacement batteries

- Apply some adhesive tape and screw the APC-connector bits and their plastic covers back

- Plug the refurbished unit back to my UPS and admire the results (success or failure)

The Implementation

Finding and getting the replacement units

Nope. Just by googling, I didn't find that particular GP 12170 battery anywhere where the shipping costs wouldn't kill me. Lead acid batteries are heavy (remember the lead part there), as in expensive to ship.

- Since asking doesn't hurt, I just popped by my local battery-guy at Akku-Arkka Oy.

- His first question was: "Which lawnmower did you take that from?"

- I was in luck! He had suitable units in stock. For some reason, they are sold as a twin-box:

- Obviously, a twin-box is exactly what I needed for this purpose!

Assembly

At this point, my plan was coming together.

- I just got some two-sided tape, stuck some of that on the side of the battery and stuck the other battery to the tape to form a single unit.

- I screwed the APC-bits back to the connectors. Even the holes were precisely the same size.

- More two-sided tape to the top and battery connectors were nicely covered.

I didn't bother taking any pics of this. My final result looked un-surprisingly like the original APC-unit.

Plugging it in & testing

Since these quality UPS-things have hot-swappable batteries, the UPS-unit had been running my computers all the time since the batteries failed. I removed the old battery-pack and was finally about to test the new one. The obvious risk at this point was that I had made a mistake and my UPS would completely fry because of it.

But no, it didn't happen. Everything worked perfectly! My APC utilities on Linux indicated the following:

# apcaccess

APC : 001,043,1009

DATE : 2018-05-20 13:03:11 +0300

VERSION : 3.14.14 (31 May 2016) redhat

CABLE : USB Cable

DRIVER : USB UPS Driver

UPSMODE : Stand Alone

STARTTIME: 2018-05-20 13:03:07 +0300

MODEL : Smart-UPS 1500

STATUS : ONLINE

LINEV : 234.7 Volts

LOADPCT : 13.6 Percent

BCHARGE : 100.0 Percent

TIMELEFT : 91.0 Minutes
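If you want to keep an eye on the refurbished pack from a script, the output above is easy to parse, since every line is a "KEY : value" pair. A minimal sketch follows; the helper names `parse_apcaccess` and `leading_number` are made up here, and the sample is a subset of the output shown above.

```python
def parse_apcaccess(text):
    """Turn apcaccess output into a dict of {key: value} strings."""
    status = {}
    for line in text.splitlines():
        # Split on the first colon only; values such as dates contain colons.
        key, sep, value = line.partition(":")
        if sep:
            status[key.strip()] = value.strip()
    return status


def leading_number(value):
    """Extract the number from values like '91.0 Minutes' or '100.0 Percent'."""
    return float(value.split()[0])


SAMPLE = """\
DATE : 2018-05-20 13:03:11 +0300
MODEL : Smart-UPS 1500
STATUS : ONLINE
LOADPCT : 13.6 Percent
BCHARGE : 100.0 Percent
TIMELEFT : 91.0 Minutes
"""

status = parse_apcaccess(SAMPLE)
print(status["MODEL"], leading_number(status["BCHARGE"]), leading_number(status["TIMELEFT"]))
```

On a live system the text could come from `subprocess.run(["apcaccess"], capture_output=True, text=True).stdout` instead of the sample string.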

Finally

Looks like all the lead-acid batteries in the world come from Vietnam. See the article Is Vietnam the new China for lead-acid battery manufacturers? about that.

I saved ~150,- € by doing this instead of going for the official unit. Nice!

Back to blogging - back from Finland

Saturday, May 19. 2018

Again, a bit of a pause from blogging. I dragged my ass back to Finland. See my post from last year about moving to Sweden for further info.

I served my contract at King and chose not to continue there. The reasoning was actually very simple: my entire life has always been and is in Finland. Taking a brief side-step and living abroad was fun and all, but quite soon it became obvious that I cannot sustain that for a very long time.

So, I started a new job and am trying to continue my projects here. That does include some hacking and blogging about that.

New toys: iPhone 8

Wednesday, April 25. 2018

Whenever there are new toys, I'm excited! Now that I have a new iPhone 8, there is no other way to phrase it: it IS the same as the 7, which was the same as the 6. It's the observation Woz pointed out when the X and 8 came out:

I’m happy with my iPhone 8 — which is the same as the iPhone 7, which is the same as the iPhone 6, to me.

Last year around this time, I wasn't especially impressed when I got my 7. See and/or read it at /archives/345-New-toys-iPhone-7.html. This year, I'm kinda hoping to still have my 6. The good part is, that I won't have to pay for these toys myself. If I would, I would be really really disappointed.

An end is also a beginning

Tuesday, April 24. 2018

Today, I had my last day at King. Right now I'm toasting this fine drink to my wonderful ex-colleagues.

Next I'm taking a breather and next week starting something new back in Finland!