RFID Mifare Classic "clone"

Saturday, April 21. 2018

Toying around with RFID tags has always been something I wanted to do, but I never had the time. Contactless payment is gaining traction all around the world. The reason is very simple: it is fast and convenient for both the customer and the vendor to just touch'n'go with a credit card or mobile phone at a point of sale.

Credit/debit card payments are based on the EMV (Europay, MasterCard and Visa) standard. See generic EMV info at https://en.wikipedia.org/wiki/EMV. Back in 2011 Visa started driving the contactless standard worldwide, and given the situation today, their efforts paid off. However, my understanding is that at the time of writing, regardless of the number of people attempting it, there are no known vulnerabilities in contactless EMV. Finding one would be sweet, but it would also be extremely hard and time-consuming. So, I decided to go for something easier: MIFARE Classic.

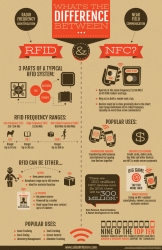

What's this RFID, isn't it NFC?

Short answer: yes and no.

This infographic is courtesy of atlasRFIDstore

http://blog.atlasrfidstore.com/rfid-vs-nfc

MIFARE Classic info

Since this topic isn't especially new, I'm just posting some sites I found very useful when doing RFID hacks:

- Finding the encryption keys:

- Cloning the tag:

- Information about Mifare Classic encryption key hacking:

- ISO/IEC 14443 Type A generations:

Going to eBay for the hardware

I had a real-world RFID tag and wanted to take a peek into it. For that to happen, I needed some hardware.

The choice for a reader/writer is obvious: an ACR122. Info is at: https://www.acs.com.hk/en/products/3/acr122u-usb-nfc-reader/. The thing costs almost nothing and is extremely well supported by all kinds of hacking software.

Going to GitHub for the software

All the software needed in this project can be found on GitHub:

- libusb, https://github.com/libusb/libusb

- Some software wants libusb 0.1, some wants 1.0. I only had 0.1 installed, so I had to compile the latest one too.

- libnfc, https://github.com/nfc-tools/libnfc

- Installed the latest version

- MiFare Classic Universal toolKit, mfcuk, https://github.com/nfc-tools/mfcuk

- Installed, because it has the MIFARE Classic DarkSide Key Recovery Tool. This is an advanced approach to cracking the encryption keys.

- mfoc, https://github.com/nfc-tools/mfoc

- offline nested attack by Nethemba

- This is the one NXP tried to prevent from being publicly released, see info at https://www.secureidnews.com/news-item/nxp-sues-to-prevent-hackers-from-releasing-mifare-flaws/

- Creating your own encryption algorithm and expecting nobody to figure out how it works will only work for a very short period of time. Going to a judge to prevent the information from leaking also works... if you're high on something!

But in real life it never works.

All of the above software was installed with ./configure --prefix=/usr/local/rfid to avoid breaking anything already installed into the system.

Running the tools

Basic information from the tag (the actual tag UID is omitted):

```
# nfc-list
NFC device: ACS / ACR122U PICC Interface opened
1 ISO14443A passive target(s) found:
ISO/IEC 14443A (106 kbps) target:
ATQA (SENS_RES): 00 04
UID (NFCID1): 11 22 33 44
SAK (SEL_RES): 08
```

ATQA 00 04 is listed in ISO/IEC 14443 Type A generations and, together with SAK 08, identifies the tag as MIFARE Classic. Goody! It's weak and hackable.
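The identification step above can be sketched as a small lookup. This is a simplified reading of NXP's MIFARE type identification procedure (application note AN10833), assuming only the SAK byte and the low ATQA byte matter; real tools such as mfoc check more fields. The candidate names for SAK 08 mirror the fingerprint mfoc prints below.

```python
# Simplified sketch of MIFARE type identification from ATQA and SAK.
# Assumption: only a subset of NXP's AN10833 table; real tools check more fields.
SAK_CANDIDATES = {
    0x00: ["MIFARE Ultralight family"],
    0x08: ["MIFARE Classic 1K",
           "MIFARE Plus 2K, Security level 1",
           "SmartMX with MIFARE 1K emulation"],
    0x09: ["MIFARE Mini"],
    0x18: ["MIFARE Classic 4K",
           "MIFARE Plus 4K, Security level 1",
           "SmartMX with MIFARE 4K emulation"],
}

UID_SIZES = {0: "single (4 bytes)", 1: "double (7 bytes)", 2: "triple (10 bytes)"}


def describe_target(atqa: int, sak: int) -> dict:
    """Decode a 16-bit ATQA (e.g. 0x0004) and an 8-bit SAK (e.g. 0x08)."""
    low = atqa & 0xFF
    return {
        # Bits b8..b7 of the low ATQA byte encode the UID size.
        "uid_size": UID_SIZES.get((low >> 6) & 0x03, "reserved"),
        # Bits b5..b1 of the low ATQA byte flag bit frame anticollision.
        "bit_frame_anticollision": bool(low & 0x1F),
        "candidates": SAK_CANDIDATES.get(sak, ["unknown / not in this sketch"]),
    }


info = describe_target(0x0004, 0x08)
print(info["uid_size"])       # single (4 bytes)
print(info["candidates"][0])  # MIFARE Classic 1K
```

The SAK alone cannot tell a genuine MIFARE Classic 1K from a MIFARE Plus in Security level 1, which is why the result is a list of candidates rather than a single answer.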

Just running mfoc to see if a slow attack can proceed:

```
# mfoc -O card.dmp
Found Mifare Classic 1k tag
ISO/IEC 14443A (106 kbps) target:
ATQA (SENS_RES): 00 04
UID size: single
bit frame anticollision supported
UID (NFCID1): 11 22 33 44
SAK (SEL_RES): 08
Not compliant with ISO/IEC 14443-4
Not compliant with ISO/IEC 18092

Fingerprinting based on MIFARE type Identification Procedure:
MIFARE Classic 1K
MIFARE Plus (4 Byte UID or 4 Byte RID) 2K, Security level 1
* SmartMX with MIFARE 1K emulation
```

Very soon, it reports:

```
We have all sectors encrypted with the default keys..
Auth with all sectors succeeded, dumping keys to a file!
```

WHAAT! The card wasn't protected at all: every sector used the well-known default keys!

A closer look at card.dmp revealed that there was no payload in the 1024 bytes this particular MIFARE Classic stores.
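Checking a dump for payload can be automated. A MIFARE Classic 1K has 16 sectors of 4 blocks of 16 bytes; the last block of each sector is the trailer (Key A, 4 access-condition bytes, Key B), and block 0 is the manufacturer block holding the UID. The sketch below assumes the raw 1024-byte layout mfoc writes with `-O`, with recovered keys filled into the trailers, and treats an all-zero data block as empty. The default-key set is a small sample; mfoc tries a longer built-in list.

```python
from __future__ import annotations

from dataclasses import dataclass
from pathlib import Path

BLOCK_SIZE = 16
BLOCKS_PER_SECTOR = 4
SECTORS_1K = 16
DUMP_SIZE_1K = BLOCK_SIZE * BLOCKS_PER_SECTOR * SECTORS_1K  # 1024 bytes

# A few well-known keys; FFFFFFFFFFFF is the usual factory transport key.
DEFAULT_KEYS = {bytes.fromhex(k) for k in
                ("ffffffffffff", "a0a1a2a3a4a5", "d3f7d3f7d3f7", "000000000000")}


@dataclass
class Sector:
    index: int
    key_a: bytes
    access_bits: bytes
    key_b: bytes
    data_blocks: list[bytes]


def parse_dump(dump: bytes) -> list[Sector]:
    """Split a raw MIFARE Classic 1K dump into sectors."""
    if len(dump) != DUMP_SIZE_1K:
        raise ValueError(f"expected {DUMP_SIZE_1K} bytes, got {len(dump)}")
    sectors = []
    for s in range(SECTORS_1K):
        base = s * BLOCKS_PER_SECTOR * BLOCK_SIZE
        blocks = [dump[base + b * BLOCK_SIZE: base + (b + 1) * BLOCK_SIZE]
                  for b in range(BLOCKS_PER_SECTOR)]
        trailer = blocks[-1]            # Key A | access bits | Key B
        data = blocks[:-1]
        if s == 0:
            data = data[1:]             # block 0 is the manufacturer block, not payload
        sectors.append(Sector(s, trailer[0:6], trailer[6:10], trailer[10:16], data))
    return sectors


def has_payload(sectors: list[Sector]) -> bool:
    """True if any data block contains a non-zero byte."""
    return any(any(block) for sec in sectors for block in sec.data_blocks)


def all_default_keys(sectors: list[Sector]) -> bool:
    return all(s.key_a in DEFAULT_KEYS and s.key_b in DEFAULT_KEYS for s in sectors)


def summarize(path: str) -> dict:
    sectors = parse_dump(Path(path).read_bytes())
    return {"payload": has_payload(sectors), "default_keys_only": all_default_keys(sectors)}
```

Usage would be something like `summarize("card.dmp")`; for a card like this one, both a missing payload and default-only keys would show up.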

Since the card doesn't have any payload, the application has to work on the childish assumption that the UID of an RFID tag cannot be changed. Nice! Because it can be set to whatever I want it to be! Like this:

```
# nfc-mfsetuid 11223344
NFC reader: ACS / ACR122U PICC Interface opened
Found tag with
UID: 01234567
ATQA: 0004
SAK: 08
```

My blank UID-writable tag had a UID of 01 02 03 04, but I changed it into something else. Note: changing the UID is not allowed by the specs, but with very cheap eBay hardware it obviously can be done! Nice.
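What a UID-writable ("magic") card lets you overwrite is block 0. As commonly described for 4-byte UIDs, it holds the UID, then a Block Check Character (BCC, the XOR of the four UID bytes), then SAK and ATQA, then manufacturer data. The sketch below builds such a block; the SAK/ATQA defaults and the zeroed manufacturer bytes are assumptions for illustration, not what nfc-mfsetuid necessarily writes. Getting the BCC wrong is reportedly a common way to make such a card unreadable.

```python
from functools import reduce
from operator import xor


def bcc(uid: bytes) -> int:
    """Block Check Character: XOR of the four UID bytes."""
    if len(uid) != 4:
        raise ValueError("this sketch only handles 4-byte (single size) UIDs")
    return reduce(xor, uid)


def build_block0(uid: bytes, sak: int = 0x08, atqa: int = 0x0004,
                 manufacturer: bytes = bytes(8)) -> bytes:
    """Assemble a 16-byte block 0: UID | BCC | SAK | ATQA (LSB first) | manufacturer data.

    The SAK/ATQA defaults match a MIFARE Classic 1K; the manufacturer bytes
    are a placeholder and differ between real cards.
    """
    if len(manufacturer) != 8:
        raise ValueError("manufacturer data must be 8 bytes")
    return uid + bytes([bcc(uid), sak]) + atqa.to_bytes(2, "little") + manufacturer


print(build_block0(bytes.fromhex("11223344")).hex())
# 11223344440804000000000000000000
```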

To verify my hack:

I went and tried my clone on the real system, and it worked. Also, I informed the owners that their security is ... well ... not secure. They shouldn't use UIDs as the only authentication mechanism. It's only 4 bytes, and anybody in the world can use that 4-byte password. Using an encrypted payload would make more sense, if MIFARE Classic didn't have a major security flaw in its proprietary Crypto-1 cipher.

This was one of the easiest hacks I've completed for years.

iPhone Mobile Profile for a new CA root certificate - Case CAcert.org

Friday, April 20. 2018

A year ago, I posted about the CAcert root certificate being re-hashed with SHA-256 to comply with modern requirements. The obvious problem is that it isn't especially easy to install your own certificates (or the new CAcert root) on a phone anymore.

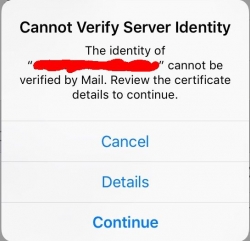

When you try to access your mail server (or any other resource via HTTPS), the result will be something like this:

Not very cool: you get the "Cannot Verify Server Identity" error. This is especially bad, because in modern iOS you really don't have a clue how to get the new root cert installed and trusted. No worries! I can describe the generic process here.

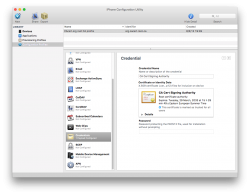

Apple Configurator 2

Get it from https://itunes.apple.com/us/app/apple-configurator-2/id1037126344 and install it on your Mac. It doesn't cost anything, but it will help you a lot!

If you dream of running it on Windows/Linux/BSD, just briefly visit your nearest Mac store and, with your newly purchased Mac, start over from the part "Get it from..."

Root certificate to be installed

Get the root certificate you want to distribute as a trusted root CA. With Apple Configurator 2, create a profile containing only one payload: the certificate in question.

This is what it would look like:

When that mobile profile is exported from Apple Configurator 2, you will get an unsigned .mobileconfig file. That will work, but the installer will complain about the profile being unsigned. If you can live with an extra notice, just go to the next step. If you cannot, get a real code-signing certificate and sign your profile with it.
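For those without a Mac, a profile with a single root-certificate payload is just a property list, so it can also be generated by script. This is a minimal sketch using Python's standard `plistlib`, based on the keys documented in Apple's Configuration Profile Reference (`Configuration` as the outer type, `com.apple.security.root` for the certificate payload). The identifier `fi.example.cacert` is a hypothetical placeholder, and the output is unsigned, just like the Configurator export.

```python
import plistlib
import ssl
import uuid


def build_root_ca_profile(cert_pem: str, display_name: str, identifier: str) -> bytes:
    """Return an unsigned .mobileconfig (XML plist) containing one root CA payload."""
    cert_der = ssl.PEM_cert_to_DER_cert(cert_pem)   # payload carries the raw certificate
    cert_payload = {
        "PayloadType": "com.apple.security.root",
        "PayloadVersion": 1,
        "PayloadIdentifier": f"{identifier}.cert",
        "PayloadUUID": str(uuid.uuid4()).upper(),
        "PayloadDisplayName": display_name,
        "PayloadContent": cert_der,                   # bytes are written as <data>
    }
    profile = {
        "PayloadType": "Configuration",
        "PayloadVersion": 1,
        "PayloadIdentifier": identifier,
        "PayloadUUID": str(uuid.uuid4()).upper(),
        "PayloadDisplayName": display_name,
        "PayloadContent": [cert_payload],
    }
    return plistlib.dumps(profile)


# Hypothetical usage:
# with open("root.crt") as f:
#     data = build_root_ca_profile(f.read(), "CAcert.org root CA", "fi.example.cacert")
# with open("CAcert.mobileconfig", "wb") as out:
#     out.write(data)
```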

Publishing your .mobileconfig from a web server

Your precious .mobileconfig file doesn't just automatically fly into your iOS device. You need to do some heavy lifting first.

Place the .mobileconfig file as a static resource on your favorite web server, one that is reachable from your iOS device and that you fully control, and make it serve the file with the content type application/x-apple-aspen-config.

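On Apache:

```
AddType application/x-apple-aspen-config .mobileconfig
```

On Nginx:

```
types {
    application/x-apple-aspen-config mobileconfig;
}
```

Note that in Nginx a types block replaces the inherited MIME type map for that context, so it's best placed in a location that serves only the profile.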

Testing the content-type setting with curl:

```
# curl --verbose "https:...."
> User-Agent: curl/7.32.0
>
< HTTP/1.1 200 OK
< Content-Type: application/x-apple-aspen-config
```
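The same check can be scripted, for example to run after a web server config change. This is a minimal sketch with Python's standard `urllib`; it assumes the server answers HEAD requests with the same headers as GET, and any URL you pass in is your own (the one above is elided).

```python
import urllib.request

EXPECTED_TYPE = "application/x-apple-aspen-config"


def content_type_ok(header_value) -> bool:
    """True if a Content-Type header value names the profile media type."""
    if not header_value:
        return False
    media_type = header_value.split(";", 1)[0].strip().lower()  # drop parameters like charset
    return media_type == EXPECTED_TYPE


def check_profile_url(url: str, timeout: float = 10.0) -> bool:
    """Send a HEAD request and check the Content-Type the server returns."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return content_type_ok(response.headers.get("Content-Type"))
```

Splitting off parameters matters because some servers append `; charset=...` to every response, which Safari should still accept as the profile type.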

Install the profile into your iOS-gadget

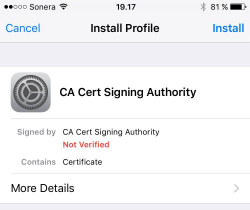

That's simple: just whip up Mobile Safari and surf to the URL. Given the correctly set content type, it will launch the profile installer:

During the process you will need to punch in your PIN code (if you're using one on your device). There are way too many confirmations asking whether you really, really, for sure want to install that particular profile. The questions are there for a very good reason. A mobile profile can contain a combination of settings that will eventually either leave you powerless to control your own device, or allow remote control of your very own device. Or both. So, be very careful when installing mobile profiles!

Finally

Now you have your new root certificate installed and trusted. Go test it!

For those who are very brave:

My recommendation is not to do this. Do not trust me or my published files!

I have published my own .mobileconfig into the web server of this blog. The address for the profile is:

https://blog.hqcodeshop.fi/CAcert/CAcert.org%20root%20CA%20profile.mobileconfig

I'll repeat: That is there for your reference only. Do not trust me for such a security-sensitive file.

DynDNS updates to your Cloud DNS

Sunday, April 15. 2018

People running servers at home usually get dynamic IP addresses. Most ISPs have a no-servers clause in their terms of contract, but they don't really enforce the rule. If you play a multiplayer online game with voice chat enabled, you're kind of running a server already, so what counts as a server is very difficult to define.

Sometimes dynamic IP addresses do what dynamic things do: they change. People have taken a number of different approaches to this problem. For example, I've run a DHIS server (for details, see: https://www.dhis.org/) and its client counterpart to make sure my IP address is properly updated if/when it changes. Then there are services like Dyn.com or No-IP that do exactly what I did with free software.

The other day I started thinking:

I'm already using Rackspace Cloud DNS, as it's a free-of-charge service. It's heavily cloud-based, robust and has an amazing API for doing any maintenance or updates. Why would I need to run a server to send obscure UDP packets to keep my DNS up-to-date? Why can't I simply update the DNS record to contain the IP address my server has?

To my surprise, nobody else had thought of that. Or at least I couldn't find any such solution.

A new project was born: Cloud DynDNS!

The Python 3 source code is freely available at https://github.com/HQJaTu/cloud-dyndns, go see it, go fork it!

At this point the project is past prototyping and proof-of-concept. It's kinda alpha-level and it's running on two of my boxes with great success. It needs more tooling around deployment and installation, but all the basic parts are there:

- a command-line utility to manage your DNS

- an expandable library to include any cloud DNS-provider, current version has Rackspace implemented

- systemd service descriptions to update the IP address(es) at server boot; multiple network interfaces/hostnames on the same server are supported
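The core idea (compare the address the server has with what the DNS record says, and call the provider's API only when they differ) can be sketched behind a pluggable provider interface. The class and method names below (`DnsProvider`, `get_a_record`, `set_a_record`, `sync`) are hypothetical and not taken from the cloud-dyndns code; a real provider class would wrap the Rackspace Cloud DNS API, while the in-memory one here only stands in for testing.

```python
from abc import ABC, abstractmethod
import ipaddress
from typing import Optional


class DnsProvider(ABC):
    """Hypothetical provider interface; the real cloud-dyndns classes may differ."""

    @abstractmethod
    def get_a_record(self, hostname: str) -> Optional[str]:
        """Return the current A record value, or None if there is none."""

    @abstractmethod
    def set_a_record(self, hostname: str, address: str) -> None:
        """Create or update the A record."""


class InMemoryProvider(DnsProvider):
    """Stand-in for a real cloud DNS API, handy for testing."""

    def __init__(self) -> None:
        self.records: dict = {}

    def get_a_record(self, hostname: str) -> Optional[str]:
        return self.records.get(hostname)

    def set_a_record(self, hostname: str, address: str) -> None:
        self.records[hostname] = address


def sync(provider: DnsProvider, hostname: str, current_ip: str) -> bool:
    """Update the A record only if it differs. Returns True when an update was sent."""
    address = str(ipaddress.IPv4Address(current_ip))  # raises ValueError on bad input
    if provider.get_a_record(hostname) == address:
        return False
    provider.set_a_record(hostname, address)
    return True
```

Skipping the API call when nothing changed keeps the updater cheap enough to run at every boot or from a timer.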

Any comments/feedback are appreciated. Please file bug reports directly in the GitHub project.

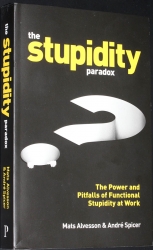

Book club: The Stupidity Paradox - The Power and Pitfalls of Functional Stupidity at Work

Saturday, April 7. 2018

Human stupidity has always intrigued me. It is, after all, the driving force of this world. Most people will disagree with me and say the driving force is money or sex or ... whatever. But take my word for it, it is stupidity! All you have to do is look around and realize that stupid clowns get elected to powerful positions in democratic systems, and idiots dispute easily proven scientific facts, for example by claiming that the world is flat. Also in our everyday life we do stupid things just because "we have always done so". So, it is stupidity that drives you! People in general are not designed to make critical observations. Instead, we are wired to behave as a crowd in a socially acceptable manner. Being stupid.

Two gentlemen, Mr. Alvesson and Mr. Spicer, took a very close look at this phenomenon in the context of an ordinary workplace. Their book The Stupidity Paradox investigates it.

Stupidity: The entire book is written around the concept of functional stupidity. The authors define functional stupidity as:

Functional stupidity is the inclination to reduce one's scope of thinking and focus only on the narrow, technical aspects of the job.

Paradox: For an organization, focusing on a narrow scope is often beneficial. Cutting corners makes everybody's life easier and consumes fewer resources. It is simply thoughtless and useful. Here comes the paradox: your useful thing might be very destructive without you knowing it.

Nobody is safe from functional stupidity. Smart people especially are able to wrap themselves in a "comfort zone" or a bubble, where everything they do and say appears smart. Outside the bubble, things may look a bit different.

The book describes functional stupidity arising from five different sources:

- Leadership:

- Often leaders are deluded. They really don't have a grasp what's going on. It doesn't stop them making decisions, though.

- Structure:

- Add bureaucracy. If there isn't enough information to do one's job, just add more forms to be filled in and a couple of guidelines to get that information. What harm could dozens of contradicting guidelines and mandatory forms cause, right?

- Alternatively, just change the existing organizational structure to "improve" it. Throw in a couple of "promotions" with a really cool title and a minor pay raise, without any actual change to the tasks, to improve morale. Do this a couple of times and everybody will be completely lost.

- Imitation:

- "Since everybody else is doing it, we must do it too". However, whatever "it" is, they may be doing it in a different context and with some kind of minor tweak. Also, in reality not "everybody" does it. Such activities typically amount to corporate window dressing, with few actual changes.

- Branding:

- Sometimes a consultant approaches corporate execs and tells them that the way others perceive the corporation is somehow wrong or bad. This triggers an instant image "improvement" campaign to make changes, which are based not on reality but merely on bullshit. Often, the results are not something you'd really want to be proud of.

- Culture:

- Getting bad news really sucks, right? Getting bad news about your own organization really, really sucks, right? Easy fix: let's decide to never tell each other bad news! The result is a corporate culture where you cannot criticise anything, cannot bring forward potential improvements, and cannot even tell that the corporation is in a downward spiral.

At the end of the book, the authors cover the most important part: how to manage functional stupidity. How to detect it and how to make sure it doesn't cause more harm. If such a mighty force is left un-wrangled, lots of damage can be done. Ultimately the cure is simple: create a culture where smart people can observe and criticise day-to-day practices, and where top-level execs take the feedback seriously. Sometimes the emperor is naked instead of having new clothes.

Of all the vivid examples of stupidity in the book, my favorite is the Credit Crunch of 2007. Really smart (but greedy) people created a money-making machine called CDOs. Those smart people made tons of money for themselves and their employers, but lost focus on the big picture. We know how that ended. I read through this book with mixed feelings: sometimes laughing out loud, but at the same time feeling like crying when the description of functional stupidity ripped open old wounds.

Long live ReCaptcha v1!

Thursday, April 5. 2018

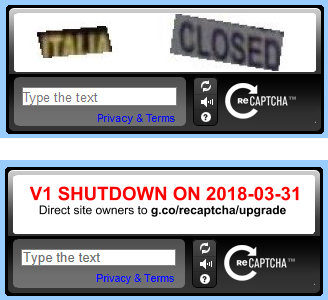

Ok. It's dead! It won't live long.

That seems to surprise a few people. I know it did surprise me.

Google has had this info on their website for a couple of years already:

What happens to reCAPTCHA v1?

Any calls to the v1 API will not work after March 31, 2018.

Starting in November 2017, a percentage of reCAPTCHA v1 traffic will begin to show a notice informing users that the old API will soon be retired.

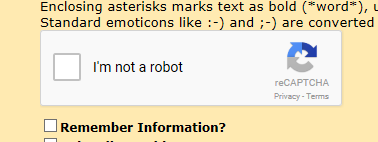

Yup. This blog showed information like this in the comment form:

Now that the above deadline has passed, I had to upgrade the S9y ReCaptcha plugin from the git repo at https://github.com/s9y/additional_plugins/tree/master/serendipity_event_recaptcha. There is no released version of that plugin yet.

Now comments display the v2-style:

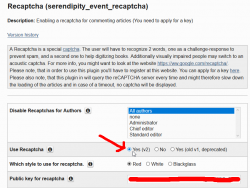

To get it running, I simply fetched the plugins/serendipity_event_recaptcha subdirectory with its contents from GitHub and went to the plugin settings:

I just filled in the new API-keys from https://www.google.com/recaptcha and done! Working! Easy as pie.
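For the curious, the secret key is what the server side uses to check each comment. With reCAPTCHA v2, the widget puts a token into the form field `g-recaptcha-response`, and the server POSTs it together with the secret to Google's `siteverify` endpoint, which answers with JSON containing a `success` flag. This is a minimal sketch of that exchange with Python's standard library; it is not the S9y plugin's code, which does the equivalent in PHP.

```python
import json
import urllib.parse
import urllib.request

VERIFY_URL = "https://www.google.com/recaptcha/api/siteverify"


def build_verify_request(secret: str, token: str, remote_ip=None) -> urllib.request.Request:
    """Form-encoded POST carrying the secret key and the token from g-recaptcha-response."""
    fields = {"secret": secret, "response": token}
    if remote_ip:
        fields["remoteip"] = remote_ip          # optional per the API
    body = urllib.parse.urlencode(fields).encode("ascii")
    return urllib.request.Request(VERIFY_URL, data=body, method="POST")


def is_human(response_body: bytes) -> bool:
    """Interpret the JSON answer; anything but an explicit success is a rejection."""
    return json.loads(response_body).get("success") is True


def verify(secret: str, token: str, remote_ip=None, timeout: float = 10.0) -> bool:
    request = build_verify_request(secret, token, remote_ip)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return is_human(response.read())
```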

Update 5th April 2018:

Today, I found out that Spartacus has a ReCaptcha v2 plugin available to S9y users. No need to go the manual installation path.

Internet in a plane - Really?

Wednesday, April 4. 2018

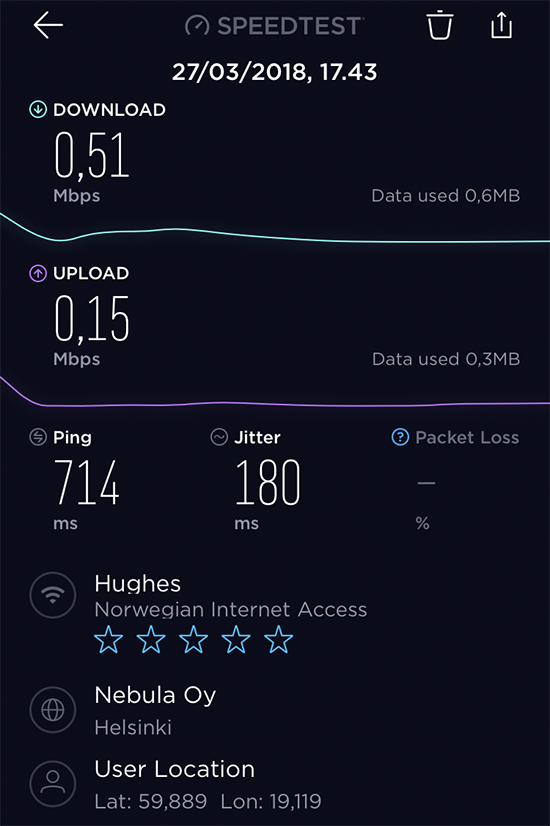

Last week I was sitting in an aeroplane and, being bored, I flicked my phone on to test the Wi-Fi. I had never done that before, so I just ran a Speedtest:

Yup. That's the reason I had never done that before.

Half a meg down, 150 up. That's like using a 56 kbit/s modem or 2G data for Internet. Both were cool initially, but the trick got old very fast given the "speed" or ... to be precise, the lack of it.

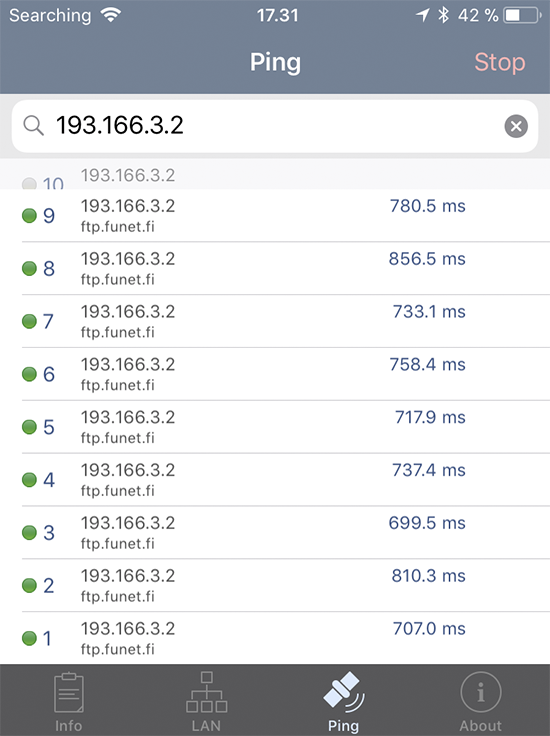

More investigation:

As expected, round-trip time was horrible. Definitely a satellite link. Or ... is it? But the answer to the topic's question is: no. There is no real Internet access mid-flight.
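Why a horrible round-trip time points at a satellite can be worked out from physics alone. Assuming a geostationary satellite (orbit altitude about 35,786 km) and ignoring the aircraft's own altitude, a request goes plane to satellite to ground station and the reply comes back the same way: four legs, each at least the orbit altitude even with the satellite straight overhead. This sketch computes that lower bound; real-world pings add processing and terrestrial routing on top.

```python
SPEED_OF_LIGHT_KM_S = 299_792.458
GEO_ALTITUDE_KM = 35_786  # geostationary orbit altitude above the equator


def min_geo_rtt_ms(altitude_km: float = GEO_ALTITUDE_KM) -> float:
    """Lower bound for a ping over one geostationary hop in each direction."""
    # Request: plane -> satellite -> ground station (2 legs).
    # Reply:   ground station -> satellite -> plane (2 legs).
    path_km = 4 * altitude_km
    return path_km / SPEED_OF_LIGHT_KM_S * 1000


print(f"{min_geo_rtt_ms():.0f} ms")  # 477 ms
```

Anything below roughly half a second would rule out a geostationary link; a terrestrial or LTE-based connection would typically be far faster.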

The exit IP address was in the 82.214.239.0/24 block belonging to Hughes Network Systems GmbH. I took a peek at the Hughes Communications Wikipedia page at https://en.wikipedia.org/wiki/Hughes_Communications and yes, they have a German subsidiary with the same name.

After I landed and was in the safe hands of a 150 Mbit/s LTE connection, I did some more googling. Side note: when your Internet access gets a 100x boost, it sure feels good!

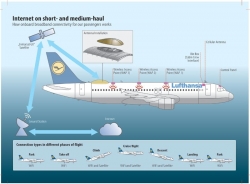

There is a Quora article, "How does Wi-Fi internet access in an airplane work?", with the following diagram:

That suggests a satellite connection. I also found "The Science of In-Flight Wi-Fi: How Do We Get Internet At 40,000 Feet?" from travelpulse.com, but it had some irrelevant information about a 3G connection being used. That surely was not the case here, and I seriously doubt such a thing would be used anywhere in Europe.

Ultimately the issue was settled when I found the article "Row 44 to begin installing connectivity on Norwegian's 737-800s" from flightglobal.com. So, it looks like a company called Row 44 builds in-flight systems for commercial flights. They lease existing infrastructure from HughesNet, who can offer Internet connectivity pretty much everywhere in the world.

The Wikipedia article Satellite Internet access mentions that a number of corporations are planning to launch a huge number of satellites for Internet access. Hm. That sounds like Teledesic to me. The obvious difference is that today, building a network of satellites is something you can actually do. Back in the IT bubble of 2001, it was merely a dream.

Replacing Symantec certificates

Monday, March 19. 2018

Little bit of background about having certificates

A quote from https://www.brightedge.com/blog/http-https-and-seo/:

Google called for “HTTPS Everywhere” (secure search) at its I/O conference in June 2014 with its Webmaster Trends Analyst Pierre Far stating: “We want to convince you that all communications should be secure by default”

So, anybody with any sense in their head has moved to having their website prefer HTTPS as the communication protocol. For that to happen, an SSL certificate is required. In practice any X.509 certificate would do the trick for encryption, but anybody visiting your website would get all kinds of warnings about it. An excellent website with failing certificates is https://badssl.com/. The exact error you would see with a randomly selected certificate can be demonstrated at https://untrusted-root.badssl.com/.

Google, as the industry leader, has taken a huge role in driving the certificate business in the direction it sees fit. They're hosting the most used website (google.com, according to https://en.wikipedia.org/wiki/List_of_most_popular_websites) and the most used web browser (Chrome, according to https://en.wikipedia.org/wiki/Usage_share_of_web_browsers). So, when they say something, it has a major impact on the Internet.

What they have said is to start using secure HTTP for communications. There is an entire web page by Google about Marking HTTP As Non-Secure, with a timeline of how every single website needs to use HTTPS or risk being undervalued by GoogleBot and flagged as insecure to the web browsing audience.

Little bit of background about what certificates do

Since people publishing their stuff on the Net, like me, don't want to be downvalued or flagged as insecure, having a certificate is kinda mandatory. And that's what I did. A couple of years ago, in fact.

I have no interest in paying big bucks for properly validated certificates, so I simply went for the cheapest possible Domain Validated (DV) cert. All validation types are described in https://casecurity.org/2013/08/07/what-are-the-different-types-of-ssl-certificates/. My reasoning for why, in my opinion, those different verification types are completely bogus can be found in my blog post from 2013, HTTP Secure: Is Internet really broken?. The quote from sslshopper.com is:

"SSL certificates provide one thing, and one thing only: Encryption between the two ends using the certificate."

Nowhere in the technical specification of certificates can you find anything related to actually identifying the other party you're encrypting your traffic with. An X.509 certificate has attributes in it which may suggest that the other party is who the certificate says it is, but that's an assumption at best. There is simply no way of you KNOWING it. What the SSL certificate industry wants you to believe is that all kinds of expensive verification on their part makes your communications more secure. In reality it's just smoke and mirrors, a hoax. Your communications are equally well encrypted using the cheapest or the most expensive certificate.

Example:

You can steal an SSL certificate from Google.com and set up your own website using it as your certificate. It doesn't make your website Google, even if the certificate suggests so.

Little bit of background about Symantec failing to do certificates

Nobody from Symantec or its affiliates informed me about this. However, I follow the security scene and bumped into news about a dispute between Google and Symantec. This article is from 2015 in The Register: Fuming Google tears Symantec a new one over rogue SSL certs. A quote from the article says:

On October 12, Symantec said they had found that another 164 rogue certificates had been issued in 76 domains without permission, and 2,458 certificates were issued for domains that were never registered.

"It's obviously concerning that a certificate authority would have such a long-running issue and that they would be unable to assess its scope after being alerted to it and conducting an audit,"

So, none of this is new. This is what all those years of fighting resulted in: Replace Your Symantec SSL/TLS Certificates:

Near the end of July 2017, Google Chrome created a plan to first reduce and then remove trust (by showing security warnings in the Chrome browser) of all Symantec, Thawte, GeoTrust, and RapidSSL-issued SSL/TLS certificates.

And: 23,000 HTTPS certs will be axed in next 24 hours after private keys leak.

In short:

They really dropped the ball. First they issued 164 certificates which nobody actually ordered from them, including one for google.com. Then private keys for 23k already issued certificates somehow "leaked" via Trustico, a reseller of their certificates.

That's really unacceptable for a company that, in its own words, is the "Global Leader In Next-Generation Cyber Security". That's what the Symantec website https://www.symantec.com/ says, still today.

What next?

Symantec has a website called Check your website for Chrome distrust at https://www.websecurity.symantec.com/support/ssl-checker.

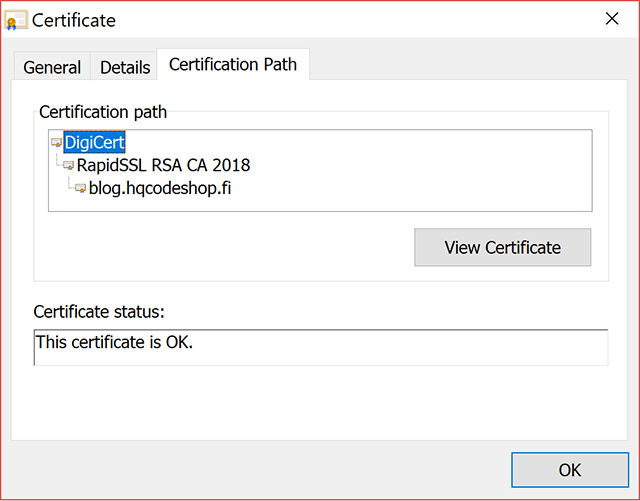

I did check the cert of this blog, and yup, it flagged the certificate as needing immediate replacement. The certificate details are:

```
Certificate:
    Data:
        Version: 3 (0x2)
        Serial Number:
            24:6f:ae:e0:bf:16:8d:e5:7a:13:fb:bd:1e:1f:8d:a1
        Signature Algorithm: sha256WithRSAEncryption
        Issuer: C=US, O=GeoTrust Inc., CN=RapidSSL SHA256 CA
        Validity
            Not Before: Nov 25 00:00:00 2017 GMT
            Not After : Feb 23 23:59:59 2021 GMT
        Subject: CN=blog.hqcodeshop.fi
```

That's a GeoTrust Inc.-issued certificate. GeoTrust is a subsidiary of Symantec. I studied the history of Symantec's certificate business: back in 2010 they acquired VeriSign's certificate business, resulting in ownership of Thawte and GeoTrust. RapidSSL is the el-cheapo brand of GeoTrust.
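A quick way to spot a Symantec-family issuer on your own sites is to look at the issuer organization of the served certificate. This is a rough sketch with Python's standard `ssl` module; the set of organization names is an assumption based on the brands mentioned above, and Chrome's actual distrust rule was based on the Symantec-operated roots and issuance dates, not on these strings, so treat a match only as a hint to check further.

```python
import socket
import ssl

# Assumption: issuer organization names of Symantec-owned brands (compared case-insensitively).
SYMANTEC_ORGS = {"symantec corporation", "geotrust inc.", "thawte, inc.", "verisign, inc."}


def issuer_field(cert: dict, field: str):
    """Find a field in the 'issuer' RDN tuples returned by SSLSocket.getpeercert()."""
    for rdn in cert.get("issuer", ()):
        for key, value in rdn:
            if key == field:
                return value
    return None


def looks_symantec_issued(cert: dict) -> bool:
    org = issuer_field(cert, "organizationName")
    return org is not None and org.lower() in SYMANTEC_ORGS


def fetch_peer_cert(hostname: str, port: int = 443, timeout: float = 10.0) -> dict:
    """Connect with certificate verification enabled and return the parsed peer certificate."""
    context = ssl.create_default_context()
    with socket.create_connection((hostname, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=hostname) as tls:
            return tls.getpeercert()
```

For example, `looks_symantec_issued(fetch_peer_cert("blog.hqcodeshop.fi"))` would have matched the RapidSSL certificate shown above, since its issuer organization is GeoTrust Inc.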

As instructed, I just re-issued the existing certificate. It resulted in:

Now, my certificate traces back to a DigiCert CA root.

That's all good. You and I can continue browsing my blog without unnecessary this-website-is-not-secure warnings.

Xyloband - What's inside one

Sunday, March 18. 2018

If you're lucky enough to go to a really cool event, it may hand out a Xyloband to everybody attending.

For those who've never heard of a Xyloband, go see their website at http://xylobands.com/. It has some sample videos, which this screenshot was taken from:

See those colourful dots in the above pic? Every dot is a person wearing a Xyloband on their wrist.

As you can see, mine is from King's Kingfomarket, Barcelona 2017. There is a YouTube video from the event, including some clips from the party, at https://youtu.be/lnp6KjMRKW4. In the video, for example at 5:18, there is our CEO wearing the Xyloband on his right wrist, and at 5:20 one of my female colleagues with a flashing Xyloband. Because the thing on your wrist can somehow be remote controlled, it creates an extremely cool effect to have it flashing to the beat of the music, or creating colourful effects in the crowd. So, ultimately you get to participate in the lighting of the venue.

After the party, nobody wanted those bands back, so of course I wanted to pop the cork on one. I had never even heard of such a thing and definitely wanted to see what makes it tick. The back of a Xyloband has a bunch of Phillips-head screws:

Given the size of the circular bottom, I guessed there would be a CR2032 battery in it, and the guess was correct:

After removing the remaining 4 screws, I found two more CR2016 batteries:

The pic has only two batteries visible, but the white tray indeed has two cells in it. The button cell spec (https://en.wikipedia.org/wiki/Button_cell) gives a CR2032 a diameter of 20 mm and a height of 3.2 mm, while a CR2016 is 20 mm by 1.6 mm. So, if you need 6 VDC instead of the 3 VDC a single cell can produce, just put in two CR2016 cells instead of one CR2032. They take exactly the same space as a CR2032, but provide double the voltage. Handy, huh! My thinking is that 9 VDC is a bit high for such a system. Having one part running on 6 volts and another on 3 volts would make more sense to me.
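The battery arithmetic above follows from the IEC naming of lithium coin cells: in "CRddhh", the first two digits are the nominal diameter in millimetres and the last two the height in tenths of a millimetre. This is a small sketch of that rule, assuming a nominal 3 V per cell and a series connection when cells are stacked; actual diameters can deviate slightly from the rounded name.

```python
from __future__ import annotations

import math
import re

NOMINAL_CELL_VOLTAGE = 3.0  # nominal voltage of one lithium "CR" coin cell


def decode_cr(name: str) -> tuple[float, float]:
    """Return the nominal (diameter_mm, height_mm) encoded in a CRddhh name."""
    match = re.fullmatch(r"CR(\d{2})(\d{2})", name.upper())
    if match is None:
        raise ValueError(f"not a CRddhh coin cell name: {name!r}")
    diameter_mm = float(match.group(1))    # first two digits: diameter in mm
    height_mm = int(match.group(2)) / 10   # last two digits: height in 0.1 mm
    return diameter_mm, height_mm


def stack(*names: str) -> tuple[float, float, float]:
    """Diameter, total height and series voltage of cells stacked on each other."""
    dims = [decode_cr(n) for n in names]
    diameter = max(d for d, _ in dims)
    height = sum(h for _, h in dims)
    return diameter, height, NOMINAL_CELL_VOLTAGE * len(dims)


single = stack("CR2032")
double = stack("CR2016", "CR2016")
# Same footprint and height, twice the voltage.
print(math.isclose(single[1], double[1]), single[2], double[2])  # True 3.0 6.0
```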

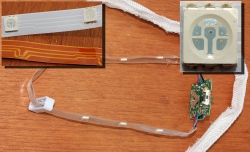

With the plastic cover removed, the board of a Xyloband looks like this:

With the nylon wristband removed, there is a flexible 4-wire cable with 8 RGB LEDs in it:

The circuits driving the thing are:

The upper one is an Atmel PLCC-32 chip with the text Atmel XB-RBG-02 on it. If I read the last line correctly, it says ADPW8B. Very likely an 8-bit Atmel microcontroller tailored for Xylobands to drive the RGB LEDs.

The radio chip at the bottom is a Silicon Labs Si4362. The spec is at https://www.silabs.com/documents/public/data-sheets/Si4362.pdf. A quote from the spec says:

Silicon Labs Si4362 devices are high-performance, low-current receivers covering the sub-GHz frequency bands from 142 to 1050 MHz. The radios are part of the EZRadioPRO® family, which includes a complete line of transmitters, receivers, and transceivers covering a wide range of applications.

Given this, they're just using off-the-shelf Silicon Labs RF parts to transmit data to the individual devices, with the Si4362 on the band being the receiving end. This data can be fed into the microcontroller, lighting the RGB LEDs the way the party's DJ wants.

While investigating this, I found a YouTube video by Mr. Breukink at https://youtu.be/DdGHo7BWIvo?t=1m33s. He manages to "reactivate" a different model of Xyloband in his video. Of course he doesn't hack the RF protocol (which would be very, very cool, btw.), but he makes the LEDs light up with a color of your choosing. In a real-life situation, when driven by the Atmel chip, the RGB LEDs can produce any color. Still, a nice hack.

Arch Linux failing to start network interface, part 2

Saturday, March 17. 2018

I genuinely love my Arch Linux. It is a constant source of mischief, in a positive sense. There is always something changing that makes the entire setup explode. The joy I get is when I need to put the pieces back together.

In the Wikipedia article of Arch Linux, there is a phrase:

... and expects the user to be willing to make some effort to understand the system's operation

That is precisely what I use my Arch Linux for. I want practical experience with and understanding of the system. And given its rolling-release approach, it explodes plenty.

Back in 2014, Arch Linux implemented Consistent Network Device Naming. At that time the regular network interface names changed. For example, my eth0 became ens3. My transition was not smooth. See my blog post about that.

Now it happened again! Whaat?

Symptoms:

- Failure to access the Linux-box via SSH

- Boot taking very long time

- Error message about service sys-subsystem-net-devices-ens3.device failing on startup

Failure:

Like the previous time, the failure was about the DHCP client.

A vanilla query for the DHCP client status:

systemctl status dhcpcd@*

... resulted in nothing. A more specific query for the failing interface:

systemctl status dhcpcd@ens3

... results:

```
* dhcpcd@ens3.service - dhcpcd on ens3
   Loaded: loaded (/usr/lib/systemd/system/dhcpcd@.service; enabled; vendor pre>
   Active: inactive (dead)
```

Yup. DHCP failure. Like previously, running ip addr show revealed the network interface name change:

```
2: enp0s3: mtu 1500 qdisc fq_codel state UP group default qlen 1000
    link/ether 52:54:52:54:52:54 brd ff:ff:ff:ff:ff:ff
```

There is no more ens3, it is enp0s3 now. Ok.

Fix:

A simple disable of DHCP for the non-existent interface, and an enable for the new one:

```
systemctl disable dhcpcd@ens3
systemctl enable dhcpcd@enp0s3
```

To test that, I rebooted the box. Yup. Working again!
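Since renames like this can happen again, spotting a stale dhcpcd@ instance could be scripted. This is a minimal sketch under two assumptions: enabled instances show up as symlinks in `/etc/systemd/system/multi-user.target.wants` (which is where `systemctl enable` puts them when the unit is wanted by multi-user.target), and every non-loopback interface in `/sys/class/net` should run DHCP, which only holds for a simple single-NIC box like this one. It prints the systemctl commands rather than running them.

```python
import os

WANTS_DIR = "/etc/systemd/system/multi-user.target.wants"  # assumption, see above
PREFIX, SUFFIX = "dhcpcd@", ".service"


def enabled_dhcpcd_interfaces(wants_dir: str = WANTS_DIR) -> set:
    """Interfaces that have an enabled dhcpcd@<iface>.service instance."""
    return {name[len(PREFIX):-len(SUFFIX)] for name in os.listdir(wants_dir)
            if name.startswith(PREFIX) and name.endswith(SUFFIX)}


def present_interfaces(sysfs: str = "/sys/class/net") -> set:
    """Interfaces the kernel currently knows about, minus loopback."""
    return set(os.listdir(sysfs)) - {"lo"}


def plan_dhcpcd_changes(enabled: set, present: set) -> list:
    """systemctl commands that move dhcpcd to the interfaces that actually exist."""
    commands = [f"systemctl disable {PREFIX}{iface}" for iface in sorted(enabled - present)]
    commands += [f"systemctl enable {PREFIX}{iface}" for iface in sorted(present - enabled)]
    return commands


# On the box described above this would print the two commands used in the fix:
# print("\n".join(plan_dhcpcd_changes(enabled_dhcpcd_interfaces(), present_interfaces())))
```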

Optional fix 2, for the syslog:

Debugging this wasn't as easy as I expected. dmesg had nothing on DHCP clients and there was no kernel message log at all! Whoa! Who ate that? I know that a default installation of Arch does not have syslog. I did have it running (I think), and now it was gone. Weird.

Documentation is at https://wiki.archlinux.org/index.php/Syslog-ng, but I simply did a:

```
pacman -S syslog-ng
systemctl enable syslog-ng@default
systemctl start syslog-ng@default
```

... and a 2nd reboot to confirm that the syslog existed and contained boot information. Done again!

What:

The subject of Consistent Network Device Naming is described in more detail here: https://access.redhat.com/documentation/en-us/red_hat_enterprise_linux/7/html/networking_guide/ch-consistent_network_device_naming

Apparently, there are five different approaches to actually implementing CNDN. The old ens3 name follows the PCI Express hotplug slot index (s for slot, Scheme 2), while the new enp0s3 name follows the physical location of the connector (Scheme 3): PCI bus 0 (p0), slot 3 (s3).
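The naming schemes can be told apart mechanically. This sketch decodes a simplified subset of systemd's predictable names and maps them to the scheme numbers used in the Red Hat guide linked above (1 onboard index, 2 hotplug slot, 3 PCI path, 4 MAC address, 5 traditional ethX). It deliberately ignores suffixes such as PCI domain (P), function (f), device port (d) and USB paths (u), so names using them come back as "unknown".

```python
import re

# Two-letter type prefixes used by systemd's predictable interface names.
TYPE_PREFIXES = {"en": "ethernet", "wl": "wlan", "ww": "wwan"}

# (scheme name, Red Hat guide scheme number, regex for the part after the prefix).
NAME_SCHEMES = [
    ("onboard", 1, re.compile(r"o(?P<index>\d+)")),
    ("hotplug_slot", 2, re.compile(r"s(?P<slot>\d+)")),
    ("pci_path", 3, re.compile(r"p(?P<bus>\d+)s(?P<slot>\d+)")),
    ("mac", 4, re.compile(r"x(?P<mac>[0-9a-f]{12})")),
]


def parse_ifname(name: str) -> dict:
    """Decode a network interface name into its naming scheme and numbers."""
    if re.fullmatch(r"eth\d+", name):
        return {"type": "ethernet", "scheme": "traditional", "rh_scheme": 5}
    kind = TYPE_PREFIXES.get(name[:2])
    if kind is None:
        return {"type": "unknown", "scheme": "unknown"}
    for scheme, number, pattern in NAME_SCHEMES:
        match = pattern.fullmatch(name[2:])
        if match:
            fields = {key: value if key == "mac" else int(value)
                      for key, value in match.groupdict().items()}
            return {"type": kind, "scheme": scheme, "rh_scheme": number, **fields}
    return {"type": kind, "scheme": "unknown"}


print(parse_ifname("ens3"))
print(parse_ifname("enp0s3"))
```

Both names point at the same virtual NIC; only the piece of hardware topology used to derive the name differs.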

The information on when, what and why the naming scheme change was made eludes me. I tried searching the Arch discussion forums at https://bbs.archlinux.org/, but found nothing there. But anyway, I got the pieces back together. Again!

Update 30th March 2018:

Yup. The interface naming rolled back. Now ens3 is the interface used again. Darnation, this naming flapping!

Microsoft Virtual Security Summit

Wednesday, March 14. 2018

I got an ad from Microsoft about a security summit they were organizing. Since it was virtual, I didn't have to travel anywhere, and the agenda looked interesting, so I signed up.

Quotes:

- Michael Melone, Microsoft

- Jim Moeller, Microsoft, about infosec referring to Michael Melone sitting next to him

- Patti Chrzan, Microsoft

Discussion points:

- Security hygiene

- Run patches to make your stuff up-to-date

- Control user's access

- Invest in your security to make the attackers' ROI low enough that they attack somebody else

- Security is a team sport!

- Entire industry needs to share and participate

- Law enforcement globally needs to participate

- Attacks are getting more sophisticated.

- 90% of cybercrime starts from a sophisticated phishing mail

- Once a network is breached, new malware can steal a domain admin's credentials and infect secured machines as well.

- Command & control traffic can utilize stolen user credentials and the corporate VPN to pass through the firewall.

- Attackers are financially motivated.

- Ransomware

- Bitcoin mining

- Petya/NotPetya being an exception; it just caused massive destruction

- Identity is the perimeter to protect

- Things are in the cloud, there is no perimeter

- Is the person logging in really who he/she claims to be?

- Enabling 2-factor authentication is vital

Finally:

Goodbye CAcert.org - Welcome Let's Encrypt!

Sunday, March 11. 2018

A brief history of CAcert.org

For almost two decades, my primary source for non-public facing X.509 certificates has been CAcert.org. They were one of the first ever orgs handing out free-of-charge certificates to anybody who wanted one. Naturally, you had to pass a simple verification to prove that you actually could control the domain you were applying a certificate for. When you did that, you could issue certificates with multiple hostnames or even wildcard certificates. And all that costing nothing!

The obvious problem with CAcert.org has always been that they were not included in any of the major web browsers. Their inclusion list at https://wiki.cacert.org/InclusionStatus is a sad read. It doesn't even mention Google Chrome, currently the most popular browser. The list simply hasn't been updated during the lifetime of Chrome! On second thought, looking at it a bit closer, the browser inclusion status list is an accurate statement of how CAcert.org is doing today. Not so good.

Wikipedia page https://en.wikipedia.org/wiki/CAcert.org has a brief history. Their root certificate was included in initial versions of Mozilla Firefox. When the CA/Browser Forum was formed back in 2005 by some of the certificate vendors of that time, to put a set of rules and policies in place for web site certificates and their issuance, they kicked pretty much everybody out by default. Commercial vendors made the cut back in, but CAcert.org simply couldn't (or wouldn't) comply with those rules and withdrew their application for membership. The reason CAcert.org is not able to act on anything is that the entire org is (and has been for a long time) pretty much dead. All the key people are simply busy doing something else.

Today, the current status of CAcert.org is that their certs are not trusted and are signed with hash algorithms that are no longer accepted. Over a year ago, there was a blip of activity and they managed to re-sign their root certificate with SHA-256, but to me it looks like they exhausted all their energy on the actual signing, and the newly signed root certs were never published. I wrote a post about how to actually get your hands on the new root certificate and install it on your machines.

Today, the CA/Browser Forum is mostly controlled by Google and Mozilla, and a stalled CAcert.org would not be accepted as a member. At the same time, there is huge pressure to start using properly signed and hashed certificates for all web traffic. I've run out of road with CAcert.org. So, for me, it's time to move on!

A brief history of Let's Encrypt

Two years ago, the certificate business had been hit hard. I collected a number of failures in my blog post about What's wrong with HTTPS. At roughly the same time, the Electronic Frontier Foundation (EFF) also saw the situation as unacceptable. Businesses wanted serious money for their certs, but were not doing an especially good job of trustworthy business practices. With the help of some major players (including Mozilla Foundation, Akamai Technologies, Cisco Systems, etc.), they created Let's Encrypt, a non-profit organization with the sole purpose of issuing free-of-charge certificates to anybody who wanted one.

They managed to pull that off: in a very short period of time, they became the most prominent certificate authority on the Internet. At least their product price is right, €0 for a cert. And you can request and get as many as you want. There are some rate limits, so you cannot denial-of-service them, but for any practical use, you can get all your certs from them. For free!

The practical benefit of Let's Encrypt's operation is that the number of web servers on The Net with HTTPS enabled has been rising. Both the EFF and the CA/Browser Forum strongly suggest that all HTTP traffic should be encrypted, aka HTTPS. But the obvious hurdle in achieving that is that everybody needs a certificate on their web server to enable encryption. With Let's Encrypt, now everybody can have one! The EFF has stated for a long time that having secure communications shouldn't be about money; it should be about you simply wanting to do it. The obvious next move is that in the coming years the CAB Forum will announce that all web traffic MUST be encrypted. However, we're not quite there yet.

Briefly on Let's Encrypt tech

Since they wanted to disrupt the certificate business, they abandoned the existing operating procedures. Their target is to run the entire request/issue process fully automated and to do that in a secure manner. To achieve that, they created a completely new protocol, Automatic Certificate Management Environment, or ACME. The RFC draft can be seen at https://datatracker.ietf.org/doc/draft-ietf-acme-acme/.

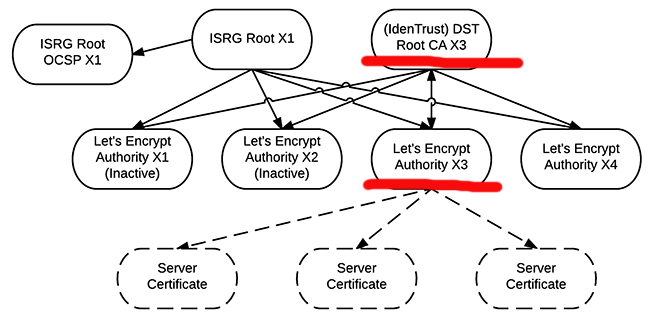

Their chain of trust (taken from https://letsencrypt.org/certificates/) is a bit confusing to me:

As the root certificate, they use Digital Signature Trust Co. (the red line with IdenTrust DST Root CA X3). That's something your browser has had for years, so ultimately they didn't have to add anything. When you request a certificate, it is issued by the intermediate authority Let's Encrypt Authority X3. And at the bottom level is your very own server certificate.

However, I don't understand why ISRG Root X1 double-signs all the intermediates and signs only their OCSP data. My computers don't have that root certificate installed. So, what's the point of that?

As a note:

This is the recommended and typical way of setting up your certificate chain, nothing funny about that. To a layman it will seem overly complex, but in the X.509 world things are done like that for security reasons: it allows "burning" an intermediate CA in a split second and rolling forward to a new one without interruptions or the need to install anything on your machines.

What the actual connection between Let's Encrypt and Digital Signature Trust Co. (IdenTrust) is remains unclear to me. If somebody knows, please drop a comment clarifying that.

My beef with Let's Encrypt

Short version: It's run by idiots! So, Let's not.

Long version:

- Tooling is seriously bad.

- The "official" python-based software won't work on any of my machines. And I don't want to try fixing their shit. I just let those pieces of crap rot.

- To me, Acme Inc. is heavily associated with Wile E Coyote

- Mr. Coyote never managed to catch the Road Runner. Many times it was because the equipment manufactured by Acme Inc. failed to function. https://en.wikipedia.org/wiki/Acme_Corporation


- Did I mention about the bad tools?

- Every single tool I've ever laid my hands on wants to mess up my Apache and/or Nginx configuration.

- I have a very simple rule about my configurations: DON'T TOUCH THEM! If you want to touch them, ask me first (at which point I can reject the request).

- There seems to be no feasible way of running those crappy tools without them trying to break my configs first.

- Most 3rd-party written libraries are really bad

- There are libraries/tools for all imaginable programming languages implementing parts/all of ACME-protocol.

- Every single one of them is either bad or worse.

- Certificate life-span is 90 days. That's three (3) months!

- The disruptive concept behind this is that you need to renew your certs every 60 days (or at most every 90 days). For that to happen, you will need to learn automation. Noble thought, that. Not so easy to implement for all possible usages. The good thing is that, if ever achieved, you won't suffer from certificates expiring without you knowing about it.

- As an alternative, you can get a completely free-of-charge SSL certificate from Comodo, a commercial CA, which is valid for 90 days, but you have to get it manually using Comodo's web GUI. See https://ssl.comodo.com/free-ssl-certificate.php if you want one.

- I won't list here the commercial CAs that can issue you a free 30-day trial certificate, because the list is too long. So, if you just want a short-lived cert, you have been able to get one for a long time.

- They're not able to issue wildcard certificates, yet.

- This is in the works, so mentioning this is hitting them below the belt. Sorry about that.

- From their test-API, you can get a wildcard certificate, if you really, really, really need one. It won't be trusted by your browser, but it will be a real wildcard cert.

- Original release date for ACME v2 API in production was 27th Feb 2018, but for <insert explanation here> they were unable to make their own deadline. New schedule is during Q1/2018. See the Upcoming Features -page for details.
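The 60/90-day renewal cadence from the list above boils down to a date comparison. A minimal Python sketch, assuming the certificate's notAfter string is in the format Python's ssl module returns from SSLSocket.getpeercert() (e.g. "Jan  5 09:34:43 2018 GMT") and assuming a 30-day renewal window, which matches renewing around day 60 of 90:

```python
import ssl
import time
from typing import Optional

SECONDS_PER_DAY = 86400


def days_left(not_after: str, now: Optional[float] = None) -> float:
    """Days until the notAfter time, e.g. "Jan  5 09:34:43 2018 GMT"."""
    # cert_time_to_seconds() parses the getpeercert() format and treats it as UTC.
    expires = ssl.cert_time_to_seconds(not_after)
    if now is None:
        now = time.time()
    return (expires - now) / SECONDS_PER_DAY


def needs_renewal(not_after: str, now: Optional[float] = None,
                  renew_days_before: int = 30) -> bool:
    """True once the cert is inside its renewal window.

    Assumption: with a 90-day lifetime, a 30-day window means renewing around day 60.
    """
    return days_left(not_after, now) <= renew_days_before
```

In a renewal job, not_after would come from `getpeercert()["notAfter"]` of a verified connection to the server. Whatever tool does the actual renewal is left out; this only answers "is it time yet?".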

My solution

It's obvious that I need to ditch CAcert.org, and the only viable alternative is Let's Encrypt (run by idiots). To make this bitter choice work for me, after evaluating a number of solutions, I found a reasonable/acceptable tool called acme.sh. It is written in Bash and uses curl for ACME access. The author publishes the entire package on GitHub, and I have my own fork of it, which doesn't have a single line of code even thinking about touching my web server configurations. You can get it at https://github.com/HQJaTu/acme.sh

Since I love Rackspace for their cloud DNS, I even wrote a Rackspace Cloud DNS API plugin for acme.sh to make my automated certificate renewals work seamlessly. After carefully removing all other options for domain verification, it is 100% obvious that I will do all of my ACME domain verifications via DNS only. Actually, for wildcard certs, it is the only allowed approach. Also, some of the certificates I'm using are for appliances, which I carefully firewall out of the wild wild web. Those ridiculous web site verifications wouldn't work for me anyway.

And for those who are wondering: why Rackspace Cloud DNS? The answer is simple: price. They charge $0/domain. That is unlike most cloud/DNS service providers, who want actual money for their service. With Rackspace you'll get a premium GUI, a premium API and premium anycast DNS servers at the right price: free-of-charge. You will need to enter a valid credit card when you create the account, but as they state, they won't charge it unless you subscribe to a paid service. I've been running their DNS for years, and they have never charged me once. (If they see this blog post, they probably will!)

What I'm anxiously waiting for is the ACME v2 API release, which is due any day now. That will allow me to get my precious wildcard certificates using these new scripts.

Now that my chips are in, it's just me converting my systems to use the Wile E Coyote stuff for getting/renewing certs. And I will need to add tons of automation with Bash, Perl, Ansible, Saltstack, ... whatever to keep the actual servers running as intended. Most probably, I will post some of my automations in this blog. Stay tuned for those!
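For the curious, this is roughly what a DNS verification publishes. A Python sketch of the dns-01 derivation from the ACME specification (later published as RFC 8555): the key authorization is the challenge token, a dot, and the base64url-encoded RFC 7638 thumbprint of the account's public key, and the TXT record at _acme-challenge.<domain> holds the base64url SHA-256 of that. It assumes an RSA account key whose JWK members e and n are already base64url-encoded; acme.sh handles all of this internally, so this is an illustration, not its code.

```python
import base64
import hashlib
import json


def b64url(data: bytes) -> str:
    """Base64url without '=' padding, as used throughout ACME/JOSE."""
    return base64.urlsafe_b64encode(data).rstrip(b"=").decode("ascii")


def jwk_thumbprint_rsa(e: str, n: str) -> str:
    """RFC 7638 thumbprint of an RSA public JWK whose e and n are already base64url."""
    # Only the required members, keys in lexicographic order, no whitespace.
    canonical = json.dumps({"e": e, "kty": "RSA", "n": n},
                           separators=(",", ":"), sort_keys=True)
    return b64url(hashlib.sha256(canonical.encode("utf-8")).digest())


def dns01_txt_value(token: str, thumbprint: str) -> str:
    """TXT record value: base64url(SHA-256(token + "." + thumbprint))."""
    key_authorization = f"{token}.{thumbprint}"
    return b64url(hashlib.sha256(key_authorization.encode("ascii")).digest())


def dns01_record_name(domain: str) -> str:
    """Where the TXT record goes; a wildcard is validated on its base domain."""
    base = domain[2:] if domain.startswith("*.") else domain
    return f"_acme-challenge.{base}"
```

A DNS plugin's job is then just to create the record named by `dns01_record_name()` with the value from `dns01_txt_value()`, wait for it to propagate, and clean it up afterwards.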

Azure payment failure

Thursday, March 8. 2018

Since last July, this blog has been running in Microsoft Azure.

In January, Microsoft informed me that I need to update my payment information or they'll cut off my service. Ever since, I've been trying to do that. To my amazement, I still cannot do it! There are JavaScript errors in their payment management panel, which seem to be impossible to fix.

So, eventually I got a warning that they will discontinue my service unless I pay. Well ... I'd love to pay, but ... For the time being, all I can do is back up the site and plan for setting up shop somewhere else. This is so weird!


EBN European Business Number scam - Part 3 - Gorila's findings

Friday, March 2. 2018

Update 25th June 2019: EBN scammers bankrupt

The text below is a comment from Mr.(?) Gorila on my previous EBN scam post. He kindly translated a German article from 2011 for an English-reading audience. Given the length of the text, I'm posting the unaltered comment here. I did add emphasis to the subtitles to make the article easier to read.

Why this is important is, of course, the legal precedent. EBN scammers sued somebody and lost!

So: DO NOT PAY! You will win your case in court.

So, to repeat, the text below is not mine, but I think it is very valuable for people following the EBN scam case.

Indeed, legal German is very difficult to translate into English. However, in 2010 there were many German articles addressing this court decision. The language of journal articles is simpler and easier to understand, yet such an article is informative and precise enough to convey the court ruling's message to the general public.

Here is a good one, with translation below (Please note, that the translation is not literal to avoid German idioms and phrases inconsistent with English language):

http://www.kostenlose-urteile.de/LG-Hamburg_309-S-6610_LG-Hamburg-zu-Branchenbuchabzocke-Eintragungsformular-Datenaktualisierung-2008-des-DAD-Deutscher-Adressdienst-erfuellt-Straftatbestand-des-Betrugs.news11513.htm

Judgement of Regional Court Hamburg (Urteil vom 14.01.2011 - 309 S 66/10)

Regional Court Hamburg on business directory rip-off:

Registration form "Data update 2008" of the DAD German Address Service constitutes the criminal offense of fraud

Based on an overall assessment, the court assumes an intent to deceive

The Regional Court Hamburg has upheld, in second instance, the claim of a customer who had sued DAD Deutscher Adress Dienst. DAD had entered the customer in the Internet address register at www.DeutschesInternetRegister.de without making it clear that the entry was subject to a charge. The customer was supposed to pay 2,280.04 euros for the entry. The customer hired a lawyer and went to court. There, he sought a declaration that he was not required to pay, and reimbursement of his legal fees. The District Court Hamburg-Barmbek ruled in the customer's favor, and the Regional Court Hamburg confirmed the judgment on appeal.

The defendant, DAD, is a business directory with about 1.2 million registered companies. The vast majority of the entries are free entries, which DAD copied from publicly available sources. The customer received a letter from DAD entitled "Data Update 2008". The letter requested a review of the existing data and an update if necessary. It also said: "The registration and updating of your basic data is free."

Only at the end of the form was an indication of the costs

An employee of the company then entered the missing data on the pre-printed form and sent it to DAD, as did a large number of authorities and tradespeople. Later, the company received an invoice for 2,280.04 euros for the entry, with reference to a cost indication in the lower quarter of the form (annual price of 958 EUR plus VAT).

Deception about actual costs is fraud

The Regional Court Hamburg assessed this in its judgment as fraud. The fact that DAD had quite specifically indicated in the letter that the offer carried a cost does not change this. Rather, what is decisive is that the act of deception in fraud is not only the pretending of false facts or the distortion or concealment of existing facts. Any other behavior can also qualify as deception, provided that it is capable of provoking a mistake on the part of the other person and influencing their decision to make the desired declaration of intent.

Deception exists when the victim of fraud, knowing all the circumstances, would act differently

On the other hand, it was not decisive whether the deceived person exercised the care required in business dealings or even acted negligently with regard to the omission of certain contractual information. Insofar as the customer's error was triggered by a legally relevant deception, the customer's claims do not fail because the error was caused by his own negligence in dealing with advertising mail.

The nature and design of the form produce erroneous ideas

This applies in particular where the author of a contract offer uses a presentation and wording designed to make the addressee form erroneous ideas about the actual parameters of the offer. A deception can be assumed even if the true character of the letter could be recognized after careful reading. This also follows from a judgment of the Federal Court of Justice of 26.04.2001, Az. 4 StR 439/00. The deception must have been used according to plan; it must not be merely a side effect, but the purpose of the action.

The cost notice at the end of the form could be overlooked by customers

According to the Federal Court of Justice, in the case of a merely misleading presentation in an offer letter, what matters above all is how strongly significant contractual parameters are presented, distorted or obscured. In the present case of DAD, the non-binding appearance of the request to review and correct already known data could cause at least some customers to overlook the price.

Form gave the impression of already existing contractual relations

Finally, another indication of the intended deception was that the form had already been pre-filled with the customer's data. Such an approach was apt to give the recipient the impression that it was not a novel business relationship but that it was intended to maintain or extend an existing contractual relationship.

Simple online entries do not usually cost 2,280 euros

It is also crucial that no addressee has to expect a total cost of over 1,900 euros for a simple online registration. The Regional Court Heilbronn decided a similar case with the same reasoning in its order of 23.06.2010, Az. 3 S 19/10.

Destiny 2 Nightingale error [Solved!]

Thursday, February 22. 2018

Last year, employees of (subsidiaries of) Activision/Blizzard who wanted one got a key for Destiny 2. Being one of those employees, I got one too.

It never worked! I never got to play it.

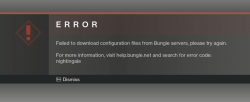

... and BANG! The dreaded Nightingale error:

For the past couple of months, that's how much I saw of Destiny 2. That isn't much. Darn!

Actually, the Internet is full of people having the same problem. There are various solutions to it, some of which have worked for some people and not so much for others.

After doing everything possible, including throwing dried chicken bones into a magical sand circle, I ran out of options. I had to escalate the problem to Blizzard Support. Since this wasn't a paid game, it obviously didn't reach their highest-priority queue. But ultimately the cogs of bureaucracy aligned and I got the required attention to my problem. But ... it was unsolvable. Or it seemed to be.

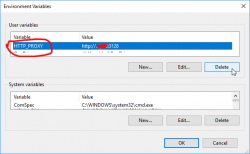

Today, after the problem was escalated back to Bungie, they pinpointed it. My computer didn't manage to reach their CDN, so the game got angry and spat the Nightingale in my face. They also gave me a hint about what my computer did instead and ...

Somewhere in the guts of the Destiny 2, there is a component reading the value of environment variable HTTP_PROXY. I had that set on the PC because of ... something I did for software development years ago.

After deleting the variable, the game started. WHOA!

So, it wasn't my router, DNS, firewall, or ... whatever I attempted before. Problem solved!
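For anyone chasing the same error, a quick way to spot leftover proxy settings is to list them. A minimal Python sketch; the set of variable names is an assumption (the conventional ones many HTTP clients honor), since I only know that Destiny 2 reads HTTP_PROXY, not which others it might look at.

```python
import os
from typing import Dict, Mapping, Optional

# Assumption: the conventional proxy variables; matched case-insensitively
# because both HTTP_PROXY and http_proxy are in common use.
PROXY_VARS = {"http_proxy", "https_proxy", "ftp_proxy", "all_proxy", "no_proxy"}


def find_proxy_vars(environ: Optional[Mapping[str, str]] = None) -> Dict[str, str]:
    """Return proxy-related environment variables that are set to a non-empty value."""
    if environ is None:
        environ = os.environ
    return {name: value for name, value in environ.items()
            if name.lower() in PROXY_VARS and value}
```

Calling `find_proxy_vars()` with no argument inspects the current process environment; anything it returns is worth a second look before blaming the router.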

MaxMind GeoIP database legacy version discontinued

Sunday, February 11. 2018

MaxMind GeoIP is pretty much the de facto way of doing IP-address-based geolocation. I've personally set up database updates from http://geolite.maxmind.com/download/geoip/database/ on at least a dozen different systems. In addition, there is a lot of open-source software that can use those databases if they are available: Wireshark, iptables and BIND DNS, to mention a few.

The announcement on their site says:

We will be discontinuing updates to the GeoLite Legacy databases as of April 1, 2018. You will still be able to download the April 2018 release until January 2, 2019. GeoLite Legacy users will need to update their integrations in order to switch to the free GeoLite2 or commercial GeoIP databases by April 2018.

In three months' time, most software won't be able to use freshly updated GeoIP databases anymore, for the sole reason that NOBODY bothered to update to the new .mmdb DB format.

To make this clear:

MaxMind will keep providing free-of-charge GeoIP-databases even after 1st April 2018. They're just forcing people to finally take the leap forward and migrate to their newer libraries and databases.

This is a classic case of human laziness. No developer saw an incentive to update to the new format, as it offers precisely the same data as the legacy format; it's just a new file format more suitable for the task. Now the incentive is there, and there isn't much time to make the transition. What we will see (I guarantee you this!) in 2019, 2020 and onwards is software still running on the legacy format, using outdated databases that provide completely incorrect answers.

This won't happen often, but these outdated databases will reject your access on occasion, or claim that you're a fraudster.
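To check which format a given database file on a system actually is, a minimal Python sketch can look for the metadata marker defined in MaxMind's DB format spec: the metadata section starts with the bytes \xAB\xCD\xEF followed by "MaxMind.com" and, marker included, is at most 128 KiB, so it sits at the end of the file. This only detects the new format; it does not validate a legacy .dat file, and real lookups should go through MaxMind's own readers, such as the geoip2 Python package.

```python
import os

# MaxMind DB format: the metadata section starts with this marker and,
# marker included, is at most 128 KiB, so it sits in the file's tail.
MMDB_METADATA_MARKER = b"\xab\xcd\xefMaxMind.com"
METADATA_MAX_SIZE = 128 * 1024


def is_mmdb(data: bytes) -> bool:
    """True if the tail of `data` contains the MaxMind DB metadata marker."""
    return MMDB_METADATA_MARKER in data[-METADATA_MAX_SIZE:]


def looks_like_geoip2(path: str) -> bool:
    """Read only the last 128 KiB of a file and check it for MMDB metadata."""
    with open(path, "rb") as fh:
        fh.seek(0, os.SEEK_END)
        size = fh.tell()
        fh.seek(max(0, size - METADATA_MAX_SIZE))
        return is_mmdb(fh.read())
```

Running `looks_like_geoip2()` over whatever paths a box's software is configured with quickly shows which components are still fed legacy files.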