EU Digital COVID Certificate, December 2021 edition

Sunday, December 5. 2021

Since my August blog post about vacdec, the utility to take a peek into COVID-19 passport internals, I've got lots of feedback and questions. The topic, obviously, touches all of us. As this global pandemic won't end, I've been following different events, occurrences and incidents around digital COVID certificates.

TV and Print media bloopers

To anybody working with software, computers, data, and encoding and transmitting data, especially in the era of GDPR, it's second nature to classify data. There is public data, confidential data and data containing GDPR-covered personal details, to classify by three simple criteria.

What's puzzling is how EU Digital COVID Certificate QR-codes were shown in media. Personally I contacted four different journalists in the fashion of "Hey, you published all of your personal details in the form of a QR-code. Please, retract and do not ever do that again." One journalist I talked to in person, and his comment was "I couldn't fathom this black-and-white blob should be kept secret!"

These leaks weren't by small and insignificant media. The codes I saw were shown in the 9 o'clock evening TV news (cinematographer's cert), on the website of a print media outlet (journalist's own cert), in an Internet news video (producer's cert) and in a printed newspaper (not sure whose cert that was).

As these bloopers aren't regularly seen anymore, it seems all of the media outlets have issued internal memos telling people not to display the QR-codes in a readable format. I have facts of one media outlet's internal memo informing people not to publish real certs publicly. One positive change is that most of them are using sample certs when they really want to portray a QR-code.

Brute-forcing ECDSA cryptography

In one instance, I bumped into a script kiddie's masterpiece. He was convinced of the possibility of brute-forcing the ECDSA-256 private key of one COVID Certificate issuer. I eyeballed the Python code and indeed, it approached the problem with a nearly-forever loop, picking 256 random bits and matching those bits against the public key. Nice! By carefully choosing the bits, that is how the thing works.

However, some suspicions rose. There was a comment in the discussion thread saying: "I don't think this code is working. My home computer has been crunching this for two weeks now and there doesn't seem to be any results."

Really! REALLY!!

These kids really have no clue.

Hints for brute-forcing ECDSA-crypto:

- If it were meant to be that easy, everybody would be cracking the private key.

- Don't do random bits, instead go through all 2^256 combinations in sequence. By going random, there is no mechanism to check if a combination has been attempted already.

- Go parallel, split the sequence into chunks and do it simultaneously with multiple computers.

- Two to 256th power is: 115792089237316195423570985008687907853269984665640564039457584007913129639936. If for some reason you come to a conclusion of that particular number being on the larger scale, your conclusion is correct. IT IS!

- If some magic lottery bounces your way and you happen to find the correct sequence, for the love of god, do not tell anybody else of your findings. It takes you a couple of gazillion years to brute-force the key, and the opposing party a couple of seconds to revoke it.

- Make sure to put in a couple of lottery tickets while at it. Chances are you'll become rich before finding the private key.
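To put the size of that number into perspective, here is a minimal back-of-the-envelope sketch in Python. The guess rate of a trillion keys per second is purely an assumption for illustration (no home computer gets anywhere near it), and `years_to_exhaust` is a hypothetical helper name, not something taken from the script in question.

```python
# Back-of-the-envelope estimate: how long does a sequential sweep of a
# 256-bit key space take? The guess rate is an assumed, very generous figure.

KEYSPACE = 2 ** 256                      # number of 256-bit combinations
SECONDS_PER_YEAR = 365 * 24 * 60 * 60    # non-leap year, good enough here


def years_to_exhaust(guesses_per_second: int, machines: int = 1) -> int:
    """Whole years needed to try every combination once."""
    per_year = guesses_per_second * machines * SECONDS_PER_YEAR
    return KEYSPACE // per_year


if __name__ == "__main__":
    rate = 10 ** 12  # assumption: a trillion guesses per second per machine
    for machines in (1, 1_000_000):
        years = years_to_exhaust(rate, machines)
        # The digit count of the result gives its order of magnitude.
        print(f"{machines:>9} machine(s): about 10^{len(str(years)) - 1} years")
```

Even with a million such machines running in parallel, the result stays dozens of orders of magnitude above the age of the universe, which is roughly 1.4 × 10^10 years.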

Forged Digital COVID Certificates

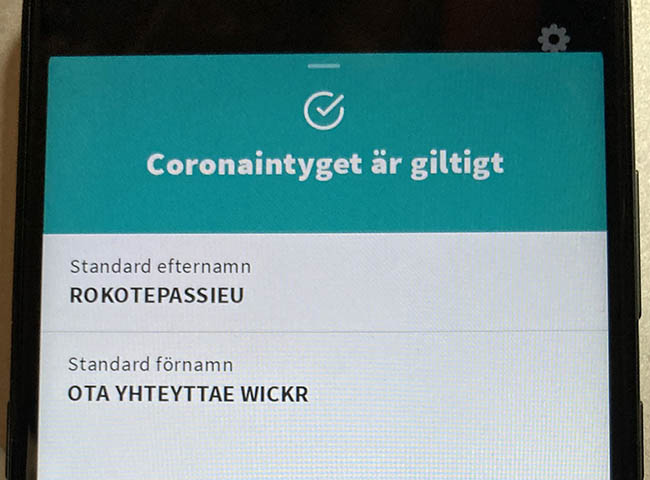

Somebody in Germany got their hands on an actual certificate-issuing private key and issued a couple of fully valid and totally verifiable COVID certificates for different names. There were at least three different ones circulating that I know of. Here is one (notice the green color indicating validity):

This one was targeting Finnish customer pool and had first name of "Rokotepassieu" which translates as "Vaccination passport EU". Surname translated: "Contact me via Wickr" (for those who don't do Wickr, it's an AWS owned messaging app with highest standards on security).

According to the Swedish government's list of valid certificates, Germany has 57 sets of keys in use (disclaimer: at the time of writing this post). What the German government had to do is revoke the public key whose private key was misplaced. Thousands of people who had a valid COVID cert had to get theirs again with a different signing key.

This kind of incident/leakage obviously caught the attention of tons of officials, making the sellers' business dry up rather swiftly. Unfortunately no details of this incident were disclosed. The general guess is that some underpaid person was doing darknet moonlighting on the side with his access to this rare and protected resource.

Leaked Digital COVID Certificates

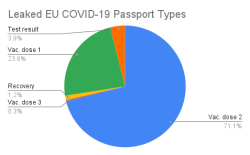

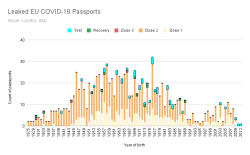

In Italy roughly a thousand COVID certs issued to actual people were made available. I downloaded and processed a couple of thousand files in four different leaks just to find out most of the leaked certs were duplicates. I did combine and de-duplicate publicly available data. Here are the stats:

All leaked certs are still valid (disclaimer: at the time of writing this post). However, Italian government has only three sets of keys in place and replacing one would mean rendering 1/3 of all issued certificates as invalid. They have not chosen to do that. Most likely because the certs in circulation are harvested by somebody doing the checking with a malicious mobile app.

No surprises there. Most of them are for twice vaccinated people. A couple of certs are found for three times vaccinated, test results and recovered ones. What's sad is the fact that such a leak exists. These QR-codes are for real human beings and their data should be handled with care. Not cool.

What next

This unfortunate pandemic isn't going anywhere. More and more countries are expanding the use of COVID certs in daily use. For any cert checking to make sense, the cert must be paired with person's ID-card. Crypto math with the certs is rock solid, humans are the weak link here.

Databricks CentOS 8 containers

Wednesday, November 17. 2021

In my previous post, I mentioned writing code into Databricks.

If you want to take a peek into my work, the Dockerfiles are at https://github.com/HQJaTu/containers/tree/centos8. My hope, obviously, is for Databricks to approve my PR and those CentOS images would be part of the actual source code bundle.

If you want to run my stuff, they're publicly available at https://hub.docker.com/r/kingjatu/databricks/tags.

To take my Python-container for a spin, pull it with:

docker pull kingjatu/databricks:python

And run with:

docker run -it --entrypoint bash kingjatu/databricks:python

Databricks containers really don't have an ENTRYPOINT in them. This atypical configuration is because the Databricks runtime takes care of setting everything up and running the commands in the container.

As always, any feedback is appreciated.

MySQL Java JDBC connector TLSv1 deprecation in CentOS 8

Friday, November 12. 2021

Yeah, a mouthful. On CentOS 8 Linux, there seems to be trouble connecting to MySQL / MariaDB from Java (JRE). I think this is a transient issue and eventually it will resolve itself. Right now the issue is real.

Here is the long story.

I was tinkering with Databricks. The nodes for my bricks were on CentOS 8 and I was going to a MariaDB in AWS RDS with MySQL Connector/J. As you've figured out, it didn't work! The following errors were in the exception backtrace:

com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

The last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.

com.mysql.cj.jdbc.exceptions.CommunicationsException: Communications link failure

Caused by: com.mysql.cj.exceptions.CJCommunicationsException: Communications link failure

javax.net.ssl.SSLHandshakeException: No appropriate protocol (protocol is disabled or cipher suites are inappropriate)

Weird.

Going to the database with a simple CLI-command of (test run on OpenSUSE):

$ mysql -h db-instance-here.rds.amazonaws.com -P 3306 \

-u USER-HERE -p \

--ssl-ca=/var/lib/ca-certificates/ca-bundle.pem \

--ssl-verify-server-cert

... works ok.

Note: This RDS-instance enforces encrypted connection (see AWS docs for details).

Note 2: The term used by AWS is SSL. However, SSL was superseded by TLS over two decades ago and the protocol actually used is TLS.

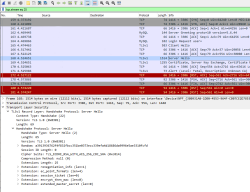

Two details popped out instantly: TLSv1 and TLS_ECDHE_RSA_WITH_AES_256_CBC_SHA cipher. Both deprecated. Both deemed highly insecure and potentially leaking your private information.

Why would anybody be using those? Don't MySQL/MariaDB/AWS -people remove insecure stuff from their software? What! Why!

Troubleshooting started. First I found SSLHandShakeException No Appropriate Protocol on Stackoverflow. It contains a hint about JVM security settings. Then MySQL documentation 6.3.2 Encrypted Connection TLS Protocols and Ciphers, where they explicitly state "As of MySQL 5.7.35, the TLSv1 and TLSv1.1 connection protocols are deprecated and support for them is subject to removal in a future MySQL version." Well, fair enough, but the bad stuff was still there in AWS RDS. I even found Changes in MySQL 5.7.35 (2021-07-20, General Availability) which clearly states TLSv1 and TLSv1.1 removal to be quite soon.

No amount of tinkering with jdk.tls.disabledAlgorithms in file /etc/java/*/security/java.security helped. I even created a simple Java-tester to make my debugging easier:

import java.sql.*;

// Code from: https://www.javatpoint.com/example-to-connect-to-the-mysql-database

// 1) Compile: javac mysql-connect-test.java

// 2) Run: CLASSPATH=.:./mysql-connector-java-8.0.27.jar java MysqlCon

class MysqlCon {

public static void main(String args[]) {

try {

Class.forName("com.mysql.cj.jdbc.Driver");

Connection con = DriverManager.getConnection("jdbc:mysql://db.amazonaws.com:3306/db", "user", "password");

Statement stmt = con.createStatement();

ResultSet rs = stmt.executeQuery("select * from emp");

while (rs.next())

System.out.println(rs.getInt(1) + " " + rs.getString(2) + " " + rs.getString(3));

con.close();

} catch (Exception e) {

System.out.println(e);

e.printStackTrace(System.out);

}

}

}

Hours passed by, but to no avail. Then I found the command update-crypto-policies. RedHat documentation Chapter 8. Security, 8.1. Changes in core cryptographic components, 8.1.5. TLS 1.0 and TLS 1.1 are deprecated contains a mention of the command:

update-crypto-policies --set LEGACY

As it does the trick, I followed up on it. In CentOS / RedHat / Fedora there is /etc/crypto-policies/back-ends/java.config, a symlink pointing to a file containing:

jdk.tls.ephemeralDHKeySize=2048

jdk.certpath.disabledAlgorithms=MD2, MD5, DSA, RSA keySize < 2048

jdk.tls.disabledAlgorithms=DH keySize < 2048, TLSv1.1, TLSv1, SSLv3, SSLv2, DHE_DSS, RSA_EXPORT, DHE_DSS_EXPORT, DHE_RSA_EXPORT, DH_DSS_EXPORT, DH_RSA_EXPORT, DH_anon, ECDH_anon, DH_RSA, DH_DSS, ECDH, 3DES_EDE_CBC, DES_CBC, RC4_40, RC4_128, DES40_CBC, RC2, HmacMD5

jdk.tls.legacyAlgorithms=

That's the culprit! It turns out any changes in the java.security file won't have any effect as the policy is loaded later. Running the policy change and setting it into legacy mode has the desired effect. However, running the ENTIRE system with such a bad security policy is bad. I only want to connect to RDS, why can't I lower the security on that only? Well, that's not how Java works.
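To check quickly whether the system-wide policy is what blocks a given protocol, the `jdk.tls.disabledAlgorithms` line can be parsed. Below is a minimal Python sketch, assuming the file uses the plain `key=value` layout of the listing above. `disabled_tls_algorithms` is a hypothetical helper and the sample text is a shortened copy of that listing.

```python
# Sketch: list what the crypto-policy disables for Java TLS.
# Assumes plain "key=value" lines as in the java.config listing.

SAMPLE_POLICY = """\
jdk.tls.ephemeralDHKeySize=2048
jdk.certpath.disabledAlgorithms=MD2, MD5, DSA, RSA keySize < 2048
jdk.tls.disabledAlgorithms=DH keySize < 2048, TLSv1.1, TLSv1, SSLv3, SSLv2
jdk.tls.legacyAlgorithms=
"""


def disabled_tls_algorithms(policy_text: str) -> list:
    """Return the entries of jdk.tls.disabledAlgorithms, in file order."""
    for line in policy_text.splitlines():
        key, sep, value = line.partition("=")
        if sep and key.strip() == "jdk.tls.disabledAlgorithms":
            return [item.strip() for item in value.split(",") if item.strip()]
    return []


if __name__ == "__main__":
    # On a real system, read /etc/crypto-policies/back-ends/java.config instead.
    disabled = disabled_tls_algorithms(SAMPLE_POLICY)
    for protocol in ("TLSv1", "TLSv1.1", "TLSv1.2"):
        state = "disabled" if protocol in disabled else "allowed"
        print(f"{protocol}: {state}")
```

Comparing the output before and after a policy change shows whether TLSv1 dropped off the disabled list.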

Entire troubleshooting session was way too much work. People! Get the hint already, no insecure protocols!

macOS Monterey upgrade

Monday, November 1. 2021

macOS 12, that one I had been waiting for. The reason in my case was WebAuthN. More about that is in my article about iOS 15.

The process is as you can expect. Simple.

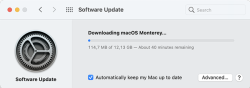

Download is big-ish, over 12 gigabytes:

After the wait, an install will launch. At this point I'll typically quit to create the USB-stick. This way I'll avoid downloading the same thing into all of my Macs.

To create the installer, I'll erase an inserted stick with typical command of:

diskutil partitionDisk /dev/disk2 1 GPT jhfs+ "macOS Monterey" 0b

Then change into /Applications/Install macOS Monterey.app/Contents/Resources and run command:

./createinstallmedia \

--volume /Volumes/macOS\ Monterey/ \

--nointeraction

It will output the customary erasing, making bootable, copying and done as all other macOSes before this:

Erasing disk: 0%... 10%... 20%... 30%... 100%

Making disk bootable...

Copying to disk: 0%... 10%... 20%... 30%... 40%... 50%... 60%... 70%... 80%... 90%... 100%

Install media now available at "/Volumes/Install macOS Monterey"

Now the stick is ready. Either boot from it, or re-run the Monterey installer from the App Store.

When all the I's have been dotted and T's have been crossed, you'll be able to log into your newly upgraded macOS and verify the result:

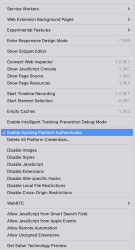

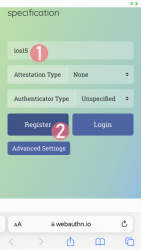

At this point disappointment hit me. The feature I was looking for, WebAuthN, or Syncing Platform Authenticator as Apple calls it, wasn't available in Safari. To get it working, follow the instructions in the Apple Developer article Supporting Passkeys. First enable the Developer-menu for your Safari (if you haven't already) and secondly, in it:

Tick the box on Enable Syncing Platform Authenticator. Done! Ready to go.

Now I went to https://webauthn.io/, registered an account with the Mac's Safari, and logged in with WebAuthN to confirm it works on the Mac's Safari. Then I took my development iPhone with iOS 15.2 beta and with iOS Safari went to the same site and logged in using the same username. Not using a password! Nice.

Maybe in the near future WebAuthN will be enabled by default for all of us. For now, unfortunately, tinkering is required. Anyway, this is a really good demo of how authentication should work, cross-platform, without using any of the insecure passwords.

OpenSSH 8.8 dropped SHA-1 support

Monday, October 25. 2021

OpenSSH is a funny beast. It keeps changing like no other SSH client does. Last time I bumped my head into the format of the private key. Before that, it was when CBC-ciphers were removed.

Something similar happened again.

I was just working with a project and needed to make sure I had the latest code to work with:

$ git pull --rebase

Unable to negotiate with 40.74.28.9 port 22: no matching host key type found. Their offer: ssh-rsa

fatal: Could not read from remote repository.

Please make sure you have the correct access rights and the repository exists.

What? What? What!

It worked on Friday.

Getting a confirmation:

$ ssh -T git@ssh.dev.azure.com

Unable to negotiate with 40.74.28.1 port 22: no matching host key type found. Their offer: ssh-rsa

Yes, SSH broken. As this was the third time I seemed to bump into SSH weirdness, it hit me. I DID update Cygwin in the morning. Somebody else must have the same problem. And again, confirmed: OpenSSH 8.7 and ssh-rsa host key. Going to https://www.openssh.com/releasenotes.html for version 8.8 release notes:

Potentially-incompatible changes
================================

This release disables RSA signatures using the SHA-1 hash algorithm by default. This change has been made as the SHA-1 hash algorithm is cryptographically broken, and it is possible to create chosen-prefix hash collisions for <USD$50K [1]

For most users, this change should be invisible and there is no need to replace ssh-rsa keys. OpenSSH has supported RFC8332 RSA/SHA-256/512 signatures since release 7.2 and existing ssh-rsa keys will automatically use the stronger algorithm where possible.

With a suggestion to fix:

Host old-host

HostkeyAlgorithms +ssh-rsa

PubkeyAcceptedAlgorithms +ssh-rsa

Thank you. That worked for me!

I vividly remember back in 2019 trying to convince Azure tech support they had SHA-1 in the Application Gateway server signature. That didn't go so well: no matter who Microsoft threw at the case, the poor person didn't understand TLS 1.2, server signatures and why changing the cipher wouldn't solve the issue. As my issue here is with Azure DevOps, it gives me an indication of how Microsoft will be sleeping through this security update too. Maybe I should create them a support ticket to chew on.

Why Windows 11 won't be a huge success

Tuesday, October 12. 2021

There is a lot of controversy around Microsoft's surprise release of Windows 11. I'm not talking about them declaring Windows 10 to be the "last Windows" and then releasing 11. The changes in the GUI have lots of discussion points too. Even the forced requirement of TPM2.0, which can be lowered to TPM1.2 with a registry change, is not the deal-breaker. Is somebody whispering "Vista" back there?

What really makes all the dominoes fall is the lack of CPU-support.

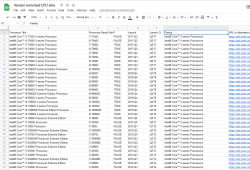

The graphs above visualize three pieces of information I scraped. First I took the list of Windows 11 supported CPUs from Windows Hardware Developer - Windows Processor Requirements. Then I went to https://ark.intel.com/ to collect Intel CPU data. I also did the same for https://www.amd.com/en/products/specifications/. As for some reason AMD releases only information from 2016 onwards, for the visualization I cut the Intel data to match the same.

Ultimate conclusion is, Microsoft won't support all Intel CPUs released after Q2 2017. With AMD the stats are even worse: the threshold is somewhere around Q2 2018 and not all CPUs are supported. Percentages for AMD are better, but their volume is also smaller.

If you want to do the same, I published my source code into https://github.com/HQJaTu/Windows-CPU-support-scraper. When run, it will produce a Google Spreadsheet like this:

... which can be visualized further to produce the above graphs.

One of my laptops is a Lenovo T570 from 2017. It ticks all the Windows 11 installer boxes, except CPU-support. Obviously, the CPU-support situation is likely to change over time, so I may need to keep running the scripts every quarter to see if there will be better support in the later releases of Windows 11.

WebAuthN Practically - iOS 15

Monday, September 20. 2021

Apple has recently released iOS 15, and iPadOS 15 and macOS 12 will be released quite soon. This is important because of Apple's native support for WebAuthN. In my WebAuthN introduction -post there is the release date for the spec: W3C Recommendation, 8 April 2021. Given the finalization of the standard, Apple was the first major player to step forward and start supporting proper passwordless authentication in its operating systems. For more details, see The Verge article iOS 15 and macOS 12 take a small but significant step towards a password-less future.

For the traditional approach with a USB-based Yubikey authenticator, see my previous post.

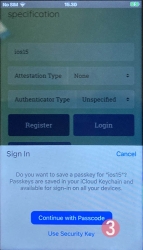

Registration

Step 1: Enter the username you'd like to register as.

Step 2: Go for Register

Step 3: Your browser will need a confirmation for proceeding with registration.

In Apple's ecosystem, the private key is stored into Apple's cloud (what!?). To allow access to your cloud-based secrets-storage, you must enter your device's PIN-code and before doing that, your permission to proceed is required.

Note: The option for "Use Security Key" is for using the Yubikey in Lightning-port. Both are supported. It is entirely possible to login using the same authenticator with a USB-C in my PC or Mac and Lightning with my iPhone or iPad.

Step 4: Enter your device PIN-code

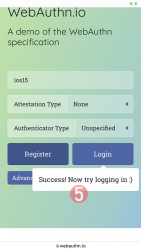

Step 5: You're done! Now you have successfully registered.

Best part: No passwords! The private key is stored into the Syncing Platform Authenticator. Btw, that is a weird name for WebAuthN in Apple-lingo. Ok, to be honest, WebAuthN is a mouthful too.

This was a couple of steps simpler than with the Yubikey. Also there is the benefit (and danger) of the cloud. Now your credential can be accessed from your other devices too.

Login

Step 1: Enter the username you'd like to log in as.

Step 2: Go for Login

Step 3: Your browser will need a confirmation for proceeding with login. A list of known keys and associated user names will be shown.

Step 4: Enter your device PIN-code

Step 5: You're done! Now you have successfully logged in.

Best part: No passwords!

That's it. Really.

Finally

I don't think there is much more to add into it.

In comparison to Yubikey, any of your Apple-devices are authenticators and can share the private key. Obviously, you'll need iOS 15 or macOS 12 for that support.

WebAuthN Practically - Yubikey

Sunday, September 19. 2021

Basics of WebAuthN have been covered in a previous post. Go see it first.

As established earlier, WebAuthN is about specific hardware, an authenticator device. Here are some that I use:

These USB-A / USB-C / Apple Lightning -connectable Yubikey devices are manufactured by Yubico. More info about Yubikeys can be found at https://www.yubico.com/products/.

Taking a WebAuthN authenticator for a test-drive is very easy. There is a demo site run by Yubico at https://demo.yubico.com/ containing a WebAuthN section. However, as a personal preference I like Duo Security's demo site better. This Cisco Systems, Inc. subsidiary specializes in multi-factor authentication and is doing a great job running a WebAuthN demo site at https://webauthn.io/.

Registration

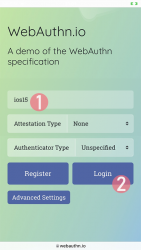

This illustrated guide is run using a Firefox in Windows 10. I've done this same thing with Chrome, Edge (the chromium one) and macOS Safari. It really doesn't differ that much from each other.

In every website, a one-time user registration needs to be done. This is how WebAuthN would handle the process.

Step 1: Enter the username you'd like to register as.

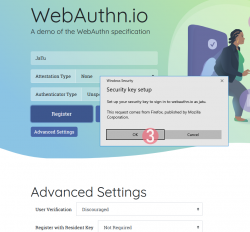

Step 2: Go for Register

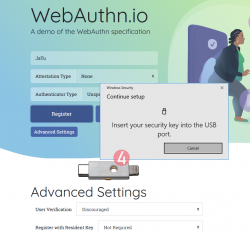

Step 3: Your browser will need a confirmation for proceeding with registration.

The main reason for doing this is to make you, as the user, aware that this is not a login. Also the authenticator devices typically have limited space available for authentication keys. For example: Yubikeys have space for 25 keys in them. The downside is that the high level of security yields a low level of usability: you cannot list nor manage the keys stored. What you can do is erase all of them clean.

Step 4: Insert your authenticator into your computing device (PC / Mac / mobile).

If authenticator is already there, this step will not be displayed.

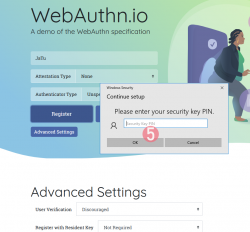

Step 5: Enter your authenticator PIN-code.

If you have not enabled the second factor, this step won't be displayed.

To state the obvious caveat here, anybody gaining access to your authenticator will be able to log in as you. You really should enable the PIN-code for increased security.

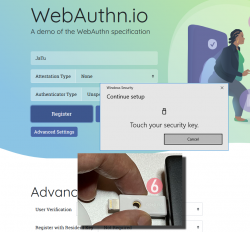

Step 6: Touch the authenticator.

The physical act of triggering the registration is a vital part of WebAuthN. A computer, possibly run by a malicious cracker, won't be able to use your credentials without human interaction.

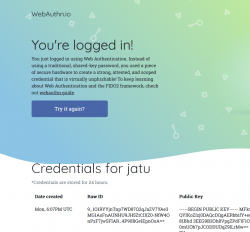

Step 7: You're done! Now you have successfully registered.

Best part: No passwords!

In this Duo Security test site, the sandbox will be raked on a daily basis. Naturally, on a real non-demo site your information will be persisted much longer. Also note how your contact information, like e-mail address, mobile number or such, wasn't asked. A real site would obviously query more of your personal details. Secondly, WebAuthN best practice is to have multiple authenticators associated with your user account. If you happen to misplace the device used initially for registration, having a backup or two is advisable.

Next, let's see how this newly created user account works in a practical login -scenario.

Login

Step 1: Enter the username you'd like to log in as.

Step 2: Go for Login

Step 3: Insert your authenticator into your computing device (PC / Mac / mobile).

If authenticator is already there, this step will not be displayed.

Step 4: Enter your authenticator PIN-code.

If you have not enabled the second factor, this step won't be displayed.

Step 5: Touch the authenticator.

Again, human is needed here to confirm the act of authentication.

Step 6: You're done! Now you have successfully logged in.

Best part: No passwords!

Note how the public key can be made, well... public. It really doesn't make a difference if somebody else gets hold of my public key.

Closer look into The Public Key

As established in the previous post, you can not access the private key. Even you, the owner of the authenticator device, can not access that information. Nobody can lift the private key, possibly without you knowing about it. Just don't lose the Yubikey.

Public-part of the key is known and can be viewed. The key generated by my Yubikey in PEM-format is as follows:

-----BEGIN PUBLIC KEY-----

MFkwEwYHKoZIzj0CAQYIKoZIzj0DAQcDQgAERbbifY+euxnszcMis99CsnH81Bhd

3EEG9B2Oh8VpgZPdFlF1OQ8FEbfuSxbbAK+l0mUOb7pJCODDUDqZ9lLrMw==

-----END PUBLIC KEY-----

Popping the ASN.1 cork with openssl ec -pubin -noout -text -in webauthn-pem.key will result in:

read EC key

Public-Key: (256 bit)

pub:

04:45:b6:e2:7d:8f:9e:bb:19:ec:cd:c3:22:b3:df:

42:b2:71:fc:d4:18:5d:dc:41:06:f4:1d:8e:87:c5:

69:81:93:dd:16:51:75:39:0f:05:11:b7:ee:4b:16:

db:00:af:a5:d2:65:0e:6f:ba:49:08:e0:c3:50:3a:

99:f6:52:eb:33

ASN1 OID: prime256v1

NIST CURVE: P-256

From that we learn, the key-pair generated is an ECDSA 256-bit one. Known aliases for the curve are secp256r1, NIST P-256 and prime256v1. That weird naming refers to elliptic curves, aka. named curves.

For those into math, the actual arithmetic equation of the secp256r1 -named curve can be viewed in an open-source book by Svetlin Nakov, PhD at https://cryptobook.nakov.com/. All the source code in this freely available book is at https://github.com/nakov/practical-cryptography-for-developers-book. The mathematical theory of how WebAuthN signs the messages is described in detail at https://cryptobook.nakov.com/digital-signatures/ecdsa-sign-verify-messages.

Back to those "pub"-bytes. Reading RFC5480 indicates out of those 65 bytes, the first one, valued 04, indicates this data being for an uncompressed key. With that information, we know rest of the bytes are the actual key values. What remains is a simple act of splitting the remaining 64 bytes into X and Y, resulting two 32-byte integers in hex:

X: 45b6e27d8f9ebb19eccdc322b3df42b271fcd4185ddc4106f41d8e87c5698193

Y: dd165175390f0511b7ee4b16db00afa5d2650e6fba4908e0c3503a99f652eb33

A simple conversion with bc will result in decimal:

X: 31532715897827710605755558209082448985317854901772299252353894644783958819219

Y: 100000572374103825791155746008338130915128983826116118509861921470022744730419

Yes, that's 77 and 78 decimal digits in them. Feel free to go after the prime number with that public information!
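The same split and conversion can be done without bc. Here is a minimal Python sketch, assuming the colon-separated bytes are pasted as-is from the "pub"-section of the openssl output. `split_uncompressed_point` is a hypothetical helper name and it handles only the uncompressed 65-byte form discussed here.

```python
# Sketch: split an uncompressed EC public key point into X and Y.
# The bytes are copied from the "pub:" section of the openssl dump.

PUB_DUMP = """
04:45:b6:e2:7d:8f:9e:bb:19:ec:cd:c3:22:b3:df:
42:b2:71:fc:d4:18:5d:dc:41:06:f4:1d:8e:87:c5:
69:81:93:dd:16:51:75:39:0f:05:11:b7:ee:4b:16:
db:00:af:a5:d2:65:0e:6f:ba:49:08:e0:c3:50:3a:
99:f6:52:eb:33
"""


def split_uncompressed_point(dump: str) -> tuple:
    """Return (x, y) as integers from a colon-separated hex dump."""
    # Drop whitespace and colons, then decode the remaining hex digits.
    raw = bytes.fromhex("".join(dump.split()).replace(":", ""))
    if len(raw) != 65 or raw[0] != 0x04:
        raise ValueError("expected a 65-byte uncompressed P-256 point")
    x = int.from_bytes(raw[1:33], "big")   # first 32 bytes after the 04 marker
    y = int.from_bytes(raw[33:65], "big")  # remaining 32 bytes
    return x, y


if __name__ == "__main__":
    x, y = split_uncompressed_point(PUB_DUMP)
    print(f"X: {x:064x}")
    print(f"Y: {y:064x}")
    print(f"X: {x}")
    print(f"Y: {y}")
    print(f"X has {len(str(x))} decimal digits, Y has {len(str(y))}")
```

Python integers are arbitrary precision, so no special handling is needed for 256-bit values.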

Finally

The mantra is: No passwords.

With WebAuthN, you'll get hugely improved security with multiple authentication factors built into it. What we need is this to spread and go into popular use!

WebAuthN - Passwordless registration and authentication of users

Monday, September 13. 2021

WebAuthN, most of you have never heard of it but can easily understand it has something to do with authenticating "N" in The Web. Well, it is a standard proposed by World Wide Web Consortium or W3C. In spec available at https://www.w3.org/TR/webauthn/, they describe WebAuthN as follows:

Web Authentication:

An API for accessing Public Key Credentials

Level 2

W3C Recommendation, 8 April 2021

What motivates engineers into inventing an entirely new form of authenticating end users to web services is obvious. Passwords are generally a bad idea as humans are not very good at using memorized secrets in multiple contexts. Your password is supposed to be a secret, but when it leaks from a website and is made public for The World to see, your secret isn't a secret anymore. Using a different password for every possible website isn't practical without proper tooling (which many of you won't utilize for a number of reasons). As all this isn't new and I've already covered it in numerous posts, for example Passwords - Part 3 of 2 - Follow up, this time I'm not going there.

Instead, let's take a brief primer on how authentication works. To lay some background for WebAuthN, I'm introducing three generations of authentication. Honestly it is weird how I cannot find anybody's previous work on how to define 1st gen or 2nd gen auth mechanisms. As usual, if you want to have something done properly, you'll have to do it by yourself. I'm defining the generations of authentication techniques here.

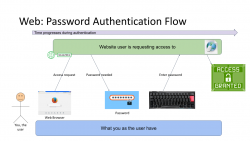

First Generation: Password - a memorized secret

This is your basic password-scenario, something most of us know and understand easily. In the picture below is something I drew to illustrate the flow of events during user authentication. On the left is you, the user. You want to log in to a website you've registered to before. As anybody with access to The Internet, anywhere on the globe, can show up and claim to be you, the mechanism to identify you being YOU is the secret you chose during the registration process, aka. your password.

Process goes as follows: You open a web browser, go to a known address of the website, when authentication is requested, using a keyboard (touch screen or a real keyboard) enter your password credential to complete the claim of you being you. Finally as your password matches what the website has in its records, you are granted access and go happily forward.

The obvious weakness in this very common authentication mechanism has already been explained. What if somebody gets your password? They saw you typing it, or found the yellow post-it sticker next to your monitor, or ... many possibilities of obtaining your secret, including a guess. To mitigate this, there is MFA or 2FA to introduce Multi-Factor Authentication (or Two-Factor), but for the sake of introducing this generation, we skip that. It's an add-on and doesn't really alter this most commonly used process.

It's the simplicity that made this original one the most popular method of authentication. Nothing special really needed and this approach kinda works. It won't resist much of an attack, but it works.

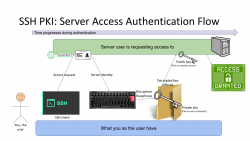

Second Generation: Public Key Infrastructure (PKI)

One of the most prominent attempts to stop using passwords is PKI. As this mechanism isn't widely used in The Web, note the difference from the 1st gen diagram: this is not web, this is Secure Shell, or SSH.

In reality, you can use SSH with passwords also, but it is typical not to. Actually, I always (no exceptions!) disable password authentication in my servers by introducing PasswordAuthentication no into sshd_config. Simply by not using passwords, my security improves a lot.

Second reality check: In HTTP/1.1 you can use certificate (aka. PKI) authentication. However, on currently used HTTP/2 that is not possible. Quote: "[RFC7540] prohibits renegotiation after any application data has been sent. This completely blocks reactive certificate authentication in HTTP/2". So, that's effectively gone in the form used previously. And no, to those shouting "my national ID-card has an ISO-7816 -chip in it and I can use the certificate in it to authenticate!". That tech isn't based on any of the web standards. All nations invented their own stack of custom software attaching a running process in your computer to your browser's Security Device API. As this isn't even commonly used, this changes nothing and I'll stand behind my claim: In web there really isn't PKI-auth in use.

SSH authentication process goes as follows: On your computer (or mobile device), you open your SSH-client to enter a known server. Authentication is an integral part of a connection and cannot really be bypassed. On authentication, you will get the server's identity as a response and your client will verify the address+signature -pair against a previously stored value to make sure the server is in fact The Server you have accessed before. Next your private key will be needed. You have generated this during the "registration" process (in SSH there really isn't registration, but declaring the keys to be used can be considered one). Best practice is to have your private keys encrypted, so to access the key I need to enter a password (pass phrase) for decryption. Note the difference from the previous gen. here: neither the password I type nor my private key leaves my computer, only a data packet signed with my private key will. Given PKI-math, the server can verify my signature using the public key on that end. As the signature matches, the server has verified the claim of me being me and allows access.
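The sign-and-verify step can be sketched with a toy example. The Python below is a deliberate simplification, assuming textbook RSA with tiny hard-coded primes so it runs on the standard library alone (Python 3.8 or newer). The names `client_sign` and `server_verify` are hypothetical, real SSH signs data tied to the session rather than a bare challenge, and real keys are vastly larger.

```python
# Toy sketch of signature-based authentication. Textbook RSA with tiny
# primes, for illustration only: never use numbers like these for real keys.
import hashlib
import secrets

# "Registration": the client generates a key pair (assumed toy parameters).
P, Q = 61, 53
N = P * Q                            # public modulus
E = 17                               # public exponent
D = pow(E, -1, (P - 1) * (Q - 1))    # private exponent, stays on the client


def digest(message: bytes) -> int:
    """Hash the message and reduce it into the range of the toy modulus."""
    return int.from_bytes(hashlib.sha256(message).digest(), "big") % N


def client_sign(challenge: bytes) -> int:
    """Client side: sign the challenge with the private key."""
    return pow(digest(challenge), D, N)


def server_verify(challenge: bytes, signature: int) -> bool:
    """Server side: check the signature using only the public key (N, E)."""
    return pow(signature, E, N) == digest(challenge)


if __name__ == "__main__":
    challenge = secrets.token_bytes(16)      # fresh random data per login
    signature = client_sign(challenge)       # only this crosses the network
    print("login accepted:", server_verify(challenge, signature))
```

The point to notice is what the server needs: only `N` and `E`. The private exponent `D` never appears in `server_verify`.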

In general, this is a very nice and safe approach. The introduction of server identity increases your security as a random party cannot claim to act as "The Server". Also, the authentication mechanism used is seriously thought out, well engineered and complex enough to withstand any brute-forcing or guesswork. Even if your private key were encrypted with a super-easy passphrase, or not encrypted at all, it won't be easy for somebody else to mimic you. They really really really need to have your private key.
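To make the "previously stored value" check concrete, here is a minimal sketch of the trust-on-first-use comparison an SSH client does for the server identity. It is an illustration only: the function names and the dictionary standing in for the stored values are hypothetical, and a real client additionally makes the server prove it holds the matching private key.

```python
import base64
import hashlib


def fingerprint(host_key_blob: bytes) -> str:
    """SHA-256 fingerprint of a raw public host key, in the SHA256:... style."""
    digest = hashlib.sha256(host_key_blob).digest()
    # Base64 without the trailing padding character
    return "SHA256:" + base64.b64encode(digest).decode("ascii").rstrip("=")


def check_server(known_hosts: dict, address: str, host_key_blob: bytes) -> str:
    """Compare the offered host key against the value stored for this address.

    known_hosts is a hypothetical in-memory stand-in for the client's
    stored server identities.
    """
    seen = fingerprint(host_key_blob)
    stored = known_hosts.get(address)
    if stored is None:
        # First contact: remember the identity (trust on first use)
        known_hosts[address] = seen
        return "new"
    # Later contacts: the identity must be the same as before
    return "match" if stored == seen else "MISMATCH"
```

On the first connection the result is "new", on later connections "match", and a different key offered from the same address yields "MISMATCH", which is the moment your client should refuse to continue.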

There is no real obvious weakness in this (besides that this is not Web). What could be described as a "weakness" is the private key storage. What if it leaks? Your keys to "The Kingdom" are gone. Remember: the private keys should be encrypted to mitigate them being lost. However, your keys being lost/stolen is a much less likely incident than somebody guessing or harvesting your password from the server database. Even if you set your mind on generating a "poor" public/private key pair, it is in fact very secure. Much more secure than your default "123456" easily guessable password.

On the other hand, if your public key on the server side leaks, that's not a big deal. Servers do get hacked. Data does get stolen, but public is public. You can climb to a mountain and shout your public key for all to know (actually that's literally what GPG does). The math PKI uses in either RSA or ECDSA is considered really really very safe.

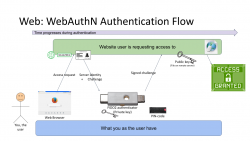

Third Generation: WebAuthN

Back to web with PKI. Building on top of first and second generations, there is an improvement: in WebAuthN the private key storage isn't a file in your computer. It's a FIDO2-compliant detachable hardware device. Note: in FIDO Alliance specs, the authenticator "device" can be software running in your computer (or mobile phone, or whatever you're using as your personal computer). However, most typically deployed WebAuthN-solutions do use actual physical devices you can plug in and out of your computer. The authenticator devices don't have any batteries, are powered from the computer and are typically small enough to be stored in your pocket.

The authentication process goes as follows: You open a web browser and go to a known address of the website. On the website you choose to log in, and when authentication is requested, the server will send its identification and a challenge as a response to your browser. The challenge is data which needs to be signed with your private key; the matching public key was stored on the server during the user registration process. As your browser will require help from the authenticator device, a connection will be made there. If your authenticator is protected by a PIN, a request to unlock is indicated by your browser. Using your keyboard, you punch in the PIN-code and your browser will pass on the challenge to the authenticator device. It will check the server's identification and see if it has a private key in its storage to match that. If it does, it will signal your browser about the found private key. You choose to go forward with authentication on your browser and the authenticator device will return the challenge with a signature added into it. Then your browser will deliver the signed data over the Net, the server on the other side will verify the signature using your public key and on a successful match you're in! This entire process is a variation of 2nd gen PKI-authentication.

Drawback is obvious: you need to have the device at hand to be able to log in. Most regular users like you don't have a compatible FIDO2 authenticator at hand. If you do, consider a failure scenario: what if you left the device at home? Or your dog ate it? Or you made an unfortunate mistake and dropped it into a toilet by accident? Well, good thing you thought of this and have configured a spare authenticator, right?

Ok, you being a carefree fellow, you forgot where you placed it. At this point you are eventually locked out of the remote system, which is bad.

What's good is the fact that your private key cannot be stolen. Nobody, including you, knows it or can access it. All you can do with a device is to get a signed challenge (besides the obvious act of adding a new key during registration). Your security is standing on two factors: the private key stored in an unbreakable device and the fact that a PIN-code is needed on an attempt to access any of the data. That is pretty safe. Worst-case scenario is when your authenticator doesn't have PIN-code protection and somebody gets their hands on it. Then they can present themselves as you.

As WebAuthN is a new and good thing, the only reason it isn't widely used at this point is the need for a specific type of hardware device. These devices are commonly available and they aren't too expensive either. A single device can even be used to authenticate into multiple sites. The availability problem is simply because there aren't too many manufacturers in existence yet.

Not to worry! This can be navigated around. Let's put on our future-goggles and look a bit ahead. What if "the device" needed here would be your mobile phone? Those things are commonly available and won't require anything else than operating system support and suitable software.

Practical WebAuthN

Enough theory! How does this work in real life?

Well, that's a topic for another blog post. I'll investigate the USB-A/USB-C/Apple Lightning -solution in closer detail. Also the upcoming mobile device option will be discussed.

macOS Big Sur 11.1 update fail

Monday, August 30. 2021

Big Sur was riddled with problems from the get-go. It bricked some models, started installing when there was not enough disk space available and all kinds of weirdness. Quite a few of the minor versions had issues.

I was stuck with version 11.0.1 for over half a year. Of course my Mac was hit by one of the problems. I tried navigating around the problem, contacted Apple Care (yay! finally got something out of that extra money) and they said a full install would be required. When doing the full install didn't work, I lost motivation and just ignored any updates for months.

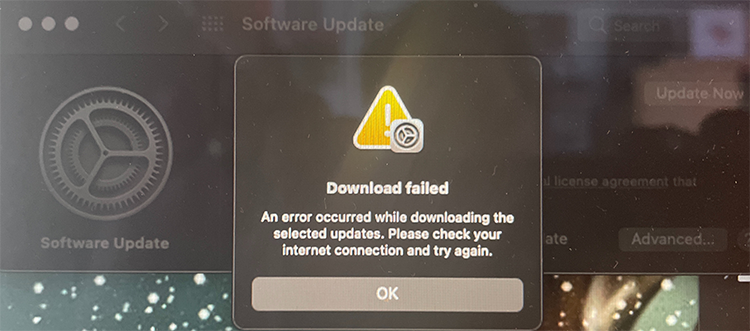

Going for the typical path of a macOS update, either by receiving a notification about the update or via App Store. They are pretty much the same thing, the difference being whether I actively sought updates or was reminded about them. Result:

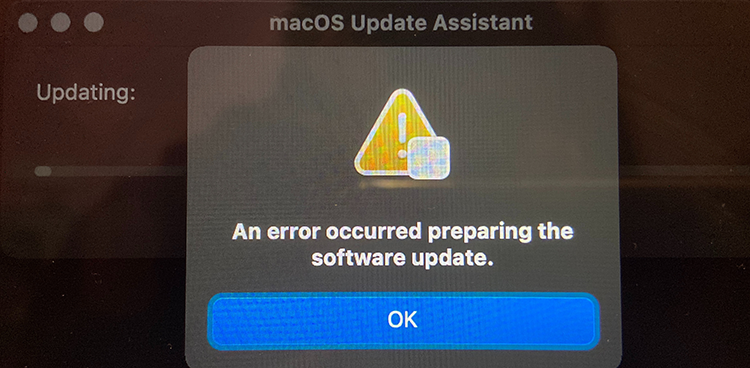

Ok. Doesn't seem to work. I tried downloading on Wi-Fi, on Ethernet with a couple of adapters, then I gave up and went for my favorite way of doing updates, a USB-stick. After preparing the stick and booting from it, quite soon I was informed about "A software update is required to use this startup disk".

Clicking Update resulted in an instant error:

Preparing something to do something didn't go as planned. Error message of "An error occurred preparing the software update" was emitted. Trying again wouldn't help. Booting into Internet recovery wasn't helpful either, error -2003F indicating failure to access resources over Internet. All avenues were explored, everything I knew was done. I totally lost motivation to attempt anything. All that time the Apple Care chat message "just do a clean reinstall" demotivated me more. I WAS TRYING TO DO A CLEAN REINSTALL!! It just didn't work.

I wasn't alone. Many many many users had exactly the same symptoms.

Months passed.

Macs are funny that way. Apple does "support" their old macOSes for security, but old hardware has a cap on what macOS will be the last one for that particular hardware. At the same time you absolutely positively need to fall forward into the latest macOS versions to have support for all the nice software. My Mac wasn't capped by obsoleted hardware, it was capped by Apple's own QA failing and allowing a deploy of an OS version that wouldn't work properly. In this case, I needed to update Xcode, the must-have Mac / iOS developer tool. The version I had running worked, but didn't support the new and shiny things I needed. I wasn't allowed to upgrade as my Big Sur version was too old.

You Maniacs! You blew it up! Ah, damn you! God damn you all to hell!

(For movie-ignorant readers, that quote is from the 1968 Planet of the Apes)

Need for a recent Xcode sparked my motivation. I went back to the grindstone and tried to force my thing to update. I re-did everything I had already done before. To no avail. Nothing worked. Every single thing I attempted resulted in a miserable failure. To expand my understanding of the problem I read everything I could find about the subject and got hints on what to try next. Apparently the problem was with the T2-chip.

For the umpteenth time I did a recovery boot and realized something I didn't recall anybody else mentioning:

Among numerous options to install or upgrade macOS I knew about, there is one additional approach in the recovery menu. And IT WORKED!

To possibly help out others still suffering from this, I left my mark into StackExchange https://apple.stackexchange.com/a/426520/251842.

Now I'm banging my head against a brick wall. Why didn't I realize that sooner?

Anyway, the Mac is updated, newer software is running. Until a similar thing happens the next time, that is.

Nokia 5.3 got Android 11 upgrade

Monday, August 16. 2021

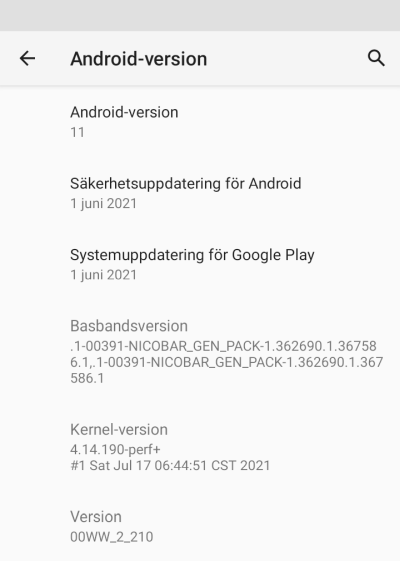

There it is:

This update was promised on 8th September 2020, roughly a year ago. See: https://twitter.com/nokiamobile/status/1303384763705819157

As promised, there it is. And for an iPhone user like me, taking a screenshot and sending it into processing via Gmail is nearly impossible. These same Nokia-guys used to make phones where every trivial thing required 5-8 button presses. Even if every generation of Android takes something out of my user experience, I still appreciate the fact they maintain and provide updates to these no-mandatory-Facebook -phones.

Keep it up!

Wi-Fi 6 - Part 2 of 2: Practical wireless LAN with Linksys E8450

Sunday, August 15. 2021

There is a previous post in this series about wireless technology.

Wi-Fi 6 hardware is available, but uncommon. Since its introduction three years ago, it is finally gaining popularity. A practical example of a sometimes-difficult-to-obtain part is a USB-dongle. Those have existed at least 15 years now. There simply is none with Wi-Fi 6 capability.

An additional twist is thrown at me, a person living in the EU region. For some weird (to me) reason, manufacturers aren't getting their radio transmitters licensed in EU. Only in US/UK. This makes Wi-Fi 6 appliances even less common here.

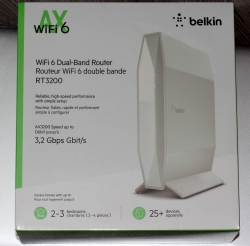

When I throw in my absolute non-negotiable requirement of running a reasonable firmware in my access point, I'll limit my options to almost nil. Almost! I found this in the OpenWRT Table-of-Hardware: Linksys E8450 (aka. Belkin RT3200). It is an early build considered as beta, but hey! All of my requirements align there, so I went for it in Amazon UK:

Wi-Fi 6 Access Point: Belkin RT3200

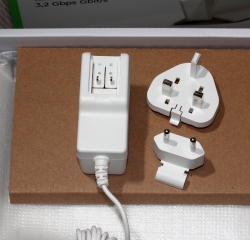

Couple of days waiting for UPS delivery, and here goes:

This is exactly what I wanted and needed! A four-port gigabit switch for wired LAN, incoming Internet gigabit connector. 12 VDC / 2 A barrel connector for transformer. Given UK power plugs are from 1870s they're widely incompatible with EU-ones. Luckily manufacturers are aware of this and this box contains both UK and EU plugs in an easily interchangeable form. Thanks for that!

Notice how this is a Belkin "manufactured" unit. In reality it is a relabeled Linksys E8450. Even the OpenWRT-firmware is exactly the same. Me personally, I don't care what the cardboard box says as long as my Wi-Fi is 6, is fast and is secure.

Illustrated OpenWRT Installation Guide

The thing with moving away from vendor firmware to OpenWRT is that it can be tricky. It's almost never easy, so this procedure is not for everyone.

To achieve this, there are a few steps needed. Actual documentation is at https://openwrt.org/toh/linksys/e8450, but be warned: the amount of handholding there is low, for a newbie there aren't many details. To elaborate on the process of installation, I'm walking through what I did to get OpenWRT running in the box.

Step 0: Preparation

You will need:

- Linksys/Belkin RT3200 access point

- Wallsocket to power the thing

- A computer with Ethernet port

- Any Windows / Mac / Linux will do, no software needs to be installed, all that is required is a working web browser

- Ethernet cable with RJ-45 connectors to access the access point's admin panel via LAN

- OpenWRT firmware from https://github.com/dangowrt/linksys-e8450-openwrt-installer

- Download files into a laptop you'll be doing your setup from

- Linksys-compatible firmware is at https://github.com/dangowrt/linksys-e8450-openwrt-installer/releases, get openwrt-mediatek-mt7622-linksys_e8450-ubi-initramfs-recovery-installer.itb

- Also download optimized firmware openwrt-mediatek-mt7622-linksys_e8450-ubi-squashfs-sysupgrade.itb

- Skills and rights to administer your workstation to give its Ethernet port a fixed IPv4-address from net 192.168.1.0/24

- Any other IPv4 address on that net will do, I used 192.168.1.10

- No DNS nor gateway will be needed for this temporary setup
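If you are unsure whether the fixed address you picked is suitable, the check can be sketched with Python's standard ipaddress module. This assumes the access point answers at 192.168.1.1 on a /24 net, as in this walkthrough; the function name is made up for illustration.

```python
import ipaddress

# The temporary setup network and the access point's own address
LAN = ipaddress.ip_network("192.168.1.0/24")
ROUTER = ipaddress.ip_address("192.168.1.1")


def usable_workstation_address(candidate: str) -> bool:
    """True if candidate can be used as the workstation's fixed IPv4-address."""
    addr = ipaddress.ip_address(candidate)
    reserved = {ROUTER, LAN.network_address, LAN.broadcast_address}
    # Must be inside the same net, but not collide with the router
    # nor be the network or broadcast address
    return addr in LAN and addr not in reserved
```

For example, usable_workstation_address("192.168.1.10") is true, while "192.168.1.1" (the access point itself) and "192.168.2.10" (wrong net) are rejected.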

Make sure not to connect the WAN / Internet into anything. The Big Net is scary, so don't rush into that yet. You can do that later when all the installing and setting up is done.

Mandatory caution:

If you just want to try OpenWrt and still plan to go back to the vendor firmware, use the non-UBI version of the firmware which can be flashed using the vendor's web interface.

Process described here is the UBI-version which does not allow falling back to vendor firmware.

Step 1: Un-box and replace Belkin firmware

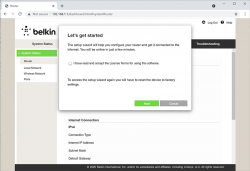

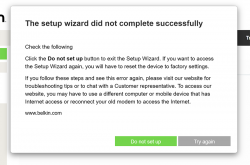

After plugging the Access Point to a wall socket, flicking the I/O-switch on, attaching an Ethernet cable to one of the LAN-switch ports and other end directly to a laptop, going to http://192.168.1.1 with your browser will display you something like this:

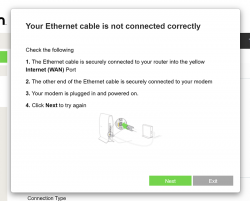

What you need to do is try to exit the out-of-box-experience setup wizard:

For the "Ethernet cable is not connected" you need to click Exit. When you think about the error message a bit harder, if you get the message, your Ethernet IS connected. Ok, ok. It is for the WAN Ethernet, not LAN.

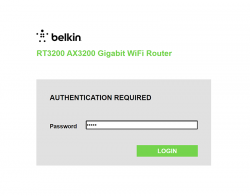

Notice how setup "did not complete successfully". That is fully intentional. Click "Do not set up". Doing that will land you on a login:

This is your unconfigured admin / admin -scenario. Log into your Linksys ... erhm. Belkin.

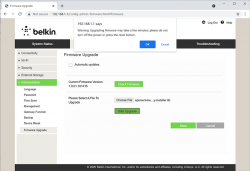

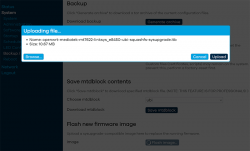

Select Configuration / Administration / Firmware Upgrade. Choose File. Out of the two binaries you downloaded while preparing, go for the ubi-initramfs-recovery-installer.itb. That OpenWRT firmware file isn't from manufacturer, but the file is packaged in a way which makes it compatible to allow easy installation:

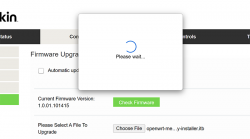

On "Start Upgrade" there will be a warning. Click "Ok" and wait patiently for a couple of minutes.

Step 2: Upgrade your OpenWRT recovery into a real OpenWRT

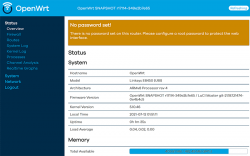

When all the firmware flashing is done, your factory firmware is gone:

There is no password. Just "Login". An OpenWRT welcome screen will be shown:

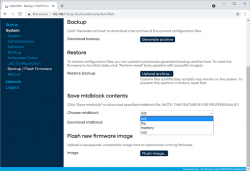

Now that you're running OpenWRT, your next task is to go from recovery to the real thing. I'm not sure if I'll ever want to go back, but as recommended by OpenWRT instructions, I did take backups of all four mtdblocks: bl2, fip, factory and ubi. This step is optional:

When you're ready, go for the firmware upgrade. This time select openwrt-mediatek-mt7622-linksys_e8450-ubi-squashfs-sysupgrade.itb:

To repeat the UBI / non-UBI firmware: This is the UBI-version. It is recommended as it has better optimization for layout and management of SPI flash, but it does not allow falling back to vendor firmware.

I unchecked the "Keep settings and retain the current configuration" to make sure I got a fresh start with OpenWRT. On "Continue", yet another round of waiting will occur:

Step 3: Setup your wireless AP

You have seen this exact screen before. Login (there is no password yet):

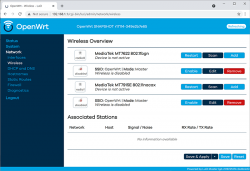

Second time, same screen, but this time there is a proper firmware in the AP. Go set the admin account properly to get rid of the "There is no password set on this router" -nag. Among all settings, go to wireless configuration to verify both 2.4 and 5 GHz radios are off:

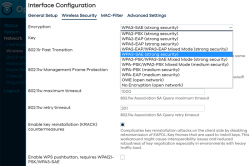

Go fix that. Select "Edit" for the 5 GHz radio and you'll be greeted by a regular wireless access point configuration dialog. It will include section about wireless security:

As I wanted to improve my WLAN security, I steered away from WPA2 and went for WPA3-SAE security. Supporting both at the same time is possible, but securitywise it isn't wise. If your system allows wireless clients to associate with a weaker solution, they will.

Also for security, check KRACK attack countermeasures. For more details on KRACK, see: https://www.krackattacks.com/

When you're done, you should see the radio enabled in a dialog like this:

Step 4: Done! Test.

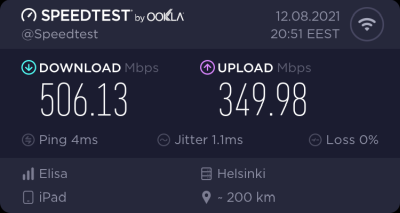

That's it! Now you're running a proper firmware on your precious Wi-Fi 6 AP. But how fast is it?

As I said, I don't have many Wi-Fi 6 clients to test with. On my 1 gig fiber, iPad seems to be pretty fast. Also my Android phone speed is ... well ... acceptable.

For that speed test I didn't even go for the "one foot distance" which manufacturers love to do. As nobody uses their mobile devices right next to their AP, I tested this on a real life -scenario where both AP and I were located the way I would use Internet in my living room.

Final words

After a three-year wait Wi-Fi 6 is here! Improved security, improved speed, improved everything!

Wi-Fi 6 - Part 1 of 2: Brief primer on wireless LAN

Friday, August 13. 2021

Wi-Fi. Wireless LAN / WLAN. Nobody wants to use their computing appliance with cords. Yeah, you need to charge them regularly (with a cord or wireless charger). Accessing The Internet, which we all love, is less and less done on wires. The technologies for going wire-less are either mobile data (UMTS / LTE / 5G) or Wi-Fi. Funny how 20 years ago there was no real option, but thanks to advances in technology we're at the point where all you need in life is a working Wi-Fi connection.

Wi-Fi Symbols

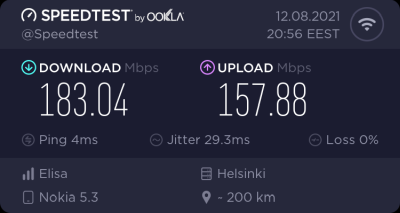

Back in 2018 Wi-Fi Alliance® came up with this new naming scheme and baptized their latest (sixth) generation as Wi-Fi 6. At the same time, they retroactively baptized their previous technologies as 5, 4, and so on (3rd gen. or older isn't really used anymore). On their website https://www.wi-fi.org/discover-wi-fi they depict Wi-Fi generations as follows:

Most typically, you're running on Wi-Fi 5. That's with an 802.11ac transmitter. If you never left the 2.4 GHz band there is a likelihood you're still stuck on Wi-Fi 4 with a ~10 years old access point. There is a very low chance you're still on Wi-Fi 3, that hardware is nearly 20 years old. Not many consumer-grade electronics last that long.

A practical example of how an operating system might use the Wi-Fi symbols comes from Android. This is what my phone used to look like until some random product owner at Google decided that those symbols are too confusing and dropped them in an OS upgrade. Now my Nokia 5.3 won't display the numbers anymore:

Android / Nokia devs: Please, put those numbers back!

Apple devs: Please, put Wi-Fi generation numbers into wireless networks.

Wi-Fi 5+ Radio Bandwidth

Anyway, the 2.4 GHz band is pretty much dead. Don't miss the fact there are no advances happening on the lower Wi-Fi -band. All the new stuff like Wi-Fi 6 is only on the 5 GHz band. This will only affect people trying to use an old phone or laptop and realizing it won't connect.

Reason why 2.4 GHz has been abandoned is obvious: your next door neighbour's microwave oven, nearby baby monitors, all Bluetooth stuff and the guy parking his car blipping the keyfob to lock the doors on the street are using that exact same band. Ok ok, a microwave oven shouldn't emit any signal outside, but still the fact remains, it uses the same band. As an example of 2.4 GHz band traffic, I've personally been in an apartment building with 50+ wireless networks; when counting also nearby buildings, 100+ networks were visible on a Wi-Fi search with an iPhone. If using a proper antenna, a search would yield 200-300 networks. All that within a 100-200 meter radius. Yes, that's crowded.

That much traffic on a narrow band results in nobody getting a proper Internet connection. Unless.... you're on the 5 GHz band which can take the hit, and won't have baby monitors nor microwave ovens.

So, for Wi-Fi 5/6: bye bye 2.4, 5 GHz it is.

Wi-Fi 5 and 6 Speeds

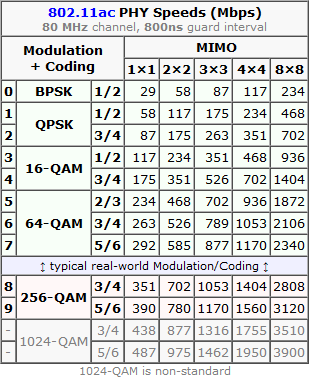

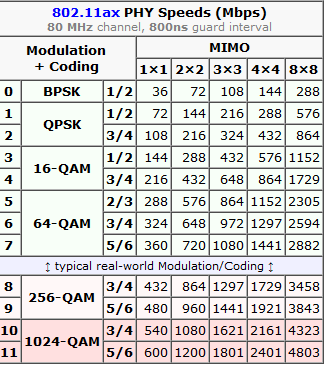

There is an excellent article at Duckware titled Wi-Fi 4/5/6/6E (802.11 n/ac/ax). I'm borrowing two tables from it:

These tables depict the theoretical maximum speeds available with various multiple-input/multiple-output (MIMO) configurations, aka. simultaneous radios. What a "radio" means in this context is the number of radios/antennas used by the access point and your client. Wi-Fi 4 is intentionally not in this comparison. It was the first generation to be able to utilize MIMO, but it lacked the modern modulation, had fewer subcarriers and used a larger guard interval. Maximum transmission speed for 4-radio 5 GHz Wi-Fi 4 was 600 Mbit/s (1000 for non-standard), much less for 2.4 GHz. By looking at the tables, Wi-Fi 6 can reach that using a single radio, Wi-Fi 5 with two. So, not really a fair comparison.
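For the curious, those theoretical maximums can be roughly reproduced with simple arithmetic: data subcarriers times bits per subcarrier times coding rate, divided by symbol duration, multiplied by the number of radios. The sketch below is my own back-of-the-envelope version, not taken from the Duckware article. The parameter values are the commonly quoted best-case ones for each generation (40 MHz channel for Wi-Fi 4, 80 MHz for Wi-Fi 5 and 6, shortest guard interval) and are assumptions as far as this post goes.

```python
def phy_rate_mbps(data_subcarriers, bits_per_subcarrier, coding_rate,
                  symbol_time_us, streams=1):
    """Theoretical PHY rate in Mbit/s (bits per microsecond)."""
    bits_per_symbol = data_subcarriers * bits_per_subcarrier * coding_rate
    return bits_per_symbol * streams / symbol_time_us


# Assumed best-case parameters per generation:
# Wi-Fi 4: 40 MHz, 64-QAM (6 bits), rate 5/6, 3.6 us symbol incl. short guard
WIFI4 = dict(data_subcarriers=108, bits_per_subcarrier=6,
             coding_rate=5 / 6, symbol_time_us=3.6)
# Wi-Fi 5: 80 MHz, 256-QAM (8 bits), rate 5/6, 3.6 us symbol incl. short guard
WIFI5 = dict(data_subcarriers=234, bits_per_subcarrier=8,
             coding_rate=5 / 6, symbol_time_us=3.6)
# Wi-Fi 6: 80 MHz, 1024-QAM (10 bits), rate 5/6, 13.6 us symbol incl. guard
WIFI6 = dict(data_subcarriers=980, bits_per_subcarrier=10,
             coding_rate=5 / 6, symbol_time_us=13.6)

if __name__ == "__main__":
    print("Wi-Fi 4, 4 radios:", round(phy_rate_mbps(streams=4, **WIFI4)))
    print("Wi-Fi 5, 2 radios:", round(phy_rate_mbps(streams=2, **WIFI5)))
    print("Wi-Fi 6, 1 radio: ", round(phy_rate_mbps(streams=1, **WIFI6)))
```

With these assumptions a single Wi-Fi 6 radio lands at roughly the same 600 Mbit/s that Wi-Fi 4 needed four radios for, while Wi-Fi 5 needs two radios to pass that mark.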

How many simultaneous radios you are currently using depends. Your current Wi-Fi -connection may be using 1, 2, 3 or 4 radios/antennas, but it depends on how many exist in your access point and phone/laptop. The more expensive hardware you have, the more radios are used.

Advanced topic: If you really really want to study why Wi-Fi 5 and Wi-Fi 6 speeds differ, there is a really good explanation on how OFDM and OFDMA modulations differ. Most people wouldn't care, but I majored in that stuff back-in-the-university-days.

Wi-Fi 5+ Dynamic Rate Selection

Besides hardware/radios/modulation Wi-Fi 5 introduced CWAP or Dynamic Rate Selection. Read more about that in this article. To state the obvious, also Wi-Fi 6 (and upcoming 7) will be using this.

Dynamic rate selection improves total bandwidth utilization in the access point as clients need to declare the amount needed. If you're leeching warez via Wi-Fi, your client-radio will announce to the access point: "Hey AP! This guy will be leeching warez, gimme a lot of bandwidth." Then the access point will allocate you a bigger slice of the pie. When your leeching is done, your radio will announce: "I'm done downloading, won't be needing much bandwidth anymore." Then somebody else at the same access point can get much more. This type of throttling/negotiation vastly improves the actual bandwidth usage when multiple clients are associated with the same wireless network. Please note: "a client" is any Wi-Fi -connected device including your phone, laptop, fridge and bot-vacuum.

Practical example from Windows 10:

On an idle computer, running netsh wlan show interfaces resulted:

There is 1 interface on the system:

Name : WiFi

Description : Intel(R) Dual Band Wireless-AC 8265

State : connected

Network type : Infrastructure

Radio type : 802.11ac

Authentication : WPA2-Personal

Cipher : CCMP

Connection mode : Profile

Channel : 60

Receive rate (Mbps) : 1.5

Transmit rate (Mbps) : 1.5

Signal : 92%

Then while downloading couple gigabytes of Apple iOS upgrade:

There is 1 interface on the system:

Name : WiFi

Description : Intel(R) Dual Band Wireless-AC 8265

State : connected

Network type : Infrastructure

Radio type : 802.11ac

Authentication : WPA2-Personal

Cipher : CCMP

Connection mode : Profile

Channel : 60

Receive rate (Mbps) : 400

Transmit rate (Mbps) : 400

Signal : 94%

Notes:

Using Wi-Fi 5, radio type is 802.11ac. This article is about Wi-Fi 6!

Receive / transmit rate varies from 1.5 Mbit/s to 400 Mbit/s depending on the need.
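If you want to follow the rates over time instead of eyeballing them, the output above is easy to pick apart. Below is a minimal sketch which parses text in the "key : value" layout shown above; the function names are mine and the field names are the English ones from my Windows 10, a localized Windows will likely print them differently.

```python
# Shortened sample in the same layout as the listing above
SAMPLE = """There is 1 interface on the system:
    Name                   : WiFi
    Radio type             : 802.11ac
    Channel                : 60
    Receive rate (Mbps)    : 400
    Transmit rate (Mbps)   : 400
    Signal                 : 94%
"""


def parse_netsh_interfaces(output: str) -> dict:
    """Collect 'key : value' lines of netsh output into a dictionary."""
    fields = {}
    for line in output.splitlines():
        key, separator, value = line.partition(" : ")
        if separator:
            # Lines without ' : ' (like the heading) are skipped
            fields[key.strip()] = value.strip()
    return fields


def link_rates(fields: dict) -> tuple:
    """Return (receive, transmit) rates in Mbit/s."""
    return (float(fields["Receive rate (Mbps)"]),
            float(fields["Transmit rate (Mbps)"]))
```

On a real Windows machine the text to parse could be obtained with subprocess.run(["netsh", "wlan", "show", "interfaces"], capture_output=True, text=True).stdout and the rates polled in a loop while a download is running.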

How dynamic rate allocation can be determined in Linux or macOS, I have no idea. If you do, please, drop a comment.

Wi-Fi 6 Security

When looking at Wi-Fi security today, WPA/WPA2 is broken. WPA2 was introduced in 2004 with Pre-Shared Key (PSK). Later in 2010 WPA Enterprise with Extensible Authentication Protocol (EAP) was introduced and it is still considered secure. For a home user like you and me, EAP is very difficult to set up and maintain. Hint: the word "enterprise" says it all. As bottom line, nobody is running it at home, enterprises are running it at the office.

Around 2017/2018 a number of cracks were introduced to erode the security of WPA2 PSK, making it effectively crackable. Not completely insecure, but with some effort insecure. One example out of many: Capturing WPA/WPA2 Handshake [MIC/Hash Cracking Process]

To fix this insecurity, after 14 years of carefully designing a new Wi-Fi security model, Wi-Fi Alliance introduced WPA3. The uncrackable version. EAP is still there in WPA3. Insecure PSK has been obsoleted and replaced by Simultaneous Authentication of Equals (SAE) which is claimed to be cracking resistant even for poor passwords.

WPA3 is not bound to the radio technology used, but given consumer electronics manufacturers, they're not going to add a completely new security feature to old hardware. So, practically we're speaking Wi-Fi 5 or newer. If you're at Wi-Fi 6, you'll definitely get WPA3. Update: Any Wi-Fi certified hardware manufactured after 1st July 2020 will have mandatory WPA3, before that it was optional.

Practical Wi-Fi 6

Enough theory. Now we know 802.11ax is secure and pretty fast. Now we need to see how fast (security is really difficult to measure). There is one practical obstacle, though, Wi-Fi 6 hardware at the time of writing this is well ... uncommon. Such access points and clients exist and are even generally available. Me being me, I wouldn't buy a random access point, oh no! My AP will run DD-WRT or OpenWrt. That's the hurdle.

More about that in my next post.

Decoding EU Digital COVID Certificate

Friday, August 6. 2021

If you live in EU, you most definitely have heard of COVID Passport.

Practically speaking, it is a PDF-file A4-sized when printed and can be folded into A6-pocket size. In Finland a sample would look like this:

What's eye-catching is the QR-code in the top right corner. As I'm into all kinds of nerdy stuff, I took a peek at what's in it.

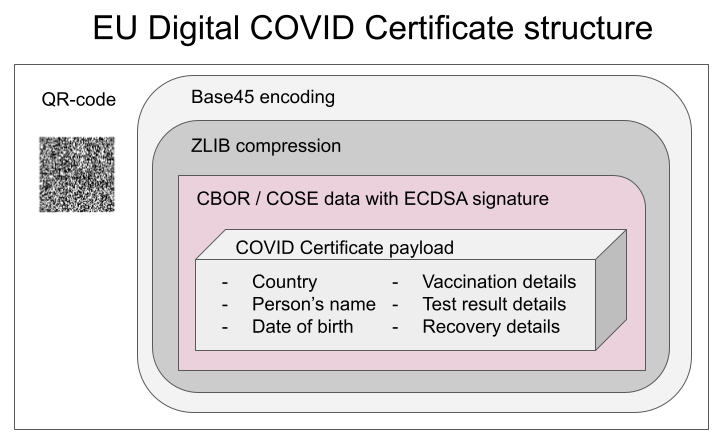

After reading some specs and brochures (like https://www.kanta.fi/en/covid-19-certificate) I started to tear this apart and deduced the following:

- An A4 or the QR-code in it can be easily copied / duplicated

- Payload can be easily forged

- There is a claim: "The certificate has a code for verifying the authenticity"

My only question was: How is this sane! Why do they think this mechanism they designed makes sense?

QR-code

Data in QR-code is wrapped multiple times:

This CBOR Object Signing and Encryption (COSE) described in RFC 8152 was a new one for me.

Payload does contain very little of your personal information, but enough to be compared with your ID-card or passport. Also there is your vaccination, test and possible recovery from COVID statuses. Data structure can contain 1, 2 or 3 of those depending if you have been vaccinated or tested, or if you have recovered from the illness.
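To illustrate the outer layers of that wrapping, here is a minimal sketch using only Python's standard library. It is not the code from the repo. It assumes the text read from the QR-code starts with the prefix HC1:, continues as base45-encoded data, which in turn is zlib-compressed COSE. The function names are made up for this example, and the base45 decoder is a straightforward take on the published encoding (three characters carry two bytes, a trailing pair carries one byte).

```python
import zlib

BASE45_ALPHABET = "0123456789ABCDEFGHIJKLMNOPQRSTUVWXYZ $%*+-./:"


def base45_decode(text: str) -> bytes:
    """Decode base45 text into bytes."""
    # str.index() raises ValueError on characters outside the alphabet
    values = [BASE45_ALPHABET.index(ch) for ch in text]
    out = bytearray()
    for i in range(0, len(values), 3):
        chunk = values[i:i + 3]
        if len(chunk) == 3:
            number = chunk[0] + chunk[1] * 45 + chunk[2] * 45 * 45
            if number > 0xFFFF:
                raise ValueError("invalid base45 triplet")
            out += number.to_bytes(2, "big")
        elif len(chunk) == 2:
            number = chunk[0] + chunk[1] * 45
            if number > 0xFF:
                raise ValueError("invalid base45 pair")
            out.append(number)
        else:
            raise ValueError("invalid base45 length")
    return bytes(out)


def unwrap_qr_text(qr_text: str) -> bytes:
    """Strip the outer layers of a certificate QR-code text.

    Returns the raw COSE bytes. Decoding the CBOR inside and verifying
    the signature is left out, as that needs a CBOR library.
    """
    prefix = "HC1:"
    if not qr_text.startswith(prefix):
        raise ValueError("not a health certificate QR-code text")
    compressed = base45_decode(qr_text[len(prefix):])
    return zlib.decompress(compressed)
```

What comes out of unwrap_qr_text() is still binary CBOR. To get from there to the readable payload and to the signature check, a CBOR library is needed on top of this.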

Python code

It's always about the details. So, I forked an existing git-repo and did some of my own tinkering. Results are at: https://github.com/HQJaTu/vacdec/tree/certificate-fetch

Original code by Mr. Hanno Böck could read QR-code and do some un-wrapping for it to reveal the payload. As I'm always interested in X.509, digital signatures and cryptography, I improved his code by incorporating the digital signature verification into it.

As CBOR/COSE was new for me, it took a while to get the thing working. In reality the most difficult part was to get my hands on the national public certificates to do some ECDSA signature verifying. It's really bizarre how that material is not made easily available.

Links:

- Swagger of DGC-gateway: https://eu-digital-green-certificates.github.io/dgc-gateway/

- This is just for display-purposes, this demo isn't connected to any of the national back-ends

- Sample data for testing verification: https://github.com/eu-digital-green-certificates/dgc-testdata

- All participating nations included

- QR-codes and raw-text ready-to-be-base45-decoded

- Payload JSON_schema: https://github.com/ehn-dcc-development/ehn-dcc-schema/

- Just to be clear, the payload is not JSON

- However, CBOR is effectively binary JSON

- List of all production signature certificates: https://dgcg.covidbevis.se/tp/

- Austrian back-end trust-list in JSON/CBOR: https://greencheck.gv.at/api/masterdata

- Swedish back-end trust-list in CBOR: https://dgcg.covidbevis.se/tp/trust-list

- The idea is for all national back-ends to contain everybody's signing certificate

Wallet

Mr. Philipp Trenz from Germany wrote a website to insert your QR-code into your Apple Wallet. Source is at https://github.com/philipptrenz/covidpass and the actual thing is at https://covidpass.eu/

Beautiful! Works perfectly.

Especially in Finland the government is having a vacation. After that they'll think about starting to make plans what to do next. Now thanks to Mr. Trenz every iOS user can have the COVID Certificate in their phones without government involvement.

Finally

Answers:

- Yes, duplication is possible, but not feasible in volume. You can get your hands on somebody else's certificate and can present a proof of vaccination, but verification will display the original name, not yours.

- Yes, there is even source code for creating the QR-code, so it's very easy to forge.

- Yes, the payload has a very strong elliptic curve signature in it. Any forged payloads won't verify.

Ultimately I was surprised how well designed the entire stack is. It's always nice to see my tax-money put into good use. I have nothing negative to say about the architecture or technologies used.

Bonus:

At very end of my project, I bumped into Mr. Mathias Panzenböck's code https://github.com/panzi/verify-ehc/. He has an excellent implementation of signature handling, much better than mine. Go check that out too.

DynDNS updates to your Cloud DNS - Updated

Monday, July 12. 2021

There is a tool I've been running for a few years now. In 2018 I published it on GitHub and wrote a blog post about it. Later, I extended it to support Azure DNS.

As this code is something I do run in my production system(s), I do keep it maintained and working. Latest changes include:

- Proper logging via logging-module

- Proper setup via pip install .

- Library done as proper Python-package

- Python 3.9 support

- Rackspace Cloud DNS library via Pyrax maintained:

- Supporting Python 3.7+

- Keyword argument async renamed into async_call

- Improved documentation a lot!

- Setup docs improved

- systemd service docs improved

This long-running project of mine starts to feel like a real thing. I'm planning to publish it into PyPI later.

Enjoy!